Editor in Chief of Constellation Insights

Constellation Research

About Larry Dignan:

Dignan was most recently Celonis Media’s Editor-in-Chief, where he sat at the intersection of media and marketing. He is the former Editor-in-Chief of ZDNet and has covered the technology industry and transformation trends for more than two decades, publishing articles in CNET, Knowledge@Wharton, Wall Street Week, Interactive Week, The New York Times, and Financial Planning.

He is also an Adjunct Professor at Temple University and a member of the Advisory Board for The Fox Business School's Institute of Business and Information Technology.


Atlassian launched Atlassian Rovo, a generative AI assistant built on Atlassian Intelligence, which will operate across the company's teamwork platform. In addition, Atlassian said it was combining Jira Software and Jira Work Management into one project management tool.

The company announced its product updates at Atlassian Team '24 in Las Vegas.

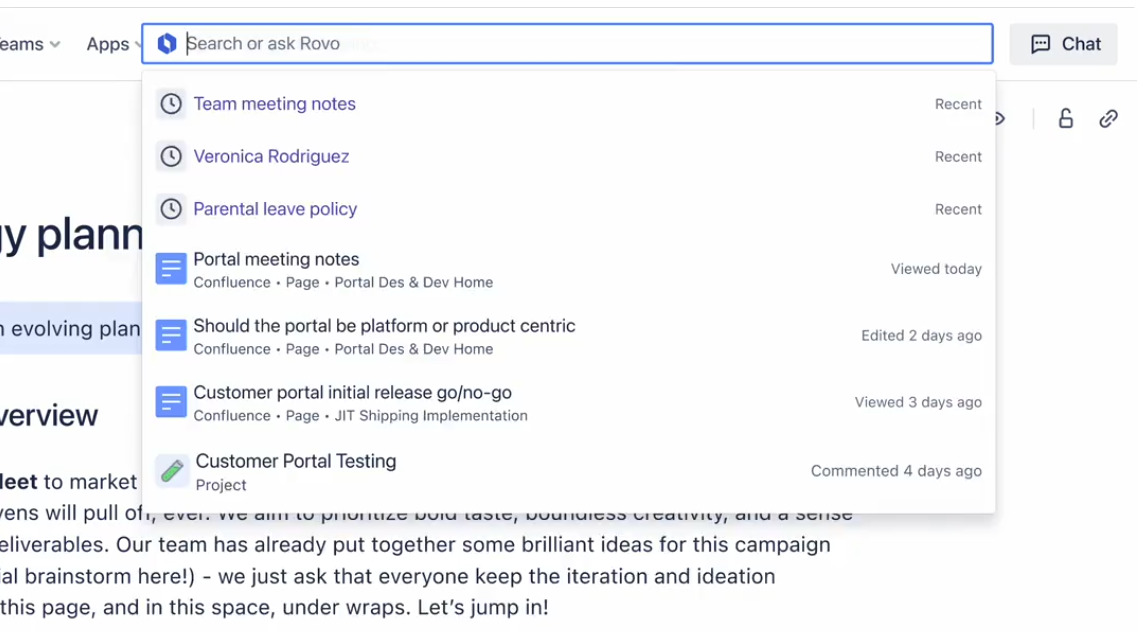

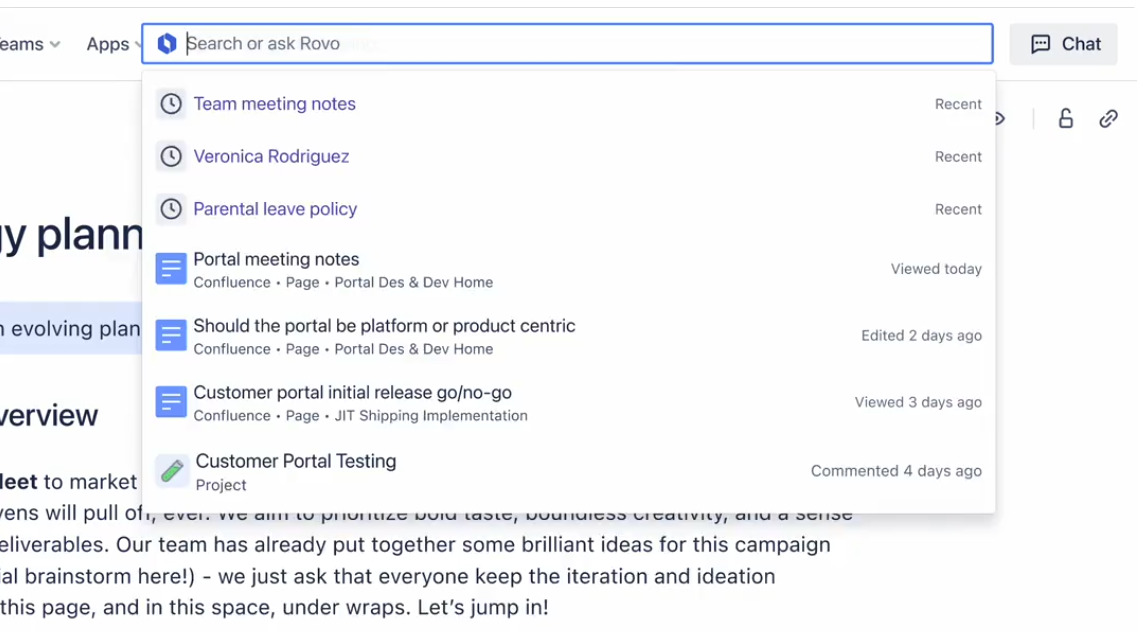

Rovo is designed to find, learn and act on information stored across an enterprise: it surfaces data, interprets it, delivers insights and uses specialized agents to handle tasks.

With the move, Atlassian Rovo will leverage one data model, dubbed the teamwork graph, which will pull data from the company's applications and other SaaS apps. The goal is to deliver one view of goals, knowledge, actions, projects and execution.

Core components of Atlassian Rovo include:

- Rovo Search, which will comb through content wherever it is stored (Google Drive, Microsoft SharePoint, GitHub, Slack, etc.) and query across applications. Rovo Search will identify team players, projects and information needed to make decisions. According to Atlassian, Rovo Search will connect niche and custom apps via API and offer enterprise-grade data governance.

- Insights, which are delivered via knowledge cards that offer context about projects, goals and teammates.

- Rovo Chat, a conversational bot that is built on company data and learns as it goes. Rovo Chat will surface information and offer follow-up questions.

On the backend, Atlassian Intelligence will feature generative AI in editor tools across the company's portfolio, AI-powered summaries, Loom AI workflows, virtual help center agents, AIOps and natural language AI automation rules.

Constellation Research's take on Atlassian's Rovo

Constellation Research analyst Andy Thurai received a full demo and deep dive of Atlassian's AI efforts. Here's Thurai's assessment:

"Rovo (a name designed to satisfy international customers) is primarily an enterprise knowledge and search tool. Powered by Atlassian Intelligence, you can search across Jira and Confluence for information within the platform. Currently, Rovo is limited to Atlassian and some third-party products, but you'll eventually be able to search Atlassian's marketplace. Rovo provides the contextual information that was hard to reach on the Atlassian platform before.

Atlassian uses an OpenAI private instance on the backend but has a specific agreement under which OpenAI can't retain the data used for prompting. OpenAI also can't use the data to train an LLM. The chatbots are currently limited to structured data, with no specific plan or timeline for unstructured data. In a demo, the chatbot had contextual awareness from Confluence and Jira and a focus on workforce productivity.

I also liked AI summarization in the Atlassian platform. When product teams are rushed for time, employees can ask the agents to summarize the critical points without reading a bunch of lengthy documents. Rovo can create actionable items based on those documents. One customer was able to take a backlog risk analysis from two months to 20 minutes with the help of Rovo.

Rovo comes with 20+ default agents but can be extended by customers with no-code options. Since its release a few months ago, 500 internal agents have been created.

Overall, Atlassian has quietly done quite a bit of work on the AI front. Many of the new features are in beta mode, so be sure to test after the full release. Atlassian focused on the system of work and developed a bevy of capabilities. Given that competition is very limited for the knowledge worker category, Atlassian can gain traction. Atlassian's sales motion is geared toward mid-sized enterprises, but the company is trying to move up.

Going forward, Atlassian may have to address pricing since all its go-to-market and pricing motions are geared toward large teams collaborating in an agile production cycle. With generative AI, team sizes are going to shrink. Atlassian needs to move the model away from seat-based licensing to a value-based AI-driven pricing model."

It's just Jira now

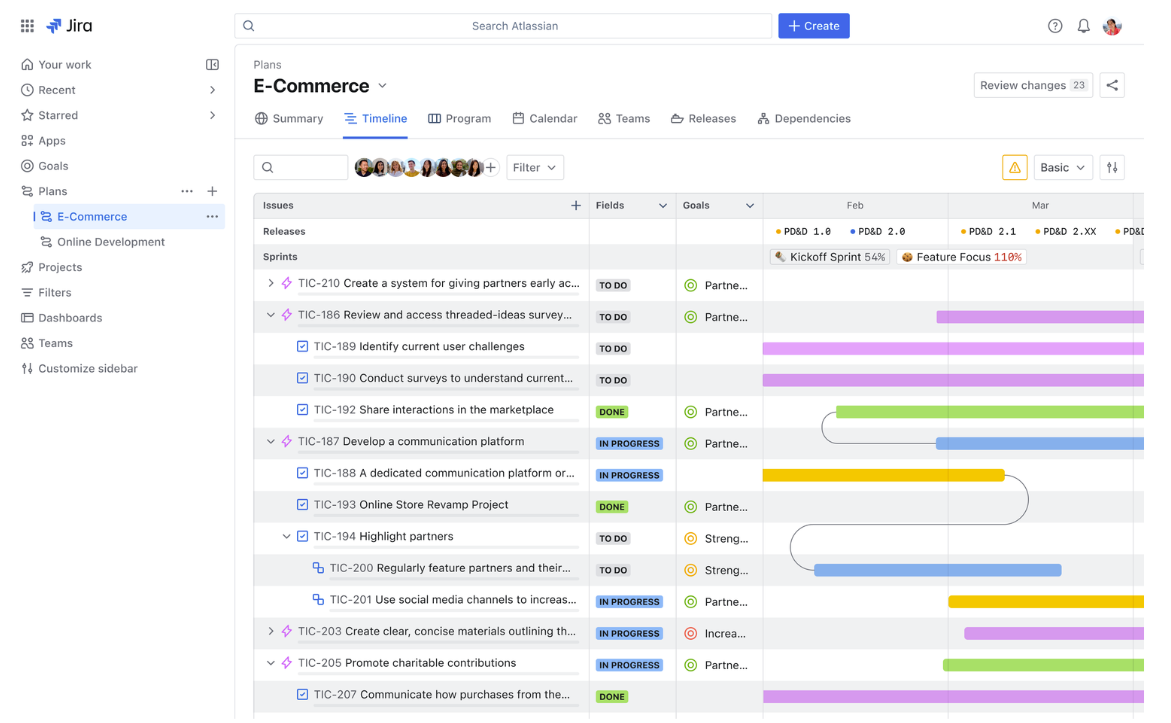

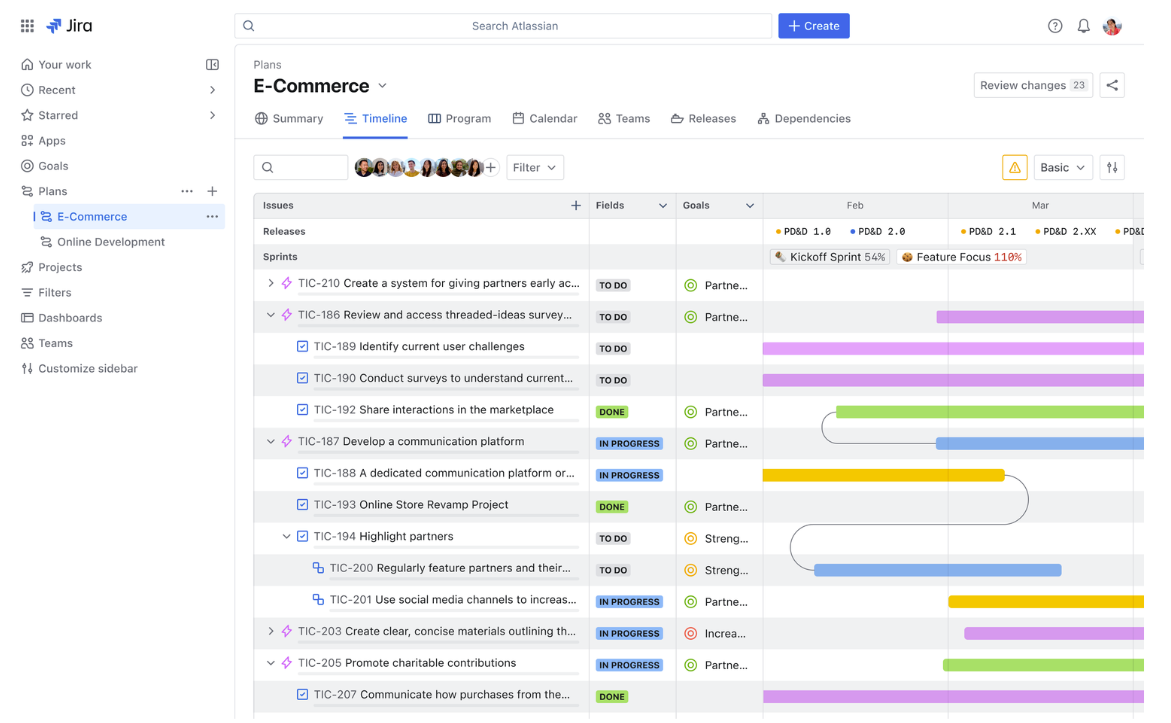

In addition to the Rovo news, Atlassian said it is taking the best of Jira Work Management and Jira Software to create one project management suite called Jira.

Jira will include goal tracking, shortcuts via AI, visualization and integrations with Confluence and Loom for knowledge sharing.

Atlassian said enterprises will be able to combine SKUs and receive one project management software invoice.

Atlassian added that Goals in Jira will launch in the next few months to visualize tasks and track progress toward goals. Atlassian AI will break work into digestible chunks, and Jira will feature list views, calendar integration and collaboration tools.

CEO transition and earnings

Atlassian's conference kicked off a week after the company reported third-quarter earnings and said it would move from its co-CEO model to a single CEO. Co-founder Scott Farquhar will step down as co-CEO effective Aug. 31, and Mike Cannon-Brookes will lead the company as CEO.

Farquhar is leaving to spend more time with his young family and philanthropy while remaining an active board member.

The company in the third quarter reported revenue of $1.2 billion, up 30% from a year ago with net income of $12.8 million, or 5 cents a share. Atlassian said it now gets most of its revenue from its cloud products and has 300,000 customers on its cloud. Non-GAAP earnings for the third quarter were 89 cents a share.

For the fourth quarter, Atlassian projected revenue between $1.12 billion and $1.13 billion with cloud revenue growth of 32%.

Cannon-Brookes said "we're incredibly bullish about AI" and that the scale across the Atlassian platform is "one of the areas that I always think is underestimated in terms of durable growth and in terms of long-term advantage."

CFO Joe Binz said Atlassian is navigating a mixed demand picture. On the third quarter earnings conference call, Binz said:

"Enterprise was healthy across both cloud and data center and that drove the record billings, strong growth in annual multiyear agreements. Strong migration and good momentum in sales of premium and enterprise editions of our products will roll through our revenue results.

The macro impact on SMB, on the other hand, continued to be challenging, although also in-line with expectations. And that macro headwind in SMB lands primarily in cloud, given SMB makes up a significant part of that business."
