Editor in Chief of Constellation Insights

Constellation Research

About Larry Dignan:

Dignan was most recently Celonis Media’s Editor-in-Chief, where he sat at the intersection of media and marketing. He is the former Editor-in-Chief of ZDNet and has covered the technology industry and transformation trends for more than two decades, publishing articles in CNET, Knowledge@Wharton, Wall Street Week, Interactive Week, The New York Times, and Financial Planning.

He is also an Adjunct Professor at Temple University and a member of the Advisory Board for the Fox School of Business's Institute of Business and Information Technology.

Constellation Insights does the following:

Cover the buy side and sell side of enterprise tech with news, analysis, profiles, interviews, and event coverage of vendors, as well as Constellation Research's community and…


At WWDC, Apple CEO Tim Cook outlined the company's generative AI strategy. It revolves around Apple Intelligence, a personal intelligence system that combines on-device processing of large language models with a private cloud model, alongside a partnership with OpenAI.

Apple's tagline was that Apple Intelligence is "AI for the rest of us."

The stakes were high going into Apple's WWDC. Wall Street and the tech sector were closely watching how the company would approach generative AI, since Google, Microsoft and a bevy of other technology giants have been launching new features regularly. In the end, Apple's real mission with generative AI was to spur another iPhone upgrade cycle, and there was enough meat in Apple's strategy to give customers an excuse to upgrade.

Cook framed Apple's approach to generative models. He said:

"We've been using artificial intelligence and machine learning for years. Recent developments in generative intelligence and large language models offer powerful capabilities that provide the opportunity to take the experience of using Apple products to new heights. As we look to build on these new capabilities, we want to ensure that the outcome reflects the principles at the core of our products. It has to be powerful enough to help with the things that matter most to you. It has to be intuitive and easy to use. It has to be deeply integrated into your product experiences. Most importantly, it has to understand you and be grounded in your personal context, like your routine, your relationships, your communications and more."

Craig Federighi, SVP of Software Engineering, said the approach to Apple Intelligence is to go horizontal and systemwide.

"With iOS 18, iPadOS 18 and macOS Sequoia, we are embarking on a new journey to bring you intelligence that understands you. Apple Intelligence is the personal intelligence system that puts powerful generative models right at the core of your iPhone, iPad and Mac."

Apple Intelligence capabilities

Apple Intelligence will provide large language model (LLM) capabilities systemwide, in the background, for writing, prioritizing and tackling daily tasks. It will also provide image capabilities, with tools to personalize images based on your contacts.

Personal context was a key talking point for Federighi. "Understanding this kind of personal context is essential for delivering truly helpful intelligence, but it has to be done right. You should not have to hand over all the details of your life to be warehoused and analyzed in someone's AI cloud. With Apple Intelligence, powerful intelligence goes hand in hand with powerful privacy," said Federighi.

Apple also outlined the foundation models behind Apple Intelligence and shared comparisons showing them performing favorably against other LLMs when running on Apple hardware. In its developer State of the Union talk, Apple said that everything running on device or in Apple's cloud uses its own models.

Not surprisingly, Apple also went for the killer app of generative AI with Genmoji, which can create emojis on the fly based on whatever you cook up.

What Apple is really doing is creating an abstraction layer that preserves the experience and device integration while leveraging LLMs underneath. See: Foundation model debate: Choices, small vs. large, commoditization

Architecture is all about private cloud

Apple's plan is to process Apple Intelligence queries on device with a semantic index. Apple said much of the AI processing will be on device, but some will go to servers in a system called Apple Private Cloud Compute.

Each request will be analyzed to see whether it can be handled on device. If it can't, only the data required to fulfill the request will be sent to a server running on Apple silicon.
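To make the routing idea concrete, here is a minimal Python sketch. It is not Apple's implementation: Apple has not published how Apple Intelligence decides what runs on device, so the `Request` type, the numeric `complexity` score and the `ON_DEVICE_MAX_COMPLEXITY` threshold are hypothetical stand-ins. The sketch captures only the two ideas Apple described: try the device first, and when escalating, send only the data the task needs.

```python
from dataclasses import dataclass, field

# Hypothetical threshold; Apple has not published its actual routing criteria.
ON_DEVICE_MAX_COMPLEXITY = 5


@dataclass
class Request:
    task: str
    complexity: int                # hypothetical score of how demanding the task is
    required_fields: set[str]      # personal-context fields the task actually needs
    context: dict[str, str] = field(default_factory=dict)  # all personal context on device


def route(request: Request) -> tuple[str, dict[str, str]]:
    """Return where the request runs and the data that would leave the device."""
    if request.complexity <= ON_DEVICE_MAX_COMPLEXITY:
        # Handled locally: nothing is sent off the device.
        return "on_device", {}
    # Escalate to the server tier, sending only the fields the task requires.
    minimal = {k: v for k, v in request.context.items() if k in request.required_fields}
    return "private_cloud", minimal


req = Request(
    task="summarize thread",
    complexity=8,
    required_fields={"thread_text"},
    context={"thread_text": "...", "location": "home", "contacts": "..."},
)
print(route(req))  # ('private_cloud', {'thread_text': '...'})
```

The filtering step is the point of the example: location and contacts stay on the device even though the request itself is escalated.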

Federighi explained:

"We have created Private Cloud Compute. Private Cloud Compute allows Apple Intelligence to flex and scale its computational capacity and draw on even larger server-based models for more complex requests, while protecting your privacy. These models run on servers we've specially created using Apple silicon. These Apple silicon servers offer the privacy and security of your iPhone from the silicon on, draw on the security properties of the Swift programming language and run software with transparency built in.

When you make a request, Apple Intelligence analyzes whether it can be processed on device. If it needs greater computational capacity, it can draw on Private Cloud Compute and send only the data that's relevant to your task to be processed on Apple silicon servers. Your data is never stored or made accessible to Apple. It's used exclusively to fulfill your request. And just like your iPhone, independent experts can inspect the code that runs on the servers to verify this privacy promise. In fact, Private Cloud Compute cryptographically ensures your iPhone, iPad and Mac will refuse to talk to a server unless its software has been publicly logged for inspection. This sets a brand-new standard for privacy in AI."
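The promise that devices will refuse to talk to a server unless its software has been publicly logged can be illustrated with a simplified Python sketch. It assumes the server reports a SHA-256 measurement of its software and that the client checks that measurement against a public, append-only log of released builds before sending anything. The `TransparencyLog` class and the `measurement` and `may_send` functions are hypothetical; Apple's actual design relies on hardware attestation and cryptographic log proofs that this sketch does not model.

```python
import hashlib


def measurement(software_image: bytes) -> str:
    """Hash a server software image into a measurement (SHA-256 hex digest)."""
    return hashlib.sha256(software_image).hexdigest()


class TransparencyLog:
    """Hypothetical append-only public log of released server software measurements."""

    def __init__(self) -> None:
        self._entries: list[str] = []

    def publish(self, software_image: bytes) -> None:
        # Entries are only ever appended, never edited or removed.
        self._entries.append(measurement(software_image))

    def contains(self, digest: str) -> bool:
        return digest in self._entries


def may_send(log: TransparencyLog, attested_digest: str) -> bool:
    """Allow a request only if the server's attested software appears in the public log."""
    return log.contains(attested_digest)


log = TransparencyLog()
log.publish(b"server-release-1")
print(may_send(log, measurement(b"server-release-1")))  # True
print(may_send(log, measurement(b"unpublished-build")))  # False
```

The design choice worth noting is that trust is decided on the client: a server running software that was never published simply never receives user data.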

Siri's brain transplant

Apple Intelligence will give Siri a new brain that leverages settings data and other device information. Siri will take actions on your behalf, both within apps and across them.

Siri will move through the system to find the right set of actions for a given intent across applications, informed by your personal context.

Apple said it will continue to roll out Siri features based on data it already holds. Siri has been configuring settings and asking questions for years, and that repository has essentially served as a personal context engine that can now be surfaced via Apple Intelligence.

The goal for Apple is to make Siri more intelligent, helpful and integrated with you.

Many of the features across Apple's native apps can be found elsewhere. Apple Intelligence essentially looked like a spin on Google's Gemini, OpenAI's ChatGPT or Microsoft's Copilot. The big differences are the architecture (Apple silicon on the device talking to Apple silicon in the data center) and a horizontal approach that resembles Amazon Q more than an app-specific assistant.

Federighi said Apple Intelligence will be free across its iPhone, iPad and Mac devices with the latest OS updates. ChatGPT will be free on Apple devices without an OpenAI account. There will be upgrade opportunities for OpenAI with new models on tap in the future.

Key points about the ChatGPT integration:

- ChatGPT will be available in Apple's systemwide tools and native apps.

- IP addresses sent to OpenAI are obscured and requests aren't stored.

- ChatGPT's data-use policies apply only to users who connect their accounts.

- Apple will use GPT-4o, which users can access for free without creating an account.

- Paid features will be available to OpenAI subscribers.

For developers, Apple said it will layer LLMs into various SDKs.

"This is the beginning of an exciting new chapter of personal intelligence, intelligence built for your most personal products. We are just getting started," said Federighi.

Apple's AI strategy came at the end of what was a series of incremental software updates across its collection of operating systems. Here's a look at what's being added to select Apple platforms.

Constellation Research's take

Constellation Research CEO Ray Wang said "the main differential Apple has is their philosophical view."

"Privacy at the core means Apple designs AI to be mindful. The AI must work for the user first not the network. It's a different way of looking at what AI can do," said Wang. "Apple is late but they have time to do it right."

In addition, Apple's 1 billion devices in the field make a great model training set.

Constellation Research analyst Andy Thurai said that Apple's AI strategy is different because of the privacy approach, custom processors, hybrid approach and multimodal features that will go mainstream. "Apple's doubling down on its core value of privacy, even in the AI age," said Thurai in a LinkedIn post. "This is a MAJOR differentiator from other vendors who often prioritize data collection over user protection."

Constellation Research's Holger Mueller said:

"Apple's new AI capabilities are not only a few years behind what Google users have available, but now also behind what Microsoft users can do. Creating a ‘private’ cloud in the public cloud is the price Apple has to pay to keep the ‘fig leaf’ on Cook’s ‘differential privacy’ going. The OpenAI deal may be the backdoor to bolster Apple Intelligence with a super big LLM for real-world awareness, which is a hint that Apple sees Google more and more as a competitor."

visionOS 2

Apple said Apple Vision Pro will roll out to more countries, with preorders opening June 13 in China, Japan and Singapore and June 28 in Australia, Canada, France, Germany and the UK. With the rollout, Apple is betting on more sales and distribution for the 2,000 apps designed specifically for Apple Vision Pro.

The company's spatial computing efforts include a heavy dose of entertainment and video updates in visionOS 2, but there were also a bevy of business updates.

Apple said utility apps such as AirLauncher, GlanceBar, Splitscreen, Screens 5 and Widgetsmith, along with easier pairing, will boost productivity in visionOS 2. Apple also rolled out new frameworks and APIs to build 3D apps, anchor apps to flat surfaces and enable industry-specific use cases.

iOS 18

iOS 18 updates included personalization, support for RCS so Android users won't be singled out and bullied for having green bubbles, and incremental features across native apps, notably Photos, Maps and Messages.

watchOS

Apple is adding training features to measure training load, recovery time and performance during individual workouts. These features have been in Garmin devices for years.

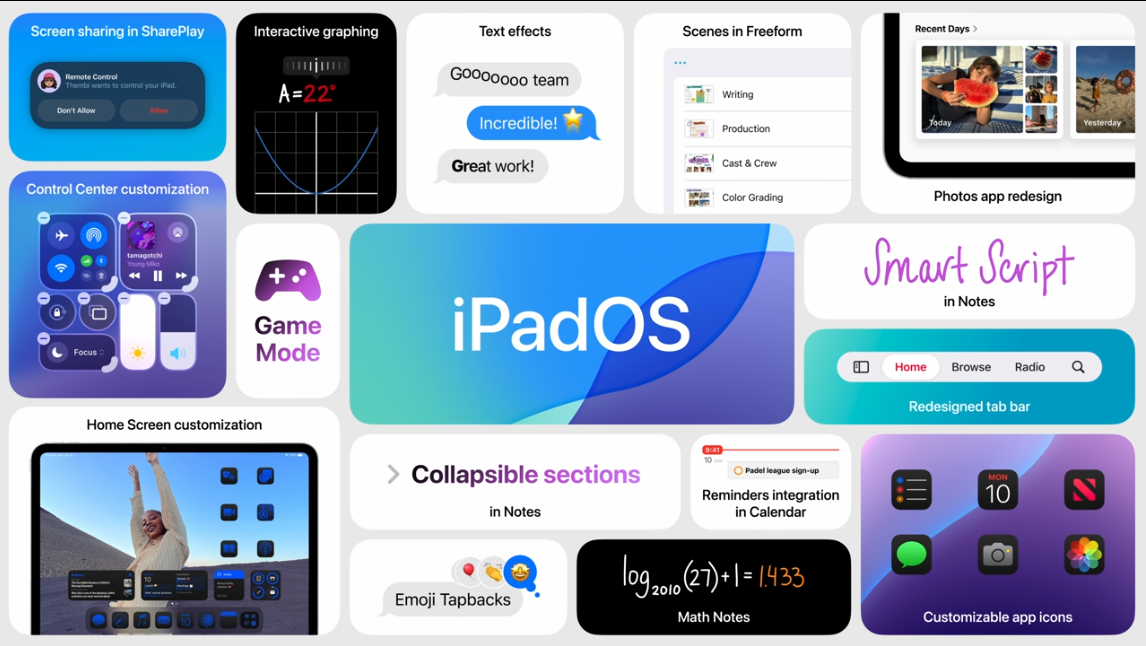

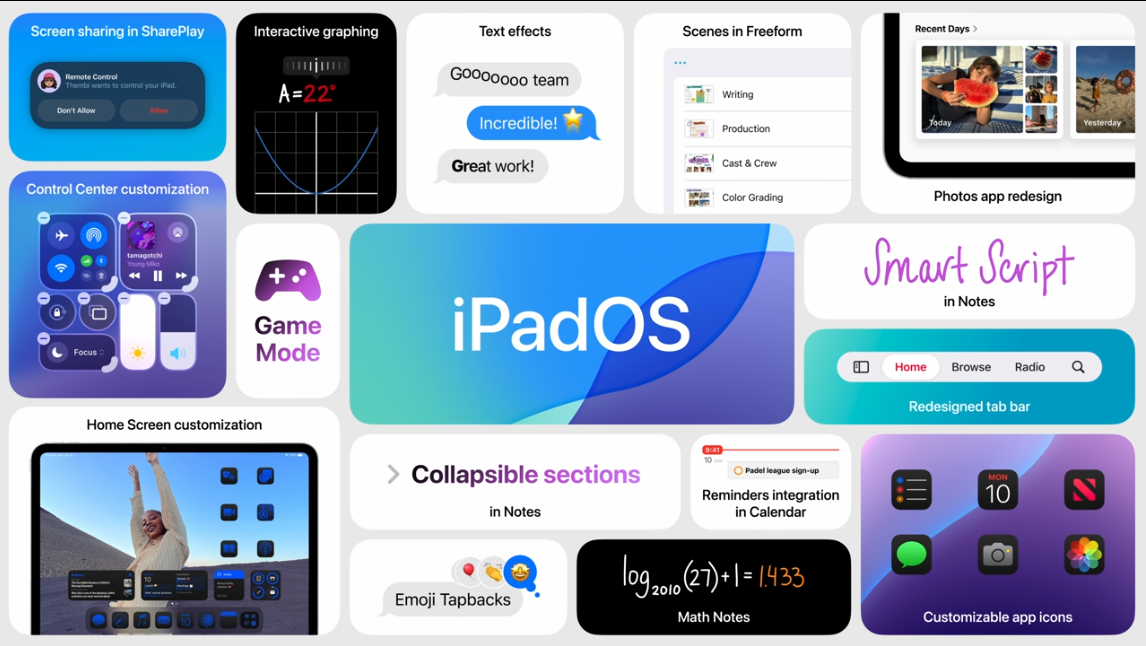

iPadOS

iPadOS will get a floating bar at the top of apps along with many of the customization features in iOS 18. Documents will be surfaced more easily by application. There were also a few Apple Pencil enhancements worth noting: the ability to add space for inserting words into handwriting was a nice touch.

macOS Sequoia

macOS gets many of the features found in iOS and iPadOS, as well as Continuity tools such as iPhone Mirroring that make the handoff between Apple devices more seamless. Keychain was also updated for better password management.
