Editor in Chief of Constellation Insights

Constellation Research

About Larry Dignan:

Dignan was most recently Celonis Media’s Editor-in-Chief, where he sat at the intersection of media and marketing. He is the former Editor-in-Chief of ZDNet and has covered the technology industry and transformation trends for more than two decades, publishing articles in CNET, Knowledge@Wharton, Wall Street Week, Interactive Week, The New York Times, and Financial Planning.

He is also an Adjunct Professor at Temple University and a member of the Advisory Board for The Fox Business School's Institute of Business and Information Technology.

Constellation Insights does the following:

Cover the buy side and sell side of enterprise tech with news, analysis, profiles, interviews, and event coverage of vendors, as well as Constellation Research's community and…


Enterprises that lead in artificial intelligence are evolving quickly and laying out plans for their next phases. The next phase of genAI deployments revolves around integrating AI into the business, optimizing processes, and building agility and scale. Watch these companies closely for a view of the road ahead.

When I think of leading AI companies, I'm usually looking at the buy side of the enterprise equation. These companies are deploying AI and integrating it into their businesses. For instance, Rocket spent years deploying its data platform and working with machine learning and AI models. When generative AI hit the enterprise, Rocket had the DNA to move quickly and use the technology to deliver better customer experiences.

Intuit was in a similar position and saw its bets on data science, and then AI, pay off. It has an Investor Day coming up that will feature a lot of strategy and AI. JPMorgan Chase is another company that has moved to the next phase of its genAI strategy: it is now integrating AI operations into its various business units.

JPMorgan Chase: Digital transformation, AI and data strategy sets up generative AI (download PDF) | JPMorgan Chase: Why we're the biggest tech spender in banking

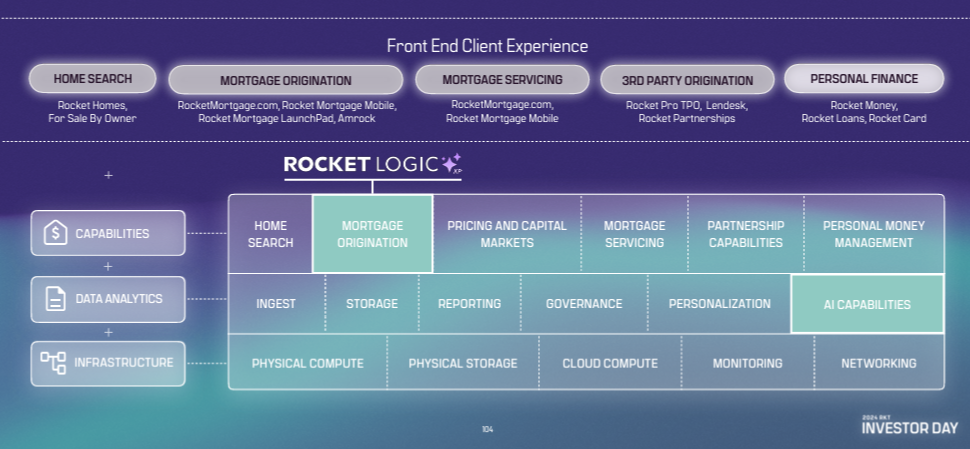

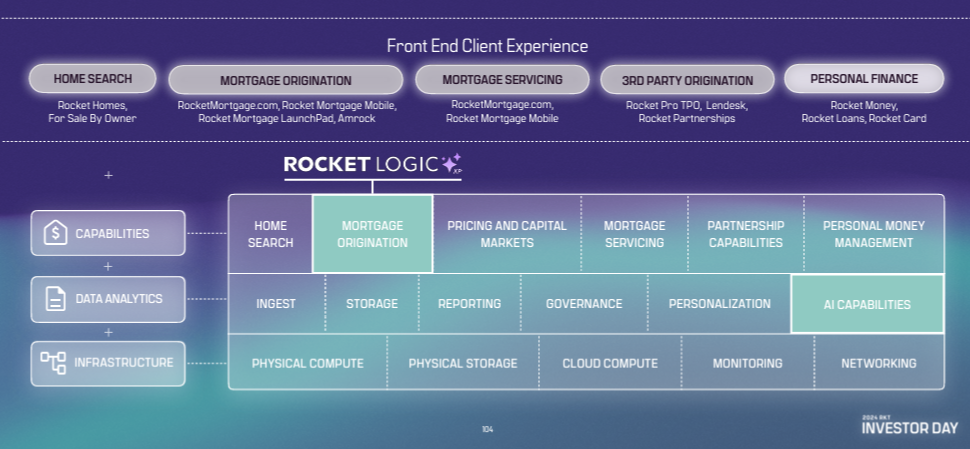

With that backdrop, it's prudent to think of customer stories and case studies as living documents. Consider Rocket. Constellation Insights documented Rocket's use of AI and strategy (PDF) in May. Since then, the company has named a new Chief Technology Officer and outlined its next steps in its AI progression. Perhaps the biggest takeaway from Rocket is that there are no overnight AI successes. Rocket has spent $500 million over the last five years on Rocket Logic, its proprietary loan origination system that uses AI to streamline income verification, document processing and underwriting.

Simply put, AI isn't merely lift and shift. The work is never done.

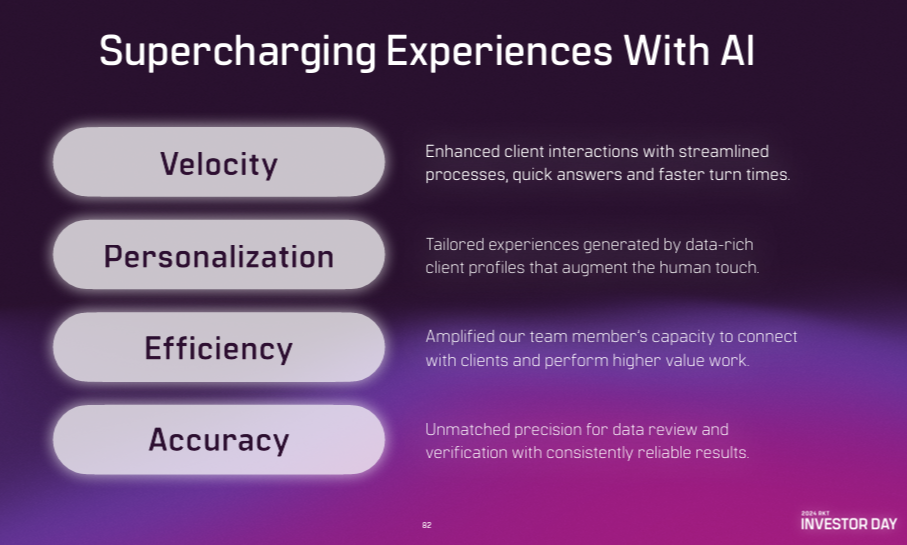

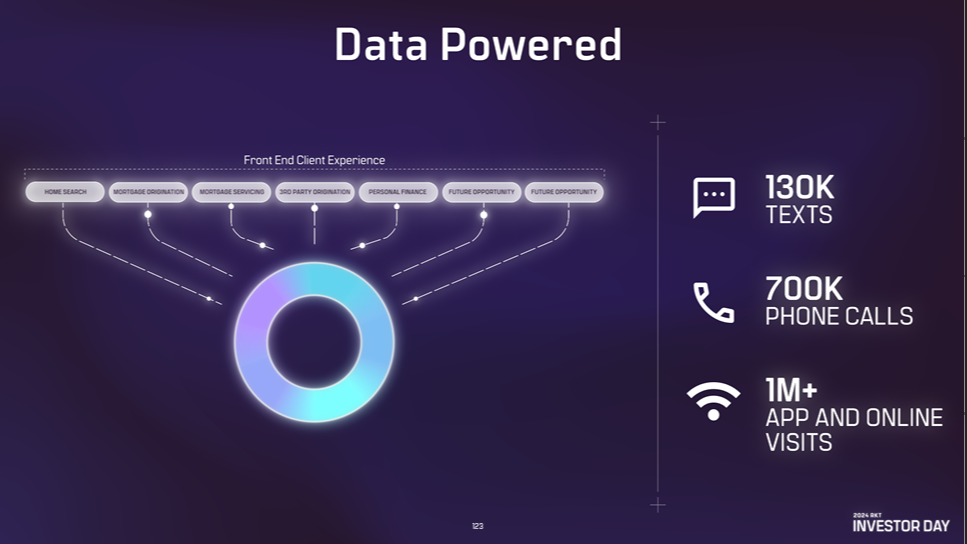

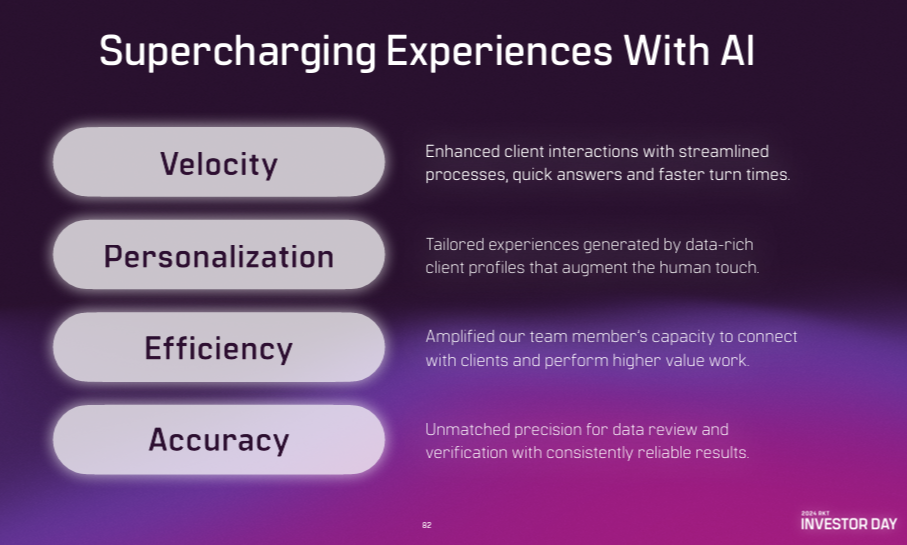

Rocket CEO Varun Krishna said the company's "super stack" of technology is critical. "What makes our super stack special from a technology perspective? We have created a groundbreaking new architecture. It's data powered, humanity driven, and self-learning. This engine fuels every aspect of our ecosystem and we've spent years perfecting it. It's now driving efficiency, velocity and experience across the company," he said.

Heather Lovier, Chief Operating Officer at Rocket, said the technology stack has been applied to every process at Rocket. In underwriting, the company has "taken complex processes and the categories of income, property, asset, and credit and broken them down into hundreds of thousands of discrete tasks in order to apply automation and AI." That approach also extends into the experience layer. Lovier said AI is a game of inches and continual improvement. "We've been working on AI long before it was sexy," she said.

During Rocket's investor day, CTO Shawn Malhotra, who started in May after being Head of Engineering and Product Development at Thomson Reuters, laid out the plan. First, Malhotra outlined how Rocket's previous technology decisions left it in good shape for genAI. "AI is not new. It's been around for a while and it's been powerful for a while. Rocket delivered its first production AI models back in 2012," said Malhotra. "We now have more than 200 AI models in production adding real value for our business and our clients. It's important to remember that AI is not a what, it's a how that enables powerful outcomes. Those outcomes are what matter to our clients and our business."

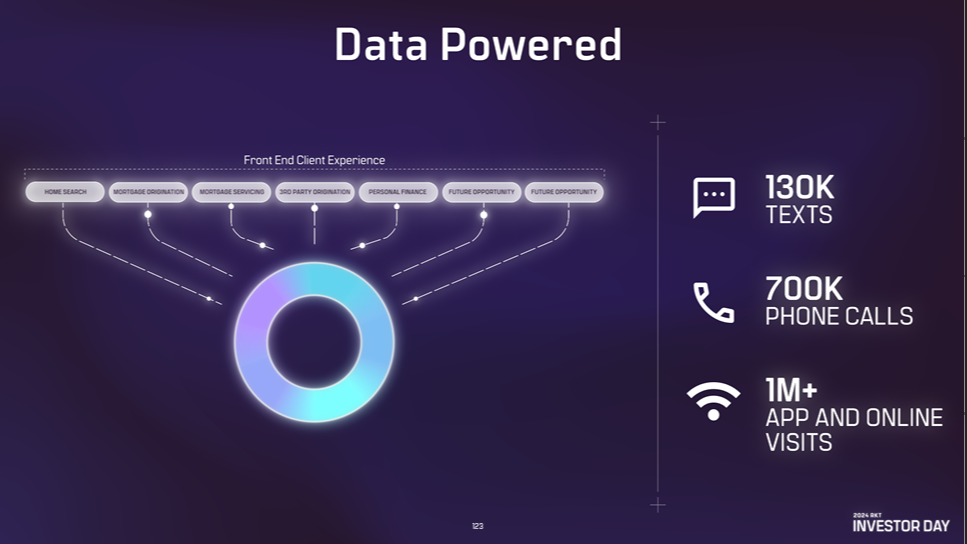

For Rocket, those outcomes revolve around using AI to enhance the "entire homeownership journey" with more seamless processes that are efficient and personalized. This strategy means AI is present at every customer touchpoint. Rocket works with Amazon Web Services and Anthropic to meld third-party models and proprietary systems. "We're always going to focus on our secret sauce and our proprietary AI models, but then we're going to deeply partner with the world's best to create large language models that are across domains," said Malhotra. "We're not going to just buy this from them. We're going to co-create."

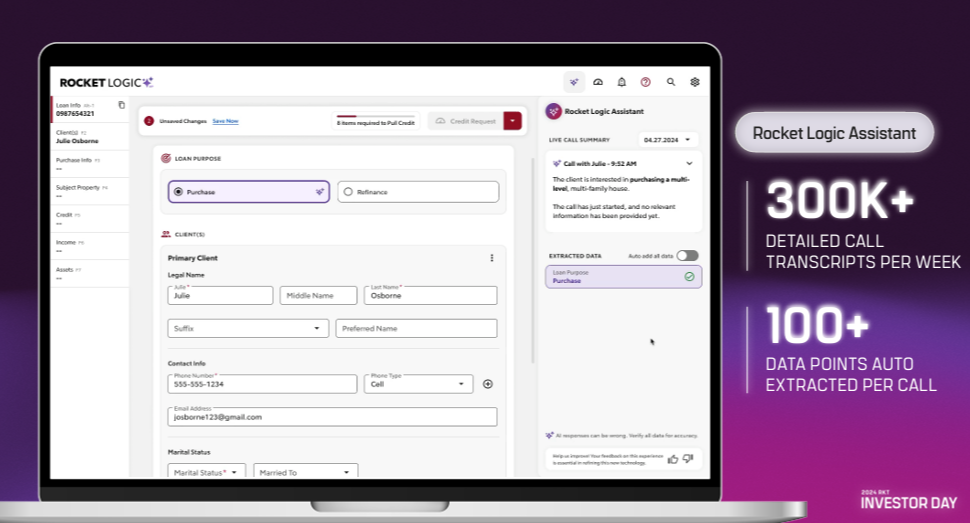

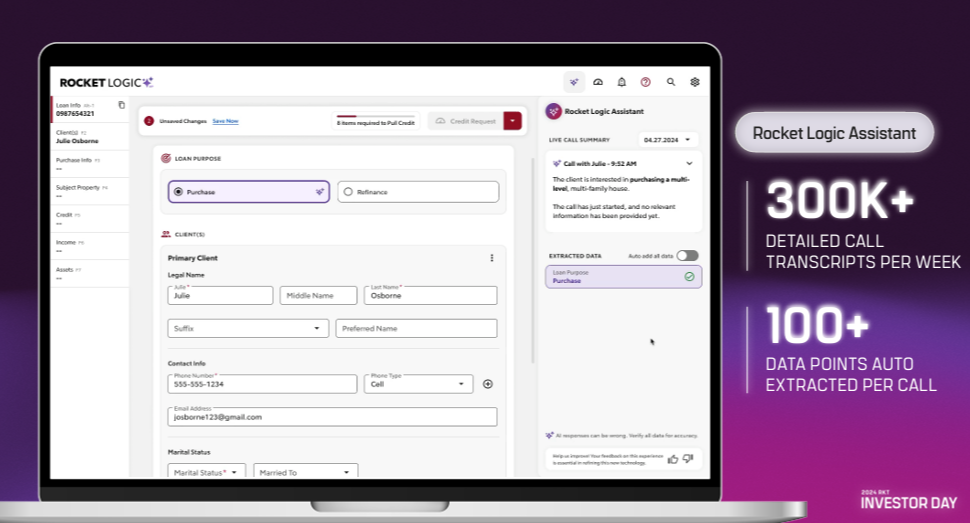

GenAI is also about productivity. Rocket said its closing times for refinancing and home equity loans are 30% to 45% faster than industry benchmarks. The company also uses AI to process more than 300,000 client call transcripts each week to extract data points.

Malhotra said the next phase of AI for Rocket is about accelerating experiences in the homeownership journey. GenAI will be deployed more in marketing automation, customer interactions and servicing. Rocket also plans to double its use of AI in software development and customer-facing operations.

"We're helping our developers produce more code automatically in the last 30 days. We estimate that 27% of our code over a quarter was written by the AI. That's a good start, but soon, we're going to be doubling the number of developers who are using these tools," said Malhotra.

AI-powered chat across all digital platforms was another key theme. Malhotra said the models will be improved to blend AI with human empathy.

He outlined the following AI services that are being enhanced or rolled out.

Rocket Data Platform: Malhotra said the data platform needs continual improvement, with new features such as ID resolution, more data ingestion and democratized access through natural language.

Rocket Exchange: A platform with 122 proprietary models powered by 6TB of data that allows the company to accurately price mortgage-backed securities in less than a minute.

Rocket Logic Assistant: A personal assistant for mortgage bankers, Rocket Logic Assistant transcribes calls, auto-completes mortgage applications, and extracts key data points. This helps bankers focus on relationship-building and customer service rather than manual tasks. Rocket plans to expand its capabilities and automate more of the mortgage process.

Pathfinder and Rocket Logic Synopsis: These tools use generative AI to assist customer service agents by reducing the time needed to resolve customer queries. This has led to a 68% reduction in the time to resolve client requests.

Rocket Navigator: Rocket will build out Navigator so non-technical team members can leverage AI and contribute to product development.

Rocket said it is increasingly focused on developing AI tools to improve mid-funnel experiences, with an emphasis on personalization and long-tail engagement. These tools will deliver bespoke guidance, educational content, and tailored recommendations to potential homebuyers over time.

The company also hinted that it wants to develop AI-powered real estate tools to help homebuyers search for properties, get insights and automate more of the home-buying process beyond mortgages. This effort is likely to be built on data from Rocket Homes and other properties in its ecosystem.

