Editor in Chief of Constellation Insights

Constellation Research

About Larry Dignan:

Dignan was most recently Celonis Media’s Editor-in-Chief, where he sat at the intersection of media and marketing. He is the former Editor-in-Chief of ZDNet and has covered the technology industry and transformation trends for more than two decades, publishing articles in CNET, Knowledge@Wharton, Wall Street Week, Interactive Week, The New York Times, and Financial Planning.

He is also an Adjunct Professor at Temple University and a member of the Advisory Board for the Fox School of Business's Institute of Business and Information Technology.

Constellation Insights does the following:

Cover the buy side and sell side of enterprise tech with news, analysis, profiles, interviews, and event coverage of vendors, as well as Constellation Research's community and…


Agentic AI is going to hit sprawl quickly, boardrooms are being reconstituted over fears of being left behind, genAI is still mostly an experiment with fuzzy returns, and old-school issues like change management still determine whether companies successfully move from pilot to production.

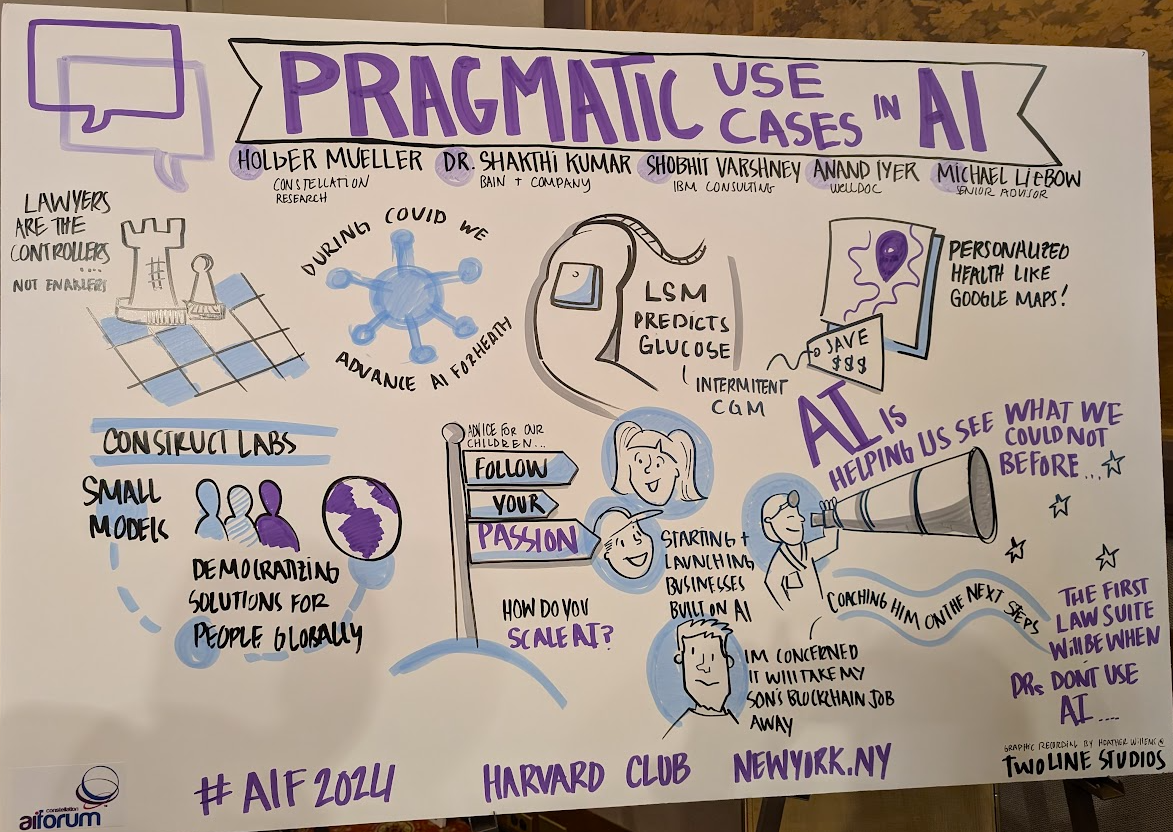

Those are a few of the takeaways from Constellation Research's AI Forum. Here's a look at everything we learned at the AI Forum in New York.

The board of directors is driving the AI conversation. AI is clearly a boardroom issue, said Betsy Atkins, CEO of Bajacorp. "What boards have figured out is that if they don't lean in and adopt AI and technology they're going to be left behind," said Atkins.

Boards are also being reconstituted for AI. "I see boards shifting in terms of cohorts," said Atkins, who added that enterprises are building boards that can evaluate technology as well as new business models that differentiate.

However, boards also want ROI. Atkins said enterprises are looking for use cases with quick ROI because boards now realize how expensive AI can be.

Change management is more important than technical capability in production generative AI deployments, said Michael Park, SVP and Global Head of AI GTM at ServiceNow. Park added: "I think getting the data structure ready and the instance ready is the easy part. That's just the tech, and there's hard work that needs to be done around it. The challenge that we're seeing right now is the organizational change management and getting people to see what's possible. Change management has been the biggest struggle. The tech is real."

Agentic AI. "There's no doubt that agentic AI is the future," said Park. He said there will be two domains of AI agents. One will augment an individual human to supercharge their capabilities. The other will be an aggregated set of agents that work on behalf of a unit. "I think every job is going to be affected in some ways and transform productivity for employees and customer experiences," said Park.

Attendees at the AI Forum generally agreed that the agentic AI wave is real, but doubted the technology has quite caught up with production use cases yet. That skepticism sure hasn't stopped vendors from talking up agentic AI, though.

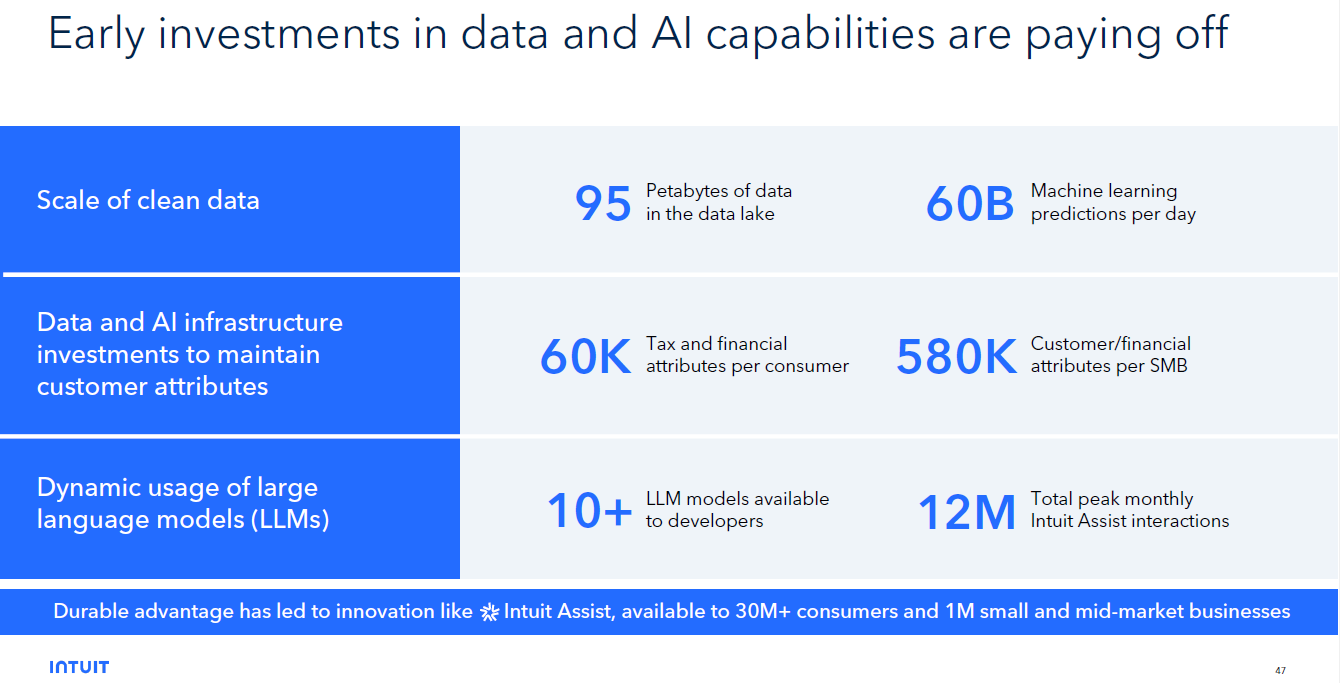

In recent weeks, Salesforce, Workday, Microsoft, HubSpot, ServiceNow, Google Cloud and Oracle all talked about AI agents and likely overloaded CxOs who have spent the last 18 months trying to move genAI from pilot to production. Other genAI front runners, such as Rocket, Intuit and JPMorgan Chase, have mostly taken the DIY approach and are now evolving their strategies.

Agent orchestration will be needed quickly because agent overload is coming soon. Boomi CEO Steve Lucas put a timeline on it: "The digital imperative is how do I work with agents? The number of agents will outnumber the number of humans in less than three years," said Lucas. Fun fact: Constellation Research analyst Holger Mueller thinks Lucas' prediction is way too conservative.

This post first appeared in the Constellation Insights newsletter, which features bespoke content weekly and is brought to you by Hitachi Vantara.

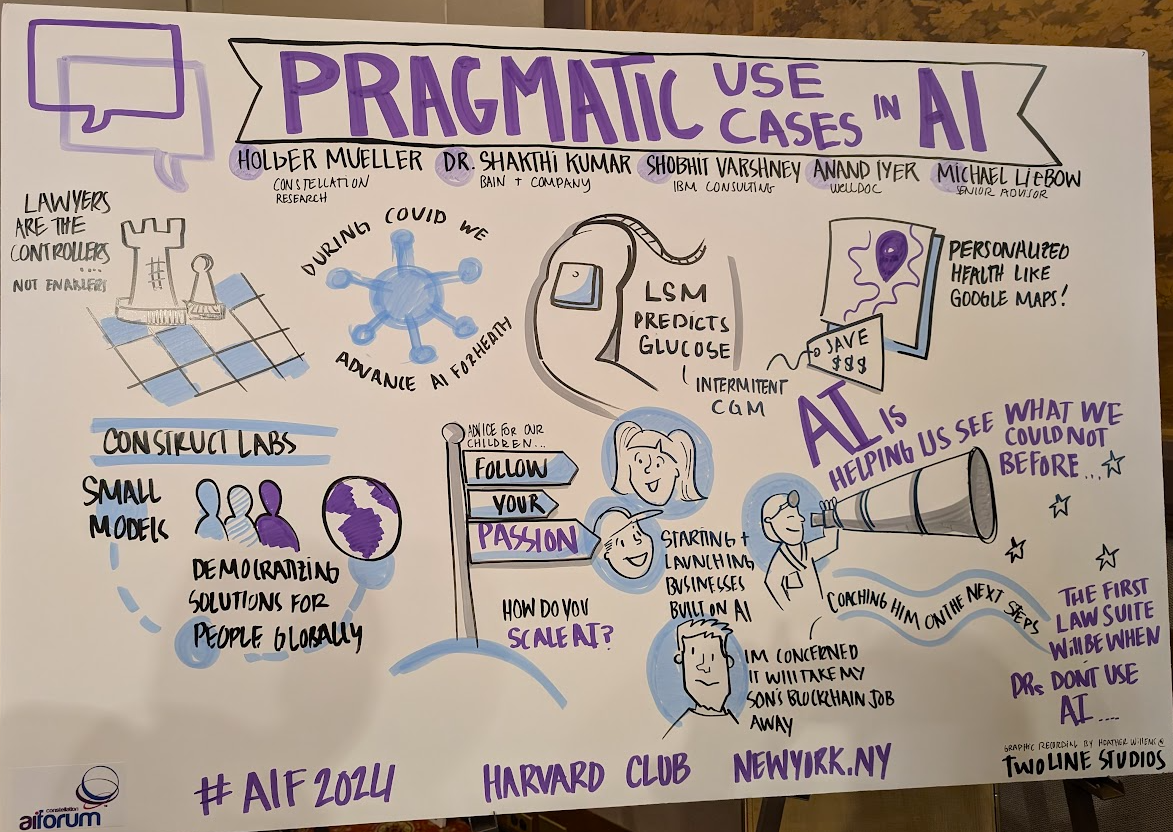

Healthcare is expected to be the industry most transformed by AI. Multiple attendees and panelists at AI Forum said healthcare will see the most transformational impact from AI. Anand Iyer, Chief AI Officer at Welldoc, said data and AI can improve outcomes and make care more preventative. Iyer said: "You can actually figure out what cocktail of exercise, food, stress reduction, and all of these vectors that drive somebody's own health. You can figure out the exact cocktail that works for Person X, in a way that fits into their life flow and their clinician's workflow."

There may be a catch with AI-driven health transformation: AI may bring costs down initially but could end up making care more expensive due to the level of personalization.

Trust in AI will take time. Scott Gnau, Vice President of Data Platforms at InterSystems, said every technology wave requires time to earn trust. Gnau noted that building trust in a technology can take years, but AI has a chance to earn user trust quickly. "One or two bad answers can set generative AI back, but the addition of provenance and governance helps," said Gnau. "One of the game changers is that AI can actually be used to explain the provenance of the answer. I think we have a unique opportunity to accelerate trust."

Generative AI is still in the science experiment phase. Gnau added that there's a degree of FOMO with deploying AI. "Is generative AI or a large language model the right tool for every problem out there? Absolutely not. There are things that you've built that run your business today that are good so don't suck all of the budget away and let them crumble," said Gnau. "Make those systems better with AI and use the right tool for the right processes."

The AI playbook isn't fully baked. In a pop-up survey at the AI Forum, 35 CxOs indicated that they are trying a little bit of everything when it comes to AI, using multiple approaches to build AI capabilities (good thing they could give multiple answers). The majority (79%) said they were developing home-grown AI services on hyperscale cloud platforms, and 48% were also using open-source frameworks and large language models. Many of these efforts also included AI embedded in packaged applications they already use, such as Salesforce, Adobe, Oracle and SAP.

Data quality remains the biggest hurdle in generative AI deployments. "Data quality is the biggest roadblock to realizing generative AI's full value. You need a data-driven strategy combined with a model-driven strategy and then you can iterate quickly," said Michelle Bonat, Chief AI Officer of AI Squared. Without a focus on data quality, your models won't be good enough to use.

The role of the Chief AI Officer. Like CIOs and CTOs, Chief AI Officers will need to understand a wide range of business functions, but leading AI strategy also requires deep knowledge of the technology. "I think it's necessary to have someone with a good knowledge of AI," said Phong Nguyen, Chief AI Officer of FPT Software, which is based in Vietnam. "You need to have the deep technical skills and understand what AI can bring."

Minerva Tantoco, CEO of City Strategies LLC, agreed. "When something is relatively new with a lot of potential it really does require a strong alignment with the goals of the organization," she said. "Once you set a strategy it becomes the fabric of the enterprise. But in the beginning, you want the chief AI officer to have a really strong background in AI. This is a transformational role."

AI leaders need to be trilingual. Tantoco said AI leaders need to be trilingual in technology, business and governance and compliance. "At this stage, you need to collaborate across multiple disciplines while leaning into the strong technical background," she said.

David Trice, CEO of inZspire AI, said AI leaders have multiple roles to juggle, starting with the reality that enterprises need to drive AI or they'll fall behind. Trice echoed Tantoco's sentiment that AI leaders need to bring multiple threads together. "Product, data and AI innovation need to be at the table with legal, compliance and security," said Trice.

Human-led AI or vice versa? Chris Nicholas, President and CEO of Sam's Club, said artificial intelligence is enabling the company to "take 100 million tasks out of our clubs" even as it employs more associates. Yet he has a clear view on who leads the AI charge: humans. AI is about freeing humans from the mundane so they can solve customer problems.

AI and human rights. But just in case AI does kill jobs, it's worth pondering a human rights update for the age we're entering. Will there be a reskilling safety net and the ability for humans to pursue their passions for a living? A workshop on AI and human rights surfaced a lot of thoughts about the right to work, the right to opt out of AI, and what's likely to become augmented humanity. One prevailing thought was that today we are using AI to augment human intelligence, but in the future that pecking order will be reversed and human intelligence will augment AI.
