Atlassian Rovo AI additions go GA with consumption pricing on deck

Atlassian said its latest AI features and Rovo, a generative AI assistant that works across the company's platform, are now generally available across its products. Atlassian also introduced Rovo Agents.

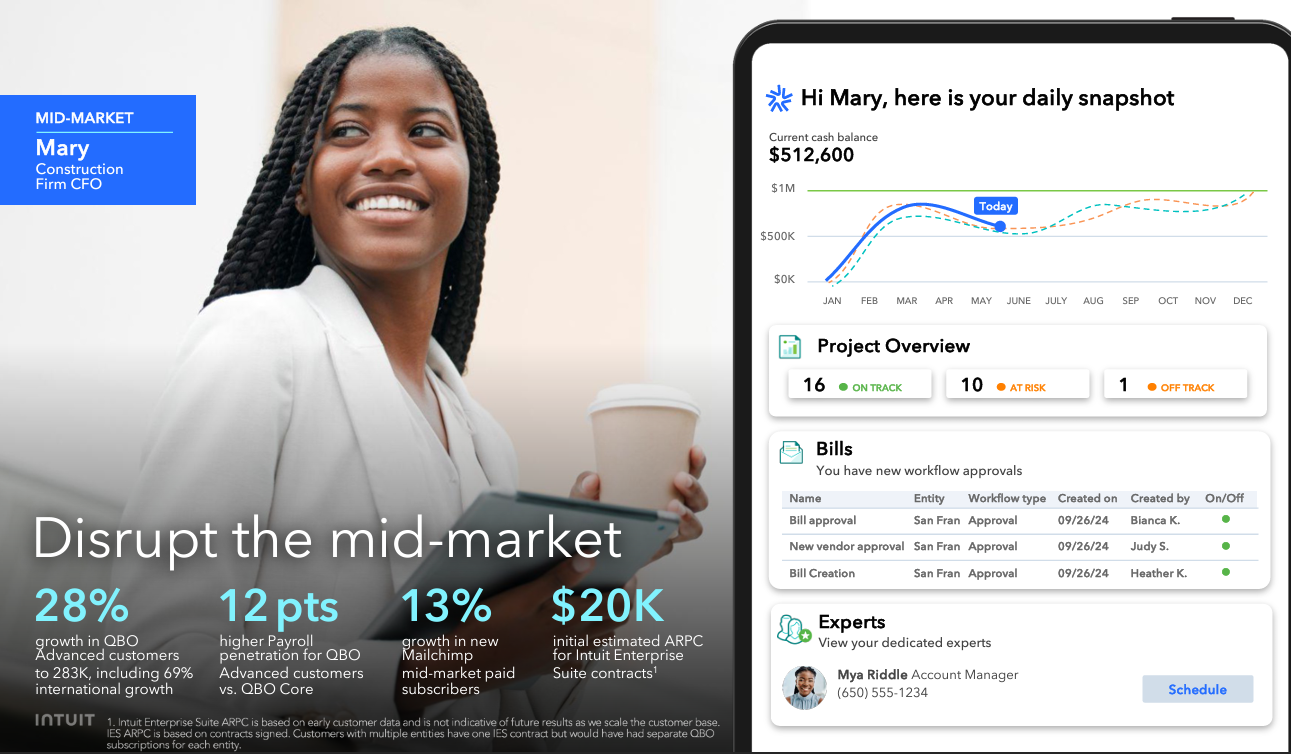

The company said it will offer Rovo at $20 per user per month on annual subscriptions and $24 per user per month on monthly subscriptions, with consumption pricing to follow in mid-2025. Licensing plans will be based on Rovo use per site, where any user with access to the site is a billable user. Enterprises would pay only once per billable user.
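
To make the arithmetic concrete, here is a minimal sketch of how a yearly bill might compare under the two seat-based rates. It assumes that "pay once per billable user" means a user with access to several sites is counted a single time; the site names, user names and helper functions are hypothetical and are not taken from Atlassian's billing documentation.

```python
# Rates quoted in the article, in USD per user per month.
ANNUAL_PLAN_RATE = 20   # billed on an annual subscription
MONTHLY_PLAN_RATE = 24  # billed month to month


def billable_users(site_access):
    """Return the set of distinct users across all sites.

    Assumption: a user with access to several sites is billed once.
    """
    users = set()
    for members in site_access.values():
        users |= set(members)
    return users


def yearly_cost(num_users, rate_per_user_month):
    """Cost of a full year at a given per-user monthly rate."""
    return num_users * rate_per_user_month * 12


# Hypothetical organization with two sites and one overlapping user.
site_access = {
    "engineering": ["ana", "ben", "chen"],
    "support": ["ben", "dara"],
}

users = billable_users(site_access)
annual_total = yearly_cost(len(users), ANNUAL_PLAN_RATE)
monthly_total = yearly_cost(len(users), MONTHLY_PLAN_RATE)

print(f"Billable users: {len(users)}")
print(f"Annual plan, 12 months:  ${annual_total}")
print(f"Monthly plan, 12 months: ${monthly_total}")
```

Under these assumptions, the four distinct users would cost $960 a year on the annual plan and $1,152 on the monthly plan, a 20% premium for paying month to month.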

Enterprise software vendors have been tweaking monetization models, with some pricing by consumption or even by conversation with an AI agent.

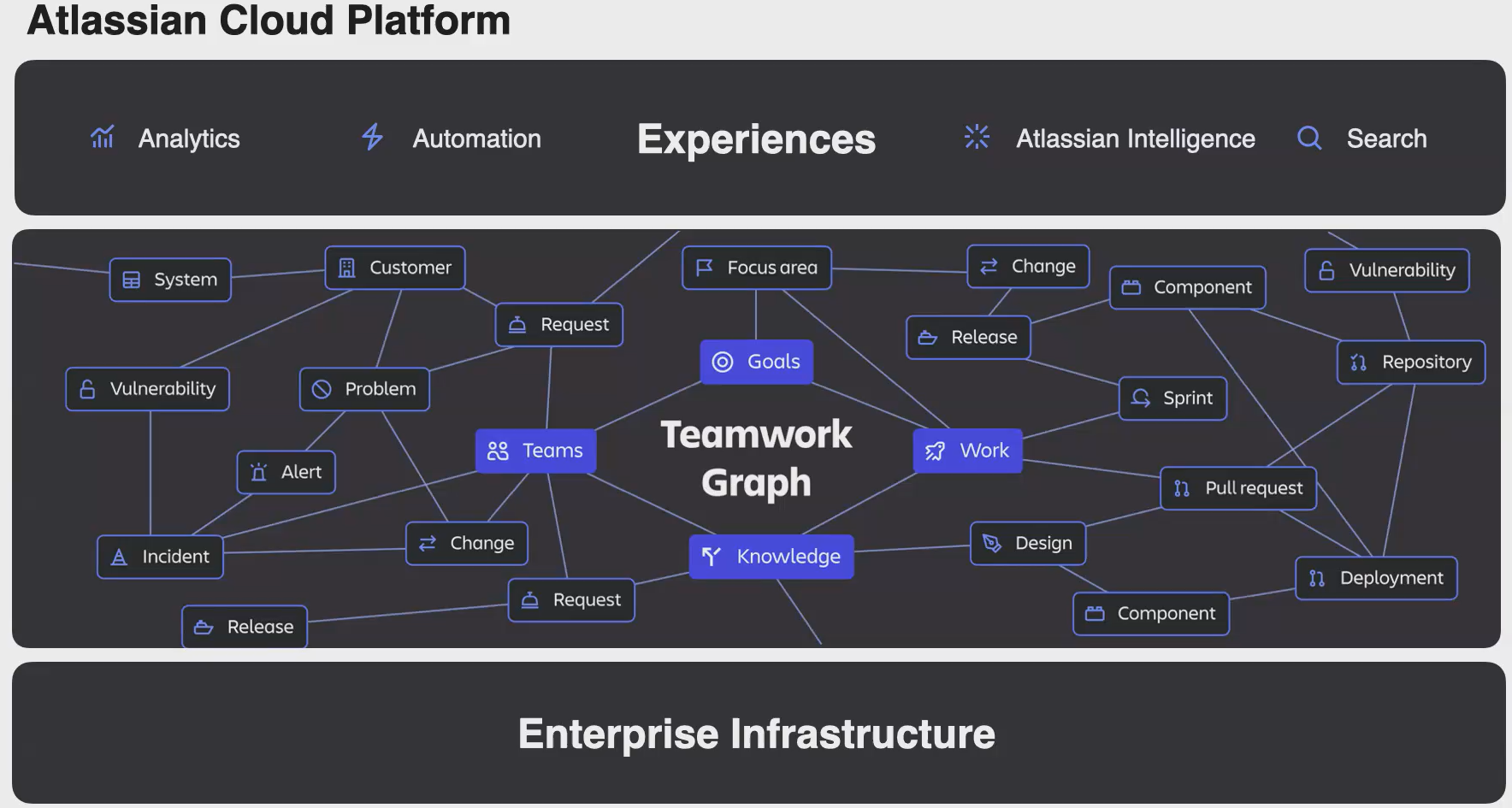

In May, Atlassian launched Rovo with the following core components:

- Rovo Search, which will comb through content wherever it is stored (Google Drive, Microsoft SharePoint, GitHub, Slack, etc.) and query across applications. Rovo Search will identify team players, projects and information needed to make decisions. Rovo Search will connect niche and custom apps via API and offer enterprise-grade data governance.

- Insights, which are delivered via knowledge cards that offer context about projects, goals and teammates.

- Rovo Chat, a conversational bot that is built on company data and learns as it goes.

In a briefing, Jamil Valliani, Head of Product AI at Atlassian, cited early customers who have boosted efficiency by about 25% using Rovo with their development teams. Rovo beta testers said they've saved 1 to 2 hours per week.

Atlassian is linking Rovo Search to data sources via connectors and feeding that data to Rovo Chat and the rest of the platform.

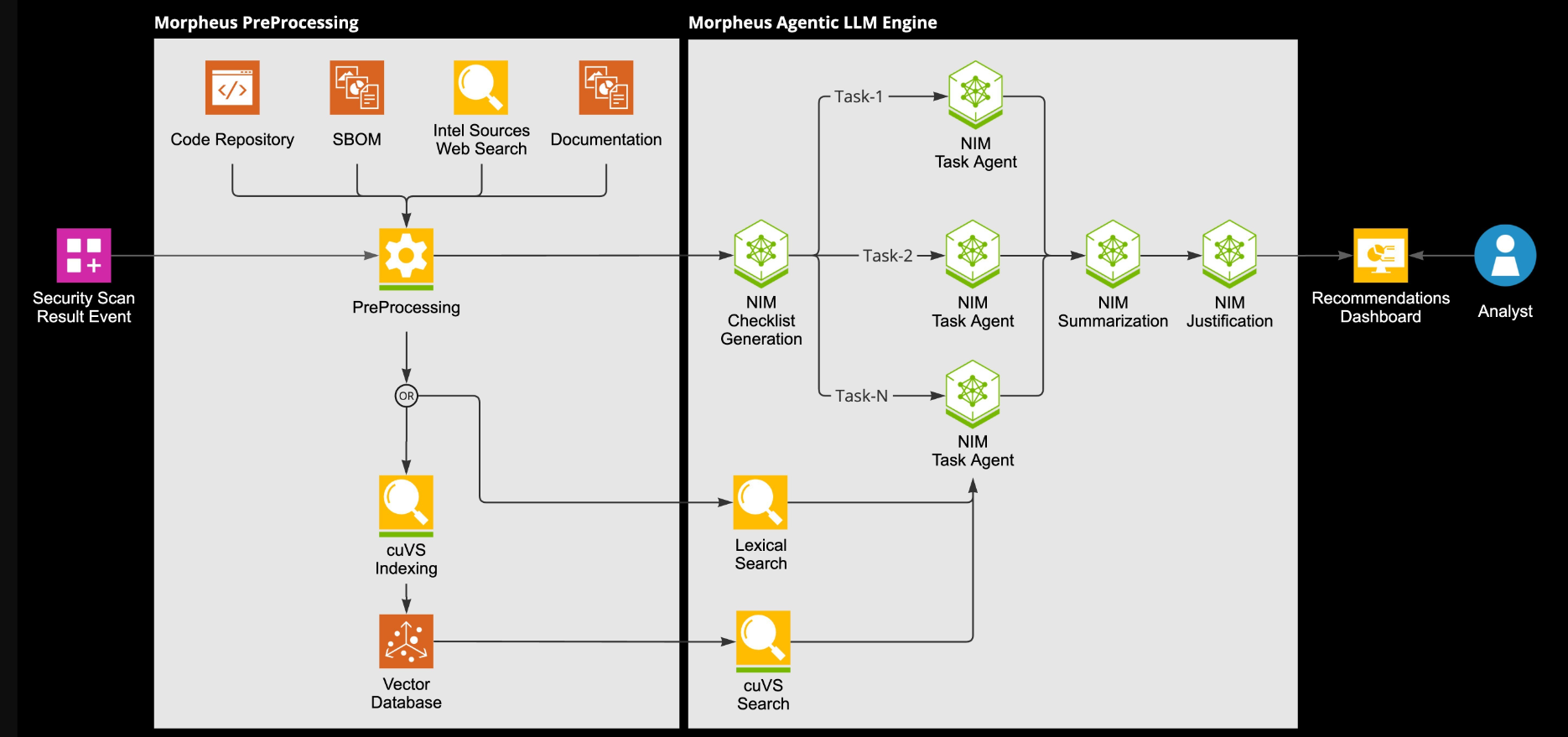

The company also outlined Rovo Agents, which operate out-of-the-box from Atlassian's marketplace partners. Atlassian is providing more than 20 out-of-the-box agents and tools to build your own Rovo Agents with low-code and no-code tools.

According to Atlassian, Rovo Agents can speed up the development process by automatically generating code plans, code recommendations and pull requests based on task descriptions, requirements and context.

Other updates for Atlassian Intelligence include:

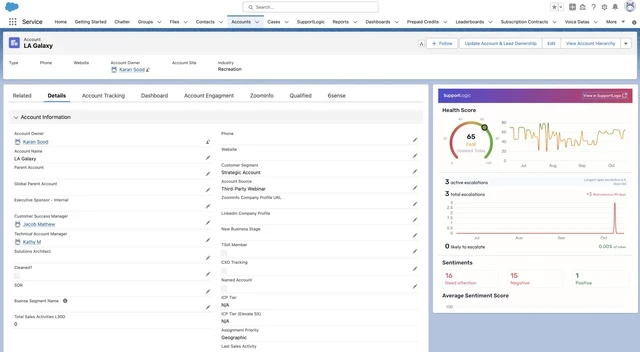

- Jira Service Management will use AI to group related alerts, surface critical incidents and suggest the right resources and subject matter experts. The AIOps capabilities also capture incident timelines, generate post-incident reviews and summarize details.

- The Jira Service Management virtual service agent will automate support across multiple platforms and add new onboarding and automation enhancements.

- Loom will get AI-powered automated workflows via integrations with Jira and Confluence.

Atlassian's AI additions will be critical to the company's future growth. In August, Atlassian projected first-quarter revenue of $1.149 billion to $1.157 billion, below the consensus estimate of $1.16 billion. For fiscal 2025, Atlassian projected revenue growth of about 16%, below the 18% expected by Wall Street.

The company at the time cited uncertain macroeconomic conditions and an evolving go-to-market strategy.

Speaking at an investment conference, Atlassian Chief Operating Officer Anu Bharadwaj said early adoption of Atlassian Intelligence and Rovo has been strong.

"Thousands of customers have adopted Atlassian Intelligence already so far, and I'm very pleased with the repeated usage that it gets because one of the interesting things about AI is where are the use cases where you can unlock tangible productivity benefits. I think it is still early innings, so I'm very much looking forward to seeing how that plays out."

Regarding pricing, Bharadwaj said Atlassian has raised prices for its cloud products over time as it has added AI, automation and new features. "The price increases are very much in tune with the amount of customer value that we are able to deliver," she said. "In terms of seat-based versus not, I do think that there is an interesting exploration there around consumption-based pricing, which we will really think through, especially in an AI world, where we talk about virtual agents, which will be different than a seat-based model."