The art, ROI and FOMO of 2025 AI budget planning

Artificial intelligence budgets will surge again in 2025, but good luck tracking expenditures with any precision, because generative AI spending is lumped into other categories and driven by multiple departments.

Yes folks, it's 2025 budget season, and the biggest question from CxOs at our BT150 meetup in late September was whether there will be a dedicated budget for AI. As in 2024, 2025 AI budgets will be spread across multiple departments and tucked away in other areas like compliance and cybersecurity.

Why is AI spending so murky? CxOs at our BT150 meetup noted that traditional budget processes and frameworks don't align with AI science projects and their unpredictable costs.

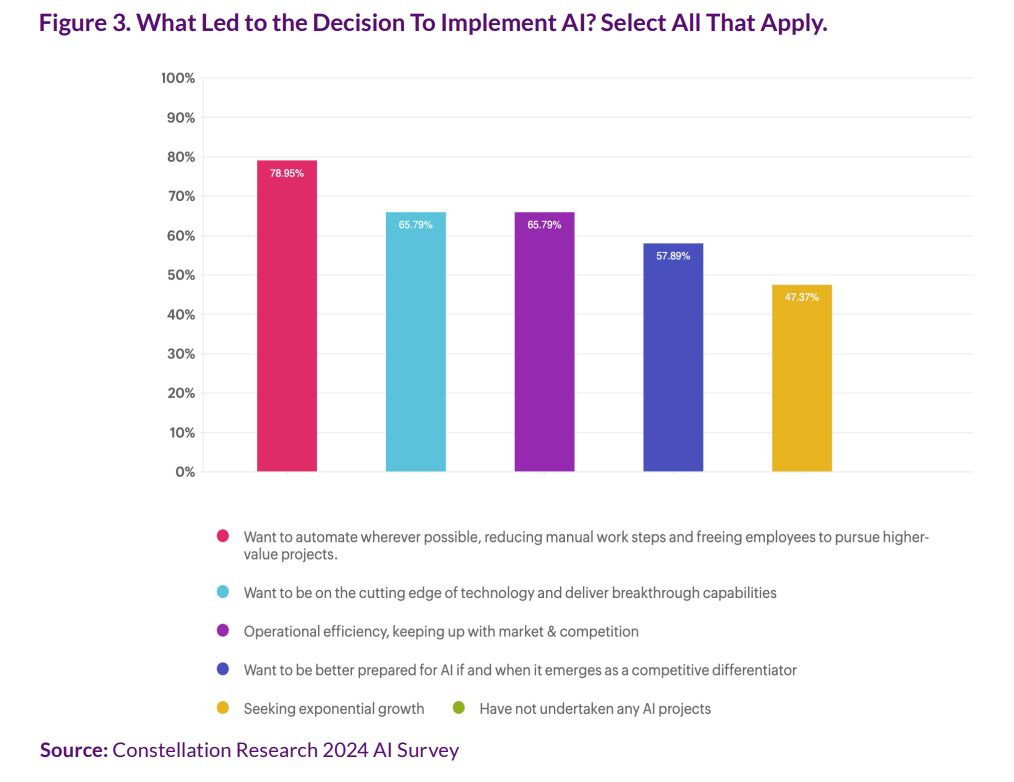

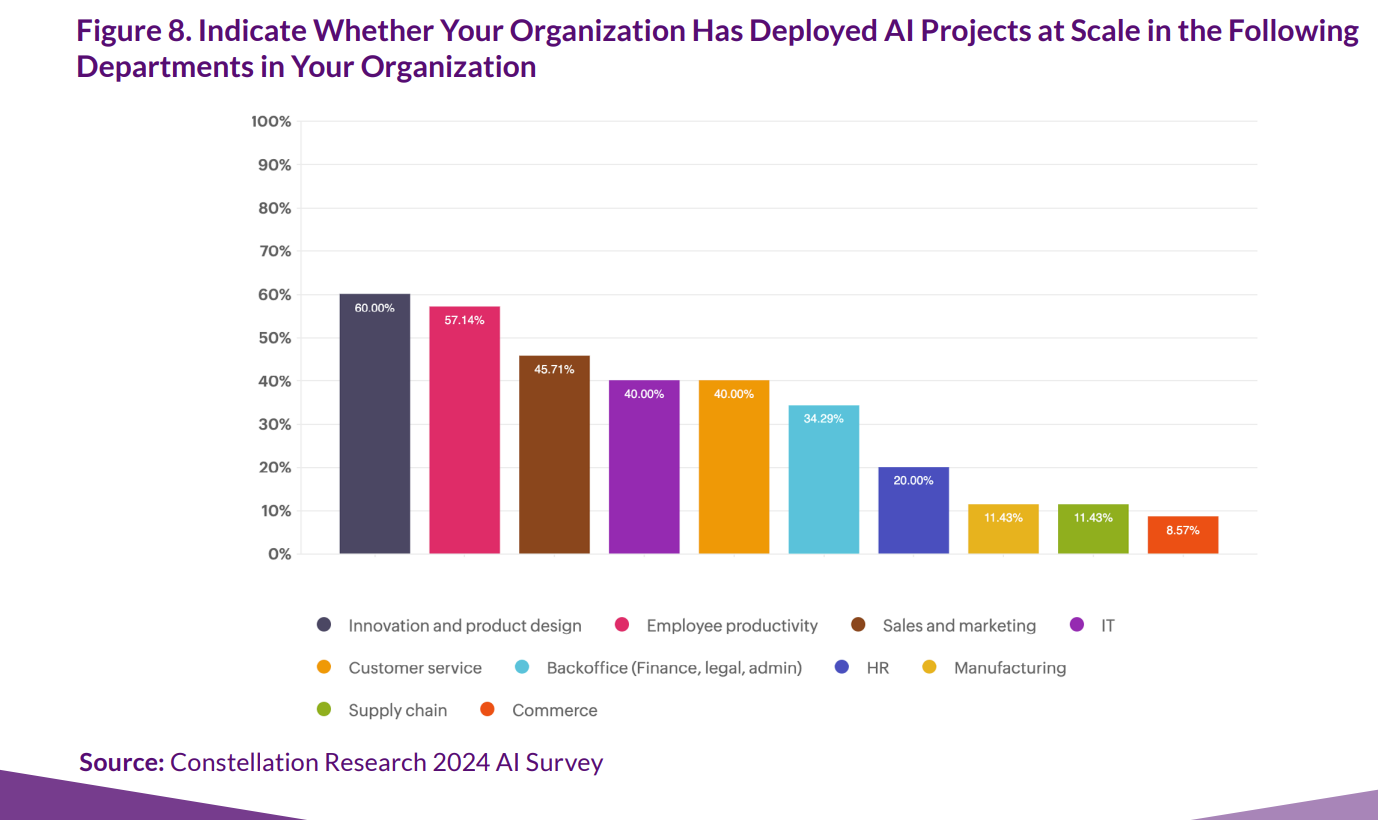

Constellation Research's BT150 meetup highlights how AI budgets are evolving. The only certainty is that enterprises plan to spend more. Constellation Research's AI Survey of 50 CxOs found that 79% of respondents are increasing AI budgets and 32% see budgets increasing by 50%.

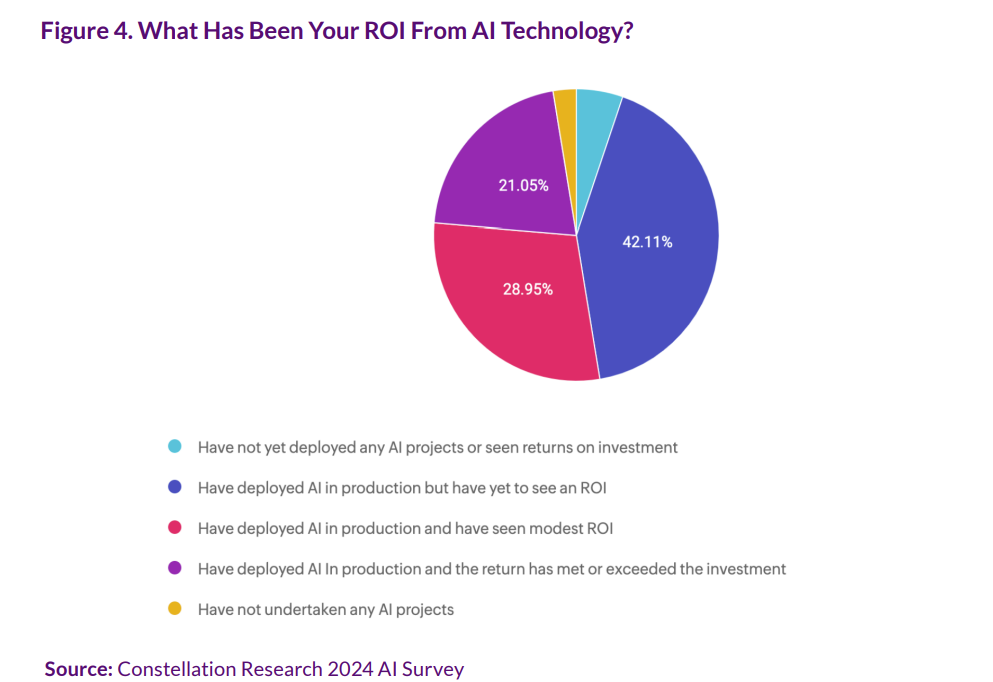

These budget increases are coming even though returns on investment have been spotty. Forty-two percent of respondents said they have deployed AI in production but haven't seen ROI. Another 29% said they've seen modest ROI.

But what is an enterprise going to do? Are companies really going to go on record saying they aren't going to spend on AI? Fears of missing out on AI and worries about falling permanently behind the innovation curve are real. However, FOMO isn't much of a strategy, just as hope isn't.


Rational AI spending and dedicated budgets will be a 2026 story. For now, CxOs in our network (in meetups held under the Chatham House Rule) are noting the following:

- Companies are not creating separate AI budgets, but incorporating components into existing business cases and projects.

- There's a trend toward allocating enough funds to continue AI initiatives without committing massive, dedicated budgets that would require extensive justification and scrutiny.

- The focus in 2025 is practical AI applications with ROI.

- Funds for AI are being reallocated from other areas, such as regulatory compliance, that may have decreased in priority.

- There's an emphasis on information gathering and staying informed about AI developments. Companies are investing time and resources in discussions, debates, and learning about AI capabilities and risks, even if this doesn't directly translate to large dollar expenditures.

- AI learning and training is getting more budget as enterprises look to upskill.

Simply put, AI budgets in 2025 will either poach from existing areas or be lumped into broader spending efforts. This game won't be as easy as it was in 2024, when CxOs could AI-wash damn near any project.

For context on budgets, I recently caught up with BT150 member Ashwin Rangan, who has been in the CxO game for three decades at ICANN, Rockwell International, Walmart and Bank of America. Rangan has seen his share of technology cycles. Here's what Rangan, currently Managing Director of the Insight Group, said about AI budgets and riding new technology waves.

First, Rangan noted that a lot of generative AI will be consumed in existing enterprise technology applications. That won't be new budget per se. On the other end of the spectrum, there will be enterprises that see how generative AI can differentiate their businesses. They'll spend if the right conditions (data, culture, talent) are in place. When budgeting for AI, enterprises need to focus, think through their FOMO and sometimes choose to hang back.

"If the ROI was clear up front, I would be quick out the gate," said Rangan, who noted he chose to be an early mover at Rockwell with SAP. "In other cases, I've chosen to wait with new technologies because while the technology looked promising, the return on investment was not necessarily as promising."

Rangan said genAI is developing so fast that first-mover advantages may not last long, because today's roadblocks may be resolved quickly. "The price you pay for waiting will not be high because we are all learning at the same time," he said.

Here is an early read from the BT150 and Constellation Research analysts on what'll drive the AI budget in 2025.

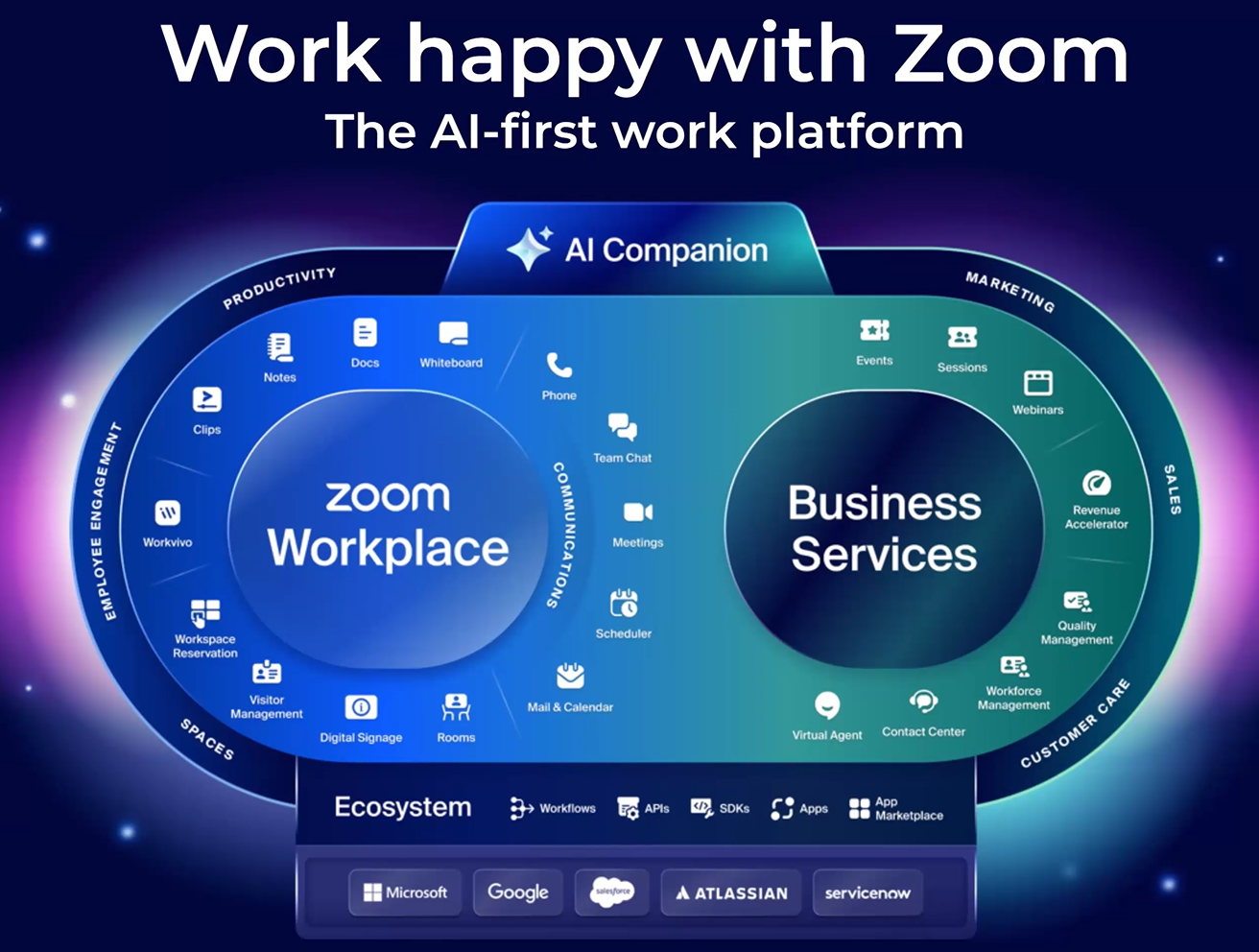

CRM. Salesforce's Agentforce pivot is going to garner some budget, and the economics could be compelling. It's unclear whether Agentforce will be tucked into marketing, sales, customer experience or some other function, but AI agents are going to be everywhere.


Data management and analytics. Generative AI is seen as the big data quality and data management bailout. Enterprises, as always, need to find a way to extract value from datasets where quality might be low.

Compliance. If you want a project funded, just make sure it has some compliance component. This strategy worked well in 2024, so rinse and repeat in 2025 to get AI funds.

Automation and efficiency. AI hasn't always delivered on streamlining processes and automation, but reducing manual work and boosting productivity will always get you more funding. Enterprises are actively exploring IT efficiencies, but product development is also a priority area for optimizing processes.

Cybersecurity. AI is being integrated into security infrastructure for automation, threat detection and response. It's likely that cybersecurity will claw back the budget that was lost to fund AI pilots.

Bottom line: 2025 budgets are just being formed, and enterprises are actively trying to separate AI marketing hype from real impact. Enterprises are also concerned about integration, scalability, and the sprawl of AI agents and generative AI. Nevertheless, enterprises are positioned to spend on AI because the risk of not investing is too great. There will be an enterprise AI spending reset at some point, but not today.
