Editor in Chief of Constellation Insights

Constellation Research

About Larry Dignan:

Dignan was most recently Celonis Media’s Editor-in-Chief, where he sat at the intersection of media and marketing. He is the former Editor-in-Chief of ZDNet and has covered the technology industry and transformation trends for more than two decades, publishing articles in CNET, Knowledge@Wharton, Wall Street Week, Interactive Week, The New York Times, and Financial Planning.

He is also an Adjunct Professor at Temple University and a member of the Advisory Board for The Fox Business School's Institute of Business and Information Technology.

Constellation Insights does the following:

Cover the buy side and sell side of enterprise tech with news, analysis, profiles, interviews, and event coverage of vendors, as well as Constellation Research's community and…

Google Cloud launched a series of updates, including new Gemini 1.5 Flash and 1.5 Pro models with a 2 million-token context window, grounding with Google Search, premade Gems in Gemini in Google Workspace and a series of AI agents designed for customer engagement and conversation.

The updates, outlined at a Gemini at Work event, come as generative AI players increasingly focus on agentic AI. Google is looking to drive Gemini throughout its platform. The pitch from Google Cloud is that its unified stack lets enterprises tap into multiple foundation models, including Gemini; create agents with an integrated developer platform; and deploy AI agents grounded in enterprise data on optimized infrastructure.

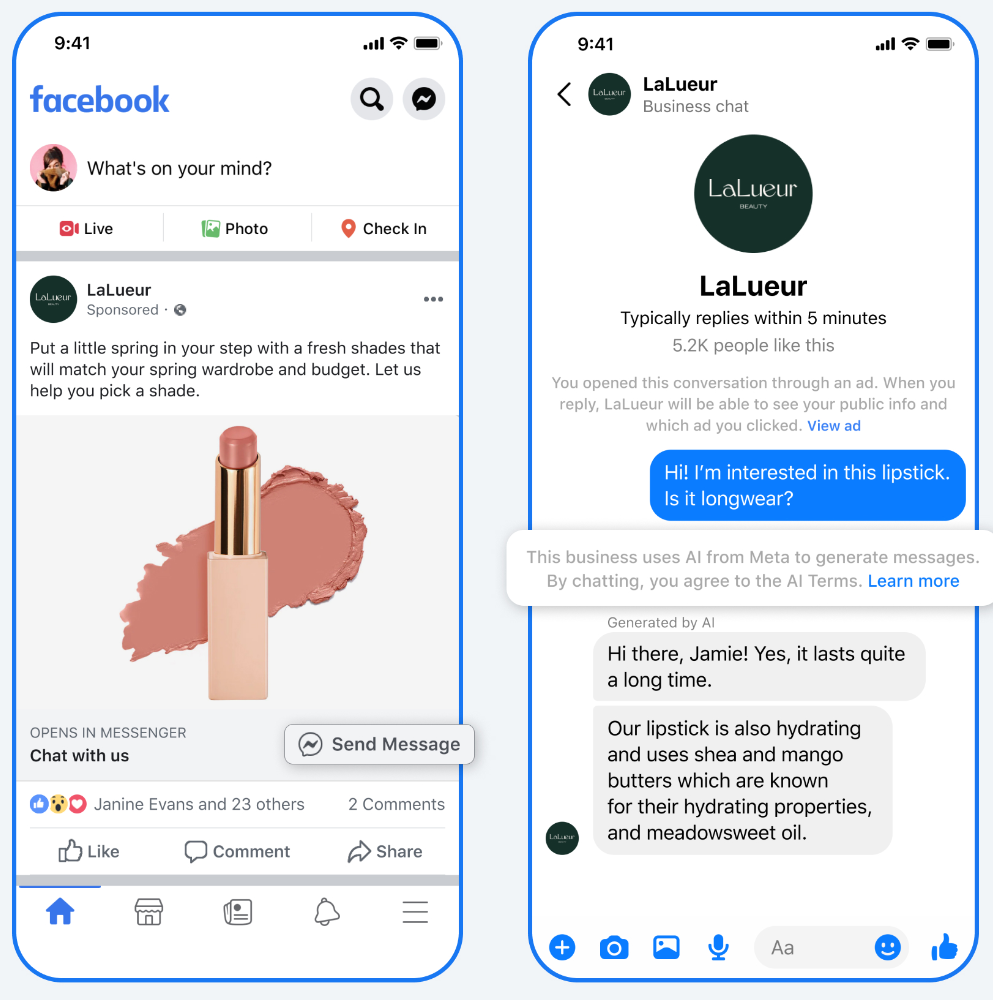

Google Cloud CEO Thomas Kurian recently highlighted the company's agent push at an investment conference, citing a series of use cases in telecom and other industries. Kurian said Google Cloud is introducing new applications for customer experience and customer service. "Think of it as you can go on the web, on a mobile app, you can call a call center or be at a retail point of sale, and you can have a digital agent help you, assist you in searching for information, finding answers to questions using either chat or voice calls," said Kurian.

Google Cloud is showcasing more than 50 customer stories and case studies for Gemini deployments including a big push into customer engagement.

During his Gemini at Work keynote, Kurian said customer agents will focus on real-world engagement and natural voice interaction, and will understand the information needed to give a correct answer. "Customer agents can interact in natural ways without having to navigate menus and traverse systems," he said. "Agents can synthesize all the information you want and your data privately and securely."

Duncan Lennox, VP & GM of applied AI at Google Cloud, said "the enterprise is shaping up to be one of the most impactful transformations that I've seen in my career." Lennox added that AI agents have the potential "to revolutionize how businesses operate, how people interact with technology and even solve some of the world's biggest challenges."

Lennox said a Google Cloud survey found that 61% of organizations are using genAI in production and are increasingly looking to drive returns. Lennox argued that agents are going to enable new applications and experiences in the enterprise.

As for the news, Google Cloud outlined the following:

Vertex AI (all GA unless otherwise noted)

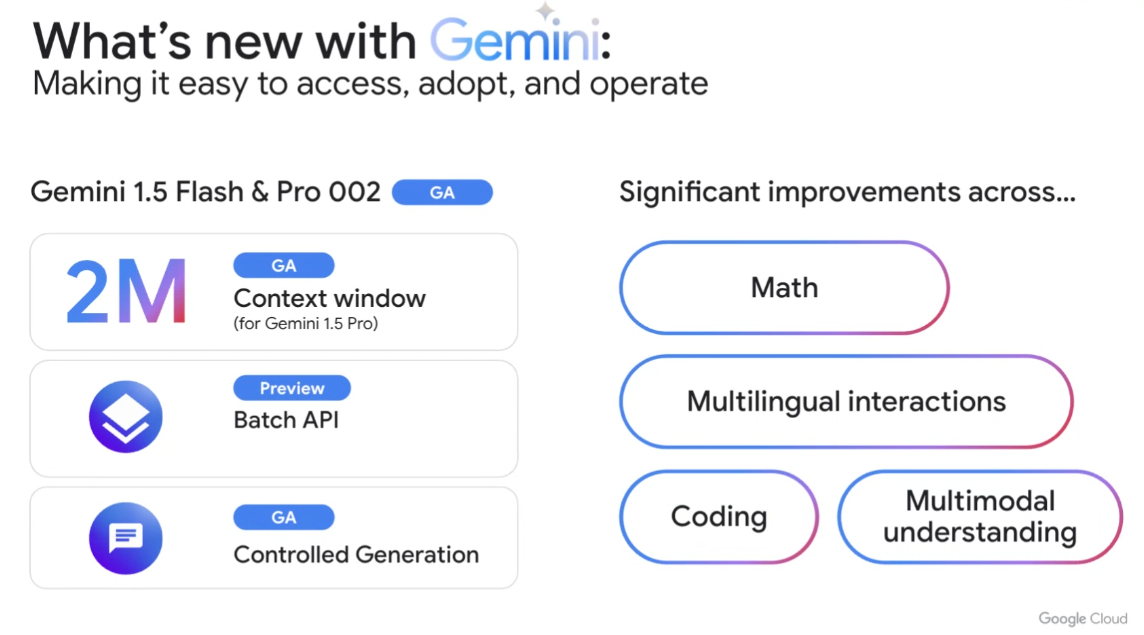

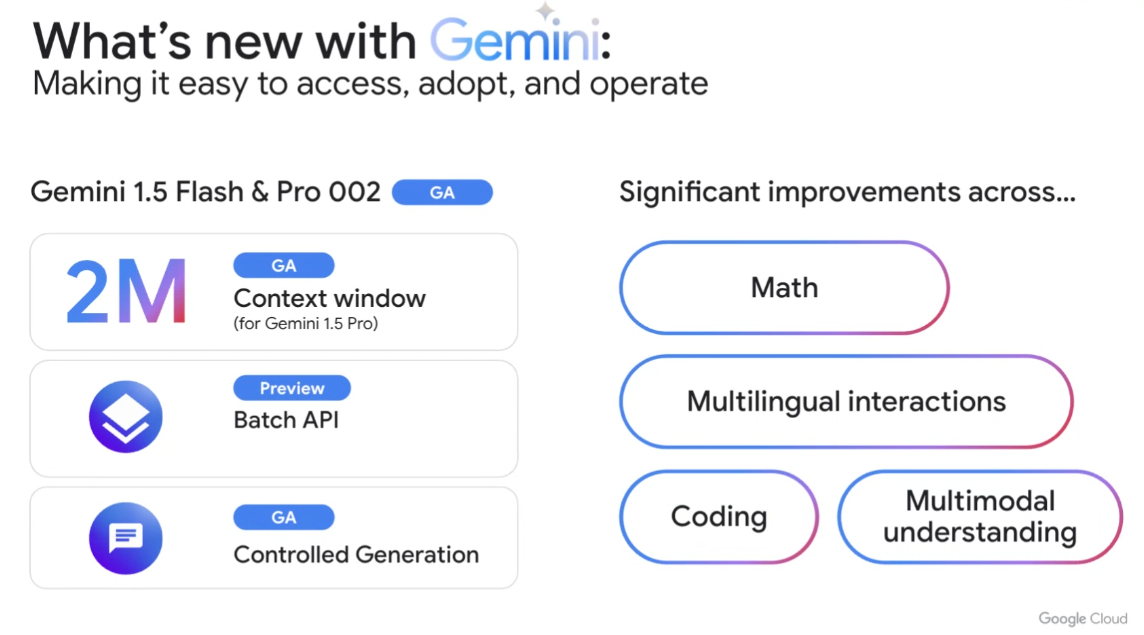

- New models Gemini 1.5 Flash and 1.5 Pro with a 2 million-token context window, double what was available before.

- Controlled generation, which lets you dictate the output format you want from the model.

- Prompt Optimizer in preview.

- Prompt Management SDK.

- GenAI Evaluation Service.

- Distillation for Gemini models; supervised fine-tuning for Gemini 1.5 Pro and Flash.

- Chirp v2.

- Imagen 3 editing and tuning in preview.

- Grounding with Google Search, with dynamic retrieval.

- Multimodal function calling.

- Expanded machine learning processing in North America, EMEA and Japan/Asia Pacific.

Google Workspace

- Premade Gems in Gemini for brainstorming, writing social media posts and coding.

- Custom Knowledge in Gems to carry out repetitive tasks with specific instructions.

- Vids, GA by the end of the year. Vids can start from a single prompt and guide users in telling a story.

- Summarize and Compare PDFs.

- Gemini in Chat.

- Gemini for Workspace Certifications.

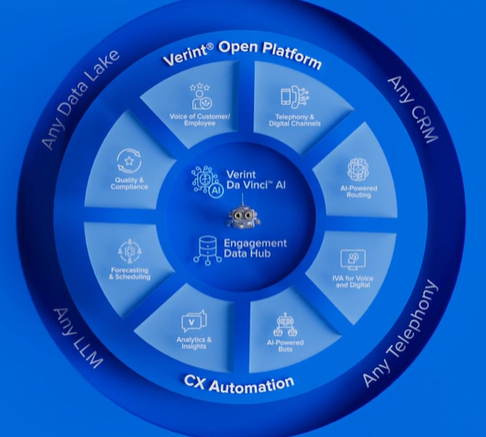

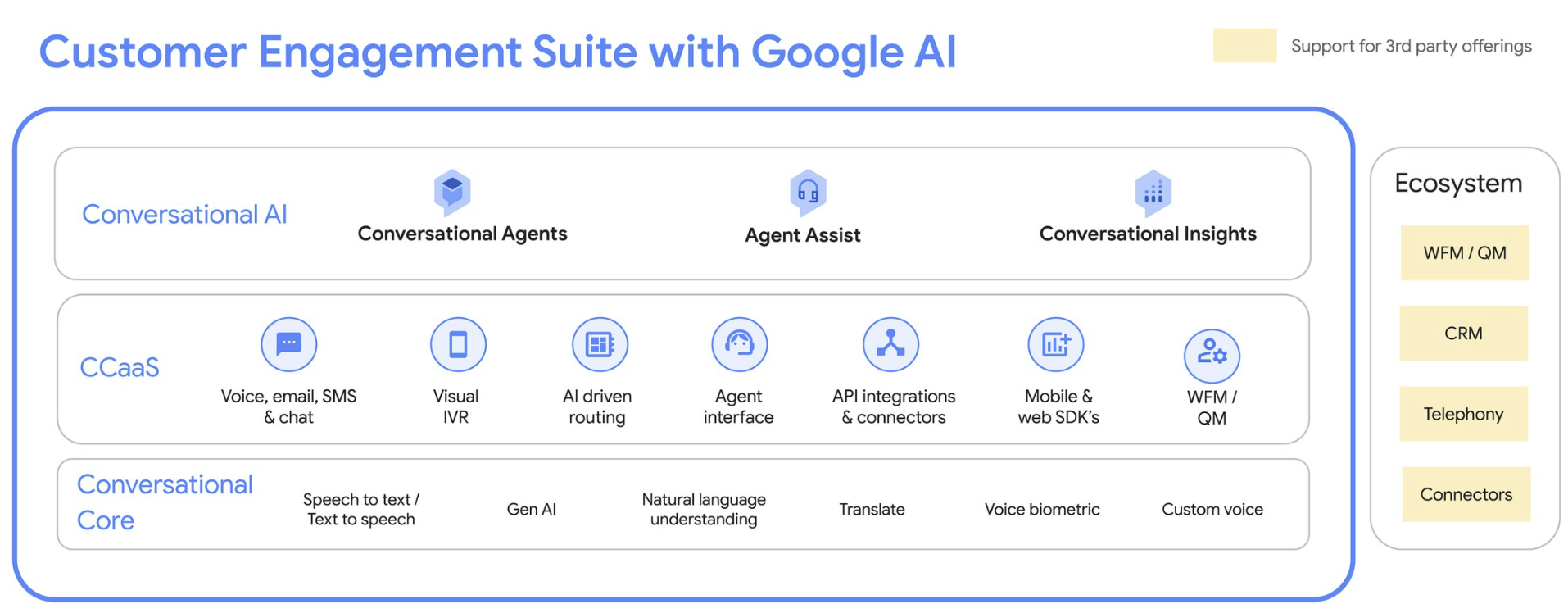

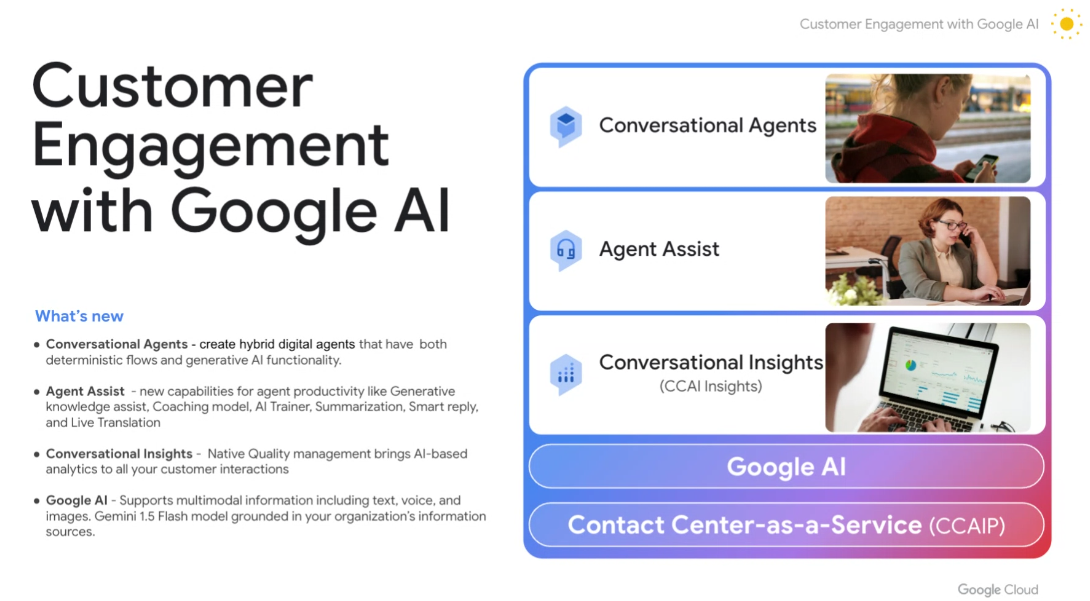

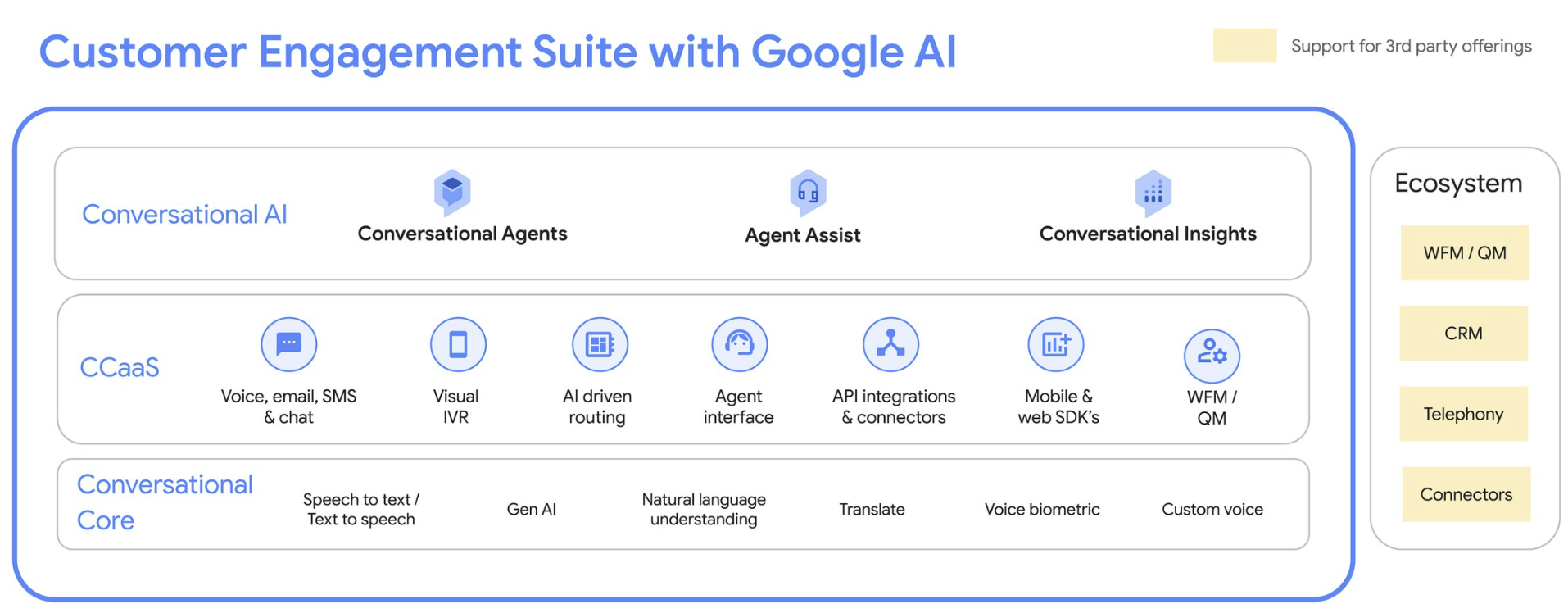

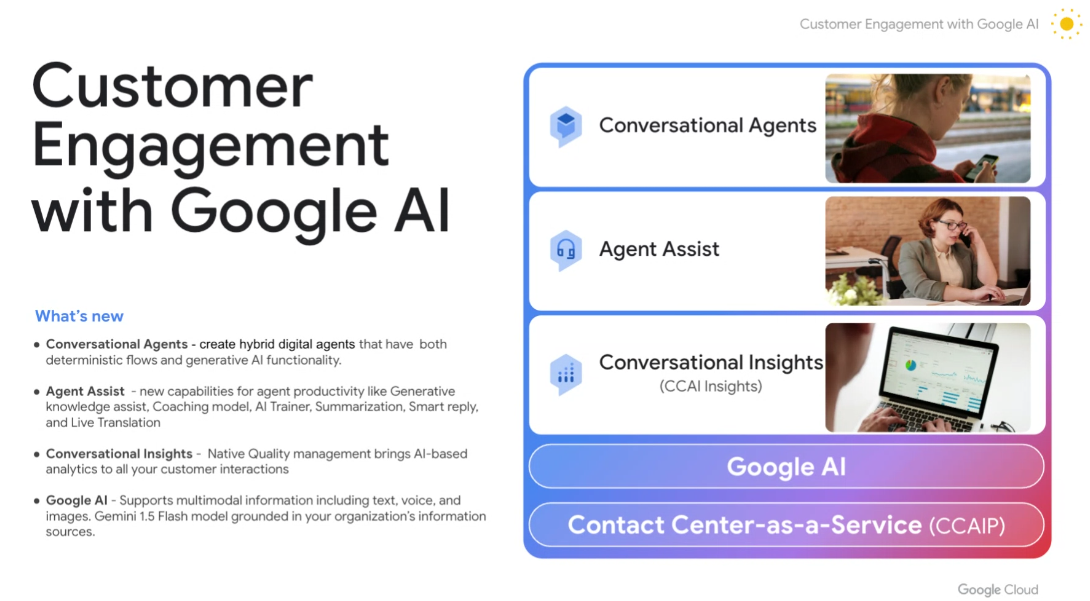

Customer Engagement with Google AI

- Gemini 1.5 Flash for Customer Engagement with Google AI.

- Deterministic and Generative Conversational Agents in preview.

- Agent Assist Coaching Model in preview.

- Agent Assist Summarization in preview.

- Agent Assist Smart Reply.

- Agent Assist Translation in preview.

What's an agent?

With the term agent being used extensively, Erwan Menard, Director of Product Management at Google AI, was asked in a briefing how the company segments agentic AI.

The question is a good one considering that in just the last two weeks, Salesforce, Workday, Microsoft, HubSpot, ServiceNow and Oracle have all talked up AI agents, likely overloading CxOs who have spent the last 18 months trying to move genAI from pilot to production. Other genAI front-runners, such as Rocket, Intuit and JPMorgan Chase, have mostly taken the DIY approach and are now evolving their strategies.

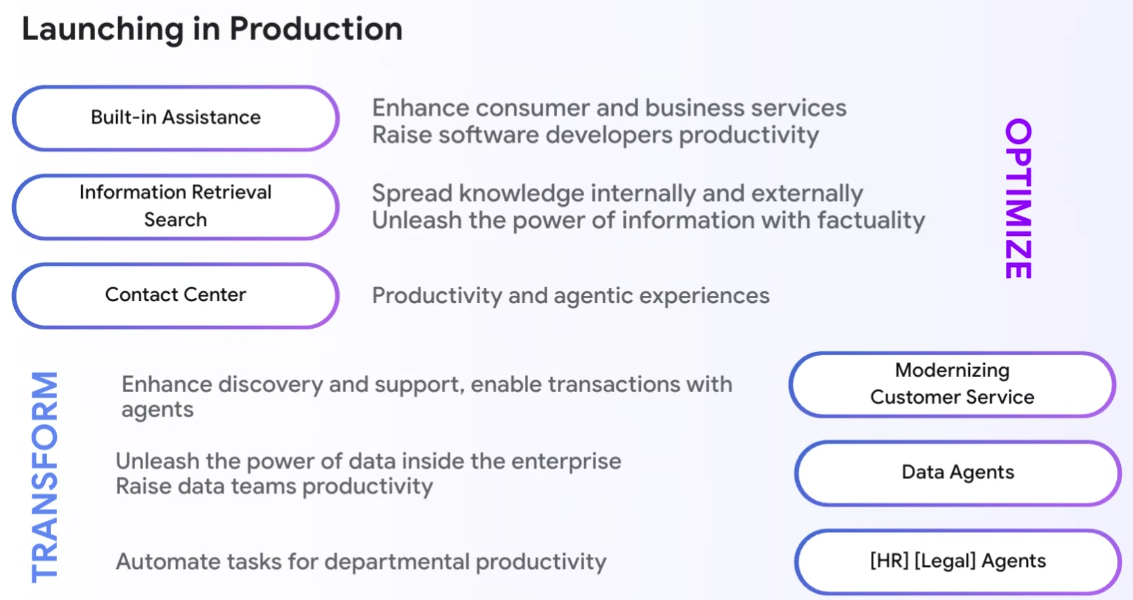

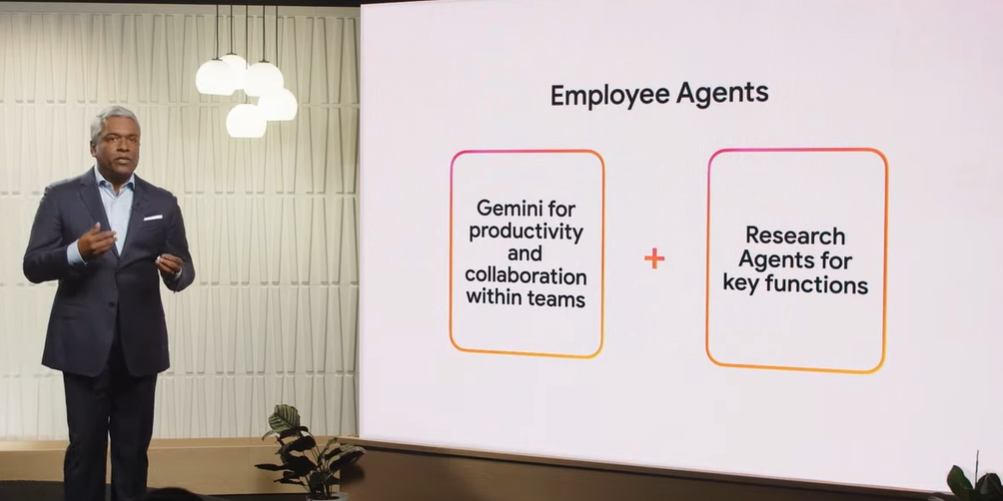

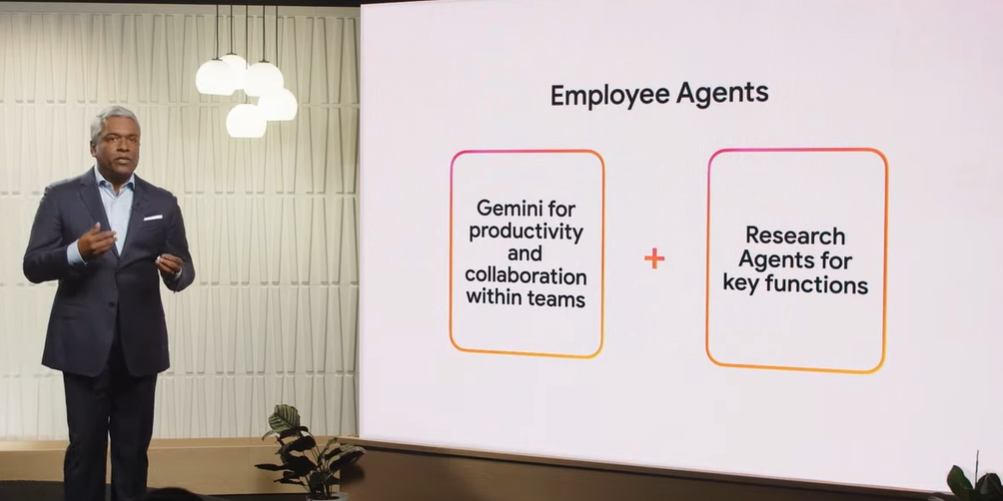

Menard said there are three flavors of agents across the Google Cloud portfolio. First, there are pre-built agents embedded into experiences in Google Workspace. Then there are Google pre-built agents designed for customer engagement platforms. And then there are agents being built by enterprises using Google Cloud.

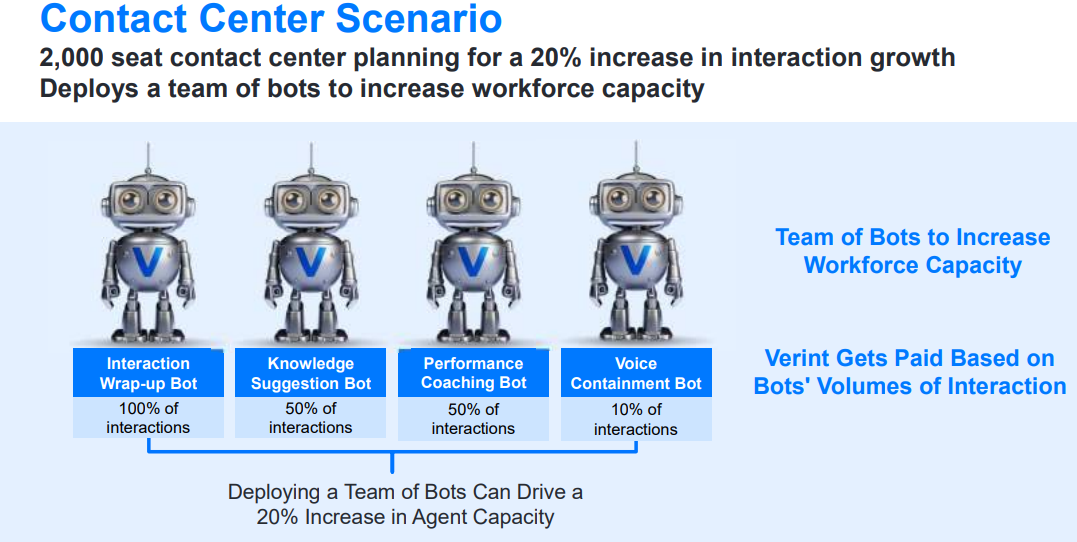

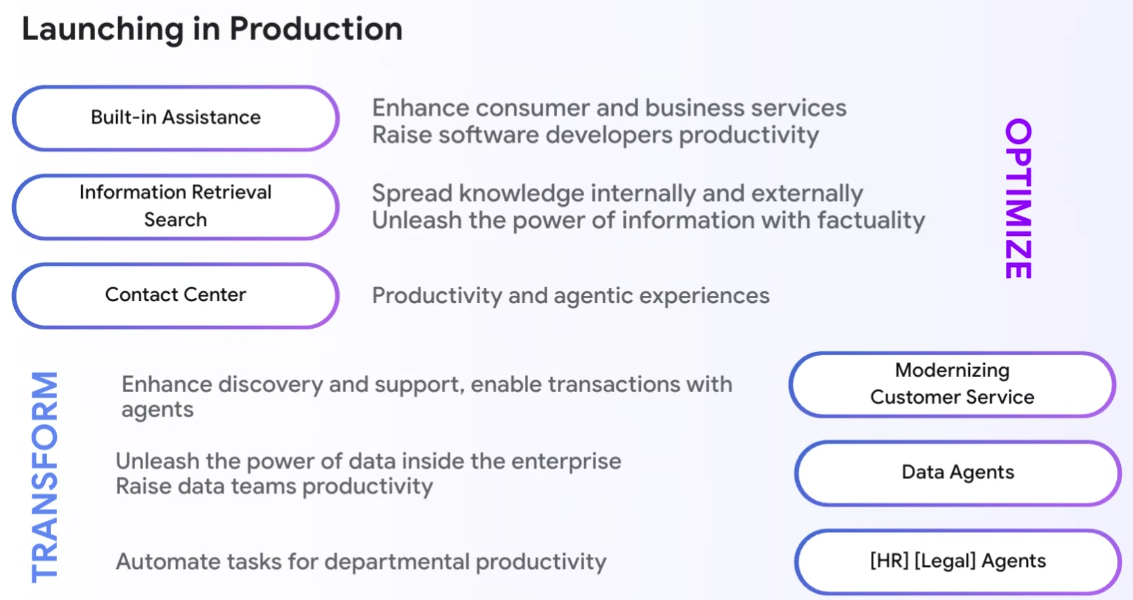

The Gemini at Work event will feature a hefty dose of companies that are building agents on Google Cloud. The genAI use cases going to production the fastest are ones that are built into existing applications and those aimed at contact centers, HR and other environments, said Menard.

Menard said:

"We think of AI agents as systems that use an AI technique to pursue goals and complete tasks on behalf of users. An agent basically understands your intent and turns it into action. That's how we think of the word agent."

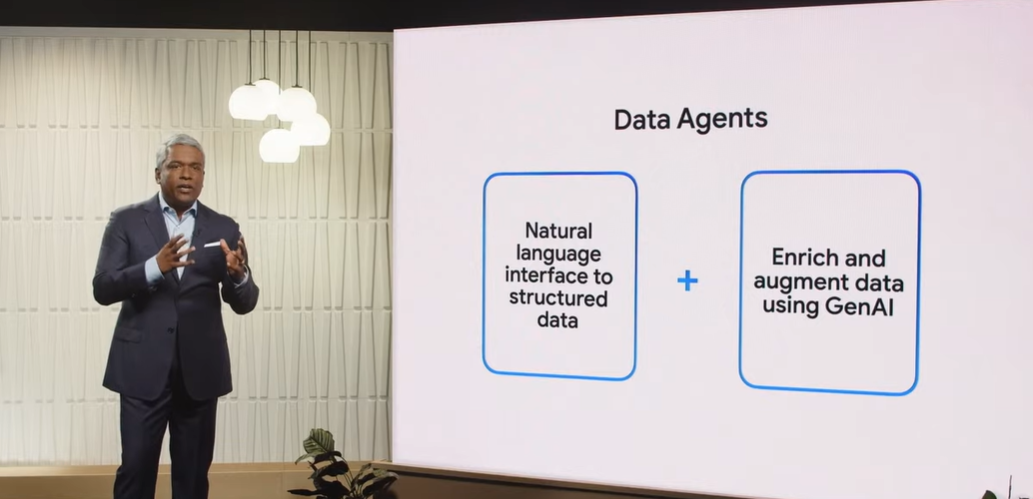

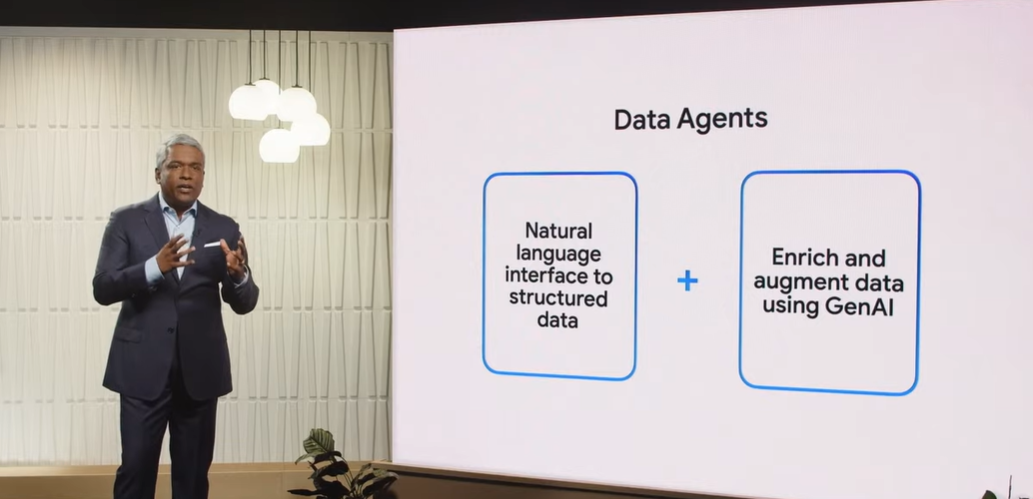

Google Cloud sees agentic AI revolving around two dimensions: complexity and agency. Complexity refers to the workflows an agent handles and the number of systems it needs to interact with.

"As we try to get more business impact, let's say with a task-specific agent that would execute a task on your behalf, we're going to go into workflows that we want to automate, and so we need to interact with many more systems," said Menard.

Agency will also be critical to agentic AI deployments. Agency refers to "the ability of the agent to learn, to make decisions, to proactively take action to achieve a desired goal with a minimum of human supervision," said Menard.

Based on what Google Cloud has seen with enterprise customers, Menard said enterprises will likely follow this progression:

- Task-specific agents will become an early focus.

- Then there will be assistants that can help a human accomplish a task faster.

- Multi-agent systems will then emerge to take a complex task and address it end to end.

Menard said:

"That's kind of the paradigm we're operating in, in terms of the agents that are being offered and going to production. Clearly, there are important decision factors for customers around the surface where the agent will be presented. Do I build a new surface and attract users, or do I meet the users where they are? Second is the skill set the customers have. Do you invest in building an agent or go with a pre-built agent, or a total DIY approach where you handpick the orchestration framework and all the different elements?"

Timeline to production will also be critical for enterprises, added Menard. "You could very much start with a pre-built experience to confirm the need and the benefit and then decide to decompose it with the DIY approach, to iterate further on your agent," he said, noting that Google Cloud's stack supports every level of and approach to AI agents. "All of these are not conflicting but different expectations from customers."

Pilot to production takeaways

Speaking after the keynote, Kurian outlined a few takeaways based on what Google Cloud is seeing from customers as they move from pilots to production. Here are the big themes:

Timelines. "Cycle time isn't driven by models," said Kurian. "This is an actual software project and timelines depend on the systems the models interact with."

Kurian said a use case such as using Gemini to create content for ad campaigns may take anywhere from 8 to 12 weeks. Enhancing search on a commerce site with conversational AI could take 4 to 8 weeks. If an enterprise is combining search and AI conversations in a contact center, a project could take up to 6 months, depending on the number of modern systems, legacy IT and APIs already in place. Projects where a company has to tap into an old PBX could take longer.

"A lot of it depends on whether you have to change the organization," explained Kurian. "It's not a technology problem alone. If a project doesn't require changes to an organization or workflow then it's faster."

Kurian said Google Cloud has a maturity model that it has shared with systems integrators (SIs) so they "don't go in and here's a big bang project." "There's a sequence to deliver value and we're often dealing with time windows," he said.

Change management and workflows. Kurian's comments on timelines highlight how important change management is with generative AI. Regulation, workflows, processes, technical debt and culture are all factors to consider.

Kurian said enterprises need to keep change management and processes in mind as they deploy AI agents. These processes are critical, but now that Gemini models on Google Cloud have memory, they can wait for an asset or a step before taking action.

"It's not a big bang. It's deliver the technology and methodology to deliver an AI solution," said Kurian.

Business value. Kurian also noted that genAI projects need to deliver value, whether it's efficiency or revenue growth. The many Google Cloud customers featured at the Gemini at Work event have all seen business value. The metrics will differ by company, but the blueprint is the same: create value quickly and then expand from there.