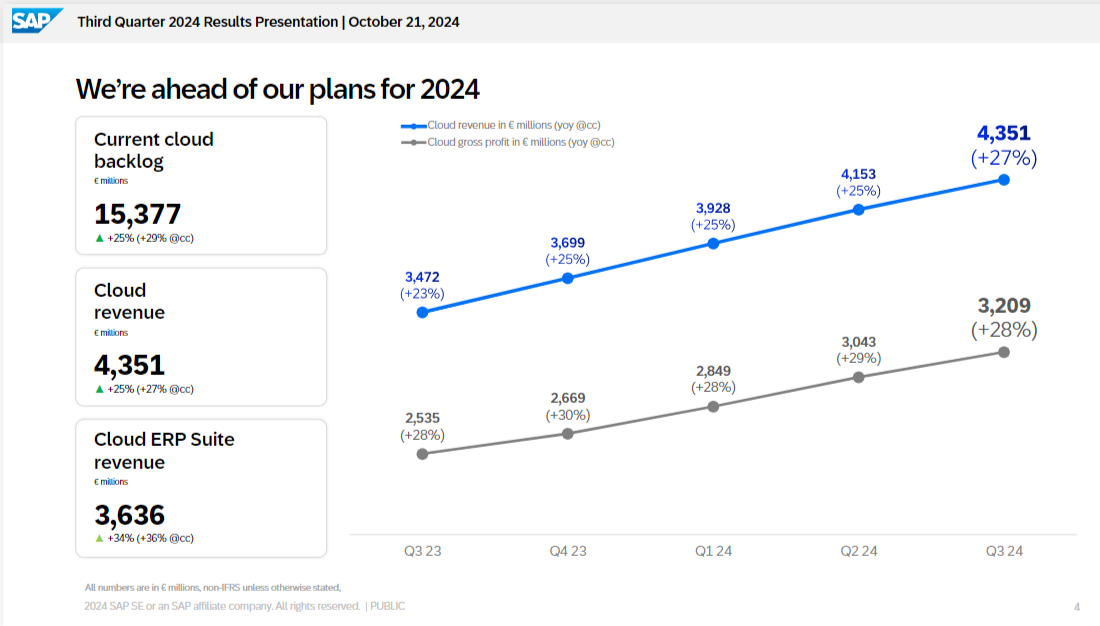

SAP ups 2024 outlook as Q3 better than expected

SAP raised its cloud and software outlook for fiscal 2024 as the company's backlog continued to surge.

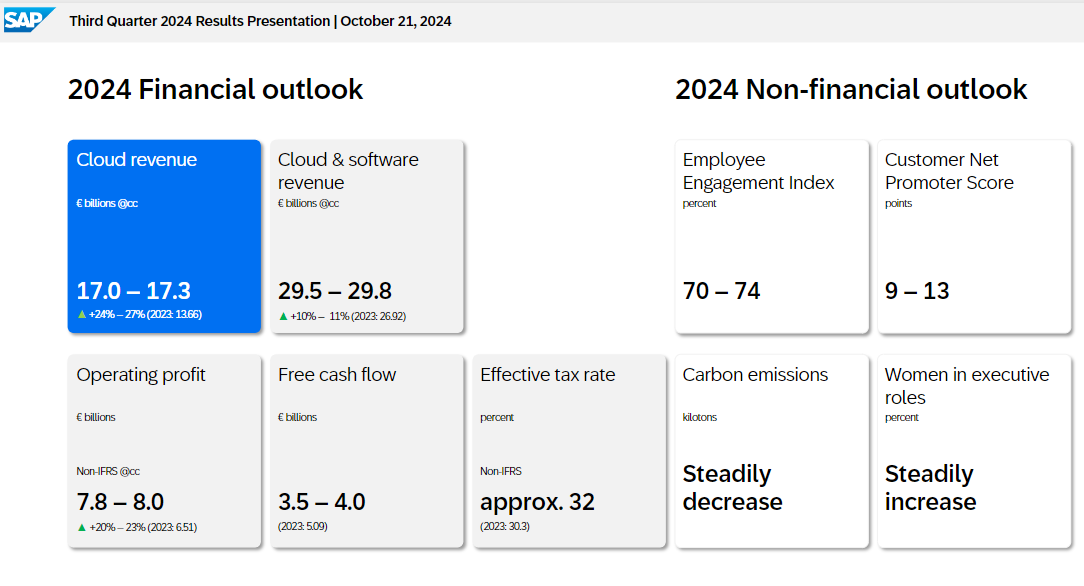

The company projected 2024 cloud and software revenue of €29.5 billion to €29.8 billion, up from the €29 billion to €29.5 billion previously projected. The company also said its free cash flow will be €3.5 billion to €4 billion.

SAP held its cloud 2024 revenue projection steady at €17.0 billion to €17.3 billion.

In the third quarter, SAP reported earnings of €1.44 billion, or €1.25 a share, on revenue of €8.47 billion, up 9% from a year ago. Cloud revenue was €4.35 billion, up 25% from a year ago. Cloud ERP revenue in the third quarter was €3.64 billion, up 34% from a year ago.

Wall Street was expecting SAP to report third quarter earnings of €1.21 a share on revenue of €8.45 billion.

- DSAG: SAP's innovation focus on cloud, discriminates against on-premise users

- SAP gives ABAP code a genAI boost, adds data lake capabilities to Datasphere

- SAP's Joule everywhere plan: 'We are not developing AI just for the sake of AI'

Christian Klein, CEO of SAP, said the third quarter showed strength for cloud ERP and "a significant part of our cloud deals in Q3 included AI use cases."

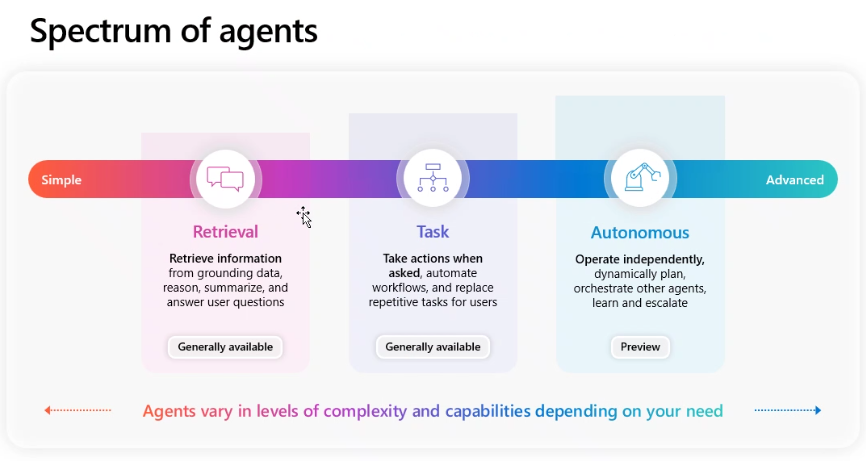

Speaking on SAP's earnings conference call, Klein talked up Joule and said it has the best chance to be a premier AI agent.

"While many in the software industry talk about AI agents these days, I can assure you, Joule will be the champion of them all. So far, we have added over 500 skills to Joule and we are well on track to cover 80% of the most frequent business and analytical transactions by the end of this year. And in Q3 alone, several hundred customers licensed Joule."

Klein said that Joule's power will be the ability to perform tasks across finance, HR, sales, supply chain and other functions. "Joule will soon be able to orchestrate several AI agents to carry out complex processes end-to-end," he said.

SAP CFO Dominik Asam said the company is seeing efficiency gains from its restructuring in 2024.

By the numbers:

- SAP's cloud backlog was up 25% in the third quarter compared to a year ago and the acquisition of WalkMe contributed 1% to that growth rate.

- Software licenses revenue in the third quarter fell 15% from a year ago.

- Restructuring expenses for the first nine months of 2024 were €2.8 billion.

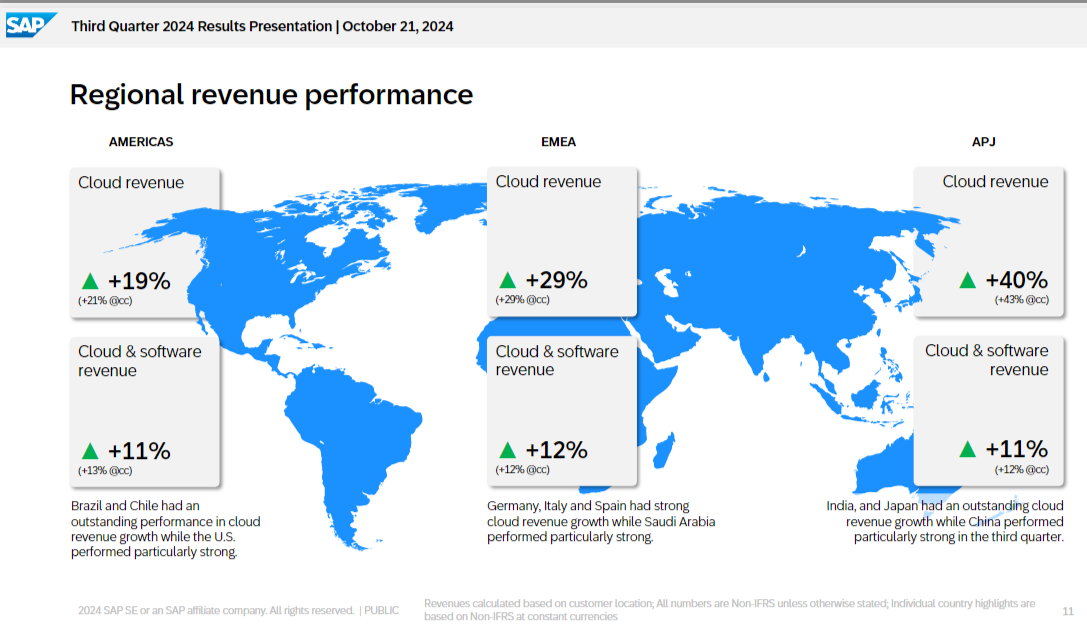

- By region, SAP said it saw cloud revenue strength in Asia Pacific and Japan and EMEA. Americas growth was "robust."

Key points from SAP's earnings conference call include:

- Klein said about 30% of SAP's cloud orders included AI use cases.

- SAP cited numerous RISE with SAP wins, including grocers Schwarz Group and Sainsbury's as well as Mercado Libre and Nvidia, which implemented RISE with SAP in 6 months.

- "Our investments in Business AI are also starting to show positive results, creating new opportunities and deepening customer engagement. Now with the added capabilities of WalkMe, we are able to further improve workflow execution and user experience," said Asam.

- Klein said that SAP's move to centralize its cloud operations is paying dividends. "We are rolling out the cloud version of HANA, much more scale, better TCO, better resiliency. And of course, we're also working with the hyperscalers. I mean, we have with RISE and on the cloud infrastructure, we have really some really strong measures we are driving to further optimize not only performance, but again, also the scalability of HANA Cloud running on the hyperscaler infrastructure," he said.

Constellation Research's take

Constellation Research analyst Holger Mueller said:

"SAP had a good quarter, as expected. AI is the break Christian Klein and team as AI needs to live in the cloud, and that forces before skeptical CxOs to bite the bullet and move to S/4 HANA. SAP keeps struggling with the value for SAP Grow and Rise – as only 1/3 of cloud revenue comes from these initiatives, but this does not matter anymore as AI is the pull. With favorable announcements from the recent SAP TechED conferencebto help customers with the ABAP code assets as well as the announcement of an SAP DataLake, SAP is helping its existing customers more and better than before. All of this leads to a key milestone for the vendor: Cloud revenue for the first time is over 50% of SAP revenue. What is remarkable is that SAP is more profitable. Traditionally, the (now shrinking) perpetual license revenue is more profitable than cloud revenue (where SaaS vendors pay IaaS vendors). But with SAP charging more customers directly for their IaaS costs (and then paying the AWS, Google and Microsoft etc.), it is making margin from the pass through."

- SAP Q2 cloud ERP revenue up 33%, sees restructuring hitting 9,000 to 10,000 jobs

- Amazon Bedrock integrated into SAP AI Core, SAP to use AWS chips

- SAP acquires WalkMe for $1.5 billion

- Boomi aims to ease SAP Datasphere migrations
