Airbnb: A look at its AI strategy

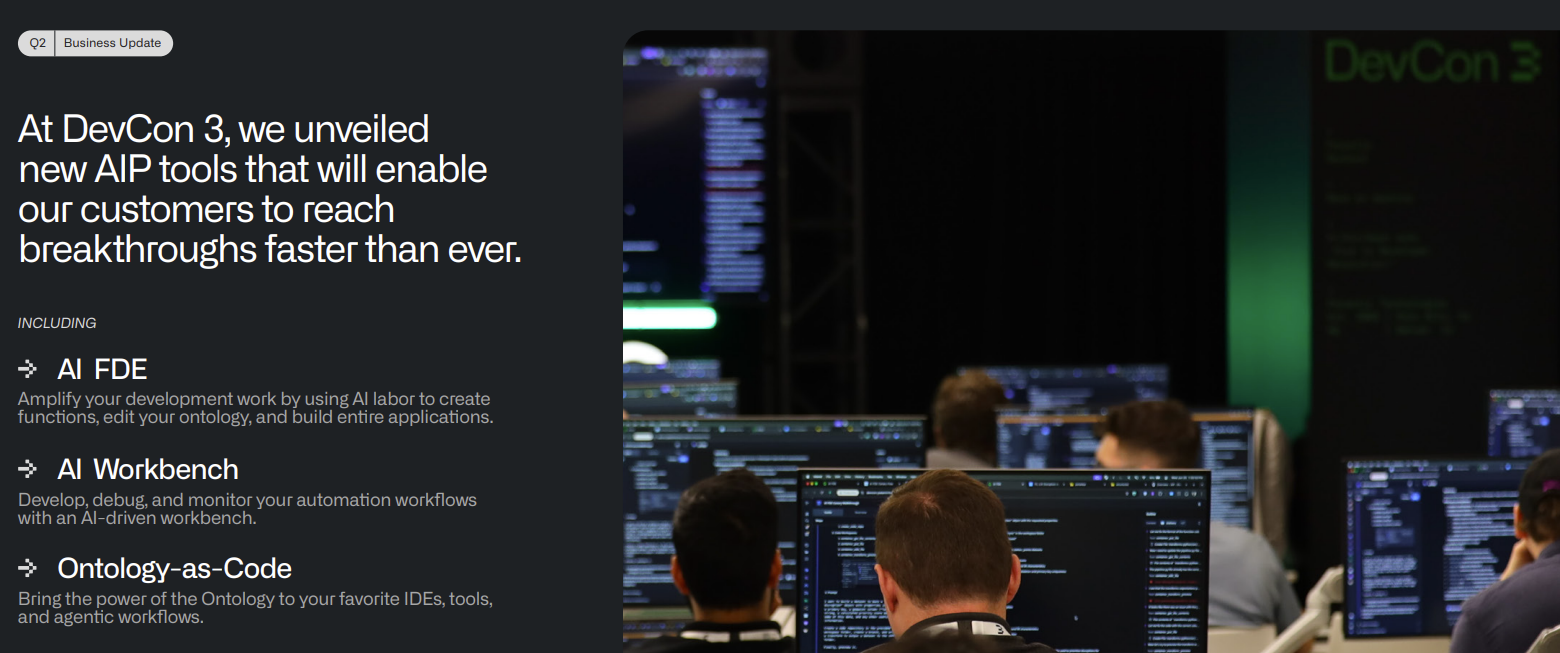

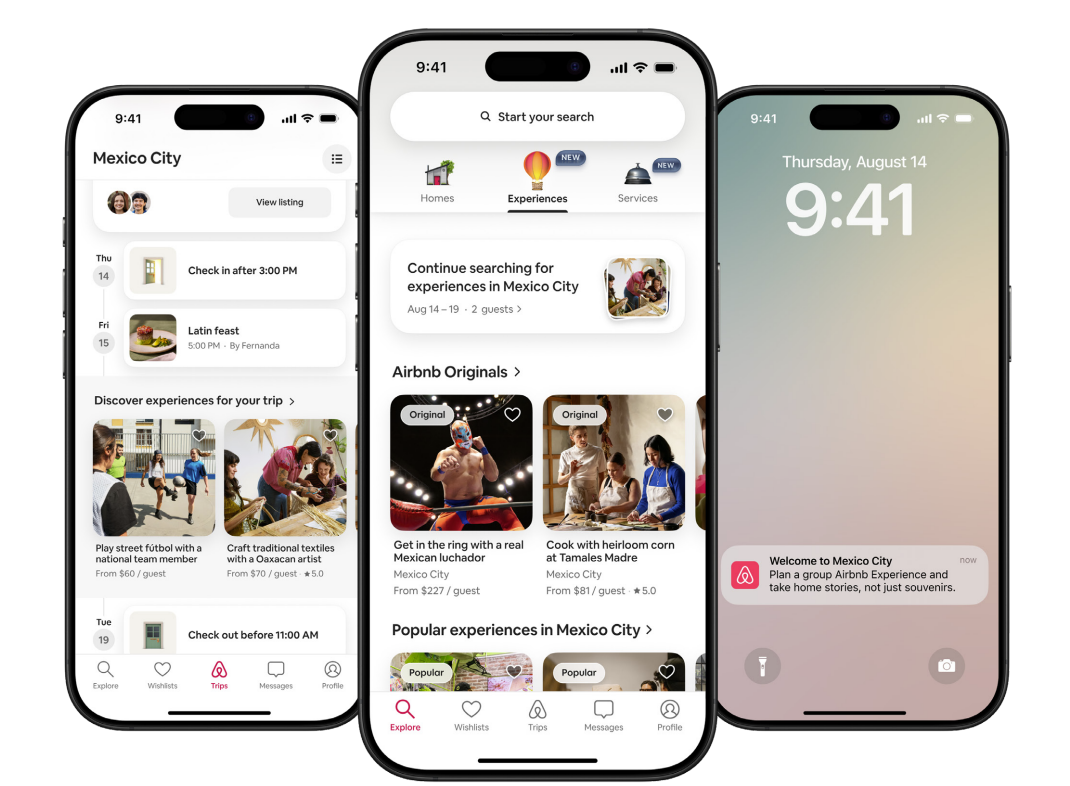

Airbnb has launched an AI agent built on 13 different models for customer service in its app and plans to add more AI tools in the quarters to come. The goal: Transform the Airbnb app into one that is AI native.

CEO Brian Chesky said on Airbnb's second quarter conference call that the company's move into complementary services paid off in the second quarter with better-than-expected results. Airbnb has retooled its tech stack and is now rolling out improvements to its app and services at a faster pace.

"We are massively ramping up development of product development pace at Airbnb. We do these typically biannual releases, but we are now iterating very, very quick even between these releases," said Chesky.

Airbnb is navigating multiple shifts. The company is expanding into complementary markets, including home market services and experiences; shifting its marketing to focus more on social than on search and TV; and optimizing everything from pricing to customer service as it expands share globally.

In the second quarter, Airbnb delivered strong metrics as it expanded its AI-powered customer service agent to 100% of US users and scaled new offerings. The company said it saw travel demand accelerate from April to July despite an uncertain economy. Airbnb reported second quarter net income of $642 million on revenue of $3.1 billion, up 13% from a year ago.

As for the outlook, Airbnb said it expects revenue growth of 8% to 10% in the third quarter with stable nights and seats booked compared to the second quarter. The company did say it expects lower margins due to investments in new markets including Airbnb Services and Airbnb Experiences.

In addition, Airbnb redesigned its app to bring bookings across services into one place. The company has optimized the app continually since the May launch. That product cadence will be necessary as Airbnb carries out its AI strategy. Chesky outlined Airbnb's approach to AI and agents. Here's a look:

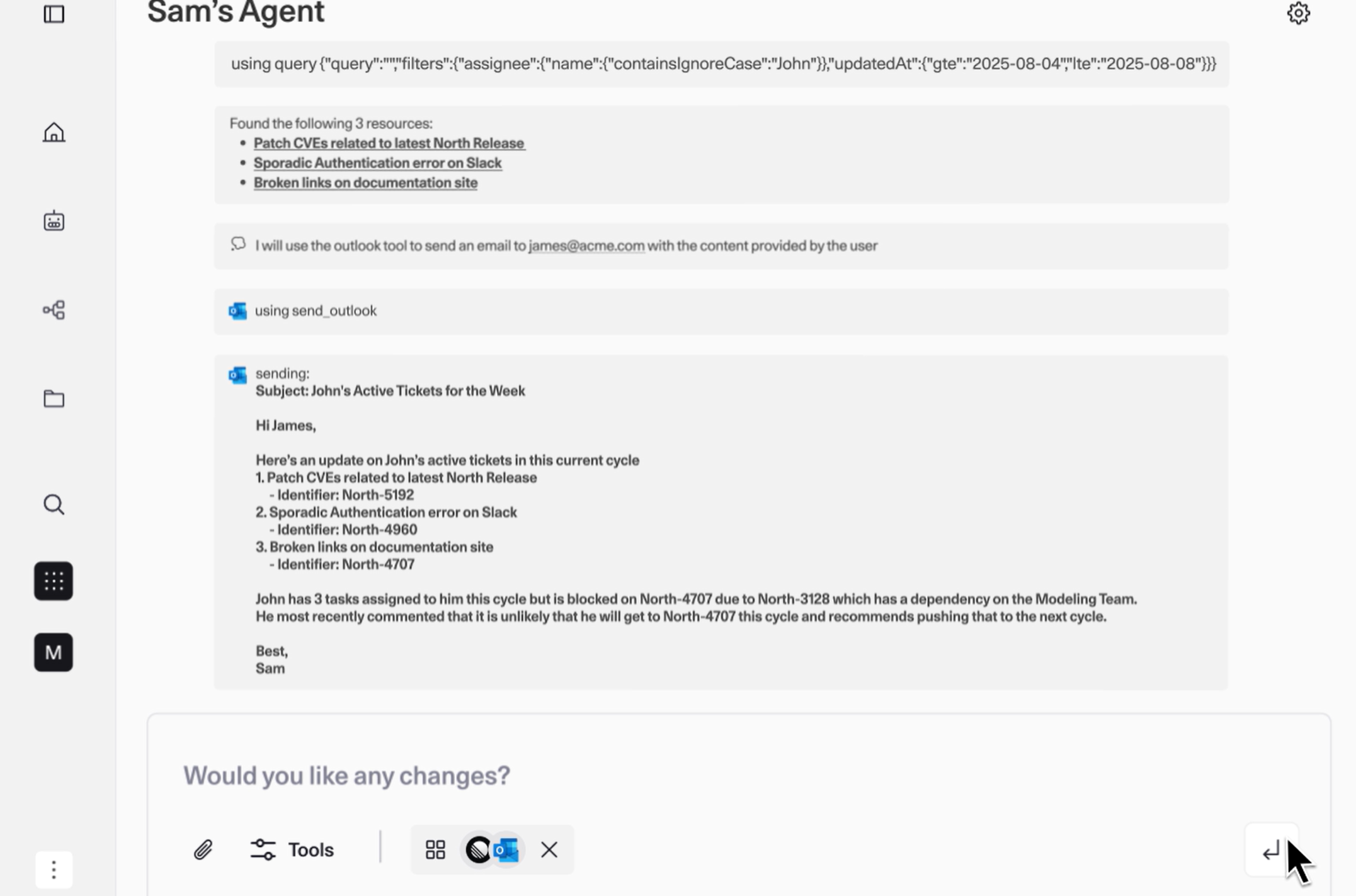

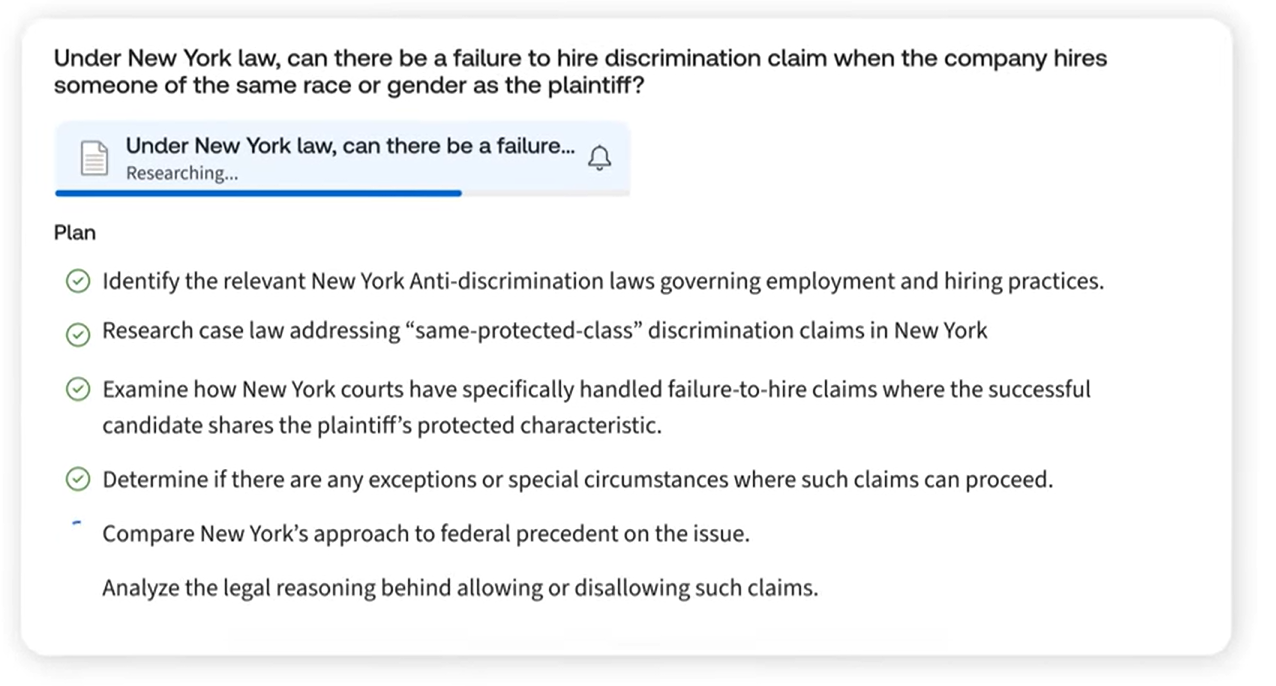

Start with the hardest problem. Chesky said most AI efforts in travel have revolved around trip planning and inspiration. Airbnb has gone with customer service as an AI use case because it is "the hardest problem because the stakes are high, you need to answer this quickly and the risk of hallucination is very high."

"You cannot have a high hallucination rate. And when people are locked out, they want to cancel reservation, they need help, you need to be accurate. And so what we've done is build a custom agent built on 13 different models that have been tuned off of tens of thousands of conversations. We rolled this out throughout the United States in English. And this has reduced 15% of people needing to contact a human agent when they interact instead with this AI agent," said Chesky.

The plan now is to bring that customer service agent to more languages, he added.

Increase personalization and context. Chesky said the customer service AI agent will become "more personalized and more agentic" throughout the next year. "The AI agent will not only tell you how to cancel your reservation, but it will also know which reservation you want to cancel, cancel it for you and it can start to search and help you plan and book your next trip."

Expand AI from customer service into travel search and planning. Chesky said the plan is for Airbnb to leverage AI throughout its use cases and app. Airbnb is looking at multiple expansion areas including hotel bookings "especially boutiques in bed and breakfast" and independents in Europe.

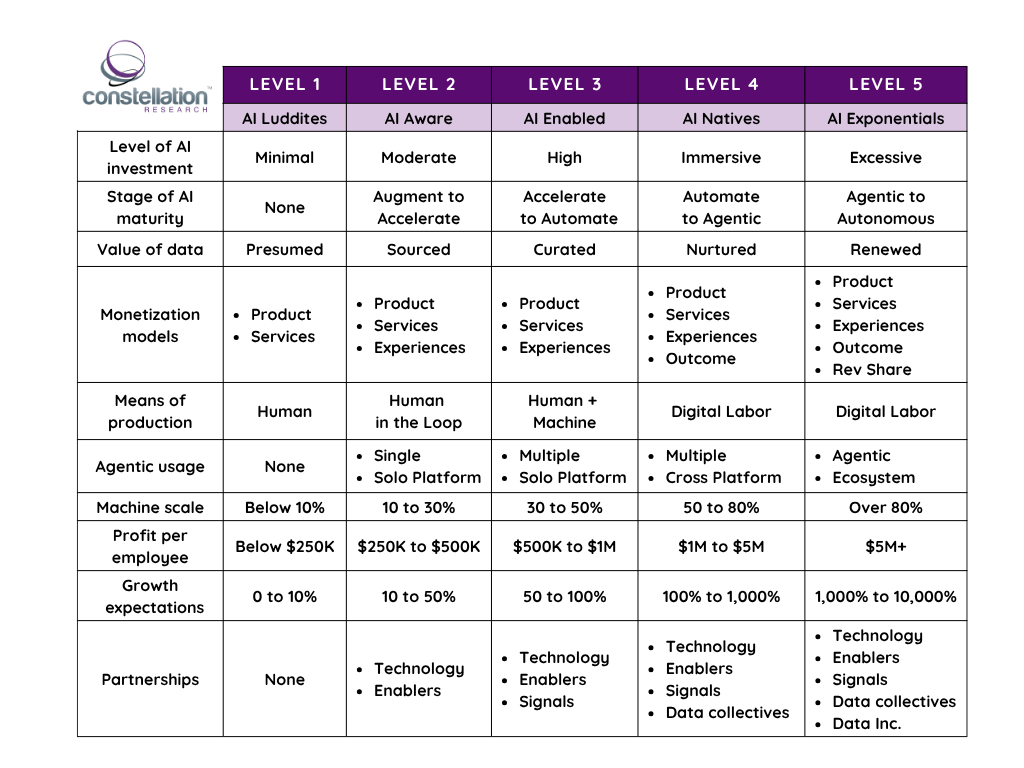

Become AI native. Chesky said Airbnb will become an AI-native app. He said that today most of the top 50 applications on Apple's App Store aren't AI native. ChatGPT is the top app, joined by a few other AI natives, but for the most part the top players are not AI native.

"You've got basically AI apps and kind of non-AI native apps. And Airbnb would be a non-AI native application.

"Over the next couple of years, I believe that every one of those top 50 slots will be AI apps--either start-ups or incumbents that transform into being AI native apps. And I think at Airbnb, we are going through that process right now of transitioning from a pre-generative AI app to an AI native app. We're starting with customer service. We're bringing it into travel planning. So it's really setting the stage."

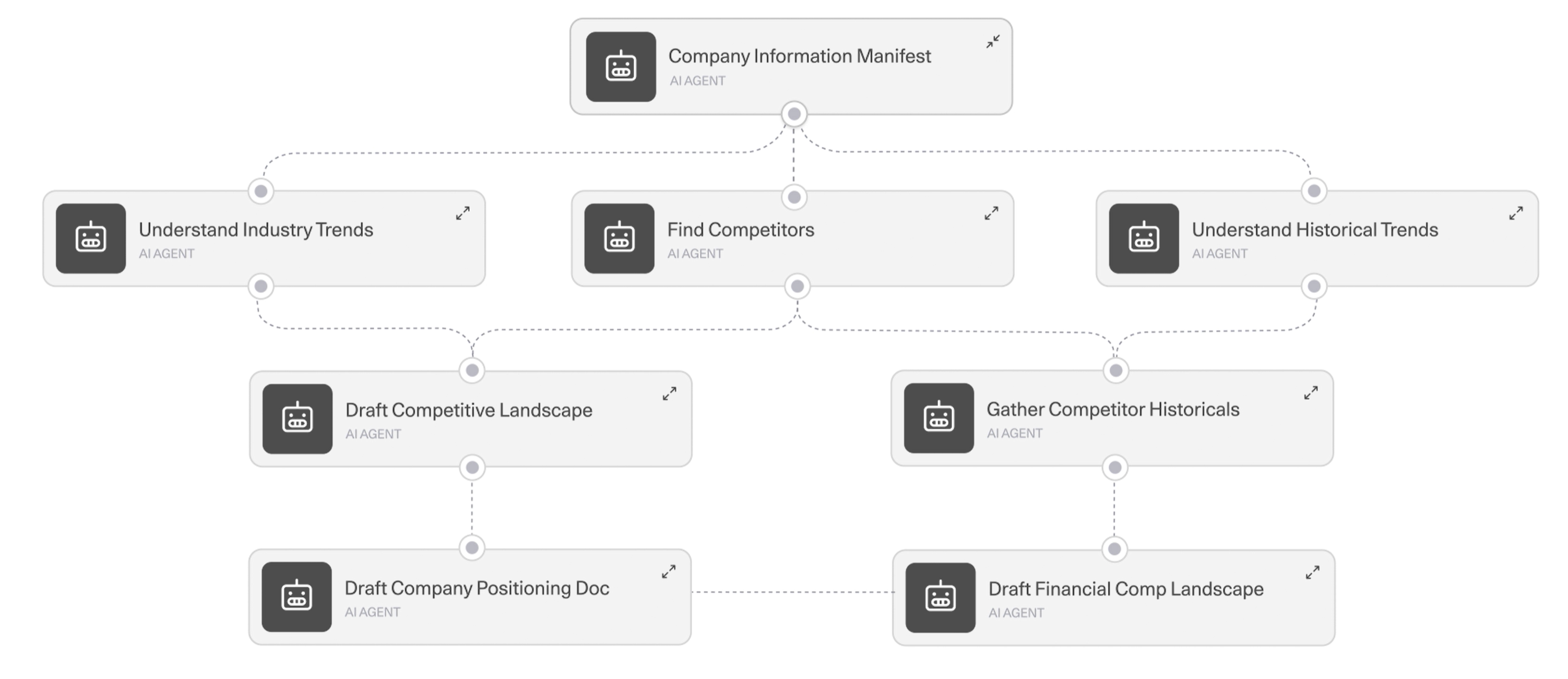

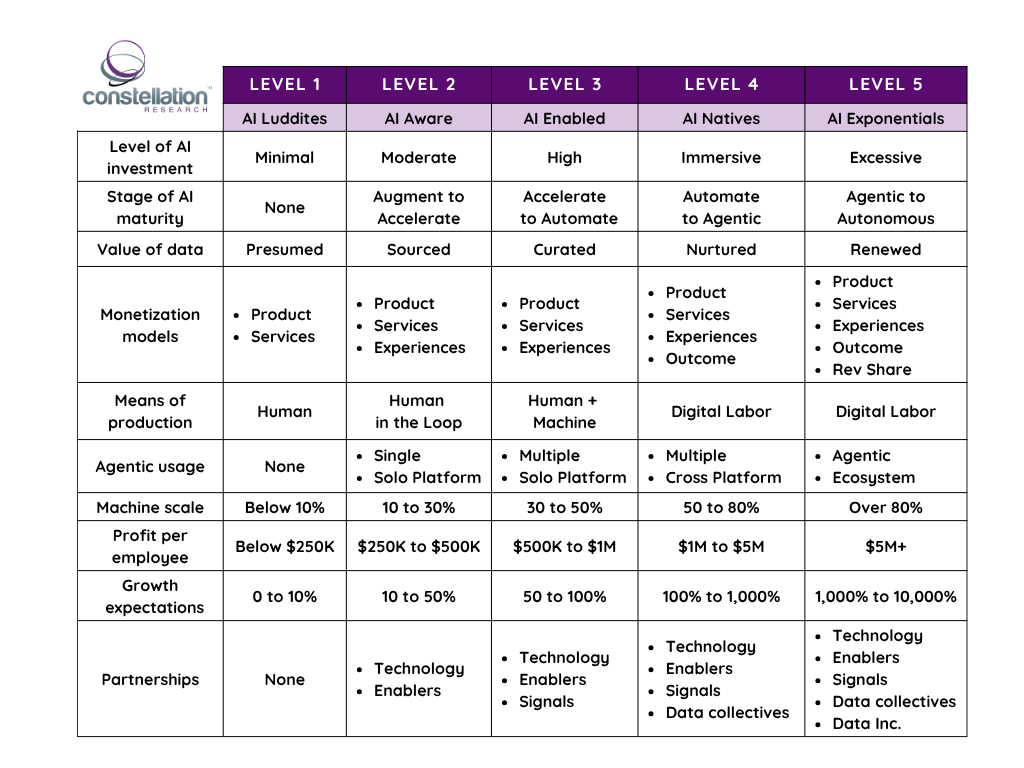

Here's a look at the characteristics of AI natives per the Constellation Research grid.

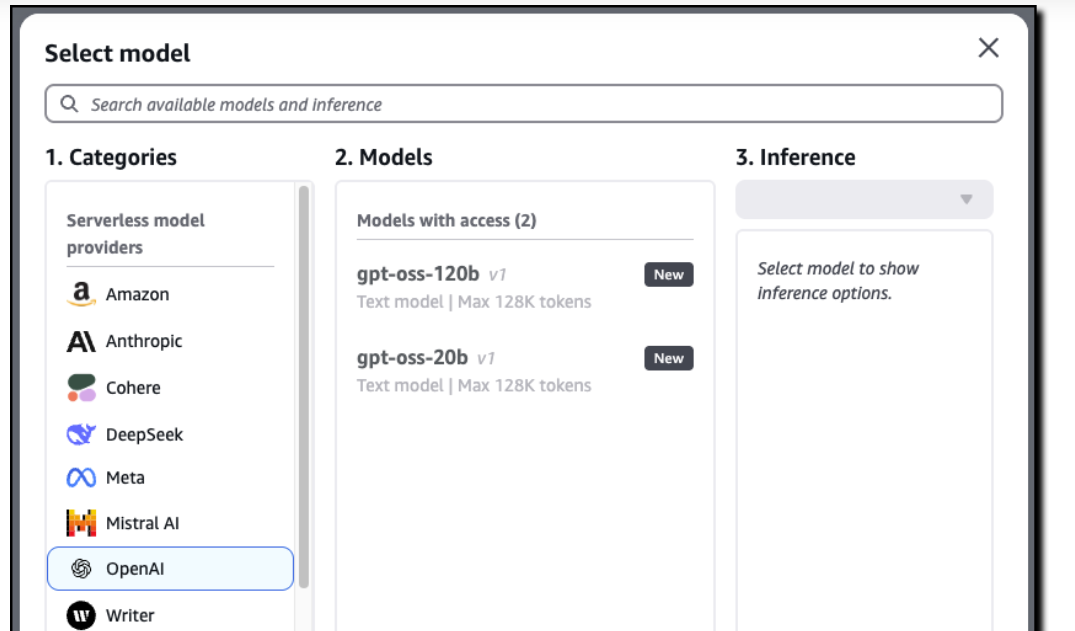

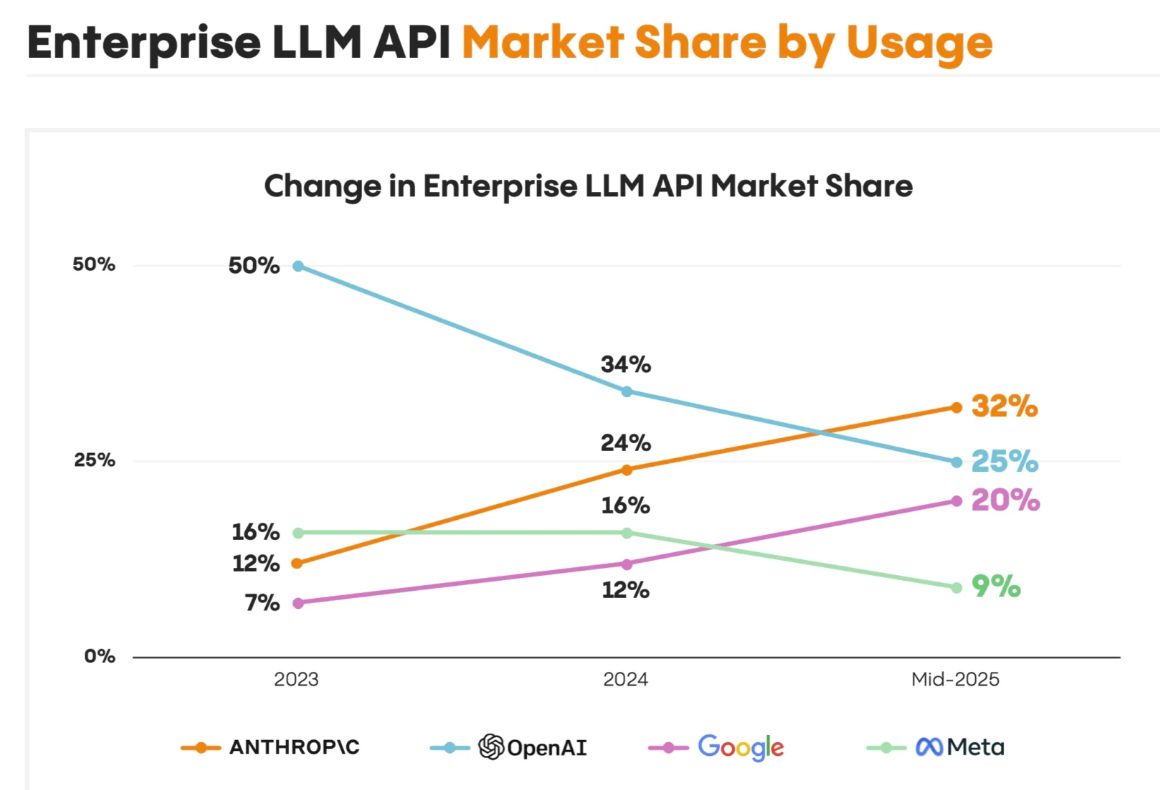

Stay focused and strategic. Chesky said it's premature to think of AI chatbots and agents as the Google replacement. The concept of one AI tool to rule them all isn't proven. Chesky's bet is that there will be specialized AI models for specific categories.

Chesky said that ChatGPT is a great product, but the approach isn't exclusive to OpenAI. He said:

"Airbnb can also use the API, and there are other models that we can use. In the coming years, you're going to have a situation where these large AI models can take more and more, and more things will start there, but people won't often go to one chatbot.

"You're going to also have start-ups that are going to be custom-built to do a specific application, and you're going to have incumbents that make a shift to AI. It's not enough to just have the best model. You have to be able to tune the model and build a custom interface for the right application.

"The key thing is going to be for us to lead and become the first place for people to book travel on Airbnb. As far as whether or not we integrate with AI agents, I think that's something that we're certainly open to. Remember that to book an Airbnb, you need to have an account, you need to have a verified identity. Almost everyone who books uses our messaging platform. So I don't think that we're going to be the kind of thing where you just have an agent or operator book your Airbnb for you because we're not a commodity. But I do think it could potentially be a very interesting lead generation for Airbnb."