SAP retools for generative AI, cuts 8,000 jobs, sets 2024, 2025 ambition

SAP plans to restructure and cut 8,000 positions as it focuses on artificial intelligence and uses the technology to become more efficient. SAP said it expects to add new roles and exit 2024 with headcount at roughly current levels.

The enterprise software giant ended 2023 with nearly 108,000 full-time employees.

In a statement, SAP said it plans to retool to focus on growth markets and business AI. SAP said the restructuring will be handled through "voluntary leave programs and internal re-skilling measures." Restructuring charges will total about €2 billion, most of it recognized in the first half of 2024, and net cost benefits will be minimal because SAP plans to reinvest the savings.

On a conference call with analysts, SAP CEO Christian Klein said:

"The tech industry is moving fast. We need to keep leading the way as a top enterprise application company and further advance to become the number one Business AI company as well. This is why out of a very strong position we are now accelerating the development of the company with the clear goal to grasp the opportunities of GenAI."

"We are stepping up our investment in Business AI to drive automation as we see significant growth opportunities lying ahead and want to improve our operating leverage," said SAP CFO Dominik Asam.

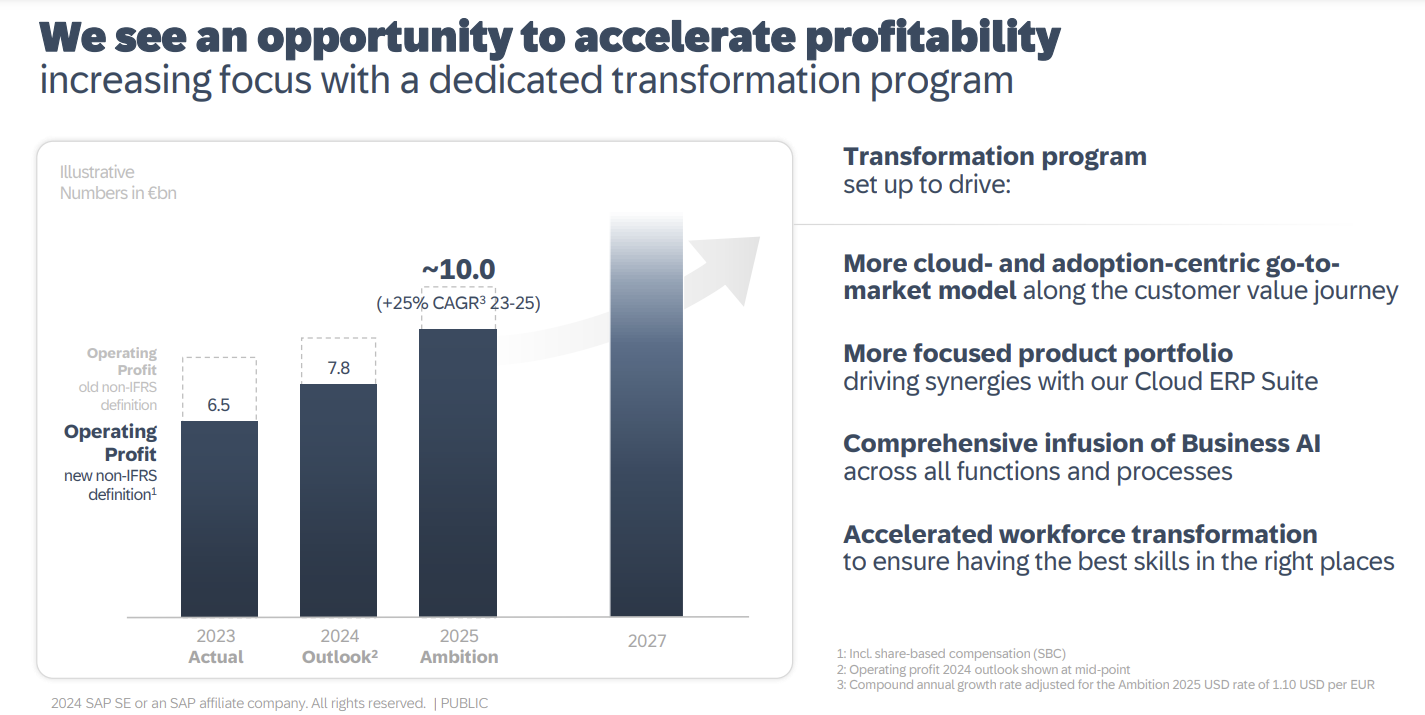

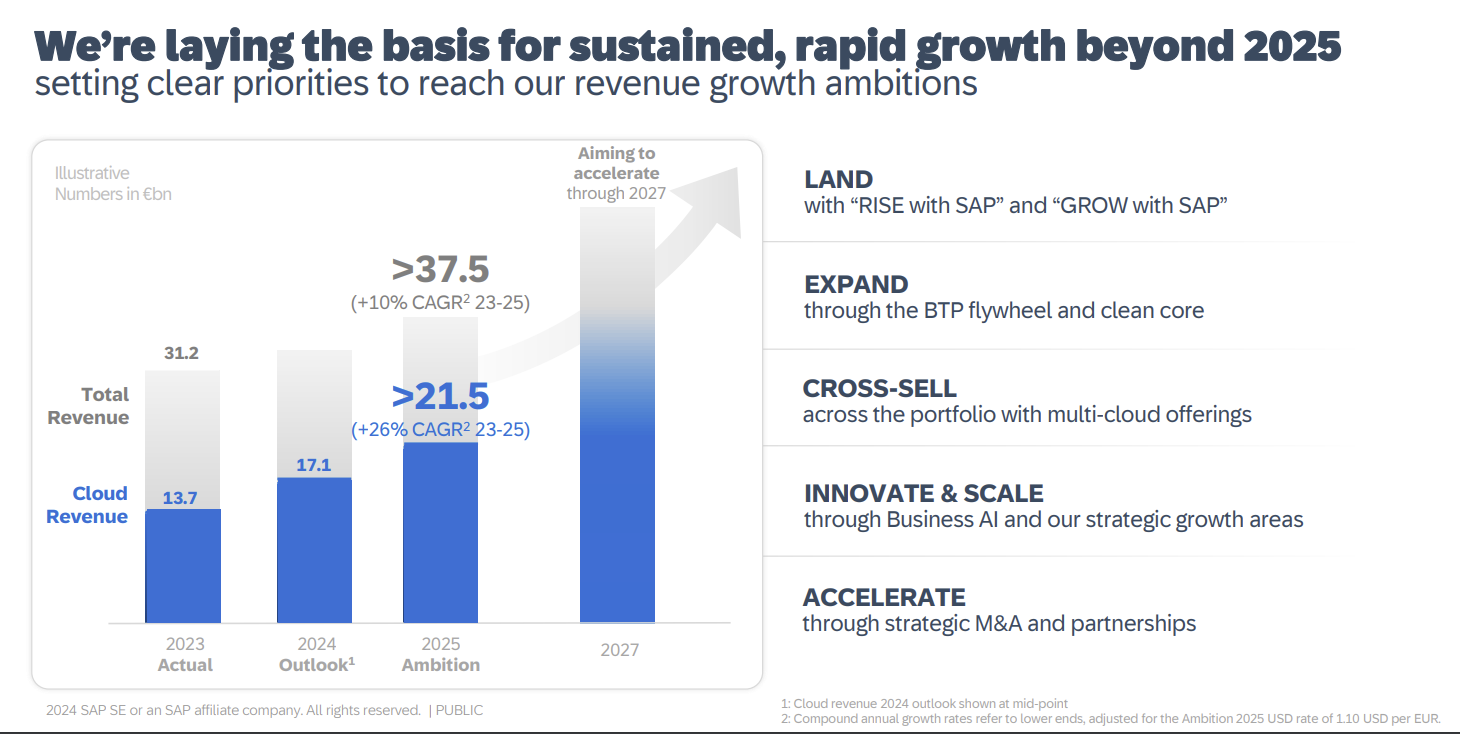

For 2024, SAP projected cloud revenue of €17 billion to €17.3 billion, up 24% to 27% from €13.66 billion in 2023. The company said cloud and software revenue will be €29 billion to €29.5 billion, with non-IFRS operating profit of €7.6 billion to €7.9 billion.

SAP also outlined its 2025 outlook, which calls for cloud revenue of more than €21.5 billion, total revenue of more than €37.5 billion and non-IFRS operating profit of €10 billion.

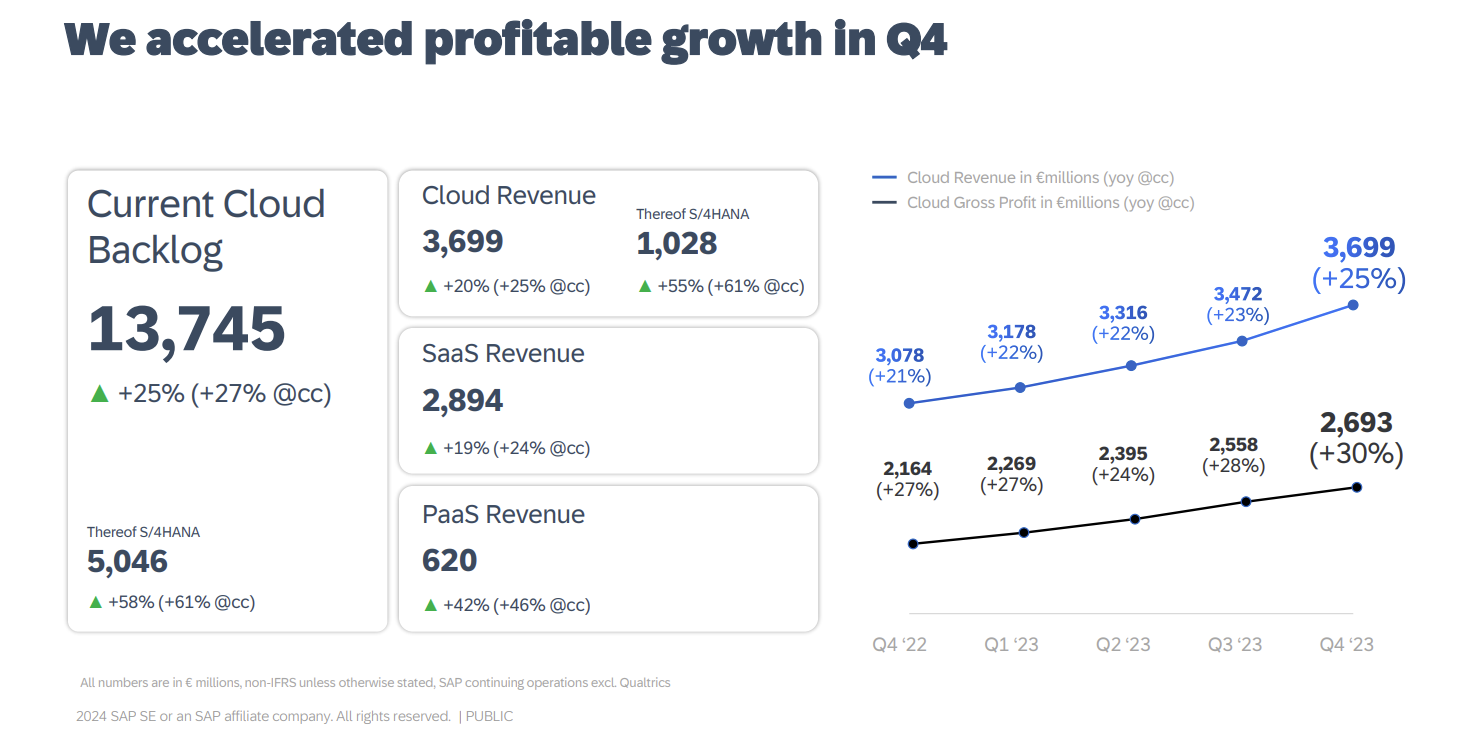

Alongside the restructuring plan and outlook, SAP reported fourth-quarter and fiscal 2023 results. The company is retooling to drive cloud sales and move customers to S/4HANA, and customers have had a mixed reaction: SAP's 2023 Net Promoter Score (NPS) was 9. According to Simplesat, the average NPS is 30 for SaaS businesses and 44 for enterprise software.

SAP said fourth-quarter revenue was €8.47 billion, with cloud revenue of €3.7 billion. SAP S/4HANA cloud revenue was €1.03 billion. Earnings were €1.01 a share, or €1.41 a share on an adjusted basis.

For fiscal 2023, SAP reported revenue of €31.26 billion with a profit of €5.93 billion.

Klein said SAP can differentiate itself with its AI platform. He said:

"We are developing strong organic product, a strong organic AI platform so that our copilot tool can speak not only finance but can solve some of the hardest problems our customers [are] facing across the company. We are going to infuse it right into the business processes. When you look at what we already can do in particular [in] sales and optimizing inventory, it can take out a ton of CapEx and OpEx of the P&L or balance sheet of our customers. And then when you listen to our partners like Microsoft or NVIDIA, where we just closed another partnership, I mean they are keen actually now to combine their copilot with our copilot to extend our AI platform. When you have content from over 30,000 customers and access to the most mission-critical data, the algorithms become smarter every day. We can actually solve some problems."

Other takeaways to note:

- SAP said Vodafone is betting on RISE with SAP and is using Signavio and Datasphere, along with other apps. SAP also cited Volkswagen as an expansion win for SAP SuccessFactors.

- Total cloud backlog is €44 billion, up 39% from a year ago.

- SAP's top 1,000 customers are now on average using 4 SAP cloud solutions, up from 3 last year.

- SAP's Cloud ERP suite represents 82% of the company's SaaS and PaaS revenue.
