Rocket Companies’ genAI strategy: Playing both the short and the long game

Rocket Companies, a fintech company with mortgage, real estate, and personal finance businesses, is starting to see the payoff from its generative AI efforts as well as a bet on AWS’s Amazon Bedrock.

The company reported first-quarter revenue of $1.4 billion and net income of $291 million, topping both Wall Street’s expectations and the company’s internal guidance. On an earnings conference call, Rocket CEO Varun Krishna, formerly an executive at Intuit, PayPal, Groupon, and Microsoft, said the company is taking share, focusing on what it can control as interest rates and the mortgage market ebb and flow, and investing in artificial intelligence (AI) to transform the business.

Rocket Companies’ journey to becoming an AI-driven mortgage and lending disruptor has been a long one. The company started as Rock Financial in 1985; created Mortgage In A Box, a mail-in mortgage application, in 1996; expanded into loans and became Quicken Loans in 1999; became the largest provider of FHA loans in 2014; became the largest residential mortgage lender in 2017; and went public in 2020. Throughout its history, Rocket has had to manage through real estate and lending boom-and-bust cycles.

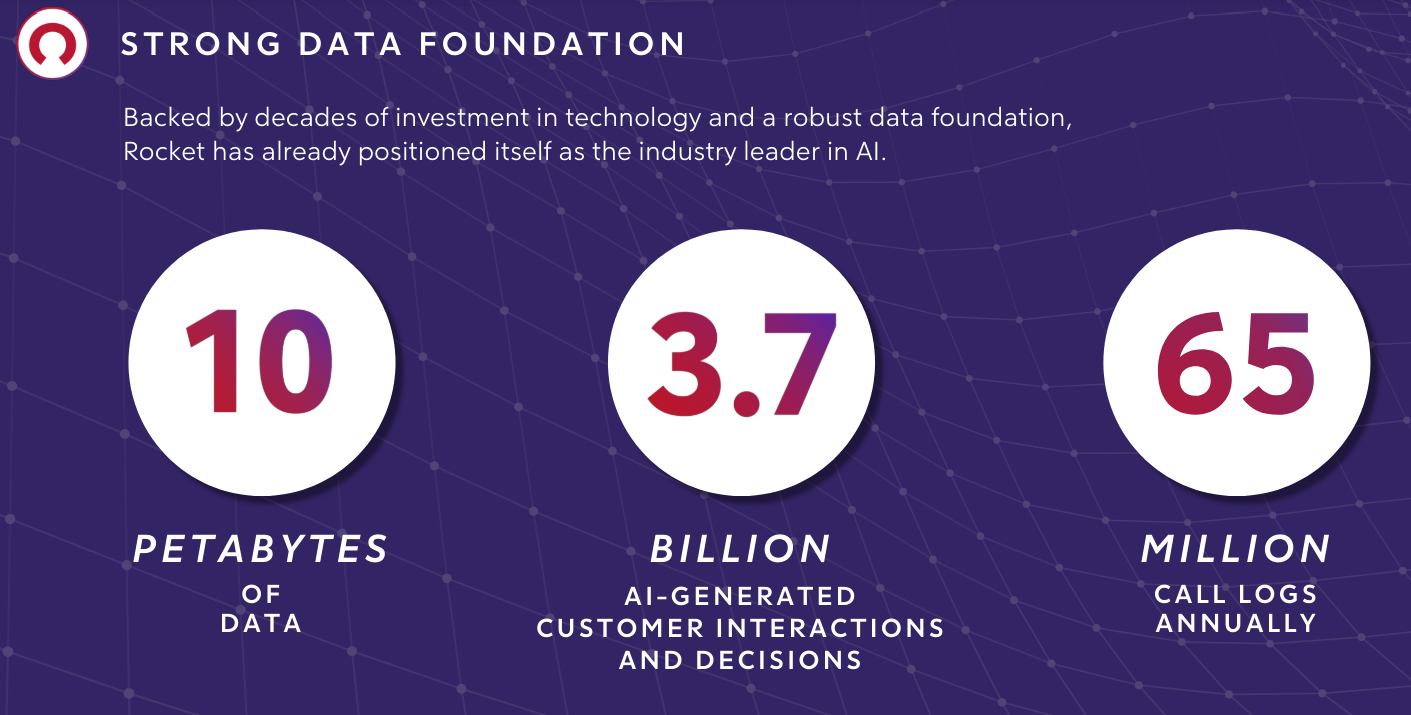

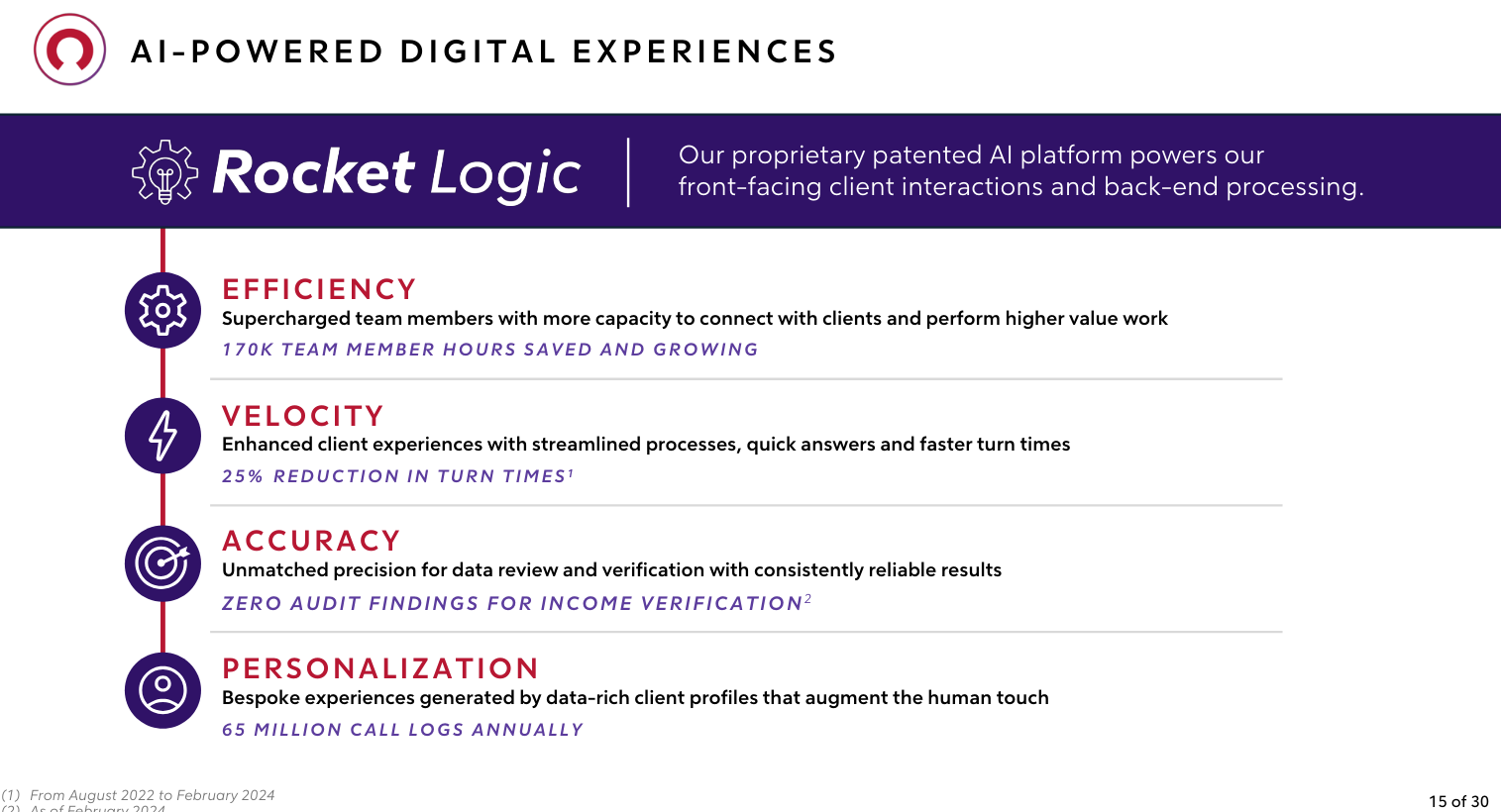

In April 2024, Rocket launched Rocket Logic, an AI platform built on insights from more than 10 petabytes of proprietary data and 50 million annual call transcripts. Rocket Logic scans and identifies files for documentation, uses computer vision models to extract data from documents, and saved underwriters 5,000 hours of manual work in February 2024.

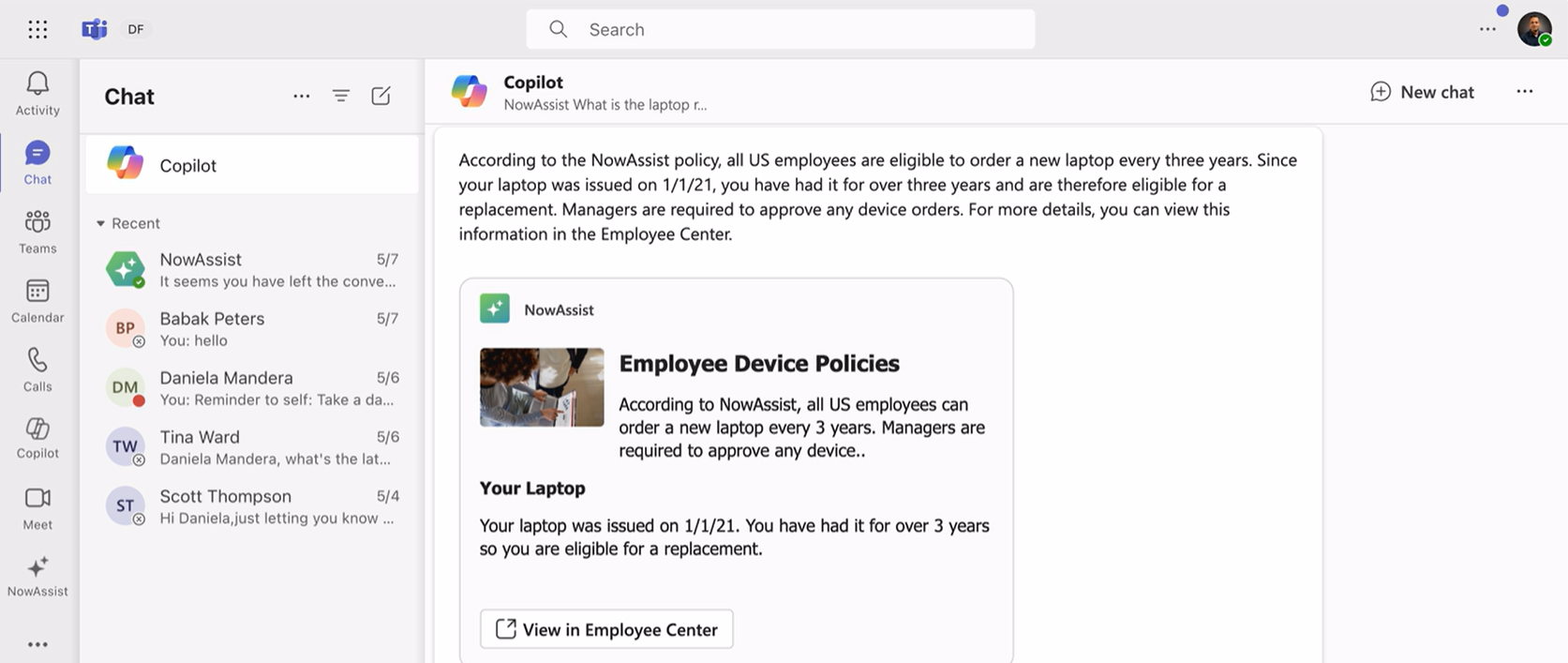

Rocket quickly followed up with Rocket Logic – Synopsis, an AI tool that transcribes and analyzes customer calls, gauges sentiment, and identifies patterns. Synopsis is built on AWS and Amazon Bedrock, which features models from Anthropic, Cohere, Meta, Mistral, and others. The tool has made 70% of client interactions self-service and will learn from homeowners’ communication preferences over time.

Krishna also cites a new AI effort from Rocket Homes called Explore Spaces Visual Search, which enables users to upload photos of features they deem important and use image recognition to find homes. Another generative AI pilot enables clients to update their verified approval letters by using their voice. That use of AI will save bankers and underwriters time, since they manually modify letters almost 300,000 times a year.

“AI eliminates the drudgery of burdensome, time-consuming manual tasks so that our team members can spend more time on making human connections and producing higher-value work. Ultimately, with AI, we are driving operational efficiency, speed, accuracy, and personalization at massive scale,” says Krishna.

The plan for Rocket is to continue to roll out AI services on Rocket Logic. Rocket’s strategy is to leverage generative AI in a model-agnostic way to gain market share during the mortgage-and-lending downturn. Recent Rocket Logic additions include Rocket Logic Assistant, which follows conversations in real time, and Rocket Logic Docs, a document processing platform that can extract data from loan applications, W-2s, and bank statements. In February 2024, Rocket said nearly 90% of documents were automatically processed.

“We believe artificial intelligence is evolving rapidly and approaching a critical inflection point, where knowledge engineering, machine learning, automation, and personalization will be at the center of how clients buy, sell, and finance homes,” explained Rocket in its annual report.

The necessary data foundation

As with many other companies looking to scale generative AI, Rocket’s focus on data science and data governance sets the stage. The lesson in 2024 is clear: Companies that have their data strategies down, such as Intuit, JPMorgan Chase, and Equifax, can leverage generative AI for competitive advantage.

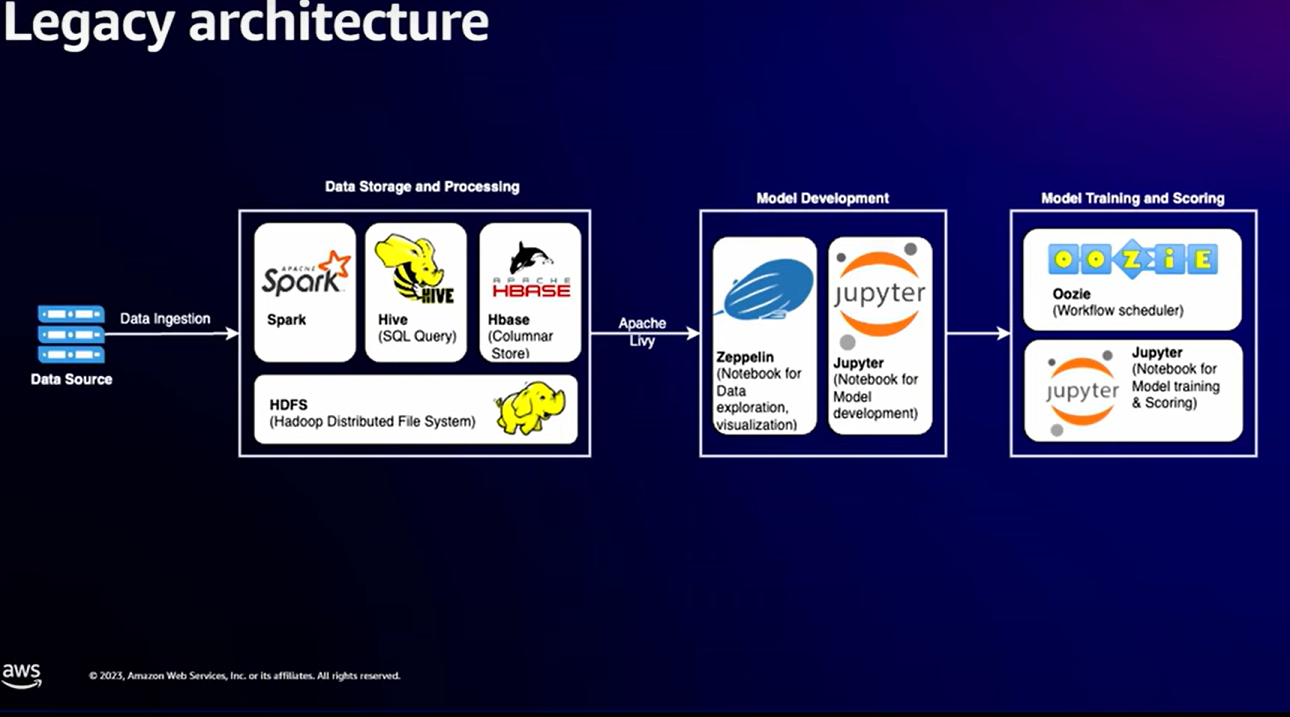

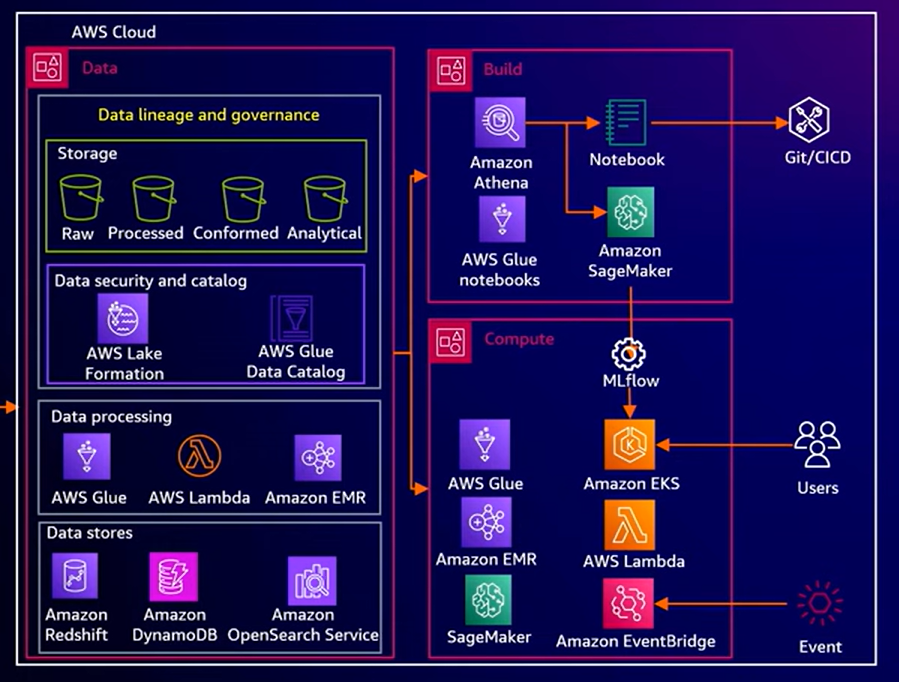

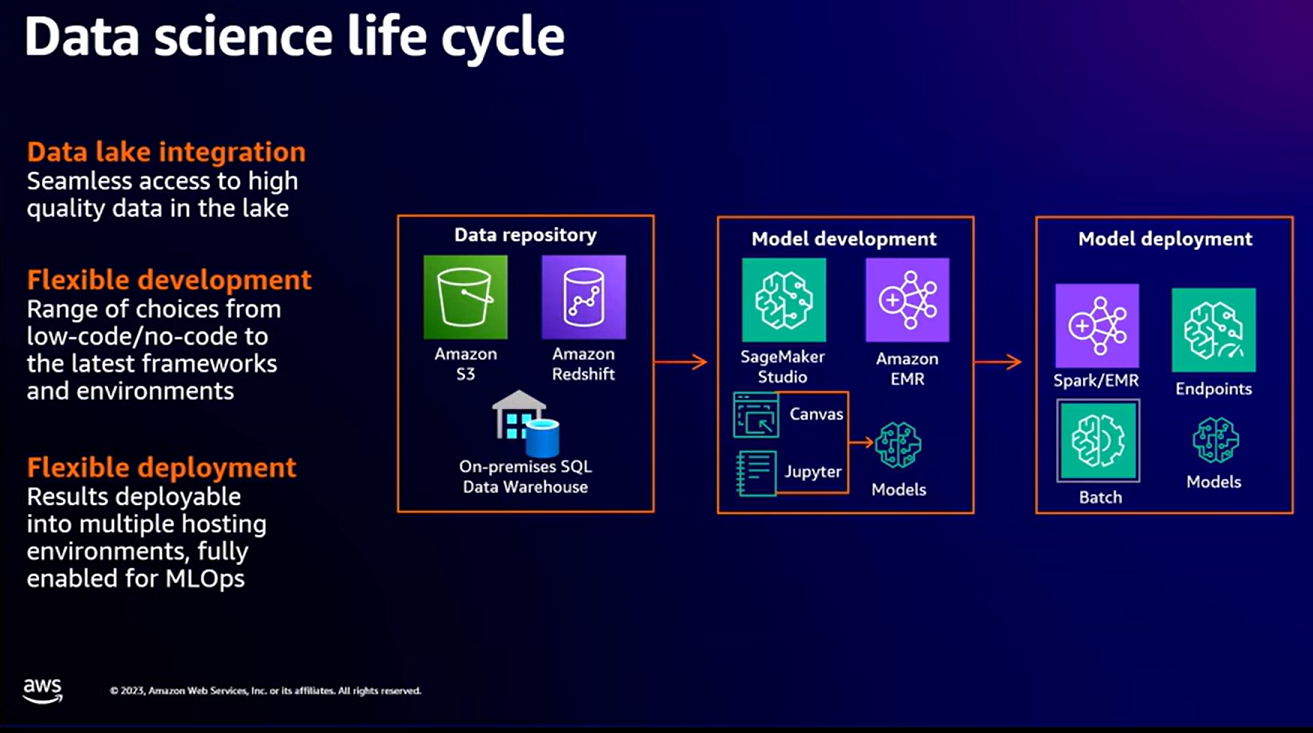

Dian Xu, director of engineering in Data Intelligence at Rocket Central, speaking at AWS re:Invent 2023, outlined how the company had evolved from a legacy big data infrastructure to a more scalable AWS infrastructure and ultimately Amazon SageMaker and Bedrock.

Xu explained that Rocket had an open-source data lake in 2017 that worked well enough but that the company’s volume subsequently doubled and then tripled. “We realized the legacy structure couldn’t scale and that data ingestion took too long,” said Xu. “We knew we had to modernize.”

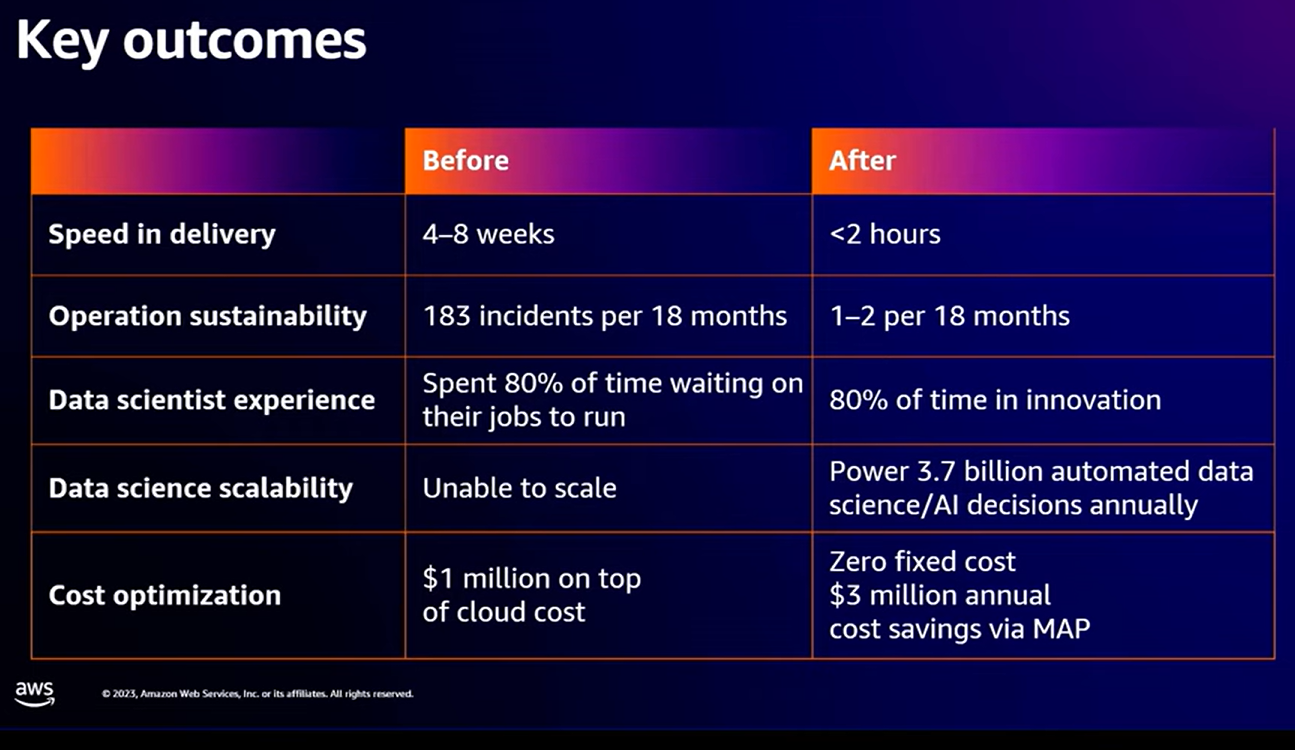

It didn’t help that the legacy providers all had contracts that came up for renewal and carried support costs. Xu said Rocket had $1 million in fixed costs on top of cloud costs. Rocket used AWS’s migration acceleration program, retained cloud credits, and saved $3 million annually on supporting the data infrastructure.

Rocket’s journey included multiple services for data management and analytics before the company settled on SageMaker, which is used to manage models, deploy them, and provide an interface for users at multiple skill levels.

At the time of re:Invent, Xu said Rocket was prepared for generative AI because of its data infrastructure and was looking at Amazon DataZone for governance, Amazon CodeWhisperer, and Amazon Bedrock. Six months later, Rocket was outlining its Rocket Logic AI platform and Synopsis.

For Rocket CEO Krishna, the data foundation is the linchpin in model training. “The key to AI is continuous training of models with recursive feedback loops and data. We are organizing this invaluable data to construct unified client profiles in a centralized repository,” he says. “From this repository, we trained models to gain deeper insights and analytics to personalize all future interactions with our clients. The ultimate objective is to deliver an industry-best client experience that translates into better conversion rates and higher client lifetime value and to just get continuously better and better at it.”

The returns on generative AI

Rocket executives say Rocket Logic is already generating strong returns. The company says that Rocket Logic automation reduced the number of times an employee interacts with a loan by 25% in the first quarter, compared to a year ago.

Turn times for Rocket clients to close on a home purchase declined by 25% from August 2022 to February 2024. As a result, Rocket is closing loans nearly 2.5 times as fast as the industry average.

In addition, generative AI saves hours of manual work.

Rocket Logic Docs saved more than 5,000 hours of manual work for Rocket’s underwriters in February 2024. Extracting data points from documents saved an additional 4,000 hours of manual work.

Rocket CFO Brian Brown says, “AI is bringing tangible business value through enhanced operational efficiency, velocity, and accuracy at scale. The most apparent and significant value add that I’ve seen is augmenting team member capacity through operational efficiency.”

Brown says Synopsis is taking over manual tasks such as populating mortgage applications and classifying documents. “With AI handling this work, our team members have more time to provide tailored advice and engage in higher-value conversations with our clients.” He adds that Synopsis cut a quarter of the manual tasks in the first quarter compared to a year ago.

Other generative AI returns cited on Rocket’s first-quarter earnings conference call include:

- 170,000 hours saved per year

- First-call resolution improved 10% with Synopsis after a few weeks

- Zero audit findings with generative AI income verification

Rocket executives say AI is about growth and efficiency and that both are on the same continuum. Brown says generative AI brings the ability to add more capacity into the system. “We did $79 billion in originations last year, and we believe we can put significantly more capacity through the system,” says Brown.

Krishna’s take is that the savings from AI can drive investment and growth as well as velocity. He says:

“The thing I’m excited about is that our AI strategy is specifically designed to create and unlock operating leverage. It will allow us to grow our capacity without increasing head count. And it will allow us to actually build our company and grow durably. So, we don’t look at this AI investment as a head count reducer. I mean that’s not how you build a growth company durably.

“But the combination of being able to invest in technology and have an ongoing principle around efficiency is how we think we’re going to create a durable flywheel.”