Editor in Chief of Constellation Insights

Constellation Research

About Larry Dignan:

Dignan was most recently Celonis Media’s Editor-in-Chief, where he sat at the intersection of media and marketing. He is the former Editor-in-Chief of ZDNet and has covered the technology industry and transformation trends for more than two decades, publishing articles in CNET, Knowledge@Wharton, Wall Street Week, Interactive Week, The New York Times, and Financial Planning.

He is also an Adjunct Professor at Temple University and a member of the Advisory Board for The Fox Business School's Institute of Business and Information Technology.

Constellation Insights does the following:

Cover the buy side and sell side of enterprise tech with news, analysis, profiles, interviews, and event coverage of vendors, as well as Constellation Research's community and…


Nvidia CEO Jensen Huang said useful quantum computers are more than a decade away, that Project Digits fills a big void for data scientists and developers by serving as a personal AI cloud, and that humanoid robots will develop faster than expected.

Huang fielded financial analyst questions at CES 2025 and generated a few headlines worth noting. From a stock market perspective, Huang managed to tank quantum computing stocks, which have had a torrid run over the last two months.

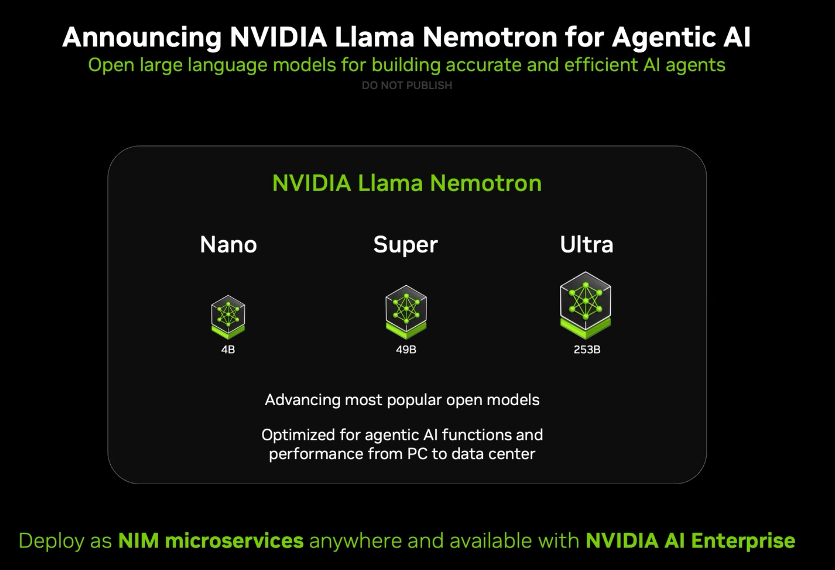

We'll start with Huang's quantum comments, but in the near term his comments on robotics and Project Digits were arguably more actionable. Nvidia had a busy CES 2025 with agentic AI developments and Project Digits, which puts an AI supercomputer on a desktop. The upshot is that AI inference and development may become more distributed.

Here's what Huang had to say:

Quantum computing strategy. Huang was asked about quantum computing and its usefulness in the near term. He said that quantum computing can't solve every problem and that the technology is "good at small data problems."

"The reason for that is because the way you communicate with a quantum computer is microwaves. Terabytes of data is not a thing for them. There are some interesting problems that you could use quantum computers for, like generating a random number, cryptography...

"We're not offended by anything around us, and we just want to build computers that can solve problems that normal computers can't.

"Just about every quantum computing company in the world is working with us now, and they're working with us in two ways. One is quantum-classical, and we call it CUDA-Q. We're extending CUDA to quantum, and they use us for simulating the algorithms, simulating the architecture, creating the architecture itself.

"Someday we'll have very useful quantum computers. We're probably five or six orders of magnitude away. 15 years for useful quantum computers, and that would be on the early side. 30 years is probably on the late side. If you picked 20 years, a whole bunch of us would believe it. We want to help the industry get there as fast as possible and create the computer of the future."

Constellation Research analyst Holger Mueller said:

"It is no surprise Nvidia works with all quantum vendors to help offload, prepare and operate workloads and data for quantum machines. Huang is a little off with the use case benefits. In spring 2023, some quantum vendors showed and used real enterprise workloads. But he is right: the viability is limited. This may change in 2025, though, when all eyes are on IBM and its plan to couple multiple Heron systems."

Blackwell demand. Huang said Hopper and Blackwell demand is strong on a combined basis.

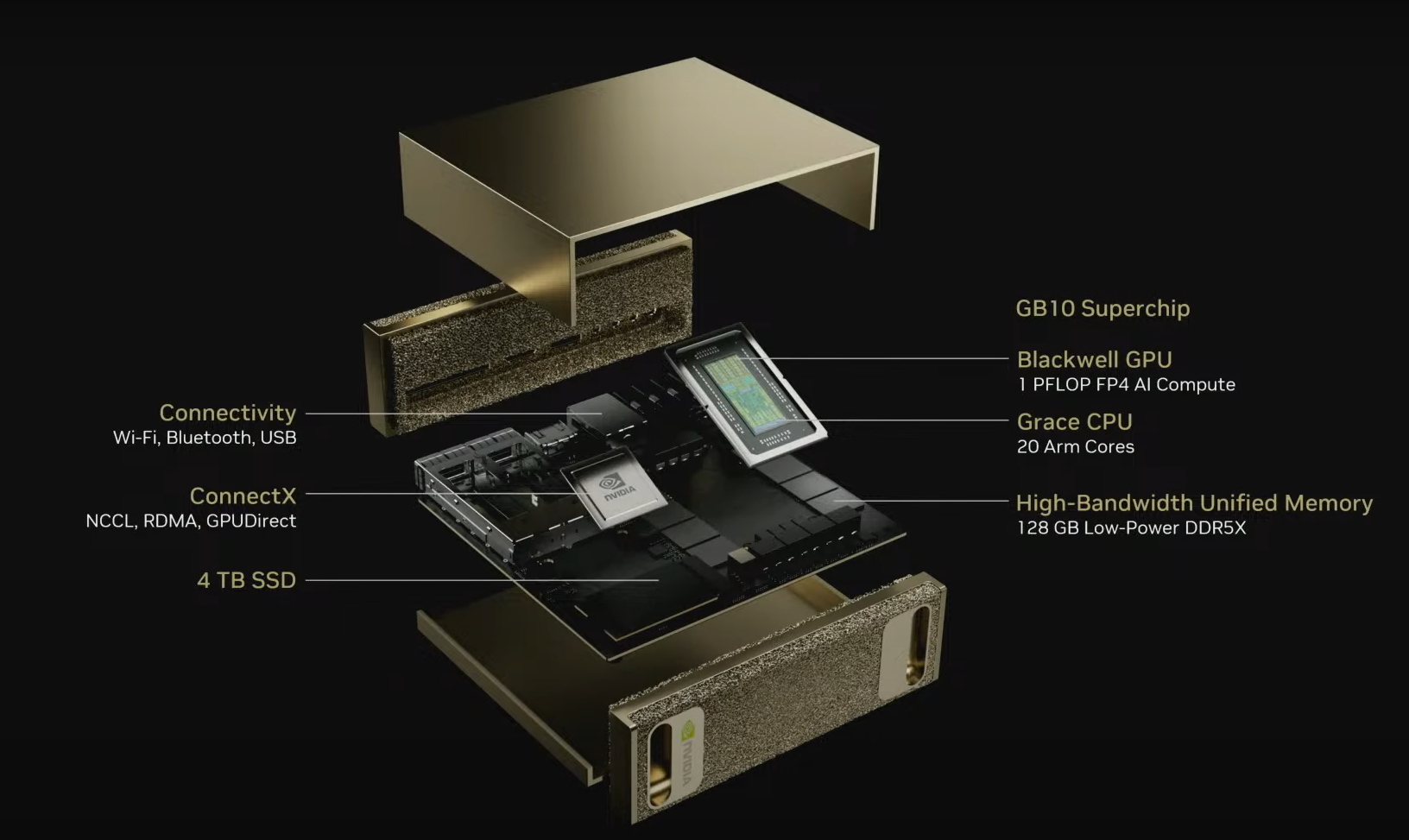

The Blackwell system on a chip (SOC) in Project Digits. Nvidia worked with MediaTek on the Blackwell SOC, and Huang was asked why the company didn't do the architecture itself. "MediaTek does such a good job building lower-power SOCs," said Huang. "If we can partner to do something, then we can do something else. MediaTek did a wonderful job. We shared our architecture with them, and it was a great win-win and saved a lot of engineering."

Project Digits market. Huang was asked about Project Digits and Nvidia's PC ambitions. He said:

"I'm getting incredible emails from developers about Digits. There's a gaping hole for data scientists and ML researchers who are actively building something and don't need a giant cluster. You're just developing the early versions of the model, and you're iterating constantly. You could do it in the cloud, but it just costs a lot more money. Personal computing exists so you can have an opex-capex trade-off. A lot of developers are trying to get through using Macs or PCs, and now they have this incredible machine sitting next to them. You could connect it through USB-C, or you could connect it using LAN or Wi-Fi. And now it's sitting next to you. It's your personal cloud, and it runs the full stack of what you run on DGX. Everything runs exactly the same way.

"It's essentially your own private cloud. If you would like to have your own AI assistant sitting on this device, you can as well. It's not for everyone, but it's designed for data scientists, machine learning researchers, students."

What's the PC plan? Nvidia said Project Digits is a focused effort, but Huang wouldn't say much about future PC efforts. "Obviously we have plans," he said.

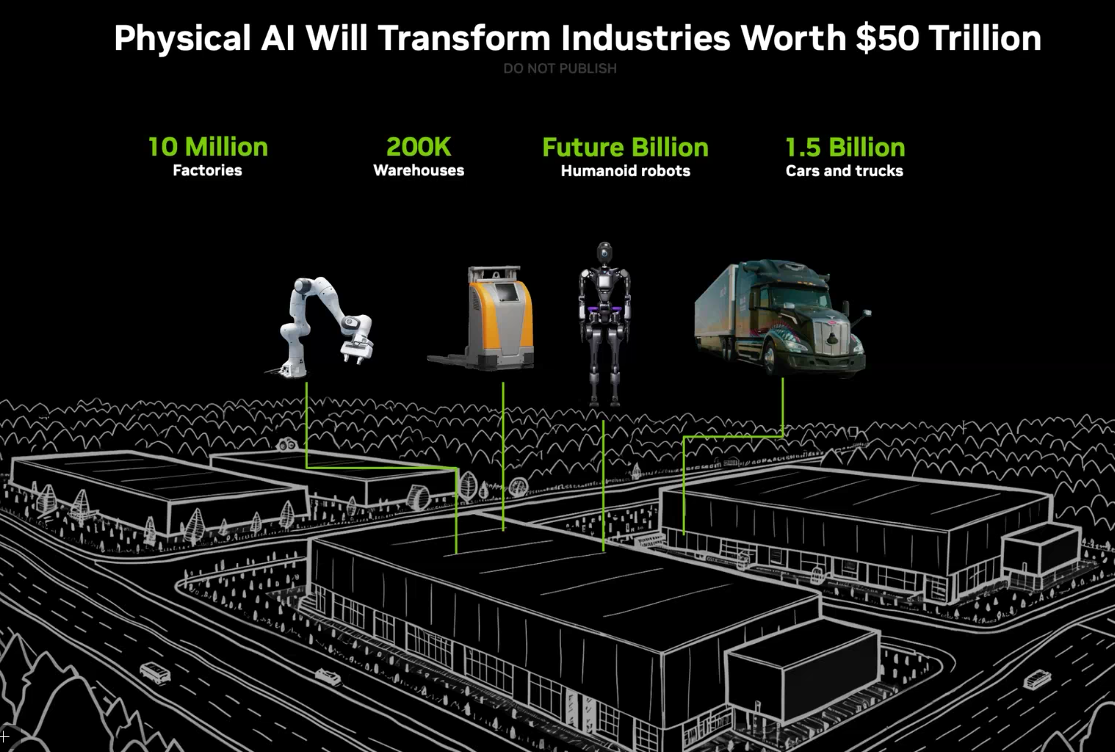

The platform. Huang emphasized that Nvidia is building a platform for "every developer and every company." He said Nvidia can extend into World Foundation Models, robotics and computational lithography for TSMC. "We love the concept of a programmable architecture," he said.

Automotive and autonomy. "Every car company in the world will have two factories, a factory for building cars and the factory for updating their AIs," he said. "Every single car company will have to be autonomous, or you're not going to be a car company. Everything that moves will be autonomous."

Robotic systems and strategy. "There are no limitations to robots. It could very well be the largest computer industry ever," said Huang. "There's a very serious population and workforce situation around the world. The workforce population is declining, and in some manufacturing countries it's fairly significant. It's a strategic imperative for some countries to make sure that robotics is stood up."

"[Humanoid] robots will surprise everybody with how incredibly good they are," said Huang.

Analysts also asked Huang about Nvidia's robotics strategy. He said:

"Our robotic strategy for automotive, for [humanoid] robots, or even for robotic factories is exactly the same. It is technologically exactly the same problem. I've generalized it into an architecture that can address a massive data problem."

AI assistants for coding. "If a software engineer is not assisted with an AI, you are already losing fast. Every software engineer at Nvidia has to use AI assistance next year. That's just a mandate," he said.

What models Huang uses. Huang said he uses OpenAI’s ChatGPT the most, followed by Google’s Gemini models, especially the ones aimed at deep research. “Everybody should use these AIs, at least as a tutor,” said Huang. “Every kid should use AIs as a tutor. The continuous dialog is insanely good, and it’s going to get better.”
