Editor in Chief of Constellation Insights

Constellation Research

About Larry Dignan:

Dignan was most recently Celonis Media’s Editor-in-Chief, where he sat at the intersection of media and marketing. He is the former Editor-in-Chief of ZDNet and has covered the technology industry and transformation trends for more than two decades, publishing articles in CNET, Knowledge@Wharton, Wall Street Week, Interactive Week, The New York Times, and Financial Planning.

He is also an Adjunct Professor at Temple University and a member of the Advisory Board for the Fox School of Business's Institute of Business and Information Technology.

Constellation Insights does the following:

Cover the buy side and sell side of enterprise tech with news, analysis, profiles, interviews, and event coverage of vendors, as well as Constellation Research's community and…


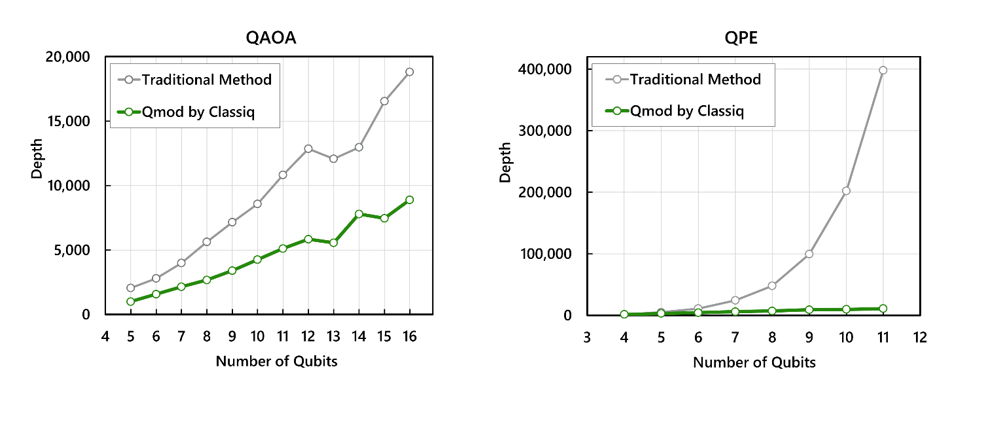

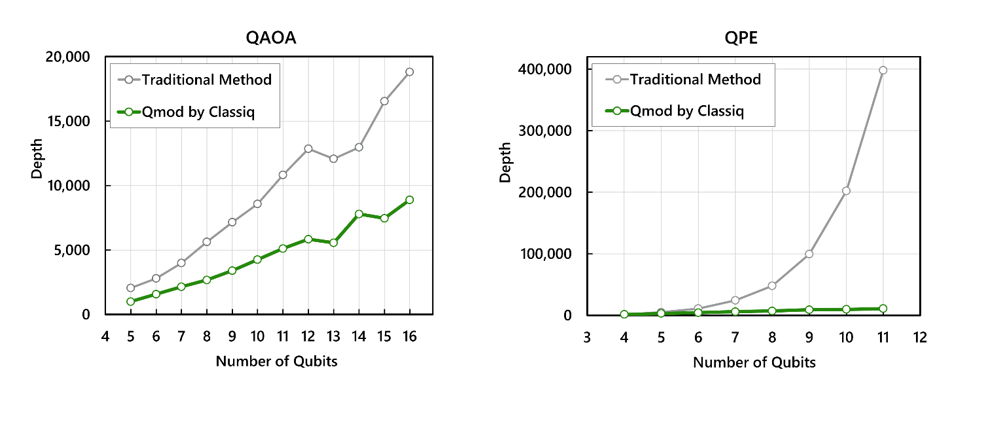

Classiq Technologies, Deloitte Tohmatsu and Mitsubishi Chemical said they have compressed quantum circuits by up to 97%, a result that reduces error rates and may accelerate practical enterprise use cases.

The news comes as practical development in quantum computing accelerates heading into 2025. Consider:

Against that backdrop, quantum computing is clearly becoming more relevant to enterprises. Classiq's collaboration with Deloitte Tohmatsu and Mitsubishi Chemical, for instance, highlights one big use case: materials development.

The companies are looking to develop new materials, including new organic electroluminescent (EL) materials. Classiq, Deloitte Tohmatsu and Mitsubishi Chemical said compressing the quantum circuits gives their algorithms lower error rates. "This result indicates that the circuit compression method used in this demonstration can be applied to various quantum circuits, not only in the chemical field. It is also relevant for the early practical application of quantum computers in a wide range of fields such as drug discovery, AI, finance, manufacturing and logistics," the companies said in a statement.

Constellation Research analyst Holger Mueller said:

"It is end of 2024 and in the real world, tangible use cases for quantum technology are rolling in. Today it is the turn of Classiq that is showing with partners and customers Deloitte Tohmatsu and Mitsubishi Chemical substantial acceleration of quantum based insights in new material development using Classiq tools and algorithms. This development makes appetite for more quantum based real world use cases in 2025."

Here's a look at some of the other quantum computing developments in recent days.

Google launches Willow

Google launched its latest quantum chip called Willow with strong error correction improvements and outlined its roadmap for quantum computing.

Willow is part of Google's 10-year effort to build out its quantum AI operations. The company said Willow moves it along the path to commercially relevant applications.

IonQ Quantum OS, Europe launch

IonQ announced its IonQ Quantum OS and new tools for its IonQ Hybrid Suite. IonQ said the platform is designed to power its flagship IonQ Forte and Forte Enterprise quantum systems.

According to IonQ, the new OS provides an average 50% reduction in on-system classical overhead, an 85% reduction in cloud and network workloads through IonQ Cloud and a more than 100x improvement in accuracy.

IonQ's Hybrid Services suite gets a developer toolkit, Workload Management & Solver Service, to move hybrid workloads to the cloud, a new scheduling feature called Sessions, and an all-new software development kit.

Separately, IonQ launched its first European innovation center, featuring IonQ Forte Enterprise. The effort is a partnership with QuantumBasel and is designed to serve enterprises, governments and researchers.

More: IonQ’s bet on commercial quantum computing working, acquires Qubitekk | IonQ's quantum computing bets: Quantum for LLM training, chemistry and enterprise use cases

AWS quantum moves

AWS launched its Quantum Embark Program and set off a stock-buying frenzy in quantum computing plays. The program, which is delivered by Amazon's Advanced Solutions Lab, focuses on use case discovery, technical enablement and a deep dive program.

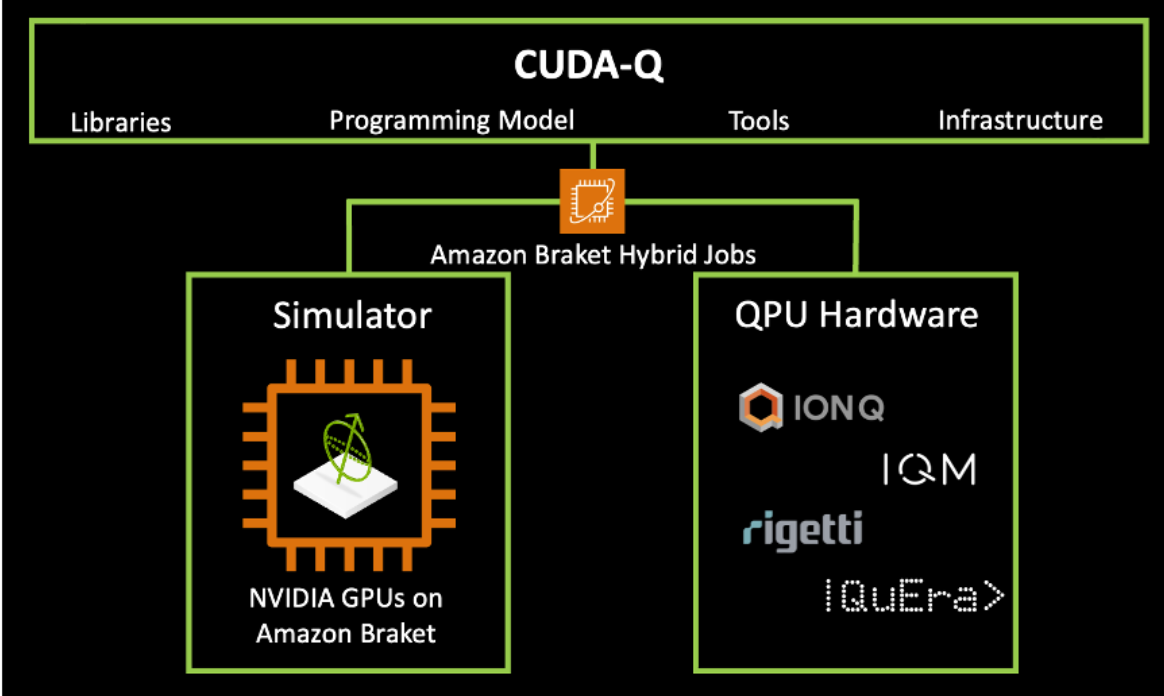

Amazon Braket is providing the quantum compute capabilities.

Under the Quantum Embark Program, AWS is providing discovery workshops to identify use cases and explore how quantum computing can solve business problems. AWS is also providing technical enablement workshops on how quantum computing works, runs applications and performs calculations. The deep dive component focuses on more technical topics and targets specific applications.

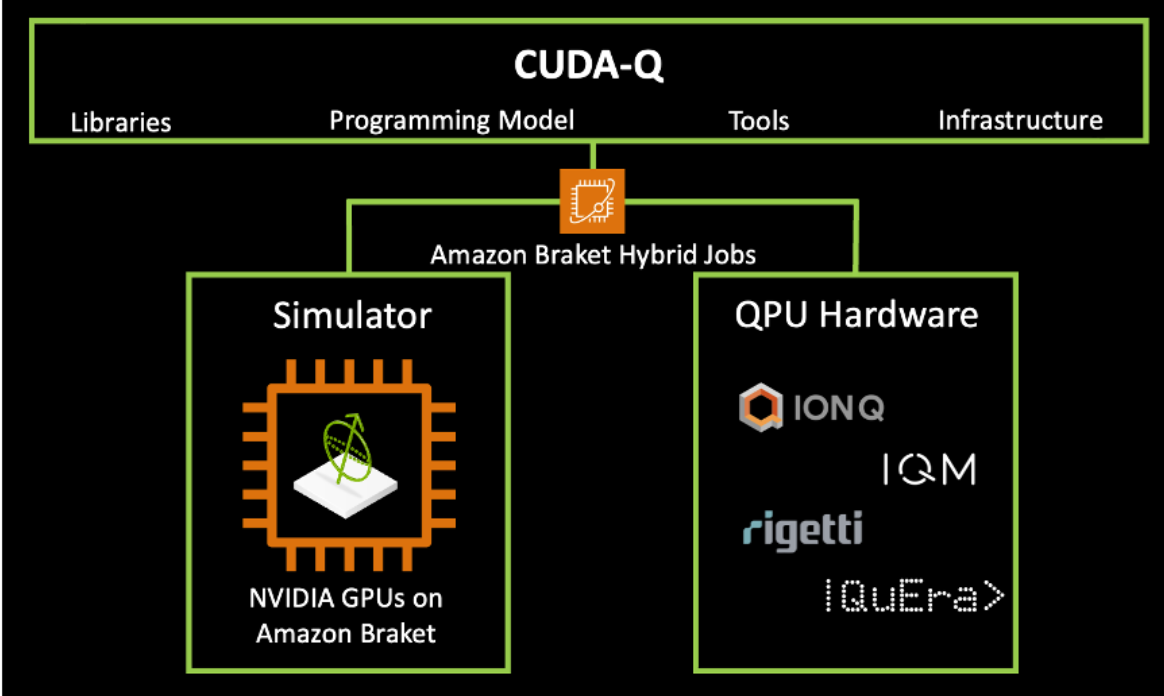

At re:Invent 2024, AWS also said it is teaming up with Nvidia. Nvidia's open source quantum development platform, CUDA-Q, will be added to Amazon Braket so that quantum workloads can be combined with classical cloud compute resources.
