Editor in Chief of Constellation Insights

Constellation Research

About Larry Dignan:

Dignan was most recently Celonis Media’s Editor-in-Chief, where he sat at the intersection of media and marketing. He is the former Editor-in-Chief of ZDNet and has covered the technology industry and transformation trends for more than two decades, publishing articles in CNET, Knowledge@Wharton, Wall Street Week, Interactive Week, The New York Times, and Financial Planning.

He is also an Adjunct Professor at Temple University and a member of the Advisory Board for The Fox Business School's Institute of Business and Information Technology.

Constellation Insights does the following:

Cover the buy side and sell side of enterprise tech with news, analysis, profiles, interviews, and event coverage of vendors, as well as Constellation Research's community and…

SAP CEO Christian Klein said the company will significantly increase its AI investments, launch AI agent innovations Feb. 13 and give customers a three-year reprieve on migrating from on-premises ERP to the cloud.

Klein teased the AI agent orchestration launch as well as licensing changes during SAP's fourth quarter earnings call. He said that customers are spending half of their IT budgets on data and analytics and falling short of leveraging data.

SAP "will harmonize structured and unstructured data" across SAP and non-SAP data with relevant semantics. "We will make AI agents much more powerful," said Klein. "Joule will become the super orchestrator of these agents, carrying out complete tasks autonomously and end to end, taking over significant workload from humans."

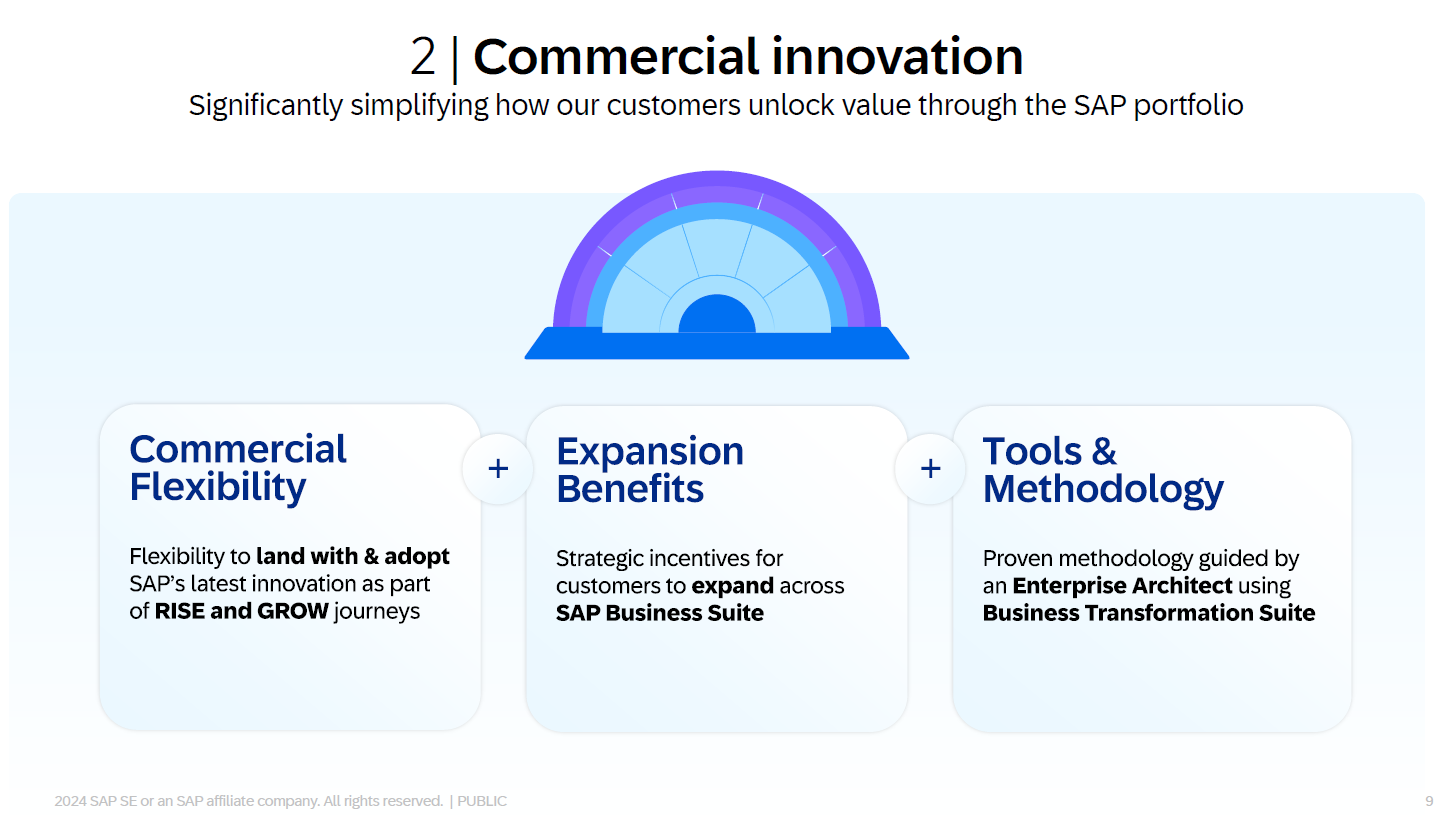

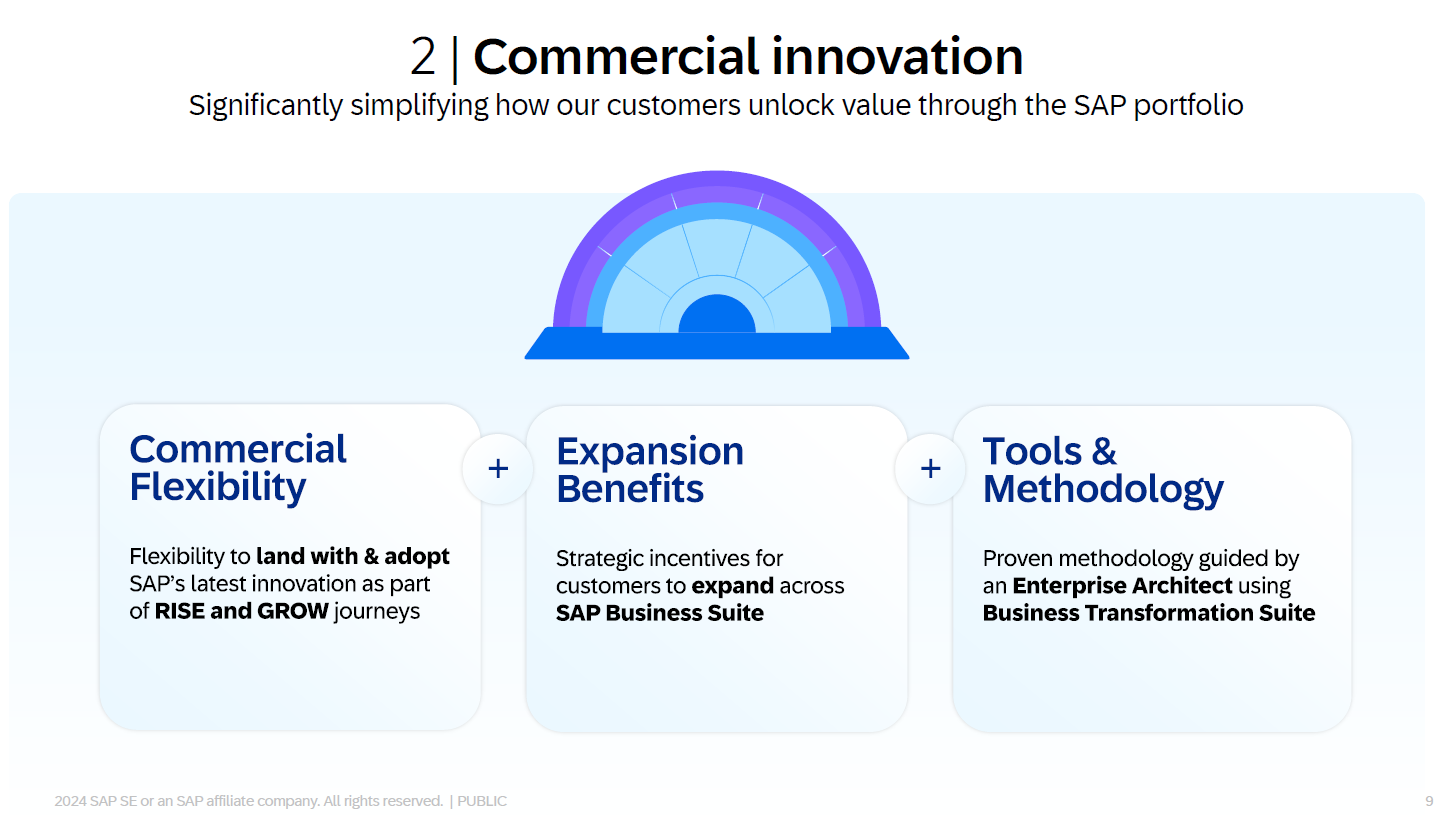

On the licensing front, Klein said "we will introduce licensing options that allow customers to upgrade and switch easily to our newest cloud solutions across the whole SAP Business Suite without additional negotiations." In short, SAP will roll out a maintenance offering that will give customers the ability to extend maintenance through 2033 from 2030 if they can't migrate to the cloud completely.

DSAG: SAP's innovation focus on cloud, discriminates against on-premise users

Although SAP's fourth quarter earnings and outlook were notable, Klein's comments about AI strategy and licensing stood out more. Klein, referring to US AI industry concerns about DeepSeek and cheaper models, noted that SAP can work with multiple models as needed. The value is in business data, he added.

Klein said SAP is flexible with large language model choices and is betting its AI strategy on contextual data. SAP said half of its cloud deals included AI in the fourth quarter and 2025 cloud revenue will be up 26% to 28%.

"We are flexible when it comes to AI infrastructure and large language models. We benefit from cost reductions and progress in the LLM space, because we are truly differentiating an element in AI today. However, it is deep process and industry knowhow, combined with access to unique context, rich business data, so value equation is more and more moving up the application layer and to building one semantical data layer, this is exactly what SAP has been focusing on."

On a conference call, Klein touted the "new SAP," which shows strong cloud growth and the ability to leverage Business AI through its suite. "Land and expand is clearly working," said Klein, who said a fifth of customers are using more than one of SAP's solutions. "We are doubling down on AI in 2025."

SAP said it is positioning Joule as the new UI for its software.

Klein said SAP brought more than 130 genAI use cases to customers in 2024. Internally, SAP is using AI to be more efficient, Klein said, with 20,000 SAP developers using AI tools, average efficiency gains of 20% on go-to-market activities and contract booking time improvements of 75%. SAP also saw a 20x productivity gain due to AI-assisted go-to-cash process automation and has saved €300 million due to AI implementations.

Fourth quarter results and outlook

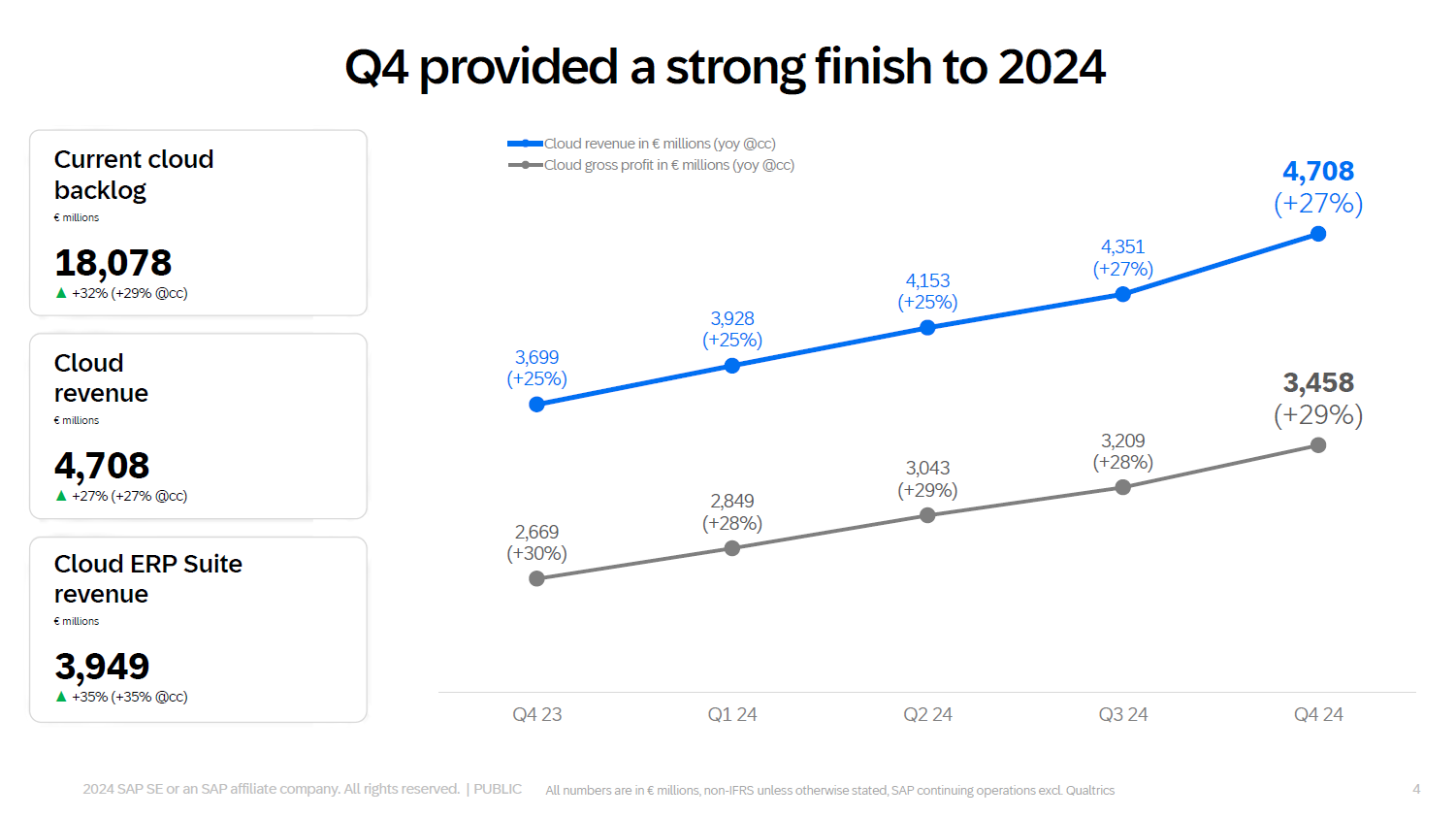

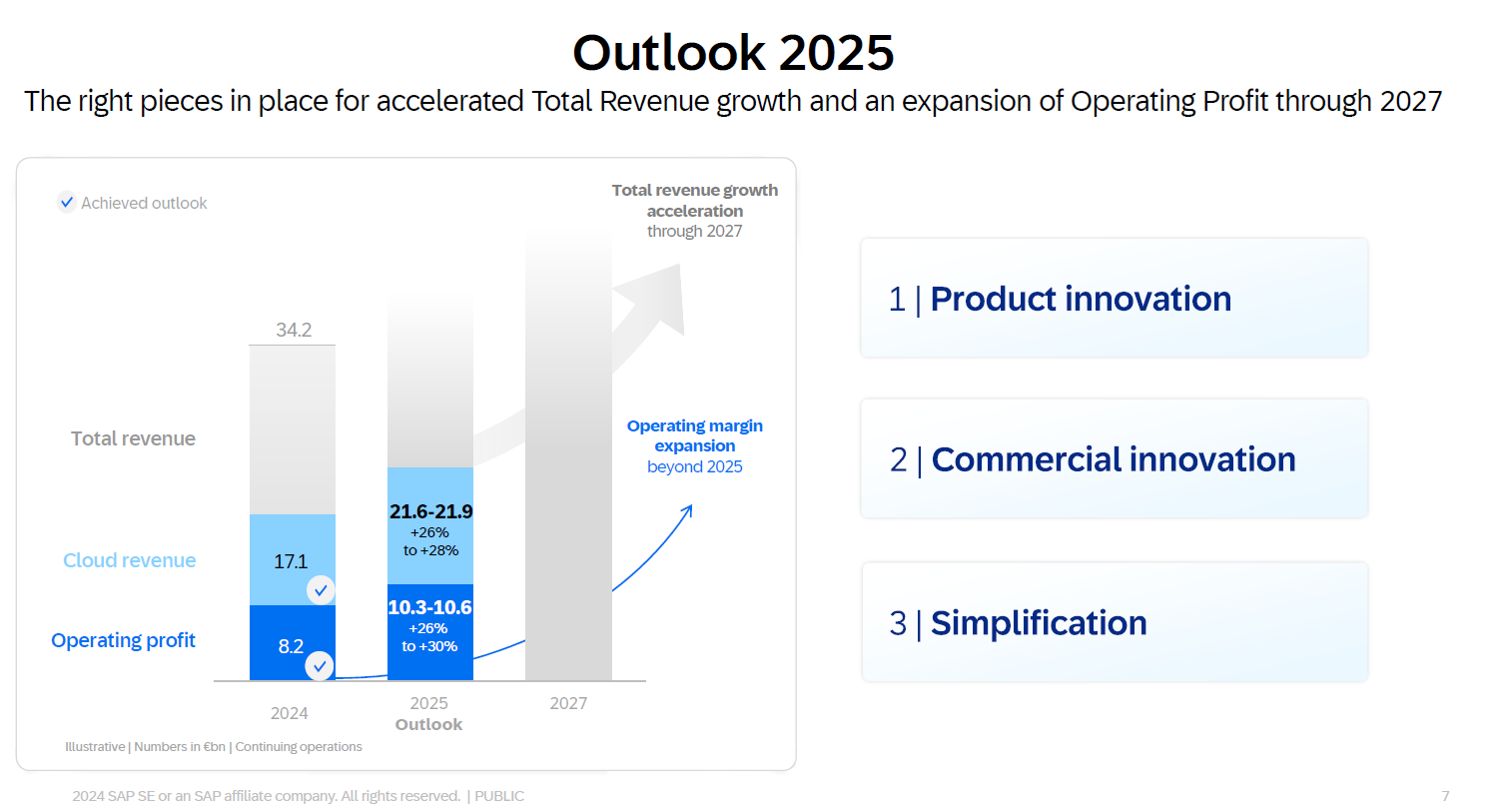

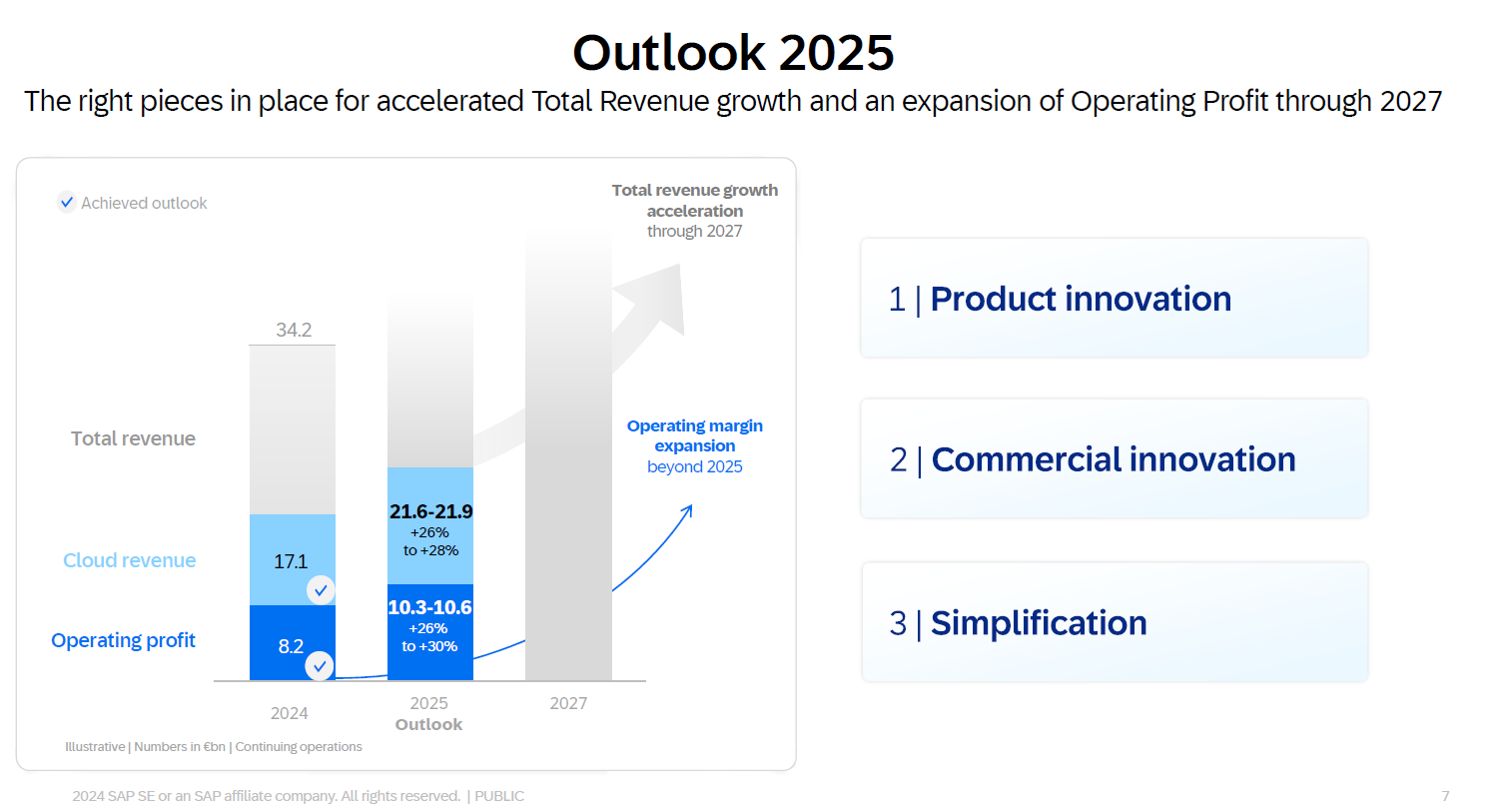

SAP said fourth quarter revenue was €9.48 billion, up 11% from a year ago, with net profit of €1.62 billion, or €1.37 a share. Adjusted earnings were €1.40 a share.

For 2024, SAP said revenue was €34.18 billion, up 10% from a year ago, with earnings of €3.15 billion, or €2.68 a share. Earnings were down due to restructuring charges. Adjusted earnings were €4.53 a share.

Klein said that SAP finished the year strong and showed the ability to migrate customers to the cloud.

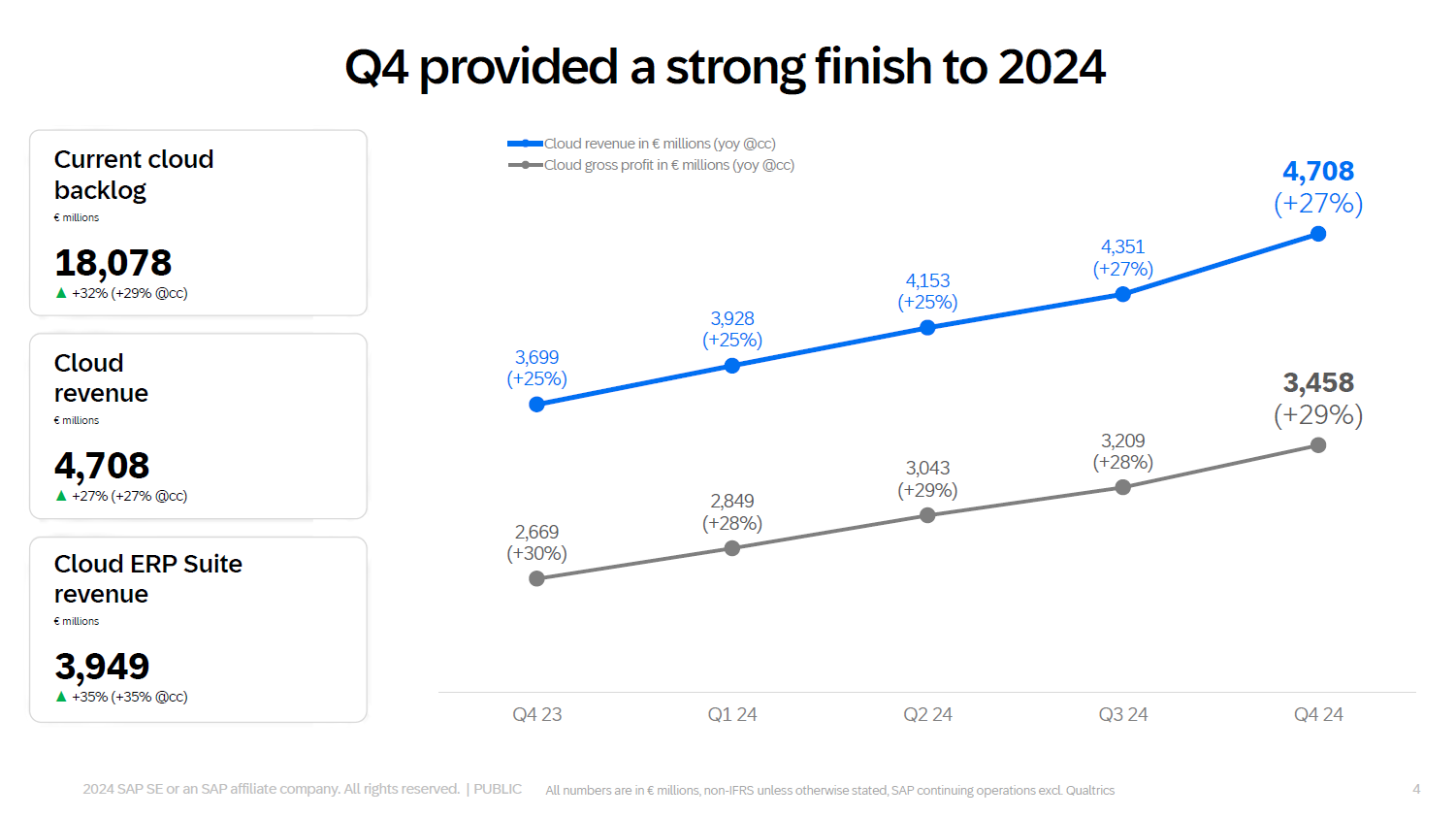

In the fourth quarter, SAP said cloud backlog grew 32% to €18.08 billion. The backlog gain was driven by Cloud ERP Suite revenue.

However, SAP did note that it expects current cloud backlog growth to "slightly decrease in 2025."

SAP projected 2025 cloud revenue of €21.6 billion to €21.9 billion. Cloud and software revenue for 2025 will be up 11% and non-IFRS operating profit will be up 26%. The company sees about €8 billion in free cash flow, nearly double the 2024 figure.

For non-financial metrics, SAP plans to improve its Net Promoter Score to 16, up from 12 in 2024.

Don't leave customers behind

Klein said SAP can navigate macroeconomic concerns in key industries such as automotive, which is struggling. He added that SAP customers face many economic and geopolitical concerns and that industries are moving to the cloud but need time.

The upshot: Klein said SAP is willing to play the long game and extend maintenance to 2033, a three-year reprieve, for customers that can't get to the cloud in time.

SAP's new maintenance program was reported in Handelsblatt and outlined by a consultant.

"We want to be reasonable in our outlook, how we also reflect this phasing of these RISE deals, because customers need time. I mean, changing business models is not only a technological move, but also about change management sometimes," said Klein.

Infosys sees 'good traction' with SAP S/4HANA migrations

Klein also acknowledged that some customers aren't going to make its deadline to move to SAP S/4HANA.

He said:

"The end of maintenance by 2027 will not be changed. We will stick to that. But you also have to consider, in some parts of the stack, there are third party components included, and they are running out of maintenance as well. We don't want to leave the customers behind. As we moved all of our cloud solutions already on SAP S/4HANA Cloud, we do now the same with these on premise customers. We move them to the cloud, we replace the third party components, and with that, there are 100 ERPs supported in a complete, sustainable and supported way. And that is about this offering.

"It's actually for a very few large customers who will not make it in time. To transform and consolidate ERP and business processes in over 100 countries is sometimes not that easy. It's not about the extension of on-premise maintenance, but it's really reaching out with helping hands with a very few large customers."

Executive changes

SAP also made executive changes.

The company said that Sebastian Steinhaeuser has been appointed to the Executive Board to lead Strategy & Operations, a new board area. Steinhaeuser will be focused on simplifying SAP operations.

SAP also extended the contract of Thomas Saueressig, head of Customer Services & Delivery, for another three years until 2028.

According to SAP, it will also form an Extended Board that will include Philipp Herzig, who takes over as global CTO in addition to Chief AI Officer, as well as two co-chief revenue officers.

Jan Gilg and Emmanuel (Manos) Raptopoulos will co-lead SAP's Customer Success organization as new CROs.
