Editor in Chief of Constellation Insights

Constellation Research

About Larry Dignan:

Dignan was most recently Celonis Media’s Editor-in-Chief, where he sat at the intersection of media and marketing. He is the former Editor-in-Chief of ZDNet and has covered the technology industry and transformation trends for more than two decades, publishing articles in CNET, Knowledge@Wharton, Wall Street Week, Interactive Week, The New York Times, and Financial Planning.

He is also an Adjunct Professor at Temple University and a member of the Advisory Board for the Fox School of Business's Institute of Business and Information Technology.

Constellation Insights does the following:

Cover the buy side and sell side of enterprise tech with news, analysis, profiles, interviews, and event coverage of vendors, as well as Constellation Research's community and…


Google Cloud announced a bevy of capabilities and services designed to enable multi-agent systems for its customer base, which is increasingly integrating the company’s models, data platform and agent development tools into their stacks.

Google Cloud Next has kicked off with more than 32,000 attendees and more than 500 customer stories. Against that backdrop, here's a look at the key themes from Google Cloud Next in Las Vegas.

Customers and maturity

Google Cloud CEO Thomas Kurian's keynote featured Verizon, Honeywell, Intuit, Mattel, Reddit, Sphere Entertainment and McDonald's, to name a few. Google Cloud is leaning into its impressive customer roster, targeting industries and adding expertise and services to its go-to-market engine. Google Cloud has parlayed its AI know-how into a seat at the cloud vendor table and is now often the AI layer in multi-cloud environments.

Kurian said enterprise customers have integrated AI tools into their products and services and are now demonstrating business value. "We have more than 500 customers speaking at Cloud Next, and we're thrilled that these customers will share the real value that they're seeing from the use of our technology. They span a broad range," said Kurian.

- 9 Google Cloud customers on AI implementations, best practices

- Google Cloud CEO Kurian on agentic AI, DeepSeek, solving big problems

- Google Cloud Next 2025: Agentic AI, Ironwood, customers and everything you need to know

- Google Cloud CTO Grannis on the confluence of scale, multimodal AI, agents

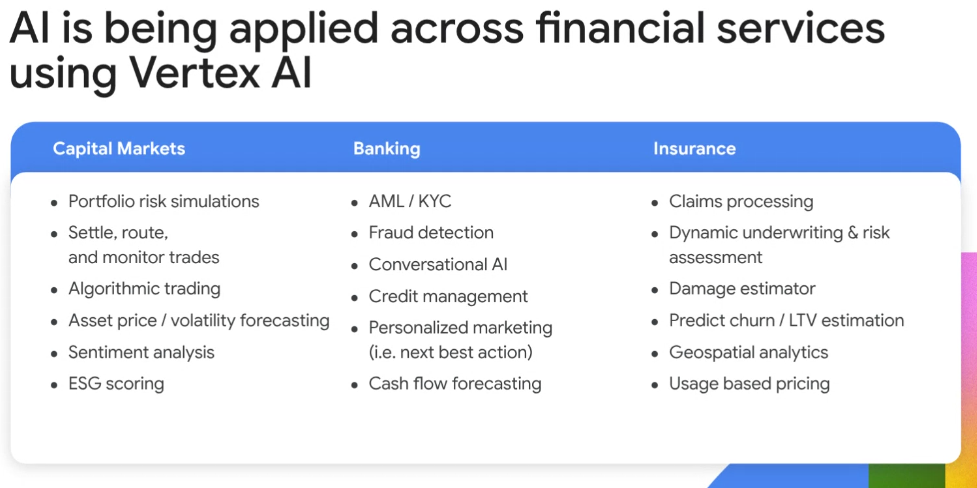

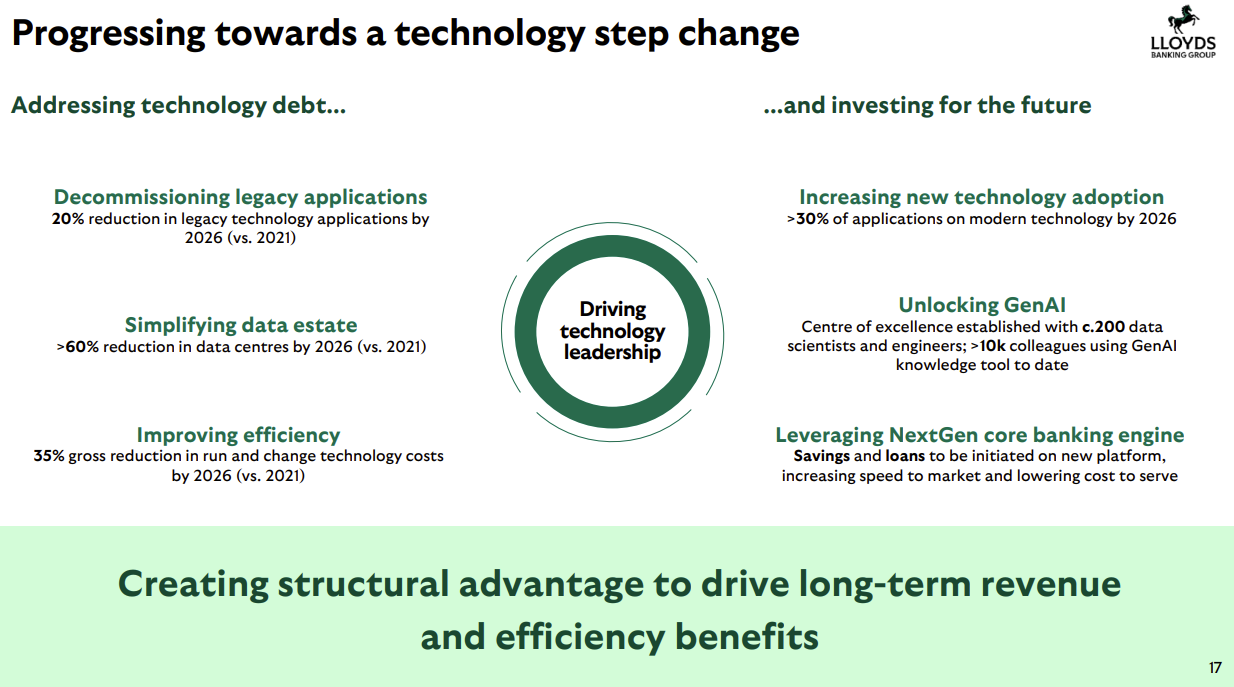

- Lloyds Banking Group bets on Google Cloud for AI-driven transformation

- Healthcare leaders eye agentic AI as next frontier for clinicians, patients

- Google Cloud, UWM partner as mortgage battle revolves around automation, data, AI

- Lowe's eyes AI agents as home improvement companion for customers

The customers cited by Kurian span industries from retail to healthcare to financial services. Google Cloud has also revamped its go-to-market approach to focus on industries, domain knowledge and use cases that drive returns. "We found the most important problems that customers care about and we have chosen to focus early on addressing them," said Kurian. "We've been fortunate that we made a bet early on with AI. The AI landscape has matured and we can offer many different pieces."

The showcase customer at Google Cloud Next was Sphere, which, along with Google DeepMind, Google Cloud, Magnopus and Warner Bros. Discovery, reimagined "The Wizard of Oz" with a team of researchers, programmers, visual effects artists, AI engineers and archivists.

The "Wizard of Oz at the Sphere" effort revolved around using AI to adapt the 1939 movie to the Sphere and its sensory experience for an Aug. 28 debut. The companies developed a "super resolution" tool to turn celluloid frames from 1939 into high-definition imagery using Google Cloud models, and used AI outpainting and other models to expand the imagery to the scale of the Sphere's screen.

Kurian and Sphere CEO Jim Dolan said the goal was to honor the integrity of the original film and extend it to a new format. At a private screening event and keynote, Kurian described it as "almost like you were told to do AI and your first project was your PhD thesis, not your undergraduate."

Dolan added that it was the first time "I didn't feel like a customer. I felt like a partner. That's why this worked."

I saw the output from the collaboration, and it was striking how little AI screwed up the original.

Google Cloud's AI hypercomputer, custom silicon and integrated stack

Google Cloud announced Ironwood, its 7th generation TPU designed for faster model training and more efficient inference. Ironwood is the headliner, with 4x peak compute and 6x high bandwidth memory, but Google Cloud also launched advances in storage, inference and AI at the edge. Gemini will be available on Google Distributed Cloud infrastructure.

Ironwood is designed to power responsive AI models. It can scale up to 9,216 liquid-cooled chips linked through Inter-Chip Interconnect (ICI) networking. Developers can also use Google's Pathways software stack to combine tens of thousands of Ironwood TPUs.

Key items about Ironwood include:

- For Google Cloud customers, Ironwood comes in two sizes for AI workloads: a 256-chip configuration and a 9,216-chip configuration.

- When scaled to 9,216 chips per pod at 42.5 exaflops, Ironwood delivers more than 24x the compute power of the El Capitan supercomputer.

- Each Ironwood chip has 192 GB of High Bandwidth Memory (HBM) capacity.
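The pod-level figures above imply per-chip and aggregate numbers that are easy to check. The minimal Python sketch below uses only the figures quoted in this article (peak values). The article does not state the numeric precision behind the 42.5-exaflop figure, so the "implied El Capitan baseline" is simply the ratio of the quoted numbers, not an independent benchmark.

```python
# Figures quoted in the article (peak values as announced).
POD_CHIPS = 9_216
POD_EXAFLOPS = 42.5
HBM_GB_PER_CHIP = 192
EL_CAPITAN_MULTIPLE = 24  # "more than 24x", treated here as exactly 24

# 1 exaflop = 1,000 petaflops, so divide the pod total across its chips.
per_chip_pflops = POD_EXAFLOPS * 1_000 / POD_CHIPS

# Aggregate HBM across a full pod, converting decimal GB to PB.
pod_hbm_pb = POD_CHIPS * HBM_GB_PER_CHIP / 1_000_000

# The El Capitan figure implied by the "24x" comparison.
implied_el_capitan_ef = POD_EXAFLOPS / EL_CAPITAN_MULTIPLE

print(f"Peak compute per chip: {per_chip_pflops:.2f} PFLOPS")
print(f"Aggregate HBM per pod: {pod_hbm_pb:.2f} PB")
print(f"Implied El Capitan baseline: {implied_el_capitan_ef:.2f} EF")
```

Put another way, a full pod works out to roughly 4.6 petaflops of peak compute per chip and about 1.77 PB of HBM in aggregate.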

For Google Cloud, integration across its hardware stack is critical. Kurian said Google Cloud's stack is meant to address training as well as inference. In addition to Ironwood, Google Cloud announced:

- Hyperdisk Exapools, the next generation of block storage with up to exabytes of capacity and terabytes per second of performance per AI cluster.

- Rapid Storage, a new Cloud Storage zonal bucket that improves latency and features 20x faster data access and 6TB/s throughput.

- Cloud Storage Anywhere Cache, which reduces latency by up to 70% with 2.5TB/s throughput. Anthropic is a big user.

- A fully managed zonal parallel file system called Google Cloud Managed Lustre, as well as Google Cloud Storage Intelligence, which provides insights specific to a customer's environment.

- A new GKE AI Inference Gateway to load balance inference requests.

- Cloud WAN, which provides 40% lower latency and up to 40% savings. Cloud WAN ensures that traffic destined for Google's WAN enters and exits Google's high-performance network at the geographically closest point of presence.

- Gemini on Google Distributed Cloud, which will run Gemini models on customers' on-premises infrastructure. Agentspace and Vertex AI will also be available on Google Distributed Cloud.

- Google Cloud also launched optimized software for AI training and inference, including Cluster Director; Pathways, Google's internal machine learning runtime; and vLLM for TPUs, an efficient inference library that can be used with TPUs across Compute Engine, GKE, Vertex AI and Dataflow.

Here's the overview of what's new on the infrastructure front.

Agent development tools and models

With Google Cloud Next, the company is making it clear that it's a notable cog in multi-agent systems and development tools. The company launched an open source Agent Development Kit and Agent Engine with support for the Model Context Protocol (MCP), connectors to many players in the enterprise stack, and Agent2Agent, a communications standard that lets agents talk to each other.

More details include:

- Open Source Agent Development Kit and Agent Engine are designed as a combination that enables developers to build multi-agent applications. The kit will launch in Python with MCP support, and other languages will be added in the future.

- More than 100 connectors to access enterprise data and use cases. These pre-built connectors are designed for systems like Salesforce, ServiceNow, Jira, SAP, UiPath, Oracle and others.

- Agent2Agent enables communication across agent frameworks so agents can securely collaborate, manage tasks, negotiate UX and discover capabilities.

Of those items, Agent2Agent (A2A) is the headliner.

Combined with MCP, Google Cloud's A2A effort highlights how agent interoperability standards have gone from nothing to something viable in just five months. Google Cloud said A2A has the support of more than 50 technology partners, including Atlassian, Box, Intuit, LangChain, MongoDB, Salesforce, SAP, ServiceNow, UKG and Workday. Systems integrators across the board are supporting A2A.

Google Cloud emphasized that A2A complements MCP: while MCP connects agents to tools and data, A2A focuses on collaboration among AI agents regardless of their underlying technology.
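The capability-discovery piece of A2A can be illustrated with a small, hypothetical sketch: each agent publishes a descriptor of the skills it offers, and a client filters those descriptors to find agents that can take on a task, no matter which framework built them. The `AgentCard` class, its fields, the `discover` function and the example endpoints are illustrative assumptions, not the A2A specification's schema or any Google SDK.

```python
from dataclasses import dataclass, field


@dataclass
class AgentCard:
    """Hypothetical capability descriptor an agent might publish (illustrative, not the A2A schema)."""
    name: str
    endpoint: str
    skills: set[str] = field(default_factory=set)


def discover(cards: list[AgentCard], required_skill: str) -> list[AgentCard]:
    """Return the agents that advertise the requested skill, whatever framework built them."""
    return [card for card in cards if required_skill in card.skills]


# Hypothetical registry of agents built on different frameworks.
registry = [
    AgentCard("hr-agent", "https://hr.example.com/agent", {"time_off", "benefits"}),
    AgentCard("it-agent", "https://it.example.com/agent", {"password_reset", "laptop_request"}),
    AgentCard("travel-agent", "https://travel.example.com/agent", {"flight_booking", "time_off"}),
]

matches = discover(registry, "time_off")
print([card.name for card in matches])  # ['hr-agent', 'travel-agent']
```

The design point is that the client only needs the published descriptor, not knowledge of how each agent is implemented. That framework-agnostic collaboration is what A2A is meant to standardize.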

Not surprisingly, Google Cloud rolled out a series of models that'll be on Vertex AI. Gemini 2.5 Flash and Pro are designed for complex reasoning, and the company added a series of next-gen offerings such as Veo 2.0, Lyria and Chirp 3.

But the most interesting item on the model front was Model Optimizer, an experimental service that uses Vertex AI's evaluation service to apply the best AI model for a given use case and set of requirements. Model Optimizer will allow customers to tailor which models are used to meet a variety of business objectives.
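Google did not detail how Model Optimizer works internally. The sketch below is a hypothetical illustration of the general idea: pick a model by weighing evaluation quality against cost under a latency budget. Model names, scores, costs and latencies are invented placeholders, not Vertex AI evaluation results, and `pick_model` is not a Google API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    quality: float      # hypothetical evaluation score in [0, 1], higher is better
    cost_per_1k: float  # hypothetical dollars per 1,000 requests, lower is better
    latency_ms: float   # hypothetical median latency, lower is better


def pick_model(candidates: list[Candidate], max_latency_ms: float,
               quality_weight: float = 0.7, cost_weight: float = 0.3) -> Candidate:
    """Pick the candidate with the best weighted quality/cost trade-off within a latency budget."""
    eligible = [c for c in candidates if c.latency_ms <= max_latency_ms]
    if not eligible:
        raise ValueError("no model meets the latency budget")
    max_cost = max(c.cost_per_1k for c in eligible)

    def score(c: Candidate) -> float:
        # Normalize cost to [0, 1] so cheaper models earn a higher cost score.
        cost_score = (1 - c.cost_per_1k / max_cost) if max_cost else 1.0
        return quality_weight * c.quality + cost_weight * cost_score

    return max(eligible, key=score)


candidates = [
    Candidate("model-a", quality=0.92, cost_per_1k=4.00, latency_ms=900),
    Candidate("model-b", quality=0.85, cost_per_1k=1.00, latency_ms=300),
    Candidate("model-c", quality=0.70, cost_per_1k=0.20, latency_ms=150),
]

print(pick_model(candidates, max_latency_ms=500).name)   # model-c
print(pick_model(candidates, max_latency_ms=1000).name)  # model-b
```

Changing the weights or the latency budget changes the winner. Passing `quality_weight=1.0, cost_weight=0.0` with a 1,000 ms budget selects `model-a`. That is the kind of objective-driven tailoring the announcement describes.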

Here’s the rundown:

- Gemini 2.5 Flash & Pro will be available on Vertex AI. Google Cloud also said that Veo 2.0, Lyria and Chirp 3 will bring new video and audio generation and understanding capabilities to the platform.

- Open Source Agent Development Kit, a framework for building multi-agent systems.

- Agent Engine, which enables customers to deploy agents from any framework to a fully managed runtime.

Agents everywhere

Google Cloud is also building out Agentspace, which lets companies build their own agents as well as use prebuilt ones. Agents are being added to Google Cloud's Customer Engagement Suite, Data Cloud's engineering, data science and analytics tools, Security Suite and Workspace.

Google Cloud is ensuring that enterprises have multiple touchpoints to access and procure agents via Agentspace and its broader platform. The company launched:

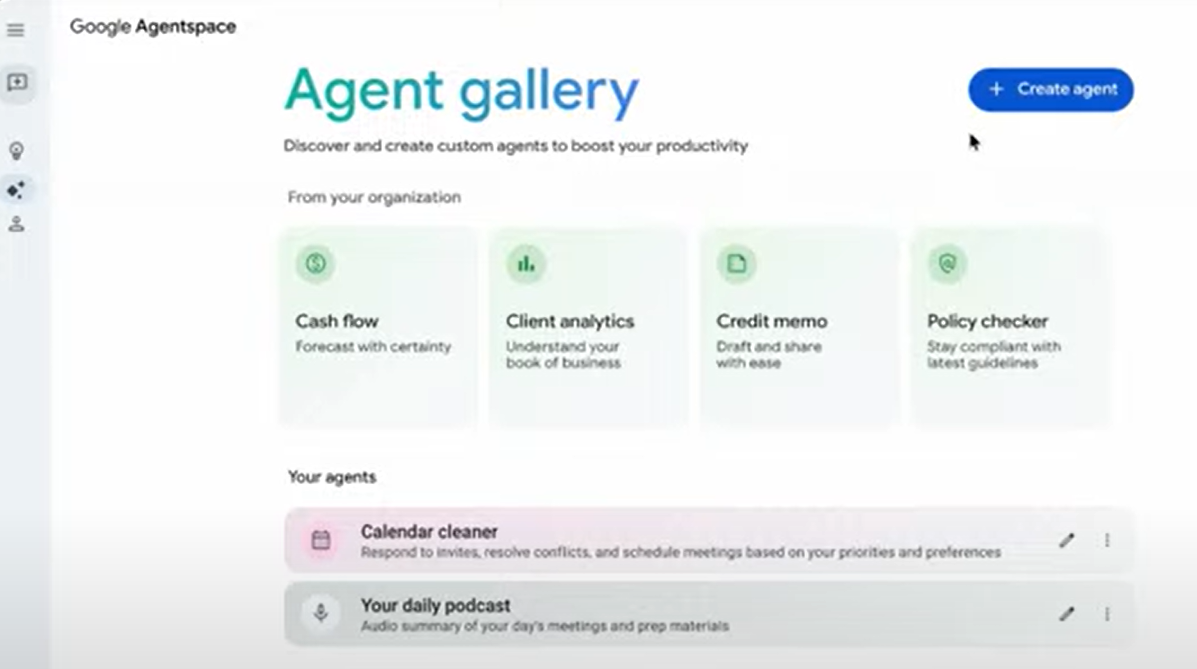

- Agentspace Agent Gallery, a curated set of agents from Google, customers and partners.

- Customer Engagement Suite updates that use Google AI to build AI agents with multimodal capabilities and human-like voices.

- Use case-specific agents such as the Food Ordering AI Agent, designed for quick-service restaurants that operate in multiple languages. Google Cloud also announced the Automotive AI Agent, which gives automakers the ability to create and deliver custom in-vehicle assistants.

- Customer Engagement Suite gets conversational agents in a new unified console; AI Coach and AI Trainer to help human agents upsell; and prebuilt agents for flight booking, ticketing, appointments and shopping assistance.

- Customer Engagement Suite is getting the ability to use Google's latest voice technologies so agents can sound human.

- Google outlined an Idea Generation Agent, which runs a tournament of ideas to evaluate them, as well as a Deep Research agent.

- NotebookLM integration with Agentspace for natural language search results.

- Developers will get Cloud Hub, which provides top insights across applications and infrastructure, as well as agentic AI application capabilities in Firebase Studio, Code Assist Agents and new tools in Cloud Assist.

- Data Cloud gets Data Agents tailored to users, Looker and AlloyDB integration with Agentspace, and LLM integration directly into BigQuery and AlloyDB.

- On the security front, Google Security Operations will get an alert triage agent to investigate and respond to alerts. Google Cloud is also adding a malware analysis agent in Google Threat Intelligence.

- Workspace will feature a series of agents and Gemini tools throughout the platform.

- Google Cloud announced Google Workspace Flows to automate work with the help of AI agents.
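The Idea Generation Agent is described as running a tournament of ideas, but the mechanism was not detailed. The sketch below shows one plausible format, a single-elimination bracket, under stated assumptions. The `judge` callable is a hypothetical stand-in for whatever comparison a real agent would make, such as a model-based pairwise evaluation. Here it simply prefers the more detailed idea so the example runs on its own.

```python
from typing import Callable


def run_tournament(ideas: list[str], judge: Callable[[str, str], str]) -> str:
    """Single-elimination tournament: pair ideas, keep each pairing's winner, repeat until one remains."""
    if not ideas:
        raise ValueError("need at least one idea")
    current = list(ideas)
    while len(current) > 1:
        next_round = [judge(current[i], current[i + 1]) for i in range(0, len(current) - 1, 2)]
        if len(current) % 2 == 1:
            next_round.append(current[-1])  # an odd idea out gets a bye to the next round
        current = next_round
    return current[0]


def longer_idea(a: str, b: str) -> str:
    """Hypothetical stand-in judge: prefers the longer (more detailed) idea."""
    return a if len(a) >= len(b) else b


ideas = ["loyalty app", "drive-thru voice ordering in 10 languages", "menu kiosk", "AI upsell coach"]
print(run_tournament(ideas, longer_idea))  # drive-thru voice ordering in 10 languages
```

A bracket needs only about n - 1 pairwise judgments for n ideas. That keeps evaluation cost manageable when each comparison is an expensive model call, though other formats, such as round-robin, are equally plausible.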

Here’s a look at how Google Cloud’s agent stack comes together.
