AI infrastructure spending is upending enterprise financial modeling

When a technology is evolving as fast as artificial intelligence, CFOs and finance departments struggle to crowbar AI infrastructure investments into traditional depreciation models.

Typically, enterprises depreciate capital spending on IT infrastructure over multiple years. For instance, servers have a useful life of 7 years, according to the IRS. Hyperscale cloud companies periodically adjust the useful life they assign to servers. Meta, for example, said in its annual report that certain servers and network assets had a useful life of 5.5 years for fiscal 2025. Amazon now reckons the useful life of some of its servers is 5 years, down from 6 years a year ago. Alphabet depreciates its servers over 6 years.
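To see why a single year of useful life matters, here is a minimal Python sketch that applies straight-line depreciation to the lives cited above. The $1,000,000 purchase price, the zero salvage value and the straight-line method itself are illustrative assumptions; actual tax depreciation (such as the IRS's MACRS rules) and each company's book accounting follow their own conventions.

```python
def straight_line_annual_expense(cost: float, useful_life_years: float, salvage: float = 0.0) -> float:
    """Annual depreciation expense under the straight-line method (illustrative)."""
    if useful_life_years <= 0:
        raise ValueError("useful life must be positive")
    # Spread the depreciable amount evenly over the useful life.
    return (cost - salvage) / useful_life_years


# Hypothetical $1M server purchase with no salvage value; not real company data.
COST = 1_000_000.0
USEFUL_LIVES = {
    "IRS (as cited)": 7,
    "Alphabet": 6,
    "Meta (certain servers)": 5.5,
    "Amazon (some servers)": 5,
}

if __name__ == "__main__":
    for label, life in USEFUL_LIVES.items():
        expense = straight_line_annual_expense(COST, life)
        print(f"{label:>24}: {life:>4} years -> ${expense:,.0f} per year")
```

Under these assumptions, cutting the useful life from 7 years to 5 raises the annual expense on the same purchase from about $142,857 to $200,000, which is why even small changes to the schedule move reported earnings.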

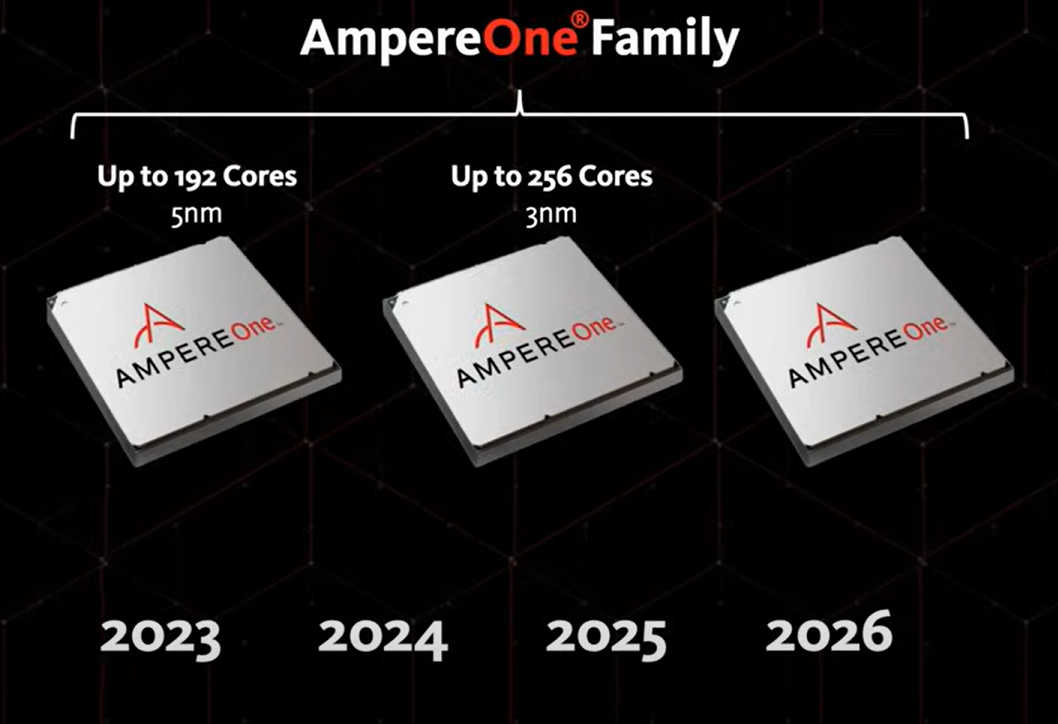

Here's the catch: 5 years in AI infrastructure is a lifetime. Nvidia now releases GPUs on an annual cadence and provides visibility into its roadmap through 2027. CEO Jensen Huang's bet is that Nvidia customers will keep investing in the latest and greatest accelerated computing infrastructure because doing so delivers value and competitive advantage.


Should companies go with shorter depreciation cycles, lease gear or simply provision from the cloud, even though operating expenses are already stretched? As Nvidia extends further into enterprise and industry applications, this financial planning conundrum is going to go mainstream. It's no wonder that Nvidia has teams focused on financial solutions.

With that in mind, Nvidia held a virtual panel at GTC 2025 focused on these accounting issues. What does traditional planning look like when AI is advancing too quickly for typical technology refresh cycles?

Bill Mayo, SVP for research IT at Bristol Myers Squibb, has been investing in AI and machine learning for more than a decade, but AI is advancing faster today than ever. "The challenge is we've had this probably first and second derivative improvement in the pace of change that has completely broken financial models up and down the stack," said Mayo.

Richard Zoontjens, lead of the supercomputing center at Eindhoven University of Technology, said "we really need compute to compete in this era and that means financial systems have to support this fast moving world."

Zoontjens noted that the current AI cycle doesn't fit in with typical depreciation schedules and financial models. "If you buy new tools every five years, well you're not competing anymore. After two years, you lose talent and you lose innovation," he said.

The solution is that financial modeling will have to move faster. Mayo noted that Bristol Myers Squibb (BMS) has seen rapid improvement in compute, but still not enough to realize its vision for biology. "At its core, biology is computation," said Mayo.

Mayo said that if you're still using today's tech stack five years from now, you're behind. BMS hasn't figured out the financial model behind AI investments yet, he said, but its first move was to shift to a four-year depreciation cycle.

Zoontjens said his group opted for a flexible two-year renewal cycle, and flexibility is key: sometimes his supercomputing center can stretch a system's life, and sometimes it has to upgrade faster.
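One way to frame the gap between these approaches is how much undepreciated value is still on the books when hardware has to be replaced. The Python sketch below is hypothetical: it assumes straight-line depreciation, zero salvage value, a $1,000,000 system and replacement after two years (echoing Zoontjens' point about falling behind after two years). It does not describe how BMS or Eindhoven actually account for their systems, and a lifecycle management contract may not be booked as depreciation at all.

```python
def net_book_value(cost: float, useful_life_years: float, years_in_service: float) -> float:
    """Remaining undepreciated value under straight-line depreciation, zero salvage (illustrative)."""
    if useful_life_years <= 0:
        raise ValueError("useful life must be positive")
    # Clamp elapsed time to [0, useful life]; an asset can't depreciate below zero.
    years = min(max(years_in_service, 0.0), useful_life_years)
    return cost - cost * years / useful_life_years


COST = 1_000_000.0   # hypothetical system price
REPLACE_AFTER = 2    # hypothetical: hardware swapped out after two years

if __name__ == "__main__":
    for life in (5, 4, 2):
        stranded = net_book_value(COST, life, REPLACE_AFTER)
        print(f"{life}-year schedule, replaced after {REPLACE_AFTER} years: "
              f"${stranded:,.0f} still on the books")
```

Under these assumptions, a five-year schedule leaves $600,000 (60% of cost) undepreciated when the system is replaced after two years, versus $500,000 on a four-year schedule and nothing on a two-year cycle.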

"The system and the lifecycle management contract that we have now provides that flexibility," said Zoontjens. "It gives us control and better agility to move and stay state of the art."

Mayo said AI investment and modeling has to revolve around the patient population and have the best insights to improve lives. That alignment helps with the costs, but BMS AI infrastructure is an operating expense due to cloud delivery. The problem is that cloud provider demand for AI infrastructure is high. Mayo said:

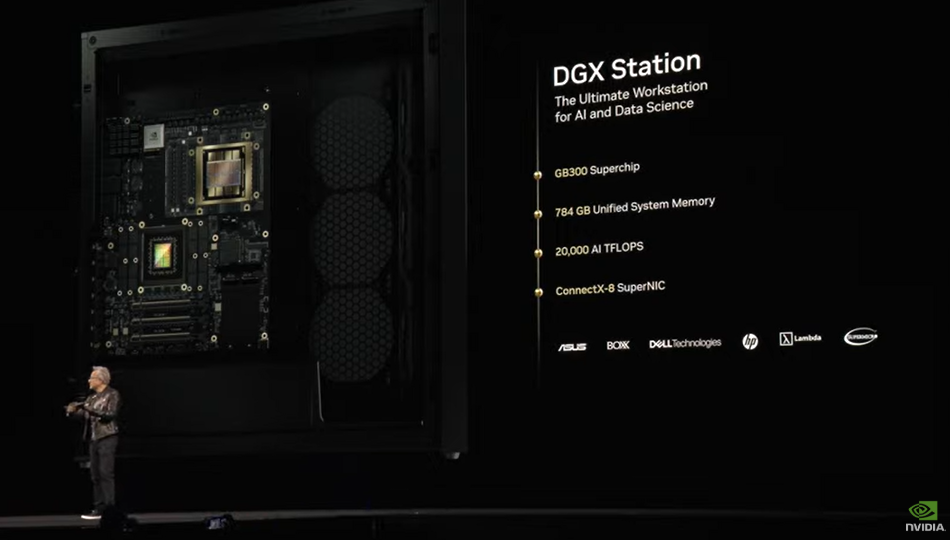

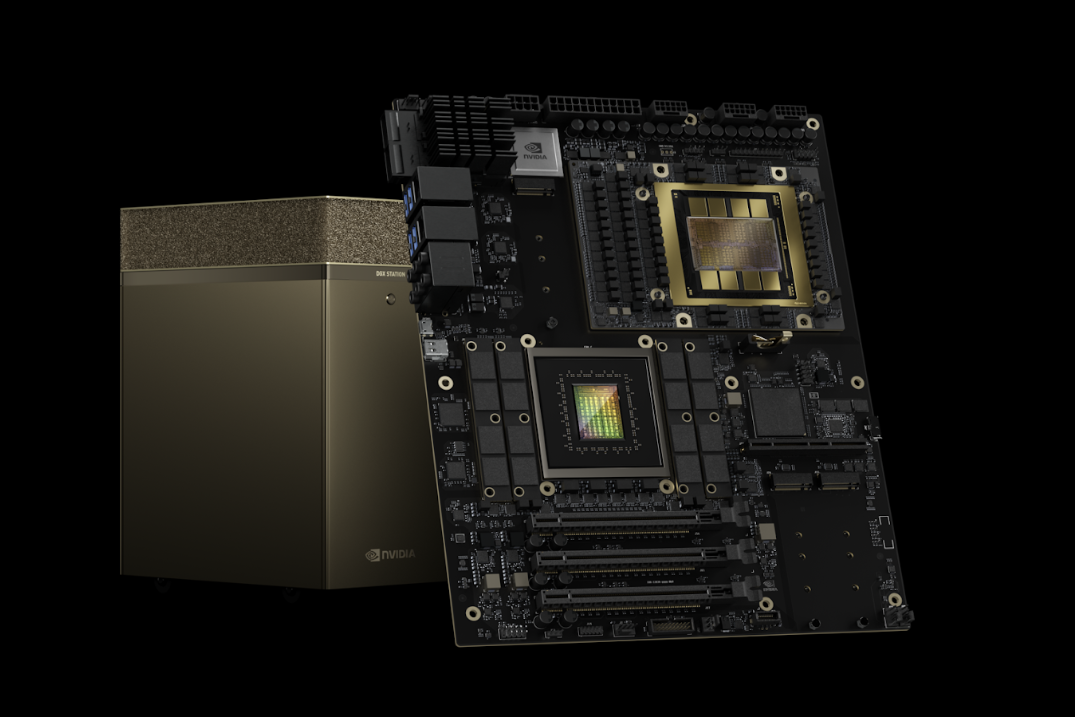

"We can't afford to buy a new (Nvidia) Super Pod every year and just use it for a year. I can't afford it at an OPEX rate, and frankly, neither can hyperscalers afford to buy enough to make it available fast enough that we can all consume that way."

Mayo said the havoc hitting financial models may prove transitory, adding that on-premises, co-located infrastructure and cloud AI services are all in the mix. "The fact of the matter is, I'm buying through a time window. Maybe three years from now, the financing problem might have solved itself, but TBD on that," said Mayo.

Here are a few themes on financing AI infrastructure from the IT buyers as well as from Nvidia's Timothy Shockley, global sales at Nvidia Financial Solutions, and Ingemar Lanevi, head of Nvidia Capital:

- Plan for data center investments that incorporate power and space savings. Each new generation of Nvidia systems has needed less space.

- Plan for more agile upgrade cycles to keep enough capacity to compete in your industry.

- There's no single right answer that covers all the financial bases, so expect a mix of cloud and on-prem decisions.

- Long-term depreciation will be an issue for the foreseeable future.

- Long-term cloud contracts and leases can be a challenge.

- Cross-functional teams will have to make financing decisions based on what needs to be achieved now and where things are likely to move later.

- Leasing models may make sense for AI infrastructure at the moment, both for cash flow reasons and to build in upgrades.

- A secondary market for AI infrastructure may emerge for what Mayo called "gently used SuperPODs." The accelerated computing market is young relative to the CPU market, so a secondary market may take time to develop.

- Enterprises may look to monetize the residual value of AI infrastructure once it's no longer useful to the original buyer.

- Segment investments by what needs to be cutting edge and adjust the financial model accordingly. Investments that don't need to be cutting edge can be depreciated over a longer period.

- Today, financial systems govern AI infrastructure spending, but that may have to flip in the future so that financial planning accounts for product cycles.

Mayo added that the disconnect between financial planning and the AI opportunity is just a moment in time.

"It's going to get solved. There's a right answer for the use case or the situation you're grappling with right now. Maybe it's a funding model, maybe it's a cash constraint. As long as we're open to try whatever, we're going to solve this problem, and then we're going to use this solution to solve all the other problems."
