Distinguished Chair of the Accelerator & Principal/CEO

Stimson Center & LeadDoAdapt Ventures

Dr. David A. Bray is a Distinguished Fellow and Chair of the Accelerator with the Alfred Lee Loomis Innovation Council at the non-partisan Henry L. Stimson Center. He is also a non-resident Distinguished Fellow with the Business Executives for National Security, and a CEO and transformation leader for different “under the radar” tech and data ventures seeking to get started in novel situations. He is Principal at LeadDoAdapt Ventures and has served in a variety of leadership roles in turbulent environments, including bioterrorism preparedness and response from 2000 to 2005. Dr. Bray previously was the Executive Director for a bipartisan National Commission on R&D, provided non-partisan leadership as a federal agency Senior Executive, worked with the U.S. Navy and Marines on improving…

Principal Analyst and Founder

Constellation Research

R “Ray” Wang is the CEO of Silicon Valley-based Constellation Research Inc. He co-hosts DisrupTV, a weekly enterprise tech and leadership webcast that averages 50,000 views per episode, and blogs at www.raywang.org. His ground-breaking best-selling book on digital transformation, Disrupting Digital Business, was published by Harvard Business Review Press in 2015. Ray's new book about Digital Giants and the future of business, titled Everybody Wants to Rule The World, was released in July 2021. Wang is well-quoted and frequently interviewed by media outlets such as the Wall Street Journal, Fox Business, CNBC, Yahoo Finance, Cheddar, and Bloomberg.

Short Bio

R “Ray” Wang (pronounced WAHNG) is the Founder, Chairman, and Principal Analyst of Silicon Valley-based Constellation…

A Rapidly Evolving Landscape; OpenAI's Disappearing Moat

We've been watching the generative AI landscape transform at breathtaking speed, and what concerns us most is how quickly the narrative around OpenAI has shifted from "unassailable market leader" to "company facing existential challenges." As leaders who have spent our careers at the intersection of technology, policy, and enterprise strategy, we believe that organizations making multimillion-dollar AI investments need to understand the broader context beyond the marketing hype.

The concept of a "moat" in business refers to sustainable competitive advantages that protect a company from competitors. OpenAI's initial moat was built on first-mover advantage, technical superiority, and massive funding. All three pillars are now showing significant cracks.

Microsoft, OpenAI's primary backer, has begun testing outside models from xAI, Meta, and even the Chinese company DeepSeek. Simultaneously, Apple appears to be reconsidering its OpenAI partnership and is now engaging with Google about Siri integration. These moves by two of the world's most valuable companies signal serious concerns about OpenAI's trajectory.

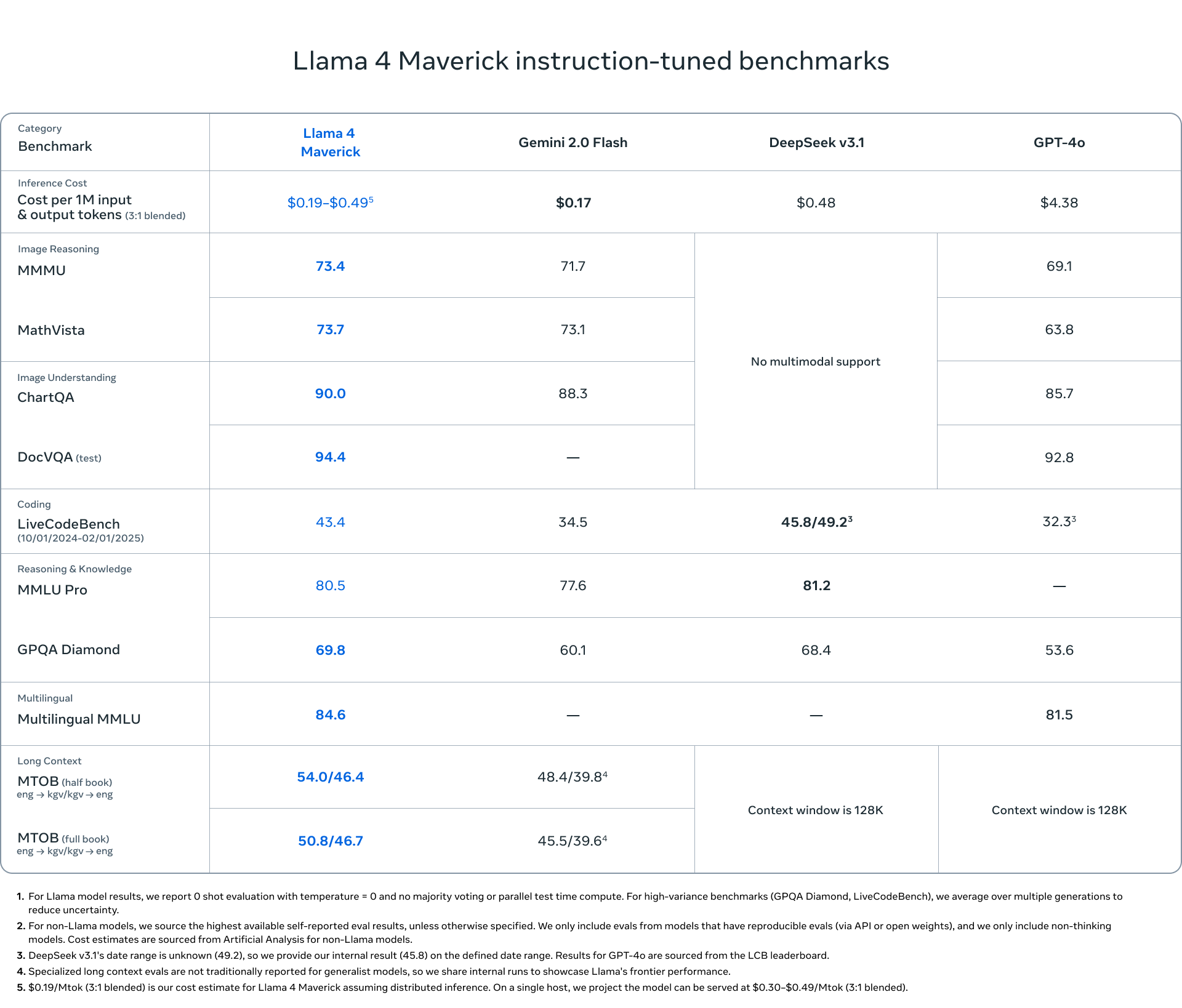

The technical superiority argument is also collapsing. OpenAI's rushed GPT-4.5 release reportedly shows a 30% error rate, significantly worse than both Anthropic's Claude 3.7 and xAI's Grok 3. When your core product underperforms relative to competitors, enterprise customers take notice.

Competition Is Intensifying; The Economics Don't Add Up

While OpenAI struggles, competitors are gaining momentum. Anthropic secured a $3 billion investment from Google and released Claude 3.7, which many consider technically superior to OpenAI's offerings. Elon Musk's xAI launched Grok 3 with impressive deep research capabilities. Even OpenAI's former CTO, Mira Murati, launched Thinking Machines Lab and raised $2 billion at a $9 billion valuation in just two weeks.

And we can't ignore developments from China. Within the last few weeks, a Chinese startup announced what it described as the world's first fully autonomous AI agent, called Manus. Unlike some overhyped Western announcements, Chinese AI capabilities have generally delivered on their promises. This raises both competitive and geopolitical considerations for enterprise leaders.

The financial picture is equally concerning. OpenAI is reportedly burning through $1 billion monthly and could lose up to $44 billion by next year. Sam Altman himself admitted that the company loses money on its $200/month ChatGPT Pro subscriptions. Its recent announcement of enterprise offerings priced between $2,000 and $20,000 per month appears to be a desperate attempt to stem these losses.

This pricing strategy reveals a company pivoting toward enterprise customers out of necessity rather than strength. But this market is already dominated by Microsoft, Amazon, and Google, who have decades-long relationships with Fortune 500 companies. OpenAI faces an uphill battle against entrenched competitors with deeper pockets and broader offerings.

Despite the recent headline-grabbing $40 billion funding round that catapulted OpenAI's valuation to $300 billion and reports that the company's revenue has grown by 30% in three months, the company still doesn't expect to break even until 2029—four years from now! This timeline raises serious questions about the sustainability of their business model, especially as they continue to burn through cash at an alarming rate.

In a telling strategic pivot, OpenAI has also announced plans to release an open-weights reasoning model that developers can run on their own hardware. This is a significant departure from its closed, subscription-based model and suggests an acknowledgment that its current approach may not stay competitive in the long term. The move looks like a course correction in response to mounting pressure from open-source alternatives and from competitors offering more flexible deployment options.

Strategic Implications for Enterprise Leaders

For CEOs, CTOs, CIOs, and CMOs, these developments necessitate a more sophisticated approach to AI strategy. The days of simply "partnering with OpenAI" as a complete AI strategy are over. We believe enterprise leaders need to consider:

- Geopolitical factors: How will US-China tensions affect your AI supply chain? What regulatory frameworks are emerging in different regions?
- Economic sustainability: Are your AI partners financially viable for the long term? What happens if they significantly raise prices or pivot their business models?
- Technical diversification: How can you build an AI architecture that isn't dependent on a single provider?

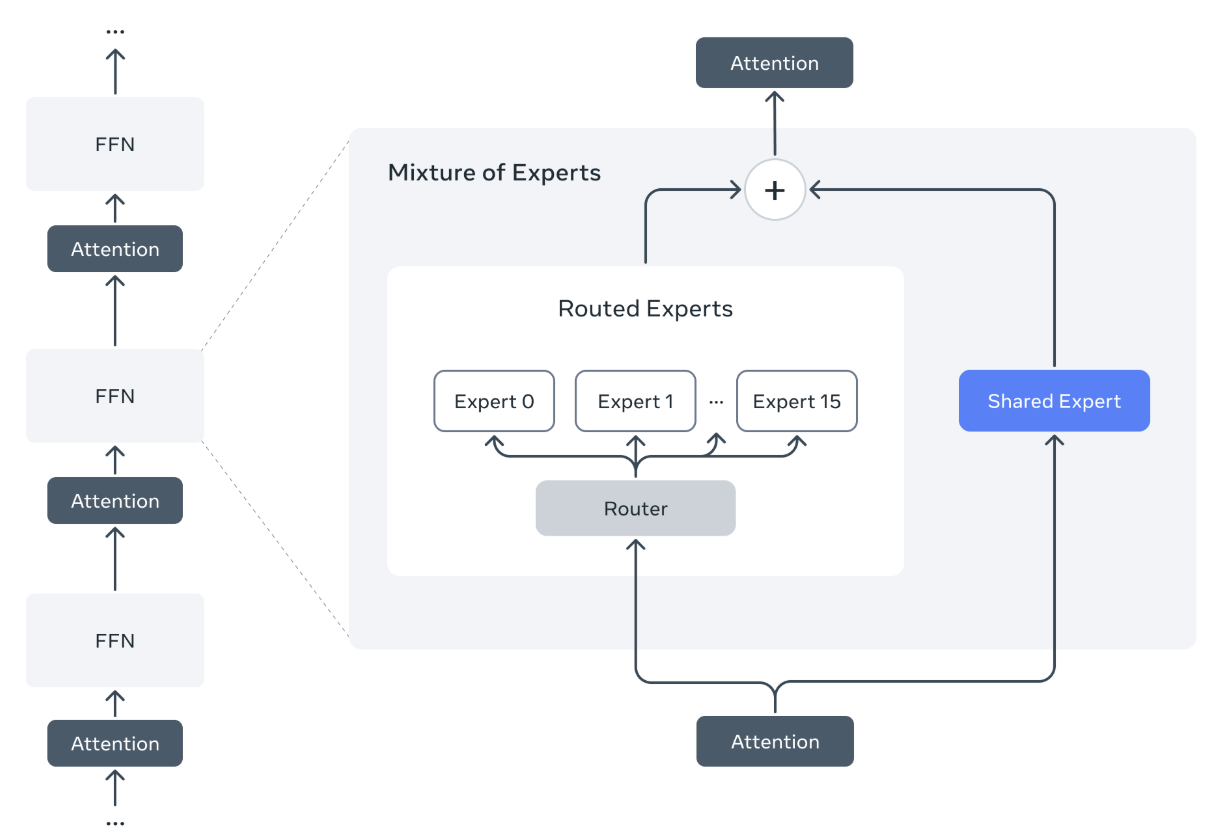

Enterprise clients can implement what we call a "multi-modal, multi-model" approach. This means leveraging different AI models for different use cases and maintaining the flexibility to switch providers as the landscape evolves. The companies that will win in the AI era aren't those that pick the "right" vendor today, but those that build adaptable AI architectures.
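To make the "multi-model" idea concrete, here is a minimal Python sketch of a provider-agnostic routing layer. It is an illustration under stated assumptions, not a reference design: `ModelRouter`, `ProviderError`, `vendor_a`, and `vendor_b` are hypothetical names, and the vendors are local stubs rather than real SDK calls. The point is that application code asks for a *use case* ("summarization"), not a vendor, so switching or adding providers becomes a configuration change with automatic fallback.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical provider signature: prompt in, text out.
# A real deployment would wrap each vendor's SDK behind this same shape.
Provider = Callable[[str], str]


class ProviderError(Exception):
    """Raised by a provider when a call fails (outage, quota, policy block)."""


@dataclass
class ModelRouter:
    # Provider name -> callable (names are illustrative placeholders).
    providers: Dict[str, Provider] = field(default_factory=dict)
    # Use case -> ordered list of preferred provider names.
    routes: Dict[str, List[str]] = field(default_factory=dict)

    def register(self, name: str, provider: Provider) -> None:
        self.providers[name] = provider

    def set_route(self, use_case: str, preference: List[str]) -> None:
        # Reject routes that point at providers nobody registered.
        unknown = [p for p in preference if p not in self.providers]
        if unknown:
            raise KeyError(f"unregistered providers: {unknown}")
        self.routes[use_case] = list(preference)

    def complete(self, use_case: str, prompt: str) -> str:
        # Try providers in preference order; fall back on failure.
        errors = []
        for name in self.routes[use_case]:
            try:
                return self.providers[name](prompt)
            except ProviderError as exc:
                errors.append(f"{name}: {exc}")
        raise ProviderError("all providers failed: " + "; ".join(errors))


# Hypothetical stub vendors standing in for real model APIs.
def vendor_a(prompt: str) -> str:
    raise ProviderError("rate limited")


def vendor_b(prompt: str) -> str:
    return f"[vendor_b] {prompt}"


if __name__ == "__main__":
    router = ModelRouter()
    router.register("vendor_a", vendor_a)
    router.register("vendor_b", vendor_b)
    router.set_route("summarization", ["vendor_a", "vendor_b"])
    # vendor_a fails, so the router falls back to vendor_b.
    print(router.complete("summarization", "Summarize Q3 results"))
```

Keeping the preference lists per use case lets a team send, say, drafting to one model and code review to another, and reorder them when pricing or quality shifts, without touching the calling code.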

OpenAI's current valuation of roughly $300 billion seems increasingly disconnected from economic reality. While the company deserves credit for catalyzing the current AI revolution, enterprise leaders need to recognize that we're entering a new phase in which multiple players will drive innovation.

The next 18 months will be critical. We'll see consolidation among smaller AI companies, continued heavy investment from tech giants, and potentially surprising moves from nation-states viewing AI as critical infrastructure. Enterprise leaders need to stay informed not just about the technology, but about these broader market and geopolitical dynamics.

The bottom line for enterprise leaders: your AI strategy needs to be as sophisticated as the technology itself.

Look beyond the hype, consider the full spectrum of factors at play, and build flexibility into your approach. We believe the latest "wave" of the current AI revolution is just beginning, and the winners will be those who navigate its complexities with clear-eyed strategic thinking.
