CoreWeave's IPO: What you need to know

CoreWeave filed to go public and it's unclear whether the company's debut on the stock market will be a signal of an AI infrastructure top or just a milepost on a multi-year buildout.

One thing is clear: CoreWeave has crazy revenue growth, depends on two customers and is losing hefty sums.

Welcome to the AI infrastructure boom of 2025 (or is that bust?). The feds, OpenAI and others launched Stargate to invest in AI data centers in the US. TSMC is building in the US as geopolitics and AI infrastructure commingle. Capital spending from the likes of Microsoft, Meta, Alphabet and AWS continues to ramp. There's a fancy new large language model (LLM) daily. And Nvidia puts up record growth that Wall Street is taking for granted. Even bitcoin miners are in on the AI infrastructure boom.

This AI infrastructure boom will work well...until it doesn't. DeepSeek spurred fears that we may not need all of this AI infrastructure. Don't worry though. All the big spenders assure us that the capacity is worth it. Erring on the side of not spending a gazillion dollars on AI infrastructure is the real mistake. We're in the FOMO round for GPUs and AI data centers.

- GenAI's 2025 disconnect: The buildout, business value, user adoption and CxOs

- AI data center building boom: Four themes to know

- DigitalOcean highlights the rise of boutique AI cloud providers

- Blackstone's data center portfolio swells to $70 billion amid big AI buildout bet

- The generative AI buildout, overcapacity and what history tells us

With that backdrop, here's what you need to know about CoreWeave, which will be an IPO worth watching simply for AI infrastructure sentiment.

What is CoreWeave? CoreWeave is an AI infrastructure specialist. The company is in the right place at the right time with AI infrastructure. CoreWeave has more than 250,000 GPUs online, 1.3 gigawatts of contracted power, 32 data centers and $15.1 billion in 2024 remaining performance obligations.

The offering, a rocky start and a lower price. CoreWeave initially said it would trade on the Nasdaq under the ticker "CRWV." It planned to offer 47,178,660 Class A shares, priced between $47 and $55 a share.

And then things got rocky for CoreWeave. In the run-up to its IPO, CoreWeave became a referendum on AI infrastructure spending, and the company wound up cutting both the size of its offering and the price. Ultimately, CoreWeave offered 37.5 million shares, down from 49 million shares, priced at $40. That $40 price per share was below the low end of the initial $47 to $55 range.

The issue? The Financial Times reported that CoreWeave breached some terms of its $7.6 billion loan in 2024 and triggered defaults. Blackstone amended terms and waived the defaults. In addition, there are signs that big AI data center spenders may be pulling back on aggressive expansion plans. However, CoreWeave remains the biggest US tech IPO since 2021 with plans to raise $1.5 billion.
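For readers who want to check the math, the raise is simply shares times price. The sketch below is a back-of-the-envelope calculation, not a figure from the filing: it assumes gross proceeds before underwriting fees and expenses, and it applies the original $47 to $55 range to the 49 million share figure cited above. The function name `gross_proceeds` is made up for illustration.

```python
def gross_proceeds(shares: float, price_per_share: float) -> float:
    """Gross proceeds in dollars (assumption: before fees and expenses)."""
    return shares * price_per_share


# Final terms reported above: 37.5 million shares at $40.
final_raise = gross_proceeds(37.5e6, 40.0)

# Initial plan (assumption: 49 million shares across the $47 to $55 range).
initial_low = gross_proceeds(49e6, 47.0)
initial_high = gross_proceeds(49e6, 55.0)

print(f"Final raise: ${final_raise / 1e9:.2f} billion")
print(f"Initial plan: ${initial_low / 1e9:.2f} to ${initial_high / 1e9:.2f} billion")
```

Under those assumptions, the final terms line up with the $1.5 billion raise, and the original plan would have brought in roughly $2.3 billion to $2.7 billion.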

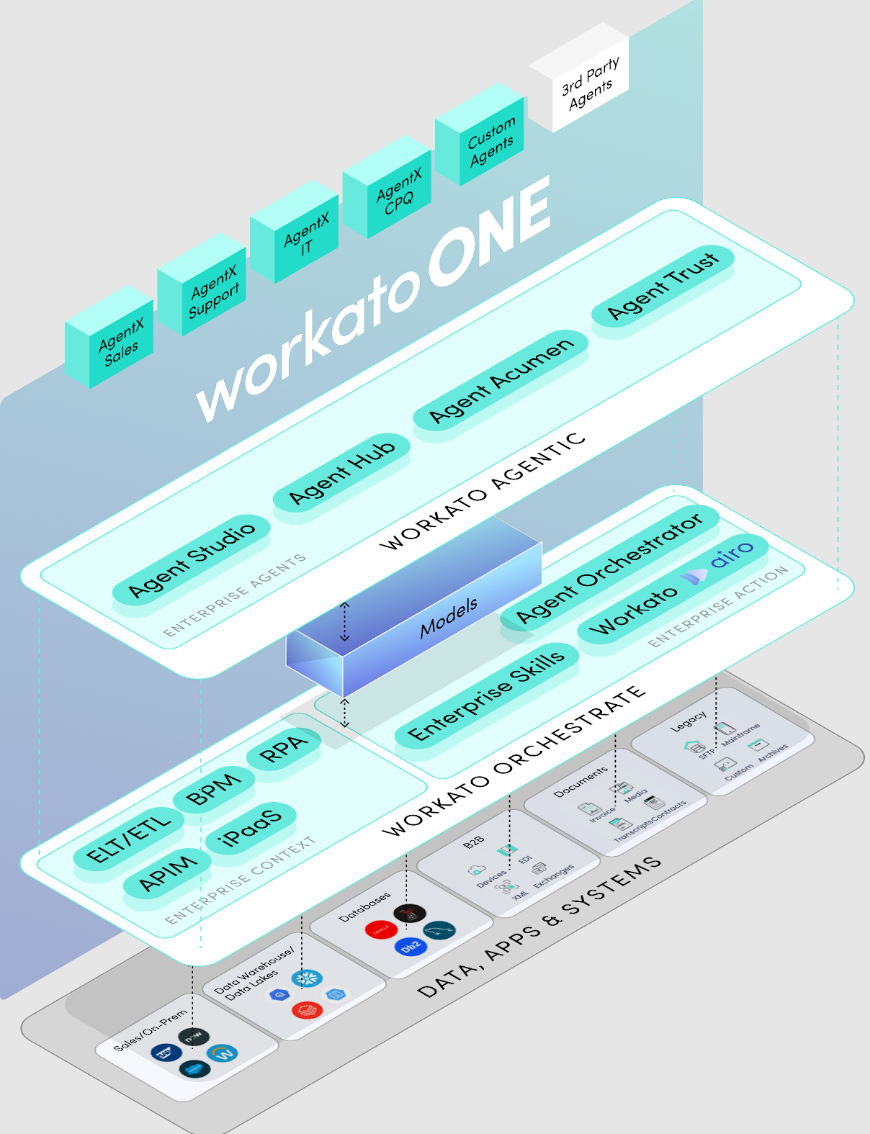

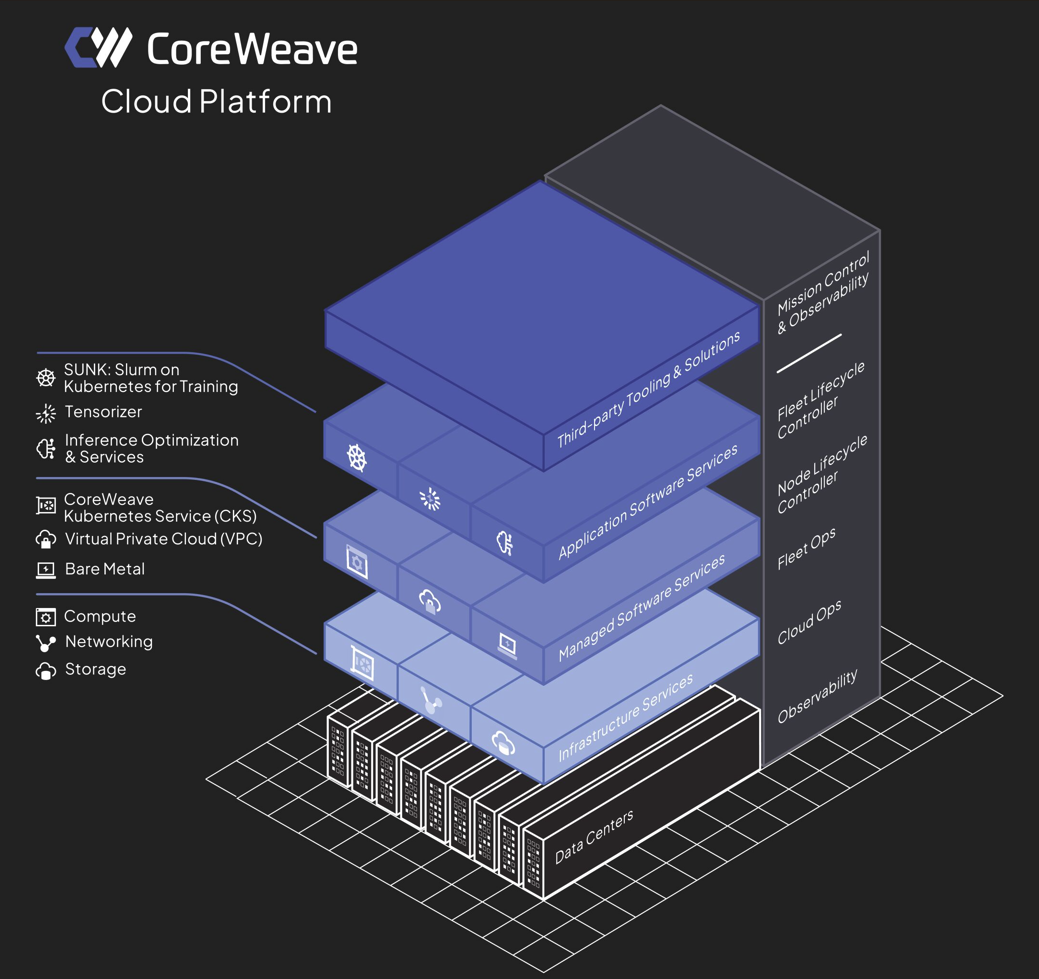

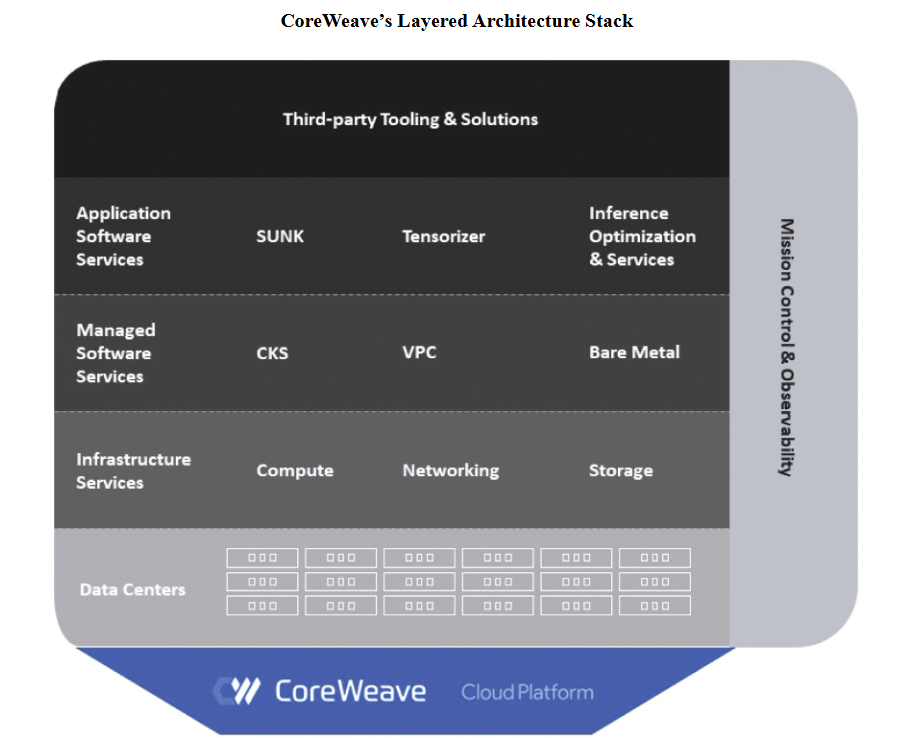

The AI stack. CoreWeave's stack of services is designed for AI workloads. CoreWeave's Cloud Platform is designed for uptime and for reducing the friction of engineering, assembling, running and monitoring infrastructure for AI workloads. CoreWeave has an Nvidia H100 Tensor Core GPU cluster with Nvidia Blackwell coming online. The infrastructure is designed for training as well as inference.

"This market is not all about the big cloud vendors in the AI era, but also about smaller vendors in a good position with alternate offerings," said Constellation Research analyst Holger Mueller. "Smaller vendors usually try to win CxOs over due to the simplicity of their offering. A good example is CoreWeave, specializing on GPUs. We will see how it does commercially when it goes public."

The company said:

"We were among the first to deliver NVIDIA H100, H200, and GH200 clusters into production at AI scale, and the first cloud provider to make NVIDIA GB200 NVL72-based instances generally available. We are able to deploy the newest chips in our infrastructure and provide the compute capacity to customers in as little as two weeks from receipt from our OEM partners such as Dell and Super Micro."

The stack looks like this:

CoreWeave's mission is to utilize compute more efficiently for model training and inference. "We believe the AI revolution requires a cloud that is performant, efficient, resilient, and purpose-built for AI," the company said.

CoreWeave will use acquisitions to build out its stack. The company announced the acquisition of Weights & Biases, a major player in the MLOps and LLMOps ecosystem. The deal will give CoreWeave the ability to manage machine learning and model operations.

"Our combined capabilities will help you get real-time model performance monitoring and robust orchestration, providing you with a powerful AI application development workflow which can accelerate time to production and get your AI innovations to market even faster," said CoreWeave.

Mueller said:

"CoreWeave management has understood that CxOs want to buy complete solutions and forays into AI application development and related operations. The result is a turnkey cloud that allows to build and operate AI powered next generation application and in one offering."

Customers. In its SEC filing, CoreWeave noted that its customers include IBM, Meta, Microsoft, Mistral and Nvidia. The company also announced a multi-year deal with OpenAI. CoreWeave will provide AI infrastructure to OpenAI in a contract valued at $11.9 billion. OpenAI will become an investor in CoreWeave via the issuance of $350 million of CoreWeave stock.

Services offered. CoreWeave offers infrastructure cloud services but also has managed software, application software services as well as its Mission Control and observability software.

The debt funding growth. CoreWeave has financed its expansion with debt--$12.9 billion through Dec. 31, 2024, to be exact. That debt is backed by its assets and multi-year committed contracts. RPO was $15.1 billion at the end of 2024, up 53% from a year ago.

Blackstone and Magnetar funded CoreWeave's most recent private debt.

CoreWeave's revenue growth is stunning. In 2022, the company had revenue of $16 million. In 2023, revenue was $229 million. And by 2024, revenue surged to $1.9 billion, up 737% from a year ago. Net losses also surged. In 2024, CoreWeave had a net loss of $863 million, or $65 million on an "adjusted" basis.
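The growth rates can be reproduced from the revenue figures above. This is a rough sketch using the rounded numbers in this article, so the 2024 result lands near 730% rather than the reported 737%; the gap presumably reflects rounding of the $1.9 billion figure, which is an assumption here. The helper name `pct_growth` is illustrative.

```python
def pct_growth(prior: float, current: float) -> float:
    """Year-over-year growth in percent."""
    return (current - prior) / prior * 100.0


# Revenue in millions of dollars, rounded as cited in the article.
revenue_musd = {2022: 16.0, 2023: 229.0, 2024: 1900.0}

growth_2023 = pct_growth(revenue_musd[2022], revenue_musd[2023])
growth_2024 = pct_growth(revenue_musd[2023], revenue_musd[2024])

print(f"2023 growth: {growth_2023:.0f}%")
print(f"2024 growth: {growth_2024:.0f}%")
```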

Expansion. CoreWeave's plan is to capture more workloads from existing customers, extend into new industries, land enterprise customers and grow internationally. CoreWeave also plans to maximize the economic life of infrastructure. Judging from hyperscalers, simply extending the useful life of servers can dramatically boost earnings. CoreWeave segments customers into AI natives and enterprises.

The risks. CoreWeave's biggest risk is that 77% of its revenue comes from its top two customers. Microsoft was 62% of revenue in 2024. A deal with OpenAI means CoreWeave will likely be dependent on its top three customers. The company said:

"Any negative changes in demand from Microsoft, in Microsoft’s ability or willingness to perform under its contracts with us, in laws or regulations applicable to Microsoft or the regions in which it operates, or in our broader strategic relationship with Microsoft would adversely affect our business, operating results, financial condition, and future prospects.

We anticipate that we will continue to derive a significant portion of our revenue from a limited number of customers for the foreseeable future."

The good news is that CoreWeave's future revenue from OpenAI will bring Microsoft down to less than 50% of revenue. "Microsoft, our largest customer for the years ended December 31, 2023 and 2024, will represent less than 50% of our expected future committed contract revenues when combining our RPO balance of $15.1 billion as of December 31, 2024 and up to $11.55 billion of future revenue from our recently signed Master Services Agreement with OpenAI," the company said.
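The concentration figures imply a few numbers that the article doesn't spell out. The sketch below is a reading of the company's statement, not a disclosed figure: it assumes "less than 50%" applies to the sum of the $15.1 billion RPO and the up to $11.55 billion from OpenAI, and that the second-largest customer accounts for the rest of the 77% top-two share. Variable names are illustrative.

```python
# Figures in billions of dollars, as cited in the article.
rpo_2024 = 15.1
openai_future_revenue = 11.55  # "up to" amount, so treat as a maximum

combined_committed = rpo_2024 + openai_future_revenue

# Microsoft is said to be under 50% of the combined total, which
# implies an upper bound on its committed contract revenue.
microsoft_ceiling = 0.5 * combined_committed

# 2024 revenue concentration: top two at 77%, Microsoft at 62%.
second_customer_share = 77 - 62

print(f"Combined committed revenue: ${combined_committed:.2f} billion")
print(f"Implied Microsoft ceiling: under ${microsoft_ceiling:.3f} billion")
print(f"Implied second-largest customer: {second_customer_share}% of 2024 revenue")
```

On that reading, Microsoft's share of committed revenue falls below half even though it was 62% of 2024 revenue.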

Other risk factors include CoreWeave's dependence on Nvidia GPU supply, its debt load and its access to power. CoreWeave also faces competition from hyperscale cloud players including AWS, Google Cloud, Microsoft Azure and Oracle as well as focused AI cloud providers such as Crusoe and Lambda.
