Launch Report - When BW 7.4 meets HANA it is like 2 + 2 = 5 - but is 5 enough?

As usual it’s good to remember how we got here…

A brief history of BW

Reporting has always been a challenge for enterprise application vendors. When SAP was busy building R/3 in the early 1990s, speed in building out a functionally complete ERP package was of the essence. Reporting was implemented much as it had been in R/2 – which meant the company missed the data warehouse trend. No one was unhappier about it than SAP co-founder Hasso Plattner, who, frustrated by a combination of lack of understanding and lack of progress, hired an outsider, Klaus Kreplin (then a disruptive talent decision for SAP). Kreplin and his team delivered a solid data warehouse in a very short time, originally called BiW (a name dropped later to avoid confusion with another, minor German software company). Not surprisingly, SAP came to realize that, as the largest business application vendor, it could not just deliver a data warehouse: customers also expected extractions and content in it. So SAP created the BW content releases. A long phase of different front-end tools followed, the Business Objects acquisition happened, and customers took up BW – to the tune of around 14,000 today.

Enter HANA

Meanwhile, HANA was created from the roots of the BW text search engine TREX, the PTime acquisition and the Sybase acquisition, and with that some confusion started. Customers were using BW – but hearing from SAP that the traditional separation of OLTP and OLAP was history. Some predicted the end of BW. Of course that did not happen: luckily, a large customer base does not go away overnight, and neither does a large, resilient ecosystem of partners.

And as we all know by now – HANA is the platform on which SAP has embarked on a massive journey of re-inventing itself. Consequently, BW also has to run on HANA, and that was achieved with BW 7.3 – which in hindsight was more of a ‘proof it works’ release. For the first time SAP let its ecosystem try out and play with a key revenue product, through the BW 7.3 on HANA trial, with very good success. But after technology adoption, the interesting thing is what happens next, and that is what we can start evaluating with BW 7.4 on HANA.

Why it’s 2 + 2 = 5!

Let’s look at the greatest drivers of enterprise synergy from combining the two products:

- Speed (from HANA) – No question, HANA contributes speed to traditional BW implementations. Traditional BW – like all data warehouses – needed attentive hand-holding to remain a responsive system that users could work with. Not impossible, but the watch had to be 24x7. Getting speed without having to design a classic star schema is another advantage.

- Simplification (from HANA) – The simplification of being able to run OLAP and OLTP on the same system, in columnar format, has been described many times before. But a key data warehouse process, the one operated in the ETL layer, has also pretty much gone. All data is there – normalized, with no need for transport and massaging.

- Content (from BW) – Remember the BW history: the first versions were technically great but lacked content. Today BW has more enterprise content than most enterprises can, or want to, digest – so HANA instantly gains significant content.

- Governance (from BW) – Another lesson for all data warehouses – skipped for brevity above – is that you can’t surface the insight to just anyone in the enterprise. SAP spent a lot of time in the early 2000s learning that and building appropriately for it – and now it’s practically a gift to HANA.

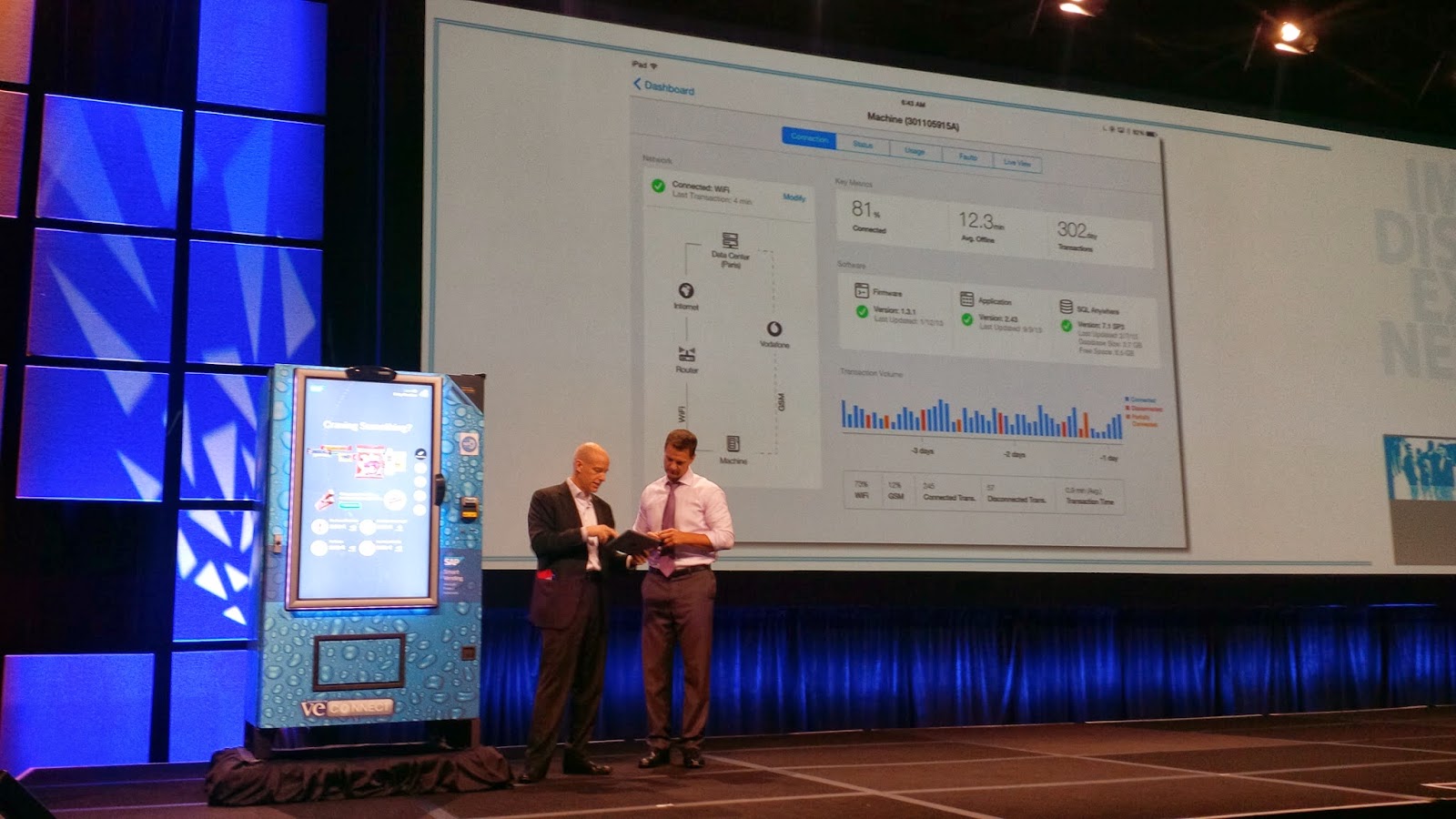

So where does the synergy – the 5 – come from? Well, it’s the combination of the above that enables faster insights, built on a modern application architecture (yes, you can use it on an iPad), and that allows enterprise decision makers to get to data faster and, hopefully, find the insights to make the right decisions.

But is 5 enough?

Getting a 5 from a 2 plus 2 equation – 25% headroom – is a good result, especially with BW 7.4 being the first release beyond 7.3, which, as mentioned before, was focused mainly on ‘getting there’. But 5 can only be enough if the insights are packaged in a way that a business user can digest. SAP has made a good acquisition with KXEN, but the road to packaged analytical applications, consumable by business end users, remains a long one. To be fair, SAP has only started. And 5 can only be enough if sufficient relevant information is available to make the right decision – needless to say, in 2014 that raises the question of how SAP will address the NoSQL / Hadoop challenge. [Added] The indication on the latter is that SAP is moving toward a co-existence scenario between data in HANA and data in Hadoop in BW 7.4, allowing the two to be combined via the smart data access functionality.

MyPOV

SAP has delivered a promising ‘first’ functional release with BW 7.4 on HANA. It is good progress – but more needs to happen in the next releases to feed (true) analytical insights to business end users, cutting out data scientist engagement at the project level. Of course there is plenty of ‘bread and butter’ BI to be had that BW addresses well, but the quest needs to be for the ‘holy grail’: end-user-consumable analytical applications that are good enough to foster insights with little or no IT and data scientist involvement.

To be fair – no one has gotten there (yet). It’s probably going to be a score of 8 or 9 that is required. So for now, 5 is a good start.
