Editor in Chief of Constellation Insights

Constellation Research

About Larry Dignan:

Dignan was most recently Celonis Media’s Editor-in-Chief, where he sat at the intersection of media and marketing. He is the former Editor-in-Chief of ZDNet and has covered the technology industry and transformation trends for more than two decades, publishing articles in CNET, Knowledge@Wharton, Wall Street Week, Interactive Week, The New York Times, and Financial Planning.

He is also an Adjunct Professor at Temple University and a member of the Advisory Board for The Fox Business School's Institute of Business and Information Technology.

Constellation Insights does the following:

Cover the buy side and sell side of enterprise tech with news, analysis, profiles, interviews, and event coverage of vendors, as well as Constellation Research's community and…


When Abrigo, which provides compliance, credit risk, and lending software for financial institutions, added a series of new capabilities to its Abrigo AI suite in September this year, the effort was more than a product launch. The additions were the payoff, in product velocity, of a broader cloud and data transformation.

For Ravi Nemalikanti, chief product and technology officer at Abrigo, the launch of AskAbrigo, Abrigo Lending Assistant, Abrigo’s anti–money laundering assistant, and Abrigo Allowance Narrative Generator highlighted how Abrigo could move much faster than it could two years ago.

“There’s no way we could have been in a position to launch those products back in our previous data center–centric world,” Nemalikanti said. “It was definitely a transformation around cloud, data, customer experiences, and resilience that made this possible.”

Abrigo’s new artificial intelligence (AI) products went from concept to production in six months, three times faster than the process previously would have taken.


And the stakes are high. Nemalikanti said Abrigo is a critical software provider to banks with less than $20 billion in assets as well as credit unions. “If you’re looking at a $15 billion bank or a $1 billion credit union, they don’t have a way to stay ahead of what’s happening or even keep pace,” Nemalikanti explained. “They look to us as an innovation partner. We needed to transform ourselves to be able to help power the transformation for our banks and credit unions.”

In keeping with the trajectory of enterprises such as Intuit and Rocket, taking advantage of the latest in AI required Abrigo to complete foundational steps in the years prior. First, there’s a move to the cloud. Then there’s the data transformation. And if those two foundational elements are lined up, adopting AI at scale is more feasible.

Abrigo decided to move to the cloud in 2022 and then held a bake-off between the big three hyperscalers. Abrigo, a Microsoft shop, decided to go with Amazon Web Services (AWS), in part because the company didn’t want to be tied to software licenses and wanted to use open source technologies, Nemalikanti said. Abrigo, which caters to a heavily regulated industry, has noted that AWS’ approach to building security into development and deployment processes was also a big factor.

Nemalikanti noted that the move to AWS was partly about cost savings, but the real win was velocity and the cultural transformation involved with operating in the cloud. He said that previously product teams would develop software and throw it over to the data center ops team. With the cloud, the approach to software development is more holistic. “Shifting to the SRE [site reliability engineering] mindset across the organization was critical to cultural change,” Nemalikanti said. “Now, if you build it, you own it and run it.”

Using AWS partner Cornerstone Consulting Group, Abrigo moved 100% of its workloads to AWS in 13 months.

‘Lift and Shine’

Speaking at AWS re:Invent 2024, Abrigo led a session walking through its cloud transformation. The pre-AWS environment was built around colocated data centers that came with $7.5 million a year in capital costs.

Here’s a look at Abrigo’s pre-AWS environment:

- All software-as-a-service (SaaS) servers were hosted out of two geographically diverse colocated data centers. One data center served as the primary site, and the other handled disaster recovery and internal development.

- Abrigo had about 1,500 virtual servers with 5PB of storage. About 90% of Abrigo’s infrastructure was built on Microsoft’s stack, including Windows, SQL Server Standard and Enterprise, IIS App Server, .NET Framework, and .NET Core.

- The vendor had more than 50 unique hosted SaaS applications.

Jason Perlewitz, VP of Cloud Operations at Abrigo, said the company was looking to migrate to AWS quickly so it could innovate faster with AI in the future. Speaking at AWS re:Invent 2024, Perlewitz said the goal was to create a foundation for infrastructure, product, and database modernization at lower costs.

Source: Abrigo/AWS

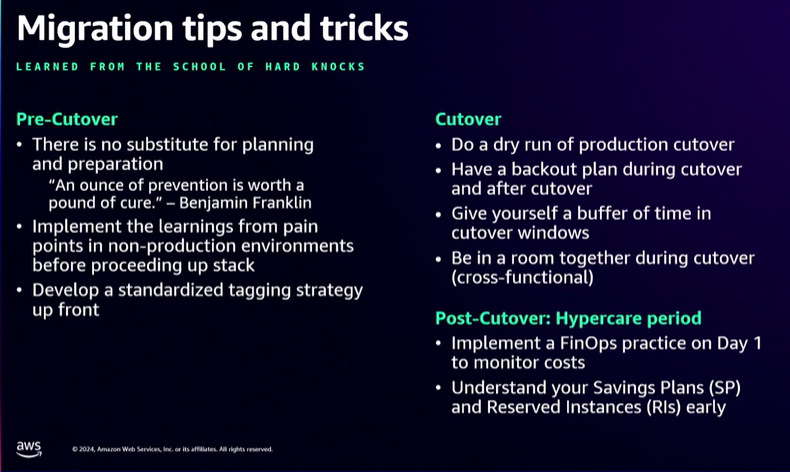

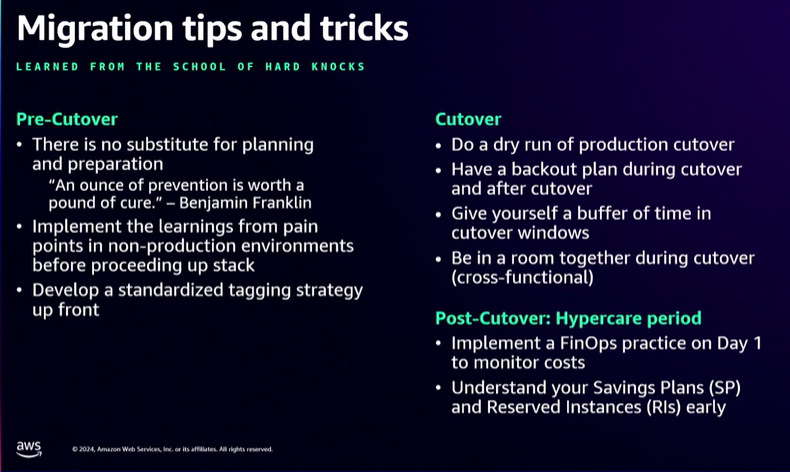

The challenge was delivering the cloud migration in fewer than 16 months when dealing with 50 unique applications, a lack of data hygiene, tech debt, and strict downtime requirements to minimize customer impact.

“We thought in the long run we could save money by operating in the cloud,” Perlewitz said. “Our infrastructure costs reductions we wanted to be at least 20%, and we thought more than that was possible once we started to operate efficiently. We also wanted to tie our cloud spend to the growth of our business.”

Other goals for the cloud migration included:

- Reducing incident resolution time by at least 20%

- Reducing product deployment time by 25%, with a 30% increase in deployment frequency

- Planning ahead for enduring impact. Abrigo spent the first three months setting up architecture, defining data-tagging strategy, and upskilling teams.

“We wanted to free up our smart people to do smart things. We want to innovate,” Perlewitz said. “That’s where we get value. We want to see time spent on growth activities.”

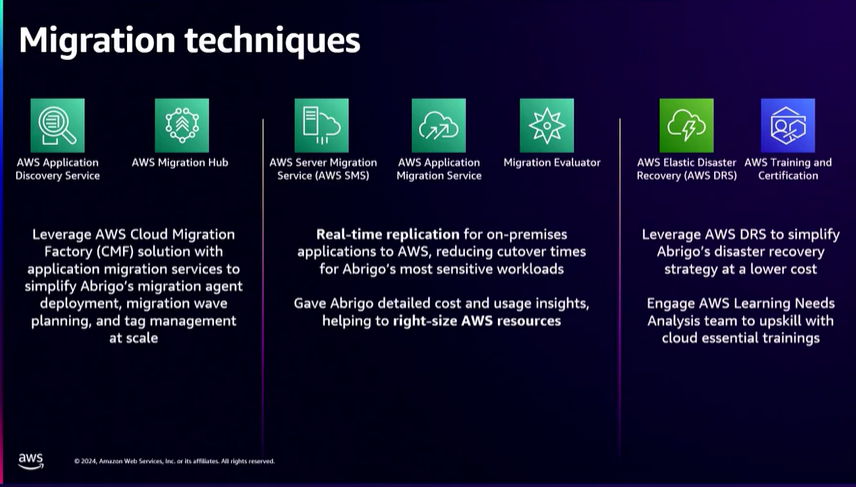

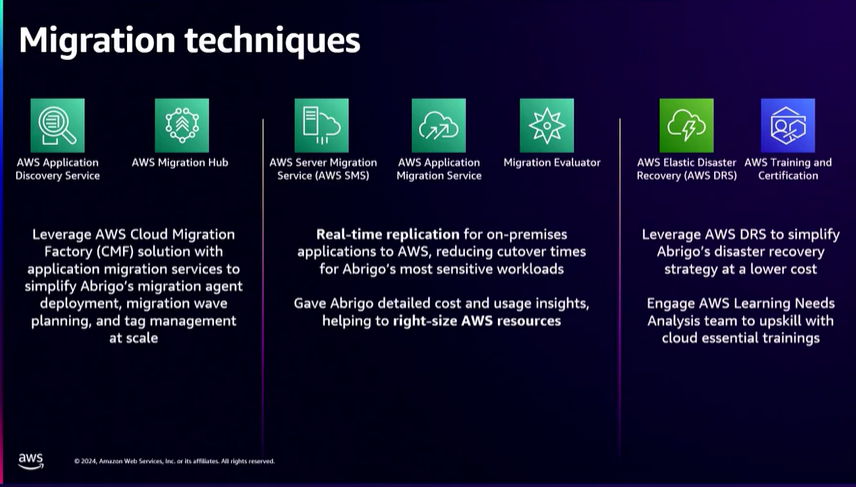

To meet those cloud migration priorities, Perlewitz said, Abrigo engaged AWS Professional Services to build fit-for-purpose landing zones and security architecture and invested in training.

Overall, Perlewitz said Abrigo didn’t want to simply migrate but wanted instead to take a “lift and shine” approach that included copying existing virtual machines with the AWS Application Migration Service (MGN), making small changes with outsized benefits, and cutting unnecessary environments and data. AWS Managed Services was used for additional operational support.

Abrigo said lift and shine included the following moves:

- Consolidating Windows versions before migration

- Eliminating environments and data that weren’t needed

- Syncing data stores

- Standardizing engineering tasks

- Consolidating disaster recovery instances

Perlewitz said training was a big part of the migration mix. “We wanted to equip our teams to be functionally literate in the cloud and improve our own internal capabilities,” he said. “We want to innovate and adopt new technologies more quickly.”

Abrigo hit its goals for the migration and then some. Here’s a look (all figures compared with the year prior):

- The migration was completed in 13 months, three months ahead of schedule.

- Mean time to recover has decreased 63%.

- Customer instance incidents have fallen 72%.

- Infrastructure costs now run at 3.65% of Abrigo’s recurring revenue, down from 5% when the company operated its own data centers.

- Application performance improved 15% to 30% on average.

- Time to market for Abrigo’s cloud applications is 70% faster than before.

- Technical debt was reduced by 50%.

Source: Abrigo/AWS
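As a rough check on the figures above, the following sketch compares the reported results with the stated goals. It assumes that infrastructure cost as a share of recurring revenue is a fair proxy for the "at least 20%" cost-reduction goal, which the article does not confirm; the variable names are illustrative only.

```python
def relative_reduction(before: float, after: float) -> float:
    """Fractional reduction going from `before` to `after`."""
    return (before - after) / before


# Figures quoted in the article.
infra_share_before = 5.0   # % of recurring revenue with colocated data centers
infra_share_after = 3.65   # % of recurring revenue on AWS
target_reduction = 0.20    # "at least 20%" goal (assumed comparable, see above)

reduction = relative_reduction(infra_share_before, infra_share_after)
months_planned, months_actual = 16, 13

print(f"Infrastructure cost share fell {reduction:.0%}")
print(f"Met 20% target: {reduction >= target_reduction}")
print(f"Months ahead of schedule: {months_planned - months_actual}")
```

Under that assumption, the drop from 5% to 3.65% is a relative reduction of about 27%, and the 13-month migration finished three months inside the 16-month window.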

Ongoing Optimization

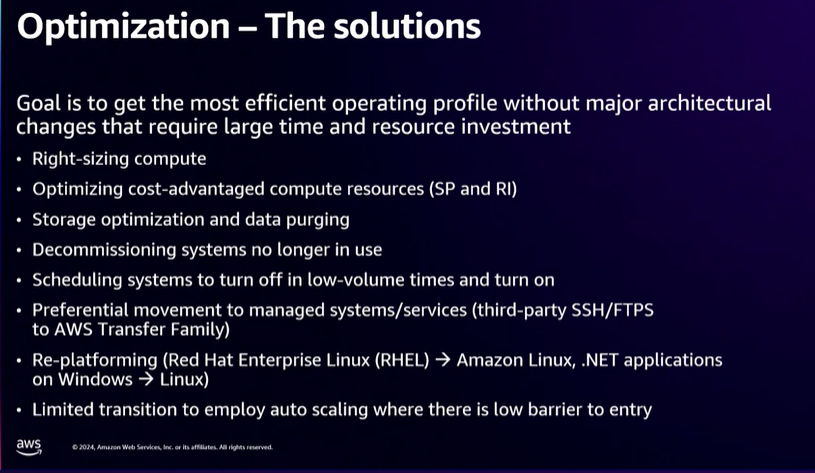

Phil Schoon, senior software architect at Abrigo, said the cloud migration provided many more options for application development as well as optimization challenges.

Schoon said Abrigo developers were excited about the various services from AWS that were now at their disposal. The catch is that those services can add up. “It’s very easy to move a monolithic architecture and deploy it, but as it grows it starts to get expensive,” Schoon said.

For starters, Schoon explained, Abrigo prioritized working on areas that weren’t directly tied to features that affected customers. In addition, Abrigo’s team needed to figure out how to use AWS services and then get better at using them.

Schoon said a big focus for Abrigo is container efficiency, where applications were simplified with partner Cornerstone and AWS.

Nayan Karumuri, senior solutions architect at AWS, said at re:Invent that it’s common for customers to need to optimize after a migration. “The initial challenge is that there’s a bubble cost in the beginning, and that’s mainly due to resource inefficiencies,” Karumuri said. “When you’re looking at 1,500 applications migrating to the cloud, some instances were over-provisioned to avoid performance degradations and provide a good user experience.”

Karumuri said Abrigo switched to autoscaling instances and reserved capacity models. The ability to right-size services also required a learning curve.

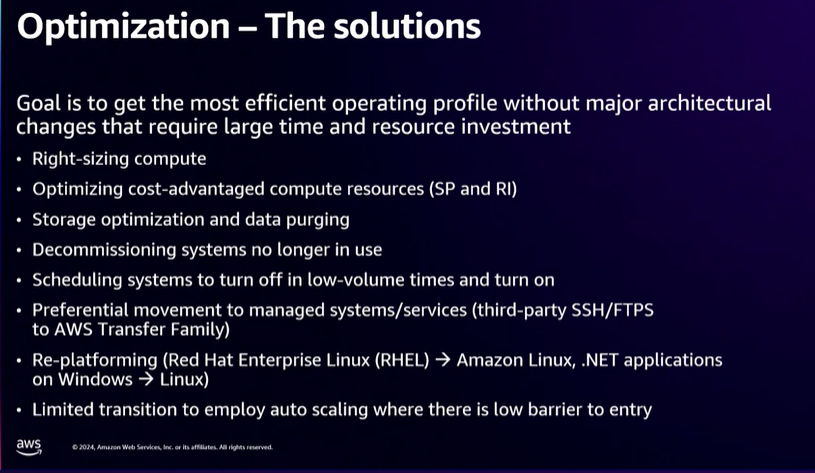

Here’s a look at some of the optimization changes:

- .NET applications were moved from Windows to Linux environments.

- Red Hat Enterprise Linux was transitioned to Amazon Linux for native integration with cloud-native services and the ability to use spot instances wherever possible.

- Compute instances were right-sized, with more instances moved to AWS’ custom Graviton processors.

- Amazon CloudWatch was used to monitor and trigger AWS Lambda functions.

- AWS Cost Optimizer was also used to manage ongoing costs.

- Abrigo moved commercial databases to AWS where possible.

Source: Abrigo/AWS
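The article does not describe how Abrigo wired CloudWatch to Lambda. One common pattern is a CloudWatch alarm that publishes to an SNS topic, which in turn invokes a Lambda function. The sketch below shows a minimal Python handler for that pattern; the alarm name and the `review-capacity` action label are hypothetical, and the handler only summarizes the alarm rather than changing any infrastructure.

```python
import json


def handler(event, context):
    """Collect follow-up actions for CloudWatch alarms delivered through SNS."""
    actions = []
    for record in event.get("Records", []):
        # SNS delivers the CloudWatch alarm as a JSON string in the Message field.
        alarm = json.loads(record["Sns"]["Message"])
        if alarm.get("NewStateValue") != "ALARM":
            continue  # ignore OK and INSUFFICIENT_DATA transitions
        actions.append({
            "alarm": alarm.get("AlarmName"),
            "metric": alarm.get("Trigger", {}).get("MetricName"),
            "action": "review-capacity",  # hypothetical label, not an AWS API
        })
    return {"actions": actions}


# Local dry run with a hand-built event; the alarm name is made up.
sample_message = {
    "AlarmName": "example-high-cpu",
    "NewStateValue": "ALARM",
    "Trigger": {"MetricName": "CPUUtilization", "Namespace": "AWS/EC2"},
}
sample_event = {"Records": [{"Sns": {"Message": json.dumps(sample_message)}}]}
result = handler(sample_event, None)
print(result)
```

Keeping the handler free of AWS SDK calls makes it easy to exercise locally before attaching it to a real alarm.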

By the time Abrigo outlined the project at re:Invent, the company’s optimization efforts had yielded the following:

- $1 million in disaster recovery savings due to a reduced EC2 footprint

- $1.3 million in savings from modernizing databases to Aurora PostgreSQL

- 80% Babelfish development cost savings

- $140,000 in cost savings from right-sizing EC2 instances

- $250,000 in savings for optimizing storage

- 30% processor performance uplift

That list isn’t everything, but it gave Abrigo a good base to move forward. Nemalikanti noted that the optimization continues on an ongoing basis.
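For a sense of scale, the dollar-denominated items in the list above can be added up. This sketch assumes the four figures do not overlap and cover the same period, neither of which the article states; the percentage items (Babelfish development savings and processor uplift) are left out because they have no dollar value attached.

```python
# Dollar savings quoted in the article; the labels are paraphrased.
savings_usd = {
    "disaster recovery (reduced EC2 footprint)": 1_000_000,
    "database modernization to Aurora PostgreSQL": 1_300_000,
    "right-sizing EC2 instances": 140_000,
    "storage optimization": 250_000,
}

total = sum(savings_usd.values())
print(f"Itemized dollar savings: ${total:,}")
```

On those assumptions the itemized savings come to about $2.69 million.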

What’s Next?

Nemalikanti (right) said everything from application performance (up 20% to 30% on average) to product release cadence and reporting has been sped up with AWS.

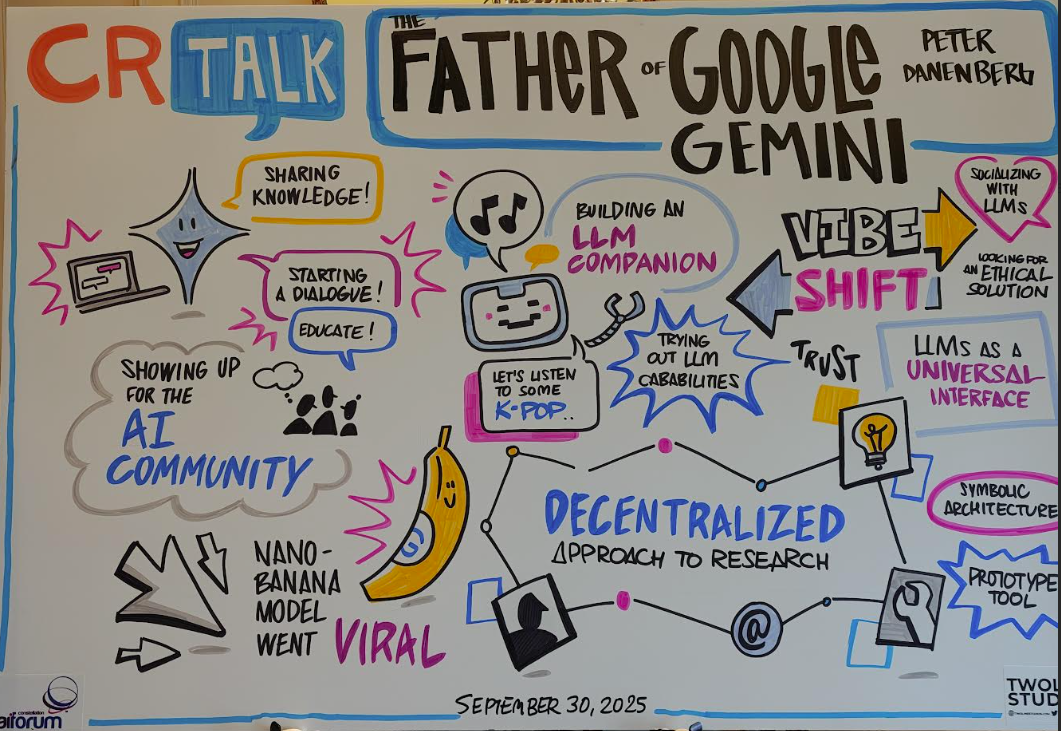

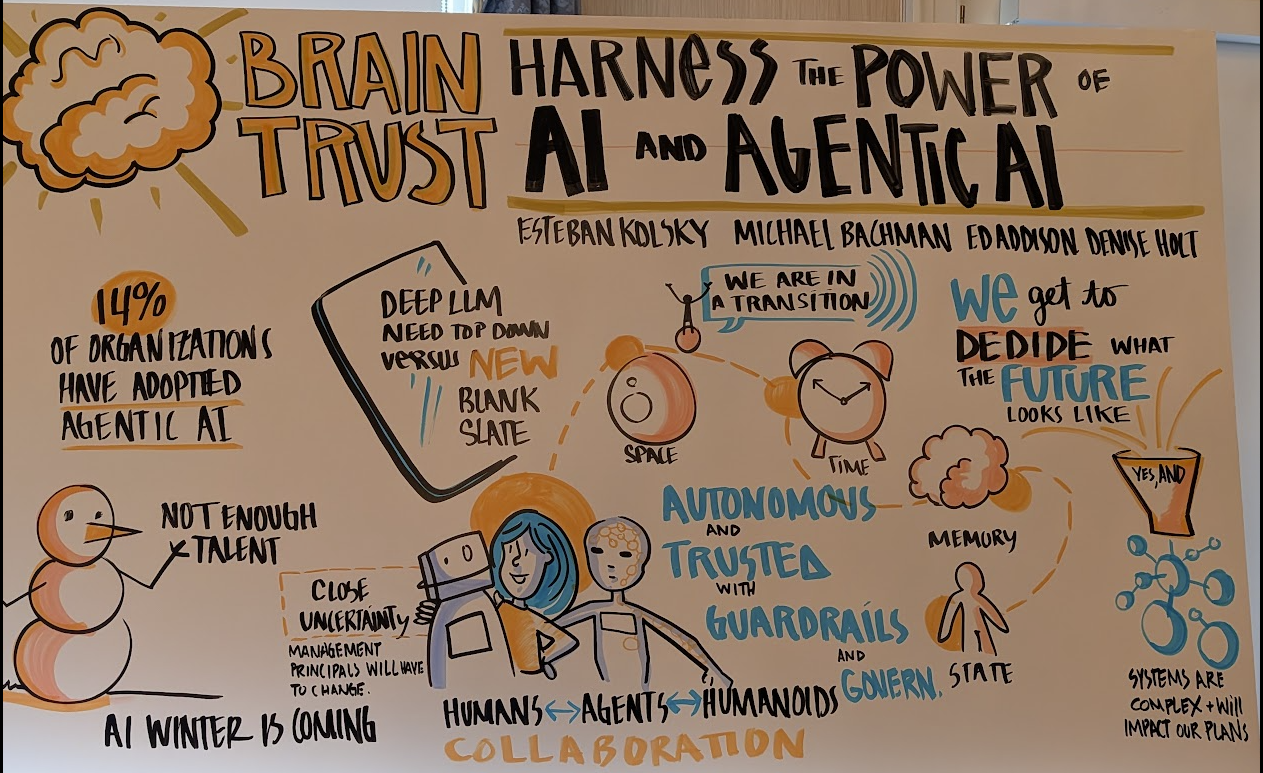

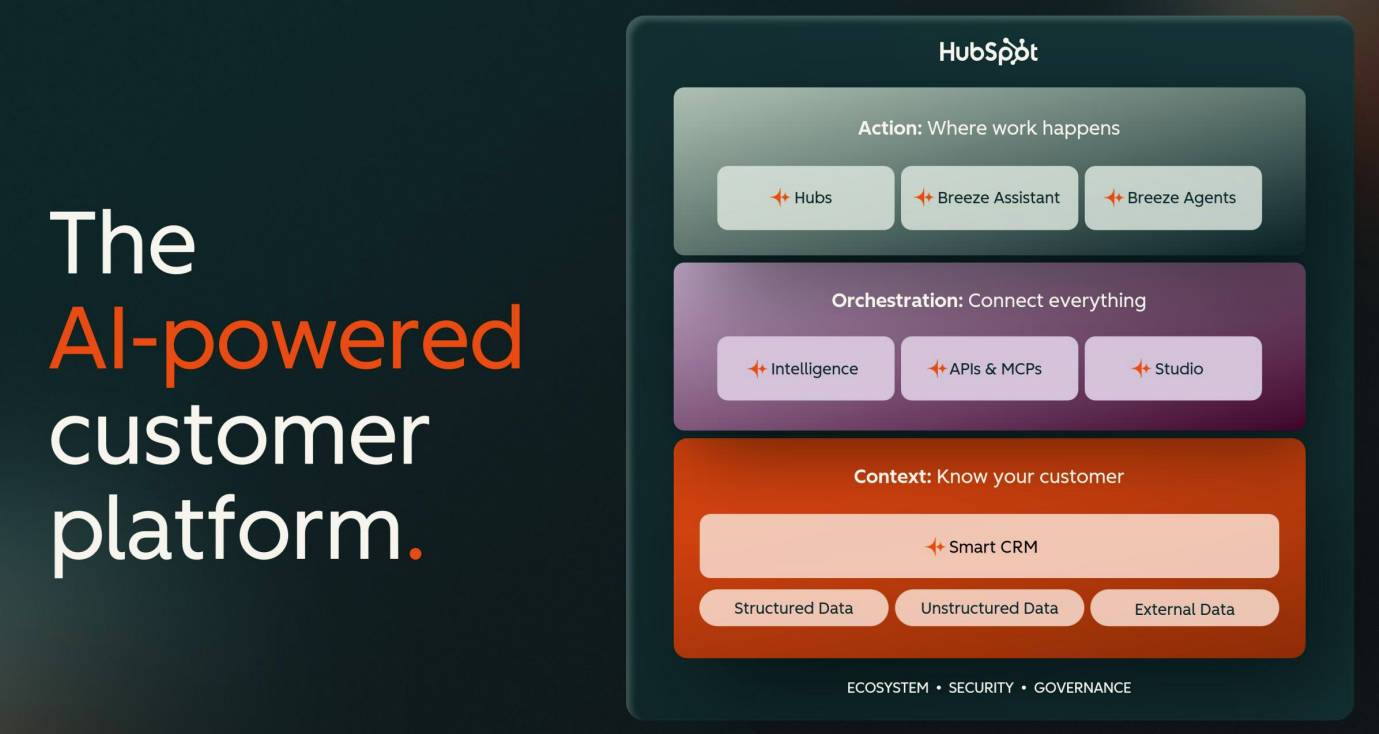

According to Nemalikanti, Abrigo’s AI strategy is to bring agentic AI features to customers and give them secure access to the latest models.


“Most of our customers don’t have access to multiple foundational models, and there’s some trepidation,” Nemalikanti said. “What we’ve done is extend the trust our customers have in us to AI.”

Abrigo is also looking to solve for the most critical use cases within smaller banks. For instance, AskAbrigo can pull from multiple policy documents to give tellers the ability to make decisions quickly on questions about cashing a check with a temporary ID or another issue. “We can show them the source so there are no hallucinations,” Nemalikanti said.
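The article does not explain how AskAbrigo retrieves or cites policy text. As a toy illustration of the general idea of returning an answer together with its source document, the sketch below picks the policy passage with the most words in common with a question. The document names, policy text, and scoring rule are all invented for the example and do not reflect Abrigo's product.

```python
import re

# Hypothetical policy snippets; real policy documents would be far longer.
POLICY_DOCS = {
    "check-cashing-policy.txt": (
        "A temporary ID may be accepted for cashing a check when paired "
        "with a second form of identification."
    ),
    "wire-transfer-policy.txt": (
        "Wire transfers above the daily limit require approval from a "
        "branch manager."
    ),
}


def tokenize(text):
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def answer_with_source(question, docs):
    """Return the best-matching passage and the document it came from."""
    question_words = tokenize(question)
    best_name, best_score = None, 0
    for name, text in docs.items():
        score = len(question_words & tokenize(text))  # shared-word count
        if score > best_score:
            best_name, best_score = name, score
    if best_name is None:
        return None  # nothing matched, so return no answer rather than guess
    return {"passage": docs[best_name], "source": best_name}


reply = answer_with_source("Can I cash a check with a temporary ID?", POLICY_DOCS)
print(reply["source"])
```

Returning the source alongside the passage lets a reader verify the answer against the underlying document, which is the property the quote describes.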

Using AWS, Abrigo has set customer banks up with their own instances and data store. As for the models, Abrigo picks a variety of models that are best for a specific use case, including Amazon Nova and Anthropic’s Claude. “Our AI strategy is simple: Take the five most critical things that matter to customers and launch solutions at a high velocity. We know where the productivity for our customers is lost, and we’re embedding AI in exactly those areas,” Nemalikanti said.

Nemalikanti’s team does thousands of interviews with customers each year, and those interviews will determine where Abrigo uses AI. He said Abrigo plans to leverage agentic AI, but that it doesn’t work for every use case, especially when there’s a deterministic workflow. “We do think there are real opportunities, but we’re just not going to follow the hype,” Nemalikanti said. “We will look at real business processes holistically, such as loan origination, documentation reviews, and underwriting.”

