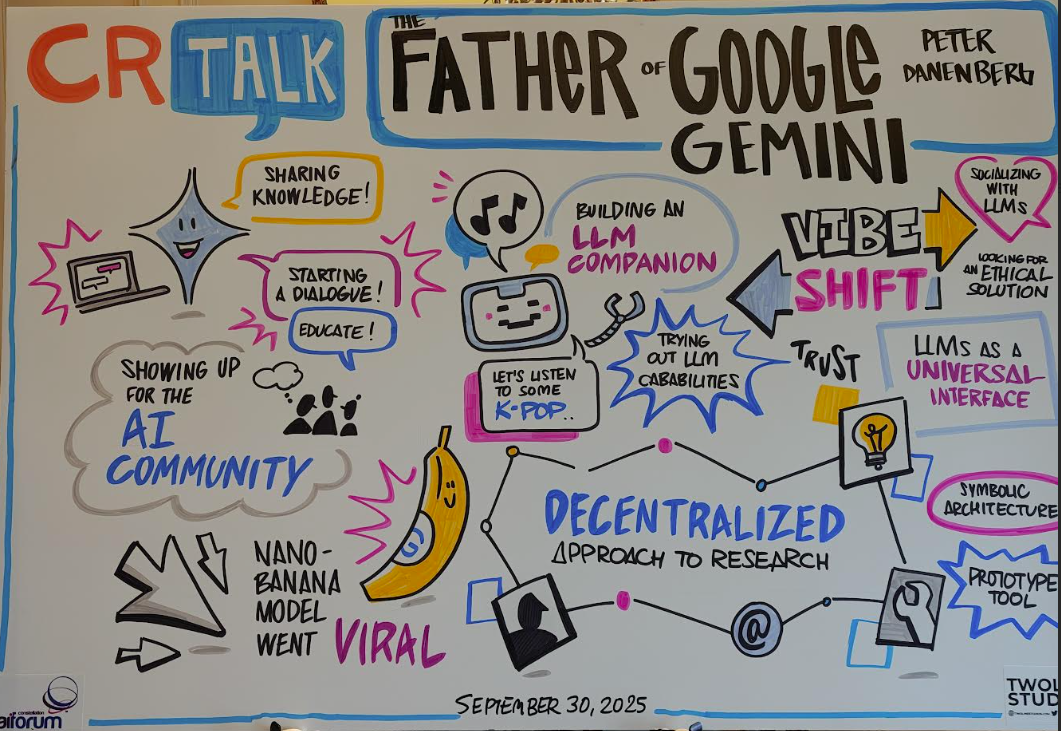

Google DeepMind’s Danenberg on emerging LLM trends to watch

Peter Danenberg, a senior software engineer at Google DeepMind, leads rapid prototyping for Gemini and has to think through more than a few big ideas.

At Constellation Research's AI Forum, Danenberg spoke with R "Ray" Wang about emerging trends in AI and the questions looming ahead. Here's a look at the high-level topics in a space that evolves almost hourly.

More from AI Forum DC: For AI agents to work, focus on business outcomes, ROI not technology

Ambient LLMs. To use LLMs today, you break out your phone or laptop and often break your flow. The future could be an ambient companion that sits there, seeing what you see and hearing what you hear. Danenberg said he wasn't sure where he sits on the ambient LLM spectrum, noting that it could be creepy, but there are advantages to an assistant that wouldn't break your creative flow. "It's an interesting question," he said. "There's an idea of a companion that's there and you're not aware of it until you need it."

Use cases. Danenberg said companies have shifted from reluctance to adoption in how they use foundation models. Companies are focusing on low-hanging fruit for use cases, but these add up. "Anything where you need to extract structured data from unstructured data is beautiful low-hanging fruit you can get started with," he said.

Constellations of smaller models emerge. Danenberg said one trend to watch is startups focused on smaller models that each do one thing well and then become parts of constellations of LLMs that solve problems.

Don't forget the classics. Danenberg said there's a renaissance in thinking in AI that's "going back to classic ML (machine learning)." The trend is still developing, but researchers are rediscovering 1960s AI, symbolic reasoning and ontologies. In this world, "LLMs are just becoming a universal interface over small models and classic ML," said Danenberg. "I wonder if, to a certain extent, that the LLM sweet spot is really as a user interface of these classical models that can achieve something with 100% accuracy with its own specific event. That's going to be an interesting idea."

The importance of 10,000 hours. The effect of LLMs on human intelligence is an ongoing debate and concern. Danenberg said one impact to ponder is the 10,000-hour rule. Humans put 10,000 hours into something, gain domain knowledge and expertise, and then develop a bullshit detector to distinguish between fact and fiction. "The big question is that in the age of LLMs are we still going to be able to put in the 10,000 hours to develop these reality detection systems?" said Danenberg. "Going forward, that's going to be an interesting question in terms of the generation coming of age."

Virality of Nano Banana, Google's AI image editor. Danenberg said the combination of Gemini 2.5 and Nano Banana led to a viral moment for Google that was unpredictable. "With this virality thing, you can't force it, but I am just glad we had a moment," said Danenberg.