Former Vice President and Principal Analyst

Constellation Research

Doug Henschen is a former Vice President and Principal Analyst at Constellation Research, where he focused on data-driven decision making. Henschen’s Data-to-Decisions research examines how organizations employ data analysis to reimagine their business models and gain a deeper understanding of their customers. His research acknowledges that innovative applications of data analysis require a multidisciplinary approach, starting with information and orchestration technologies, continuing through business intelligence, data visualization and analytics, and moving into NoSQL and big-data analysis, third-party data enrichment and decision-management technologies.

Insight-driven business models are of interest to the entire C-suite, but most particularly chief executive officers, chief digital officers, …


Oracle has simplified its analytics product lineup and pricing and gone public with its roadmap. Here’s what’s coming and why Oracle customers will take a second look.

The Oracle Analytics Summit, held June 24-25, gave the company a chance to introduce what executives called “a new beginning” for Oracle Analytics. The themes of the event, held as both a webcast (attended by more than 6,800) and an in-person event (attended by roughly two hundred customers, partners and analysts), were “simple, transparent” and “trustworthy.”

The company needed a new beginning, as T.K. Anand, Senior VP of Oracle Analytics, put it, because Oracle had previously “inflicted a lot of change on the community” and “wasn’t transparent” about product plans and roadmaps. In recent years, Oracle has been pushing its cloud-based analytics options while doing little to update the software that tens of thousands of customers run on-premises.

T.K. Anand, a Microsoft veteran who joined Oracle one year ago as senior VP of Oracle Analytics, announces "a new beginning" at the Oracle Analytics Summit.

On Simplification

Oracle announced a consolidated lineup with just three products: Oracle Analytics Cloud (OAC), Oracle Analytics Server, and Oracle Analytics for Applications. OAC has been the company’s cloud path forward for analytics for more than three years. It’s a modern, self-service-oriented cloud service emphasizing data visualization and offering extensive augmented analytics capabilities (including data prep recommendations, Web and mobile natural language (NL) query, NL generation, automated insights and, soon, personalized recommendations). Recent integrations extend the NL capabilities to the Oracle Digital Assistant and third-party products including Slack, Microsoft Teams, Skype, Amazon Alexa and Google Assistant.

Oracle Analytics Server, due in Q4, is an on-premises-oriented product that consolidates governed, self-service and augmented capabilities and replaces the myriad products that came before it. Oracle promises that Oracle Analytics Server will be aligned with OAC in features and functions and will receive annual updates incorporating new capabilities first delivered through OAC. It’s also Oracle’s option for multi-cloud deployment, as it is software that can be deployed anywhere.

Oracle Analytics for Applications, as the name suggests, is essentially OAC pre-integrated with Oracle cloud applications. The integrations will make it easier to analyze data from Oracle applications, starting with Oracle Fusion ERP by Q4 2019 and Fusion HCM by Q1 2020. Executives promised that other Oracle cloud applications and third-party applications will follow on a quarterly cadence. Importantly, Oracle Analytics for Applications includes a managed data pipeline and data warehouse instance based on the Oracle Autonomous Database. Also included will be supporting content, including ready-to-use semantic models, reports and dashboards.

Another key element of the simplification is OAC pricing, which is straightforward and aggressive: $20 per user, per month for the workgroup- and departmental-oriented Professional Edition, and $2,000 per Oracle Compute Unit (OCPU) per month for the Enterprise Edition, which includes an unlimited number of users.

On Transparency and Trust

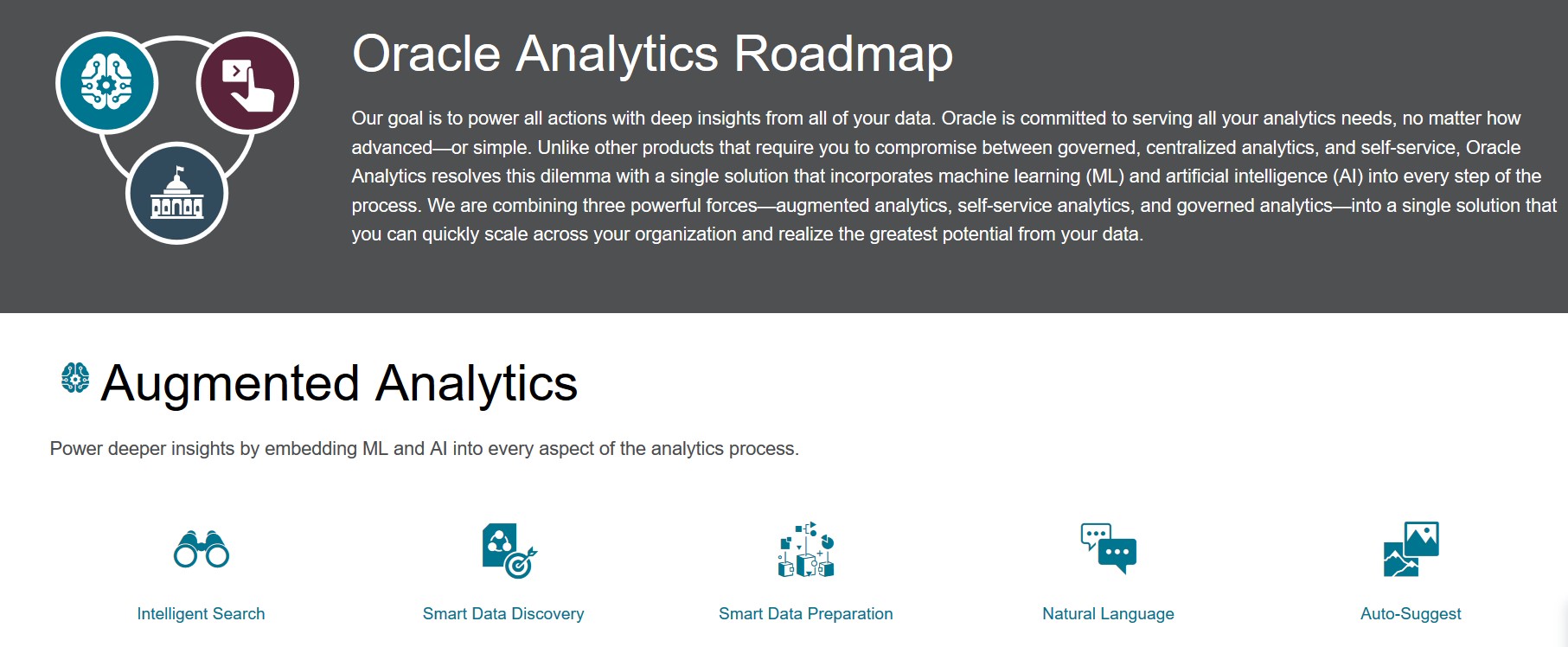

To be more transparent, Oracle has published its Oracle Analytics roadmap online for the first time. This is something Tableau, Qlik and others have done for many years, so Oracle is merely catching up with customer expectations, but it’s a sign that the company is listening.

In another sign that the company is listening and working to build trust, Anand said that Oracle has doubled the number of analytics customer-support engineers and improved processes to cut service-request resolution times by as much as a factor of ten. It’s also investing in auto-remediation capabilities to reduce time to recovery. Service improvements are a constant area of investment for most vendors, but doubling support staff is a real commitment.

Oracle's newly published analytics roadmap, available at oracle.com/solutions/business-analytics/roadmap.html

MyPOV on the New Oracle Analytics

From Oracle’s perspective, any customer of its applications or data-management software should naturally consider Oracle’s analytics options, but defections have mounted in recent years. The Summit announcements were designed to get customers to give Oracle Analytics products a first (or second) look. The attention-getter was clearly the simplified, aggressive list pricing, and as Oracle customers all know (and count on), the more you spend, the more likely the company is to offer discounts.

To put the Enterprise pricing in context, I talked to one customer with 1,000 users who said he’s using 12 OCPUs, while another customer with 600 users said he was “overprovisioned” and had room to grow with just six OCPUs. Capacity requirements will vary based on the complexity of the data and on query, reporting and analytical demands.
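As a rough illustration, those two customer data points can be turned into list-price monthly costs. The sketch below is a hypothetical back-of-the-envelope calculation in Python, not an Oracle pricing tool: it assumes only the $20-per-user and $2,000-per-OCPU list prices quoted above, ignores discounts, and compares the two editions on cost alone, even though Oracle positions Professional Edition for workgroup and departmental use. The function names are illustrative.

```python
# Hypothetical back-of-the-envelope calculator using the OAC list prices
# quoted in this article; actual deals, discounts and sizing will differ.
PROFESSIONAL_USD_PER_USER_MONTH = 20
ENTERPRISE_USD_PER_OCPU_MONTH = 2_000


def enterprise_monthly(ocpus: int) -> int:
    """List cost of Enterprise Edition for a given OCPU count (users unlimited)."""
    return ocpus * ENTERPRISE_USD_PER_OCPU_MONTH


def professional_monthly(users: int) -> int:
    """List cost of Professional Edition for a given number of users."""
    return users * PROFESSIONAL_USD_PER_USER_MONTH


def breakeven_users(ocpus: int) -> int:
    """User count at which Professional list cost equals Enterprise list cost."""
    return enterprise_monthly(ocpus) // PROFESSIONAL_USD_PER_USER_MONTH


if __name__ == "__main__":
    # The two customer data points cited above: (users, OCPUs).
    for users, ocpus in [(1_000, 12), (600, 6)]:
        ent = enterprise_monthly(ocpus)
        print(
            f"{users} users on {ocpus} OCPUs: ${ent:,}/month Enterprise "
            f"(${ent / users:.2f}/user), ${professional_monthly(users):,}/month "
            f"Professional, break-even at {breakeven_users(ocpus):,} users"
        )
```

At list prices, 12 OCPUs works out to $24,000 a month, or $24 per user for 1,000 users, with the break-even against Professional Edition pricing at 1,200 users. Six OCPUs comes to $12,000 a month, or $20 per user for 600 users, exactly at break-even. Every additional user on the same OCPU count lowers the effective per-user cost of Enterprise Edition.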

The real news among the announcements is the pending release of Oracle Analytics Server, aimed at on-premises deployment. Oracle’s prior emphasis on OAC, and only OAC, left the impression that cloud was the only way forward, yet plenty of customers aren’t ready to go there. Oracle Analytics Server is billed as an all-inclusive product that will get annual updates. In another carrot for current customers, any licensee of Oracle BI Enterprise Edition will be automatically licensed and entitled to download and deploy Oracle Analytics Server, which will include the latest self-service and augmented capabilities.

In short, the newly promised terms, transparency, support improvements and feature upgrades across Oracle Analytics are attractive and worth considering. We’ll have to wait and see whether the finished Oracle Analytics Server product is as simplified, integrated and all-inclusive as promised. Will it be as hybrid and multi-cloud friendly as the options from independent vendors? Oracle executives demurred on whether and when vendor-supported options for container-based deployment would emerge (something some rivals have already delivered or announced). We also heard that there would be some differences in functionality between OAC and Oracle Analytics Server (in the area of augmented analytics, for example), and licensing terms for on-premises deployment are another area where details have yet to emerge.

Customers I talk to that have already moved to the cloud don’t hesitate to choose cloud-based options. But tell an on-premises customer that cloud is the only way forward, and they will be less likely to try your cloud option. The way forward for vendors that didn’t start in the cloud is to give customers clear, transparent choices and the freedom to move when they are ready. This is the new beginning that Oracle Analytics promises, and we’ll surely hear more details about each option at this year’s Oracle OpenWorld.

Related Reading:

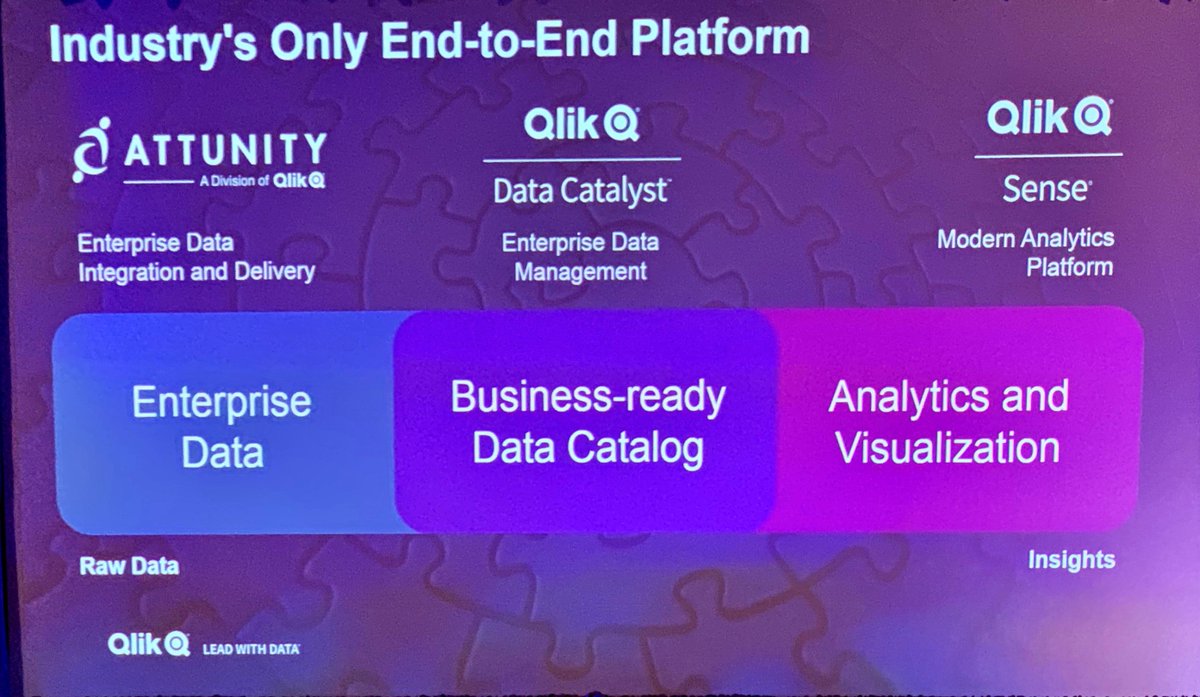

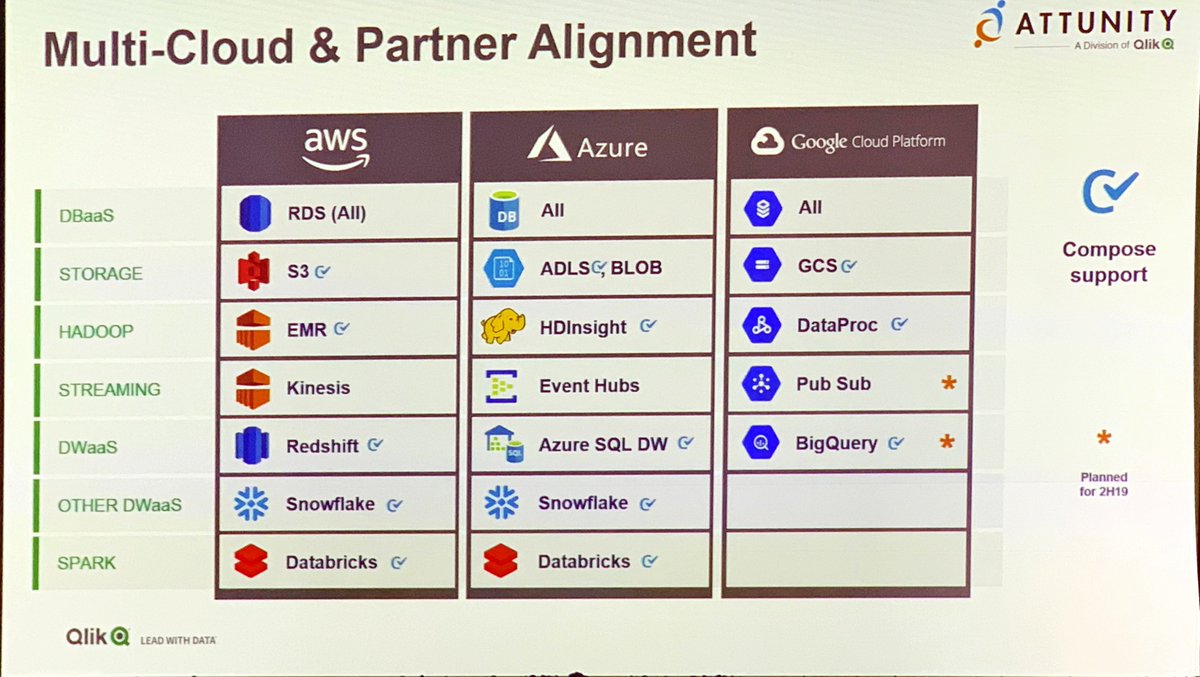

Qlik Advances Cloud, AI & Embedded Options, Extends Data Platform

Constellation ShortListâ„¢ Cloud-Based Business Intelligence and Analytics Platforms

Tableau Advances the Era of Smart Analytics

MicroStrategy Embeds Analytics Into Any Web Interface

Data to Decisions

Oracle

Chief Information Officer

Chief Analytics Officer

Chief Data Officer