Vice President and Principal Analyst

Constellation Research

About Dion Hinchcliffe

Dion Hinchcliffe is an internationally recognized business strategist, bestselling author, enterprise architect, industry analyst, and noted keynote speaker. He is widely regarded as one of the most influential figures in digital strategy, the future of work, and enterprise IT. He works with the leadership teams of large enterprises as well as software vendors to raise the bar for the art-of-the-possible in their digital capabilities.

He is currently Vice President and Principal Analyst at Constellation Research. Dion is also currently an executive fellow at the Tuck School of Business Center for Digital Strategies. He is a globally recognized industry expert on the topics of digital transformation, digital workplace, enterprise collaboration, API…...

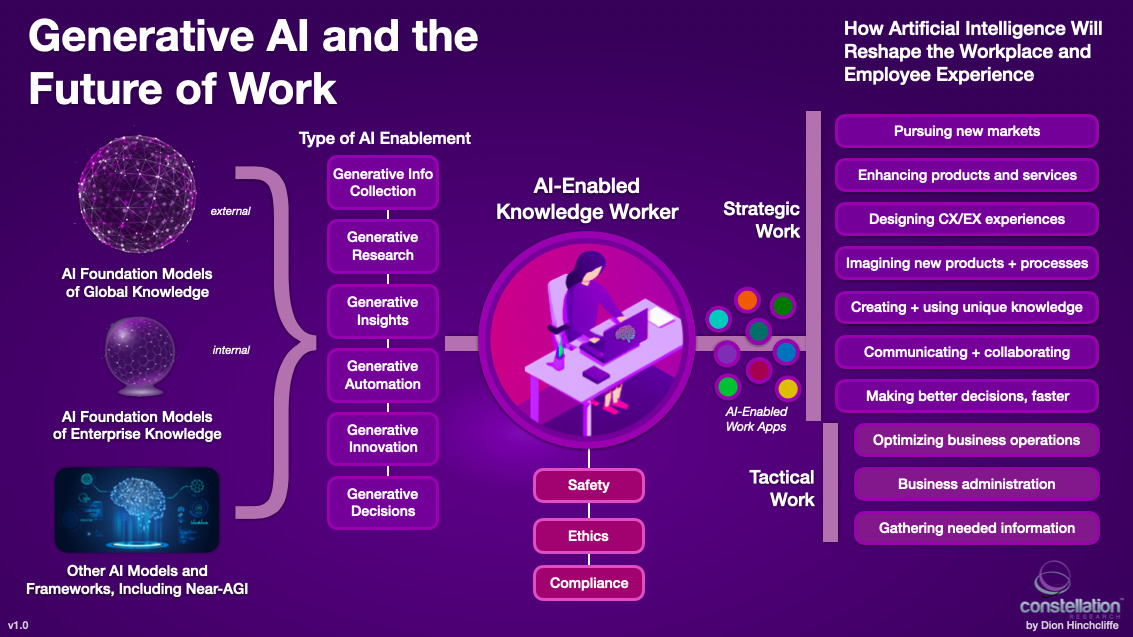

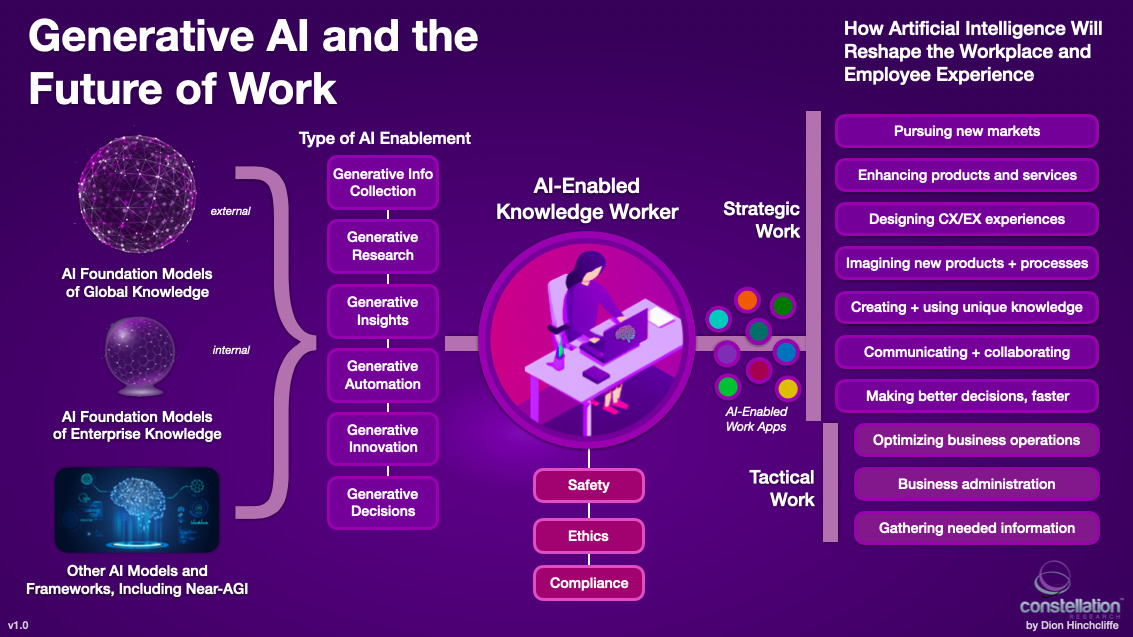

In today's fast-paced and data-driven business world, generative AI is now in the midst of transforming the way companies innovate, operate, and work. With proof points like ChatGPT, generative AI will soon have a significant competitive impact on both revenue and bottom lines. With the power of AI that can help people broadly synthesize knowledge and then rapidly use it to create results, businesses can automate complex tasks, accelerate decision-making, create high-value insights, and unlock capabilities at scale that were previously impossible to obtain.

Most industry research agrees. For example, a major recent study found that countries whose businesses widely adopt AI can expect to increase their GDP by 26% by 2035. Moreover, the same study predicts that the global economy will benefit by a staggering $15.7 trillion in revenue and savings by 2030, thanks to the transformative power of AI. For knowledge workers and business leaders, embracing generative AI technology can open a wide range of new possibilities for their organizations, helping them stay competitive in an ever-changing marketplace while achieving greater efficiency, innovation, and growth.

While many practitioners are focusing on industry-specific AI solutions for sectors like financial services or healthcare, the broadest and most impactful area of AI will be general-purpose capabilities that quickly enable the average professional to get their work done better and faster. In short, it will help knowledge workers work more effectively and achieve meaningful business outcomes. It's in this horizontal domain that generative AI has dramatically raised the stakes in the last six months, garnering widespread attention for the seemingly immense promise it holds to boost productivity as it blazes a fresh technology trail toward bringing the full weight of the world's knowledge to bear on any individual task.

Delivering the Value of Generative AI While Navigating the Challenges

In my professional opinion, the ability of generative AI to produce useful, impressively synthesized text, images, and other types of content almost effortlessly from a few text cues has already become an important business capability worth providing to most knowledge workers. In my research and experiments with the technology, many work tasks will benefit from a gain of between 1.3x and 5x in speed alone. There are other, less quantifiable benefits related to innovation, diversity of input, and opportunity cost as well. Generative AI can also produce particularly high-value types of content, such as code or formatted data, which normally require extensive expertise and/or training to create. It is also capable of conducting advanced-level reviews of complex, domain-specific materials, including legal briefs and even medical diagnoses.

In short, the latest generative AI services have proven that the capability is now at a tipping point and is ready to deliver value to the average worker in a widespread, democratized way in many situations.

Not so fast, say a chorus of cautionary voices pointing out the many underlying challenges. AI is a potent technology that cuts both ways, so a little advance preparation is required to use it while avoiding the potential issues, which generally are:

- Data bias: Generative AI models are only as good as the data they are trained on, and if the data contains inherent biases, the model will replicate those biases. This can lead to unintended consequences, such as perpetuating undesirable practices or excluding certain groups of people.

- Model interpretability: Generative AI models can be complex and their results difficult to interpret, which can make it challenging for businesses to understand how they arrived at a particular decision or recommendation. This lack of explainability can lead to mistrust or skepticism, particularly in high-stakes decision-making scenarios, although this is likely to be addressed over time.

- Cybersecurity threats: Like any technology that processes and stores sensitive data, generative AI models can be vulnerable to cyber threats such as hacking, data breaches, malicious attacks, or, more insidiously, input poisoning. Businesses must take appropriate measures to protect their workplace AI systems and their data from these risks.

- Legal and ethical considerations: The use of generative AI may raise legal and ethical concerns, particularly if it is used to make decisions that impact people's lives, such as hiring or lending decisions. Businesses must ensure that their use of AI aligns with legal and ethical standards and does not violate privacy or other rights. Others have noted that some generative AI systems in use today may violate privacy laws, and countries like Italy have already taken action over this.

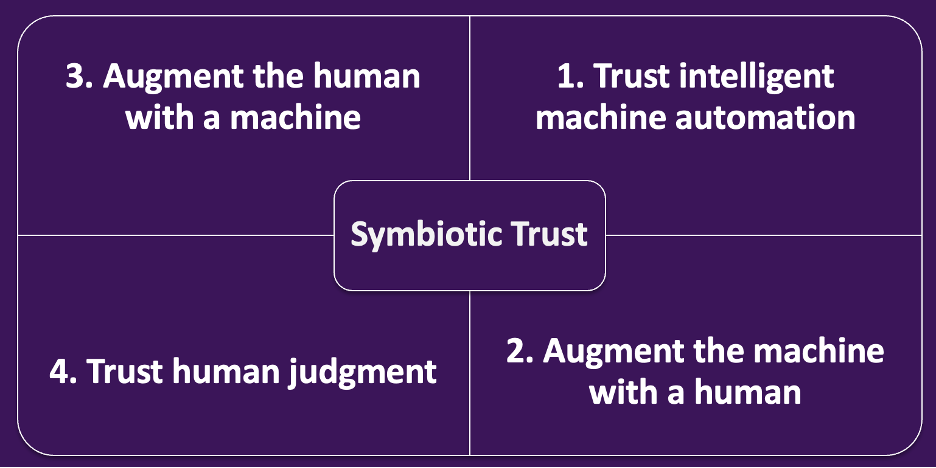

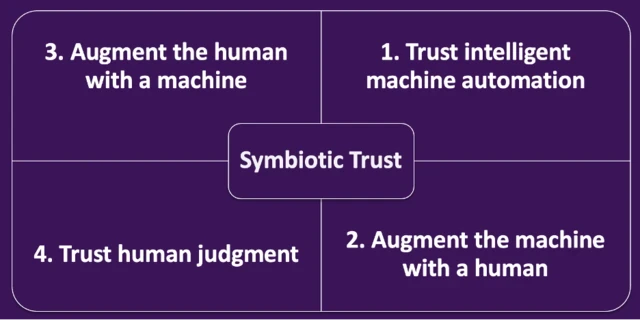

- Overreliance on AI: Overreliance on generative AI models over time can lead to a loss of human judgment and decision-making, which can be detrimental in situations where human intervention has to be resumed, yet the expertise is now lost. Businesses must ensure that they strike the right balance between the use of AI and human expertise.

- Maintenance and sustainability: Generative AI models require ongoing maintenance and updates to remain effective, which can be time-consuming and expensive. As businesses scale up their use of AI, they must also ensure that they have the resources and infrastructure to support their AI systems, especially as they begin to build their own foundation models for their enterprise knowledge. Ensuring that resource-intensive large language models don't consume excessive energy will be a significant issue as well.

Succeeding with General Purpose AI in the Workplace

However, the siren song of the benefits that AI can bring, everything from raw task productivity to wielding knowledge more strategically, will persist as more proof points emerge that today's generative AI solutions can genuinely deliver the goods. This will require organizations to begin putting the necessary operational, management, and governance safeguards in place as they climb the AI adoption maturity curve.

Some of the initial moves virtually all organizations should make this year as they situate generative AI in the digital workplace and roll it out to workers include:

- Clear AI guidelines and policies: Establish clear guidelines and policies on how the AI tools should be used, including guidelines around data privacy, security, and ethical considerations. Make sure these policies are communicated clearly to workers and are easily accessible.

- Education and training: Provide workers with comprehensive education and training on how to use the AI tools effectively and safely. This includes training on the technologies and solutions themselves, as well as on any relevant legal and ethical considerations that they are required to follow. Digital adoption platforms can also be particularly useful for broadly accelerating the situated use of AI tools at work.

- AI governance structures: Establish clear governance structures to oversee the use of AI tools within the organization. This includes assigning responsibility and providing budget for overseeing AI systems, establishing clear lines of communication, and ensuring that there are appropriate checks and balances in place.

- Oversight and monitoring: Establish processes for ongoing oversight and monitoring of the AI tools to ensure that they are being used by workers effectively and safely. This includes monitoring the performance of the AI systems, monitoring compliance with policies and guidelines, ensuring consistent models are being used across the organization, and monitoring for any potential biases or ethical concerns.

- Collaboration and feedback: Encourage collaboration and feedback among workers who are using the AI tools, as well as between workers and management. This includes creating channels for workers to provide feedback and suggestions for improvement, sharing best practices on using AI, and fostering a culture of collaboration and continuous learning around AI skills.

- Create clear ethical guidelines: Companies should establish clear ethical guidelines for the use of AI tools in the workplace, based on principles such as transparency, fairness, and accountability. These guidelines should be communicated to all workers who use the AI tools.

- Conduct ethical impact assessments: Before deploying AI tools, companies should conduct ethical impact assessments to identify and address potential ethical risks and ensure that the tools are aligned with responsible practices as well as the company's ethical principles and values.

- Monitor for AI bias: Companies should regularly monitor AI tools for bias, both during development and after deployment. This includes monitoring for bias in the data used to train the tools, as well as bias in the outcomes produced by the tools.

- Provide transparency: Companies should provide transparency around the use of AI tools, including how they work, how decisions are made, and how data is used. This includes providing explanations for the decisions made by AI tools and making these explanations understandable to workers and other stakeholders.

- Ensure compliance with regulations: Companies should ensure that the use of AI tools is compliant with all relevant regulations, including data privacy laws and regulations related to discrimination and bias across the AI tool portfolio.

While this list in its totality may seem a tall order, most organizations actually already have many of these pieces in place in various parts of the organization from departmental AI efforts. In addition, if they have developed an enterprise-wide ModelOps capability, it is a particularly good home for a large part of these AI oversight practices, in close conjunction with appropriate internal functions including human resources, legal, and compliance.

Related: See my exploration of ModelOps and how it helps organizations have a consistent, cost-effective AI capability with safety and ethics built-in

The Core Focus for Enabling AI in the Workplace: Foundation Models

Organizations looking at providing their workforce with AI-enabled tools will generally be looking at solutions that are powered by an AI model that is able to easily produce useful results without significant effort or training on the part of the worker. While the compliance, bias, and safety issues mentioned above may seem to be a significant hurdle, the reality is that most AI models already have basic protections and safety layers, while many of the others can be provided centrally through an appropriate AI or analytics Center of Excellence or ModelOps capability.

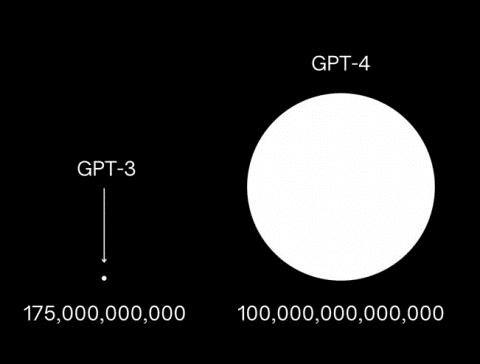

Large language models (LLMs) are particularly interesting as the basis for AI workplace tools because they are powerful foundation models that have been trained on a tremendous amount of open textual knowledge. Vendors of LLM-based work tools are generally going down one of several roads. The majority are building on an existing proprietary model that is specially tuned or optimized for certain behaviors or results they desire, while others allow model choice, enabling businesses to use language or foundation models they have already vetted. Some are also taking a middle road by starting with well-known, highly capable models such as OpenAI's GPT-4 and adding their own special sauce on top.

While there will always be AI tools for the workplace based on lesser-known and less-established AI frameworks and models, right now the most compelling results tend to come from the better-known LLMs. While this list is always changing, the leading foundation models currently known, with varying degrees of industry adoption, are (in alphabetical order):

It's also important to keep in mind that while some enterprises will seek to work directly with LLMs and other foundation models to create their own custom AI work tools, the majority of organizations are going to start with easy-to-use, business-grade apps that already have an AI model embedded within them. Nevertheless, knowing which AI models sit underneath which worker tools is very helpful in understanding their capabilities, supporting properties (like safety layers), and generally known risks.

The Leading AI Tools for Work

The following is a list of AI-enabled tools that primarily use some form of foundation model to synthesize or otherwise produce useful business content and insights. I had a tough choice to make on whether to include the full gamut of generative AI services including images, video, and code. But those are covered in sufficient detail elsewhere online and in any case, they focus more on specific creative roles.

Instead, I sought to focus on business-specific AI work tools based on foundation models that are primarily text-based and more horizontal in nature, and thus would be a good basis for a broad rollout to more types of workers.

Here are some of the more interesting solutions for AI tools that can be used broadly in work situations (in alphabetical order):

- Bard - Google's entry into the LLM-based knowledge assistant market.

- ChatGPT - The general purpose knowledge assistant that started the current generative AI craze.

- ChatSpot - Content and research assistant by Hubspot for marketing, sales, and operations.

- Docugami - AI for business document management that uses a specialized business document foundation model.

- Einstein GPT - Content, insights, and interaction assistant for the Salesforce platform.

- Google Workspace AI Features - Google has added a range of generative AI features to their productivity platform.

- HyperWrite - A business writing assistant that accelerates content creation.

- Jasper for Business - A smart writing creator that helps keep workers on-brand for external content.

- Microsoft 365 Copilot/Business Chat - AI-assisted content creation and contextual user data-powered business chatbots.

- Notably - An AI-assisted business research platform.

- Notion AI - Another business-ready entry in the popular content and writing assistant category.

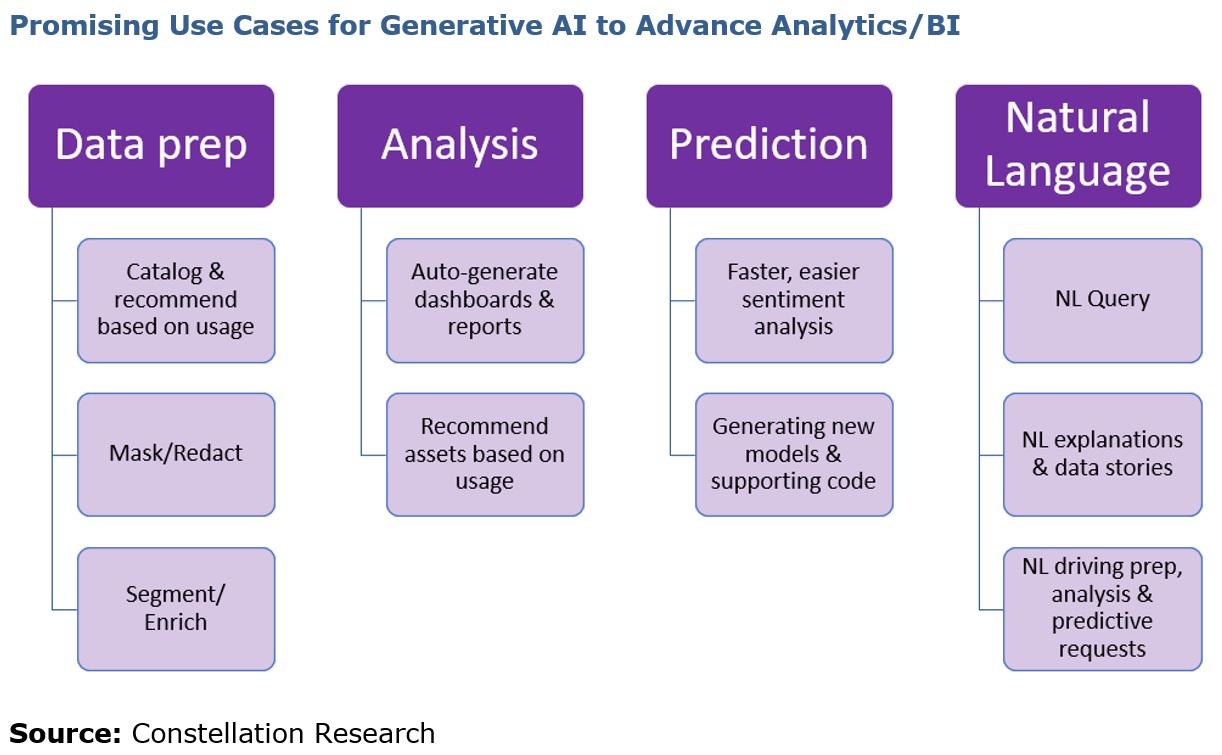

- Olli - Enterprise-grade analytics/BI dashboards created using AI.

- Poe by Quora - A knowledge assistant chatbot that uses Anthropic's AI models.

- Rationale - A business decision-making tool that uses AI.

- Seenapse - An AI-assisted business ideation tool.

- Tome - An AI-powered tool for creating PowerPoint presentations.

- WordTune - A general purpose writing assistant.

- Writer - An AI-based writing assistant.

As you can see, writing assistants tend to dominate AI tools for work, since they are generally the easiest to create using LLMs, as well as the most general-purpose. However, a growing number of AI tools cover many other aspects of generative work as well, some of which you can see emerging in the list above.

In future coverage for AI and the Future of Work, I'll be exploring vertical AI solutions based on LLMs/foundation models for legal, HR, healthcare, financial services, and other industries/functions. Finally, if you have an AI for business startup that a) primarily uses a foundation model in how it works, b) has paying enterprise customers, and c) you would like to be added to this list, please send me a note. You are welcome to contact me for AI-in-the-workplace vendor briefings or client advisory as well.

My Related Research

How Leading Digital Workplace Vendors Are Enabling Hybrid Work

Every Worker is a Digital Artisan of Their Career Now

How to Think About and Prepare for Hybrid Work

Why Community Belongs at the Center of Today’s Remote Work Strategies

Reimagining the Post-Pandemic Employee Experience

It’s Time to Think About the Post-2023 Employee Experience

Research Report: Building a Next-Generation Employee Experience

Revisiting How to Cultivate Connected Organizations in an Age of Coronavirus

How Work Will Evolve in a Digital Post-Pandemic Society

A Checklist for a Modern Core Digital Workplace and/or Intranet

Creating the Modern Digital Workplace and Employee Experience

The Challenging State of Employee Experience and Digital Workplace Today

The Most Vital Hybrid Work Management Skill: Network Leadership

In early 2023 I joined a rogue gaggle of industry analysts to trek to Zoho’s campus just outside of Chennai for an event dubbed Truly Zoho. Panel after panel of Zoho leaders shared an insider’s view and we analysts tried to accurately and fairly describe what we were hearing, seeing and experiencing. I’ve read article after article beautifully sharing the experience…but for some reason I was struggling. It wasn’t because there wasn’t plenty to share. I was struggling to document things in a way that was fair, accurate and, well, truly about Zoho.

and core to the business value of every product and offering. When a lack of opportunity and R&D is holding a country back, what next? You invest in rural revival to bring globally in-demand skills and innovation to India, despite the world's assumption that innovation only happens in places like Silicon Valley. One early and lasting experiment: finding a new way to identify, educate and train the next generation of experimenters. For 17 years, Zoho Schools of Learning (informally called Zoho University by some) has seen over 1,400 graduates advance across technology, design and business. Built as an alternative to traditional college or university programs that can often exclude students from far-flung rural villages across India, Zoho Schools focuses on the often-overlooked student who may not have the means to attend university but has the curiosity and will to learn and experiment.

Sacrifice profit??? Zoho’s leaders decided to sacrifice growth to make a bold promise: nobody would be laid off as the world grappled with the threat of global financial recession and decline. For months we have seen headline after headline announcing layoffs. In order to appease Wall Street, investors, backers or shareholders, companies have made tough decisions to lay people off, cut back on research investments and implement austerity measures to keep ledgers in the black and ensure growth percentages did not fall. Zoho decided that the growth velocity they had consistently enjoyed over several years could slow if people could be prioritized.