The generative AI buildout, overcapacity and what history tells us

Spending on generative AI infrastructure is accelerating at a breakneck pace, but the "build it and they will come" approach may lead to overcapacity or some serious indigestion.

That is the argument from MIT's Daron Acemoglu and Goldman Sachs Research's Jim Covello. In a recent Goldman Sachs podcast, the two were skeptical about whether the $1 trillion expected to be spent on AI capex is going to pay off. And there are some incremental items that point to more skepticism about the AI buildout.

Overcapacity ahead?

Covello noted that the internet buildout was huge and gave rise to companies and services like Uber that arrived later. The issue is that this buildout didn't pay off big for about 30 years. "It ends badly when you build things the world is not ready for," said Covello. "When you wind up with a whole bunch of capacity because you build something that isn't going to get utilized, it takes a while to grow into that supply."

He said: "One of the biggest lessons I've learned over 25 years here is bubbles take a long time to burst. So, the build of this could go on a long time before we see any kind of manifestation of the problem. I'm very respectful of how long they can go on."

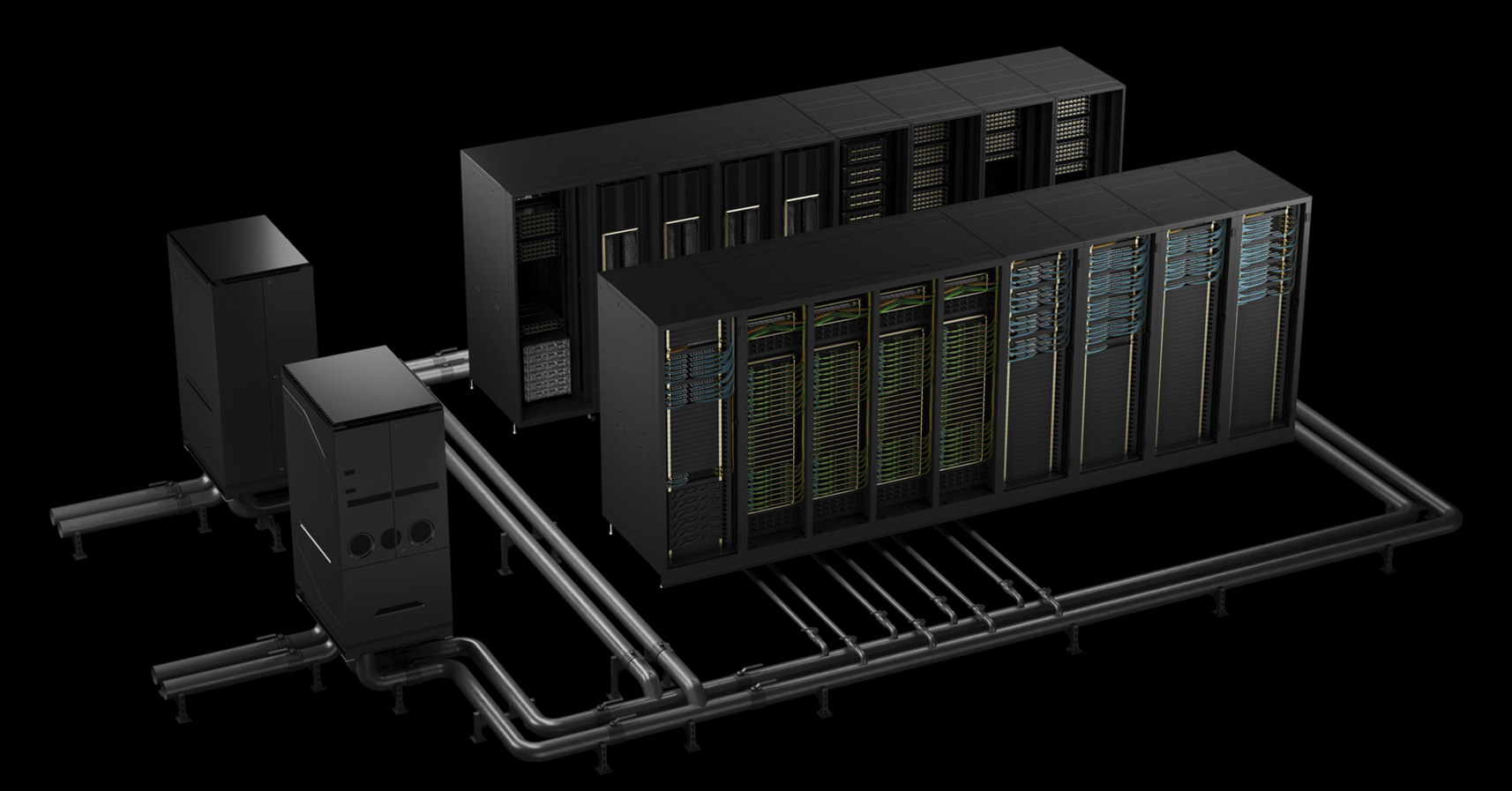

Clearly, the AI buildout is underway. Alphabet, Amazon Web Services, Oracle and Microsoft are all spending billions to build genAI capacity. Companies like OpenAI and Anthropic are also spending. Most of the genAI infrastructure profits are going to Nvidia for now.

This post first appeared in the Constellation Insight newsletter, which features bespoke content weekly and is brought to you by Hitachi Vantara.

This buildout could lead to indigestion should genAI fail to deliver the returns. "Very few companies are actually saving any money at all doing this," said Covello. "How long do we have to go before people start to really question?"

To hear Covello tell it, we're clearly in the FOMO stage with genAI. It's likely that genAI won't fizzle like the metaverse, but you never know.

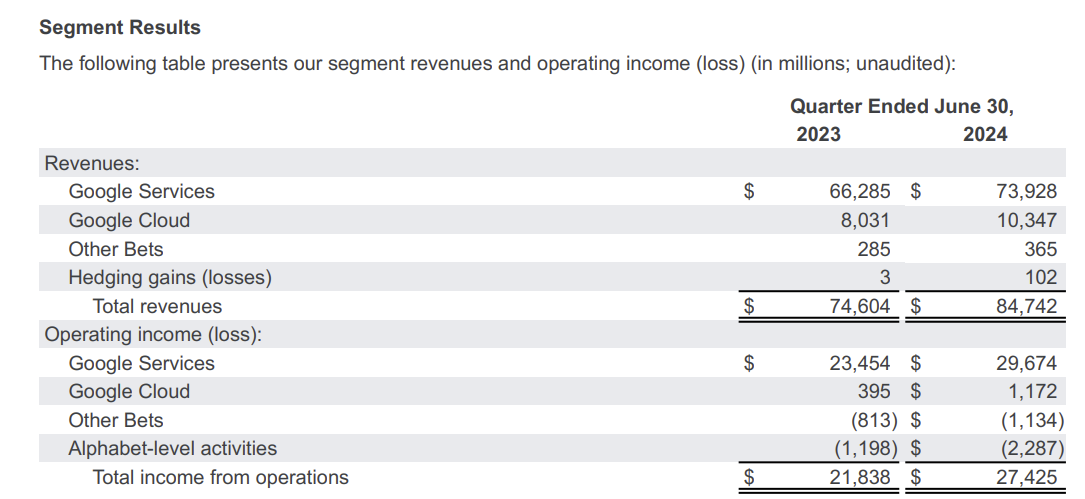

For now, genAI infrastructure spending isn't going to ease. Alphabet CEO Sundar Pichai crystallized the FOMO-fueled AI infrastructure boom when he answered a question about the company's genAI capital spending. Speaking on Alphabet’s second quarter earnings call, Pichai said:

"We are at an early stage of what I view as a very transformative era. When we go through a curve like this, the risk of under-investing is dramatically greater than the risk of over-investing. Even in scenarios where it turns out that we are over-investing the infrastructure is widely useful for us. I think not investing to be at the frontier definitely has much more significant downside. Having said that, we obsess around every dollar we put in. Our teams work super hard and I'm proud of the efficiency work, be it optimization of hardware, software, model deployment across our fleet."

Covello said: "AI is pie in the sky big picture. If you build it, they will come, just you got to trust this because technology always evolves and we're a couple of years into this. And there's not a single thing that this has been used for that's cost effective at this point. I think there's an unbelievable misunderstanding of what the technology can do today."

The fundamental issue with genAI is that the buildout starts from a different point. E-commerce started out cheaper. Mobile turned out to be cheaper. The internet made everything cheaper. "With AI, you're starting from a very high-cost base. I think there's a lot of revisionist history about how things start expensive and get cheaper. Nobody started with a trillion dollars," said Covello.

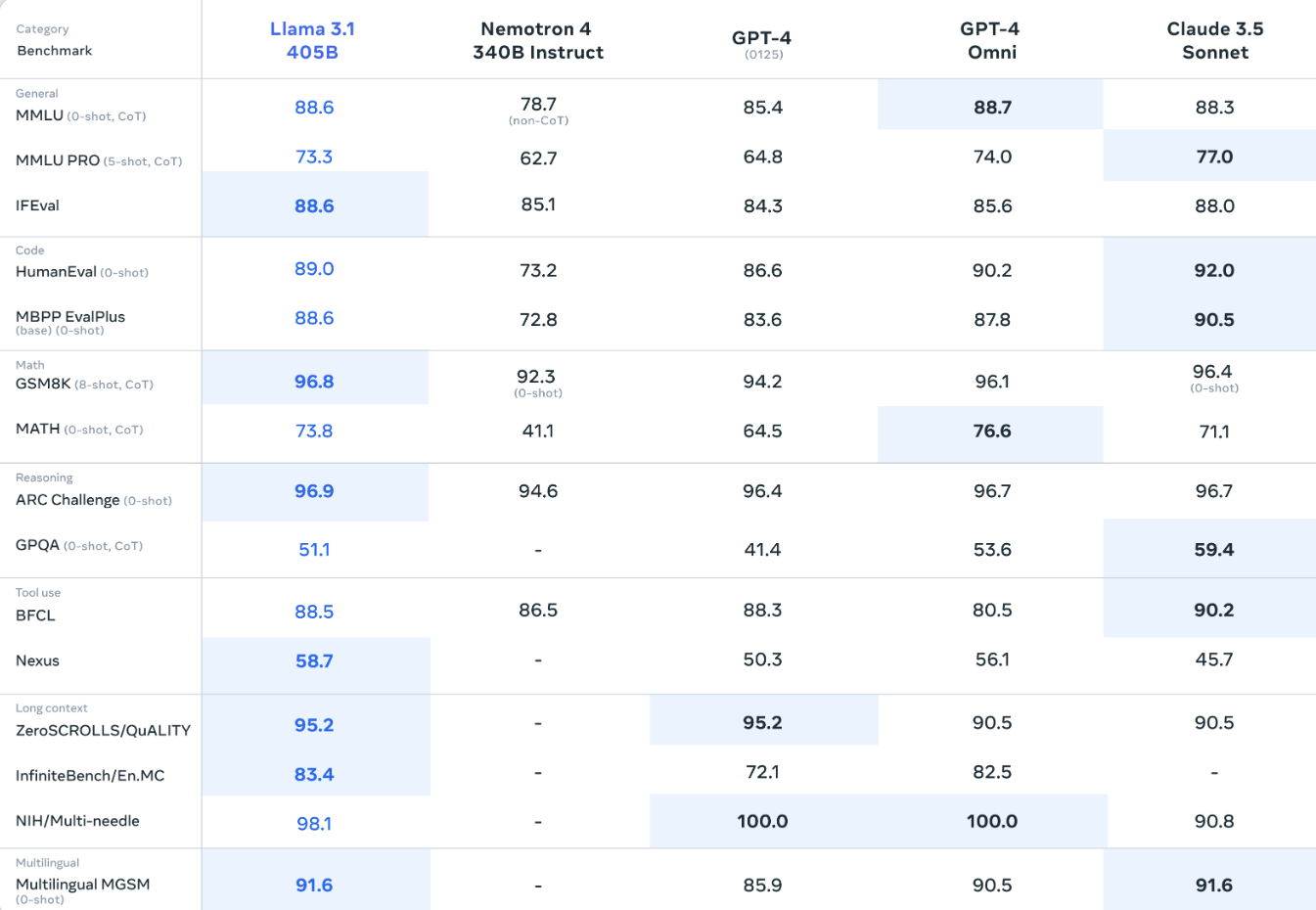

GenAI can get cheaper, but first Nvidia needs real competition. "Why is AI so expensive? It's really the GPU costs," said Covello. "I think the big determination in whether AI costs ever become affordable is whether there are other players that can come in and provide chips alongside Nvidia."

The economics

Acemoglu recently penned a research paper that questioned the economic benefit from genAI. Acemoglu said genAI economic prognostications depend on how quickly the technology can be integrated into an organization. Some genAI projects will boost productivity. Other companies will find that genAI was a waste of time and money.

The big issue is time horizon. Acemoglu said most genAI economic prognostications, which range from a 1.5% to 3.4% boost to average annual GDP over the next decade, have too many uncertainties. His paper concludes that AI and productivity improvements at the task level will increase total factor productivity by 0.71% over 10 years. That amount is nontrivial but too modest to justify the genAI building boom today. You can find plenty of folks who disagree:

- Enterprises start to harvest AI-driven exponential efficiency efforts

- GenAI trickledown economics: Where the enterprise stands today

- Generative AI spending will move beyond the IT budget
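To put the figures above on a comparable footing, here is a minimal arithmetic sketch. It assumes the 1.5% to 3.4% forecasts are annual growth boosts that compound over 10 years and that the 0.71% figure is a cumulative 10-year total; the function names are illustrative, and GDP and total factor productivity are different measures, so this is a rough sense of scale rather than a like-for-like comparison.

```python
def annualized_rate(cumulative_pct: float, years: int) -> float:
    """Convert a cumulative percentage gain over `years` into a compound annual rate (percent)."""
    return ((1 + cumulative_pct / 100) ** (1 / years) - 1) * 100


def cumulative_gain(annual_pct: float, years: int) -> float:
    """Compound an annual percentage gain over `years` into a cumulative gain (percent)."""
    return ((1 + annual_pct / 100) ** years - 1) * 100


YEARS = 10

# Acemoglu's estimate: 0.71% total factor productivity gain over 10 years (cumulative).
tfp_annual = annualized_rate(0.71, YEARS)

# Assumption: the optimistic forecasts are annual boosts that compound for a decade.
low_cumulative = cumulative_gain(1.5, YEARS)
high_cumulative = cumulative_gain(3.4, YEARS)

print(f"Acemoglu TFP estimate, annualized: {tfp_annual:.3f}% per year")
print(f"1.5% annual boost, compounded over {YEARS} years: {low_cumulative:.1f}%")
print(f"3.4% annual boost, compounded over {YEARS} years: {high_cumulative:.1f}%")
```

Under these assumptions, the gap between the estimates is more than an order of magnitude, which is why the choice of time horizon and forecast matters so much to the investment case.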

Acemoglu said: "I think economic theory actually puts a lot of discipline on how some of these effects can work once we leave out those things like amazing new products coming online, something much better than silicon coming, for example, in five years. That's good. That happens. All right, that's big. But once you leave those out, the way that you're going to get productivity effects is you look at what fraction of the things that we do in the production process are impacted and how that impact is going to change our productivity or reduce our costs."

Acemoglu's take is that genAI isn't going to be big enough to replace humans in the short run. Transport, manufacturing, utilities and other industries have people interacting with the real world. GenAI can offload some of that work, but not much in the next few years. Pure mental tasks can be affected, and the work replaced isn't trivial, but it's not huge, he said.

In 10 years, genAI may be cost effective and move GDP, but a lot has to happen first. Acemoglu said: "I am less convinced that we're going to get there very quickly by just throwing more GPU capacity. Any estimate of what can be achieved with any time horizon is going to be uncertain. There's a view among some people in the industry that you double the amount of data, you double the amount of compute capacity, same number of GPU units or their processing power, and you're going to double the capacities of the AI model."

There are a few issues with scaling genAI and driving this alleged productivity boom. For starters, it's unclear what doubling capabilities of genAI will do in economic terms. Meanwhile, more data doesn't matter if it doesn't improve predictions and help you solve problems. It's also not clear whether data or compute will be cheap enough to really move productivity.

Acemoglu said: "I think there is the possibility that there could be very severe limits to where we can go with the current architecture. Human cognition doesn't just rely on a single mode. It involves many different types of cognitive processes, different types of sensory inputs, different types of reasoning. The current architecture of large language models has proven to be more impressive than many people would have predicted, but I think it still takes a big leap of faith. There are all sorts of uncertainties."

In the end, genAI's march to super intelligence and a productivity boom depends on time horizon, Acemoglu said.

A Sequoia report makes you go hmm

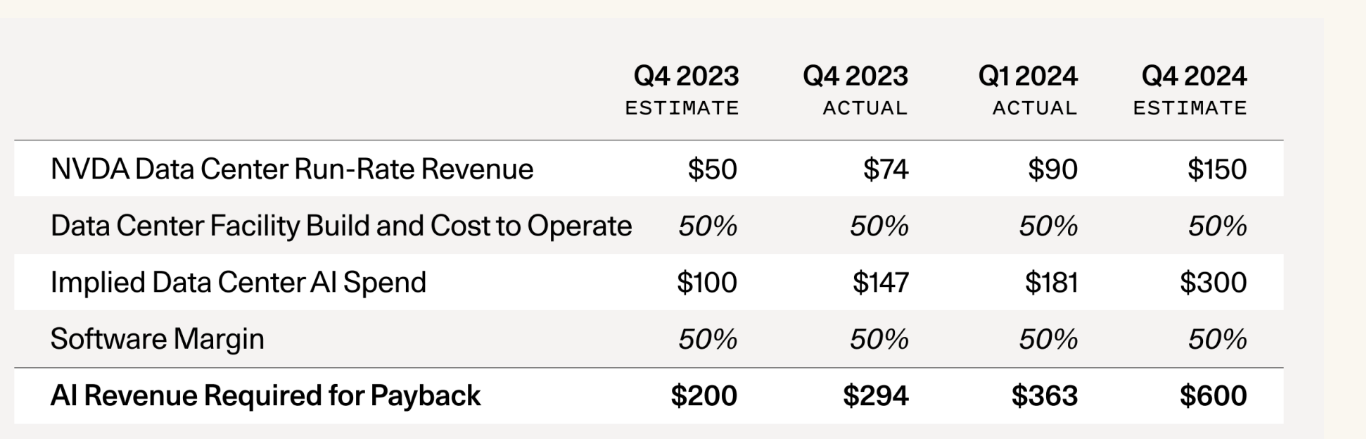

In June, venture capital firm Sequoia chimed in on the AI buildout. Sequoia partner David Cahn noted the big gap between revenue expectations implied by the AI infrastructure buildout and actual revenue growth, which Cahn used as a proxy for end user value.

Cahn first raised the issue in September 2023, but has updated his argument since conditions have changed. He noted that the supply shortage for GPUs has been "almost entirely eliminated."

In addition, GPU stockpiles at large cloud providers are growing. If these stockpiles grow enough, demand will decrease--and so will Nvidia's valuation. Cahn's other concern is that most of the direct AI revenue is going to OpenAI. Microsoft, Google, Apple and Meta need a big leap in direct AI revenue. Here’s a look at Sequoia’s payback figures.

Cahn said: "Speculative frenzies are part of technology, and so they are not something to be afraid of. Those who remain level-headed through this moment have the chance to build extremely important companies. But we need to make sure not to believe in the delusion that has now spread from Silicon Valley to the rest of the country, and indeed the world. That delusion says that we’re all going to get rich quick, because AGI is coming tomorrow, and we all need to stockpile the only valuable resource, which is GPUs."

My take

First, this topic has been on my mind for a few months. The data center buildout, the machinations of bitcoin miners suddenly pivoting to AI with a new narrative and the lack of trickle-down economics beyond Nvidia have given me pause. After all, I've seen this movie before with the dotcom boom and bust, the 2008 financial crisis, the COVID-19 pandemic component panic and the current housing bubble.

All of these bubble cycles include a gold rush that leads to too much capacity. This capacity usually pans out in the long run, but squeezes investors who aren't first movers.

Nvidia is priced for perfection and it's only logical that there will be some indigestion ahead as the genAI supply chain starts optimizing for costs. There's a rush to buy GPUs now, but you can only build data centers so fast. Nvidia GPUs have become their own asset class, but competition looms.

The big genAI spenders are touting massive capex budgets that may not be tolerated by Wall Street forever. This spending is fine until sales growth and/or productivity gains slow. Spending on genAI will always work--until it doesn't.

And perhaps the biggest reason to play contrarian about the genAI boom: I've heard cashiers, grandparents and randoms on the street all talking about Nvidia and genAI--and I don't live in Silicon Valley.

In the end, it's worth paying attention to these genAI contrarians but keep in mind that bubbles last longer than you think. Also keep in mind no one rings a bell at the top, but there are signs worth watching.

Insights Archive

- Enterprises start to harvest AI-driven exponential efficiency efforts

- GenAI may be the new UI for enterprise software

- Education tech in turmoil amid genAI: Why consolidation is next

- 14 takeaways from genAI initiatives midway through 2024

- OpenAI and Microsoft: Symbiotic or future frenemies?

- AI infrastructure is the new innovation hotbed with smartphone-like release cadence

- Don't forget the non-technical, human costs to generative AI projects

- GenAI boom eludes enterprise software...for now

- The real reason Windows AI PCs will be interesting

- Copilot, genAI agent implementations are about to get complicated

- Generative AI spending will move beyond the IT budget

- Financial services firms see genAI use cases leading to efficiency boom