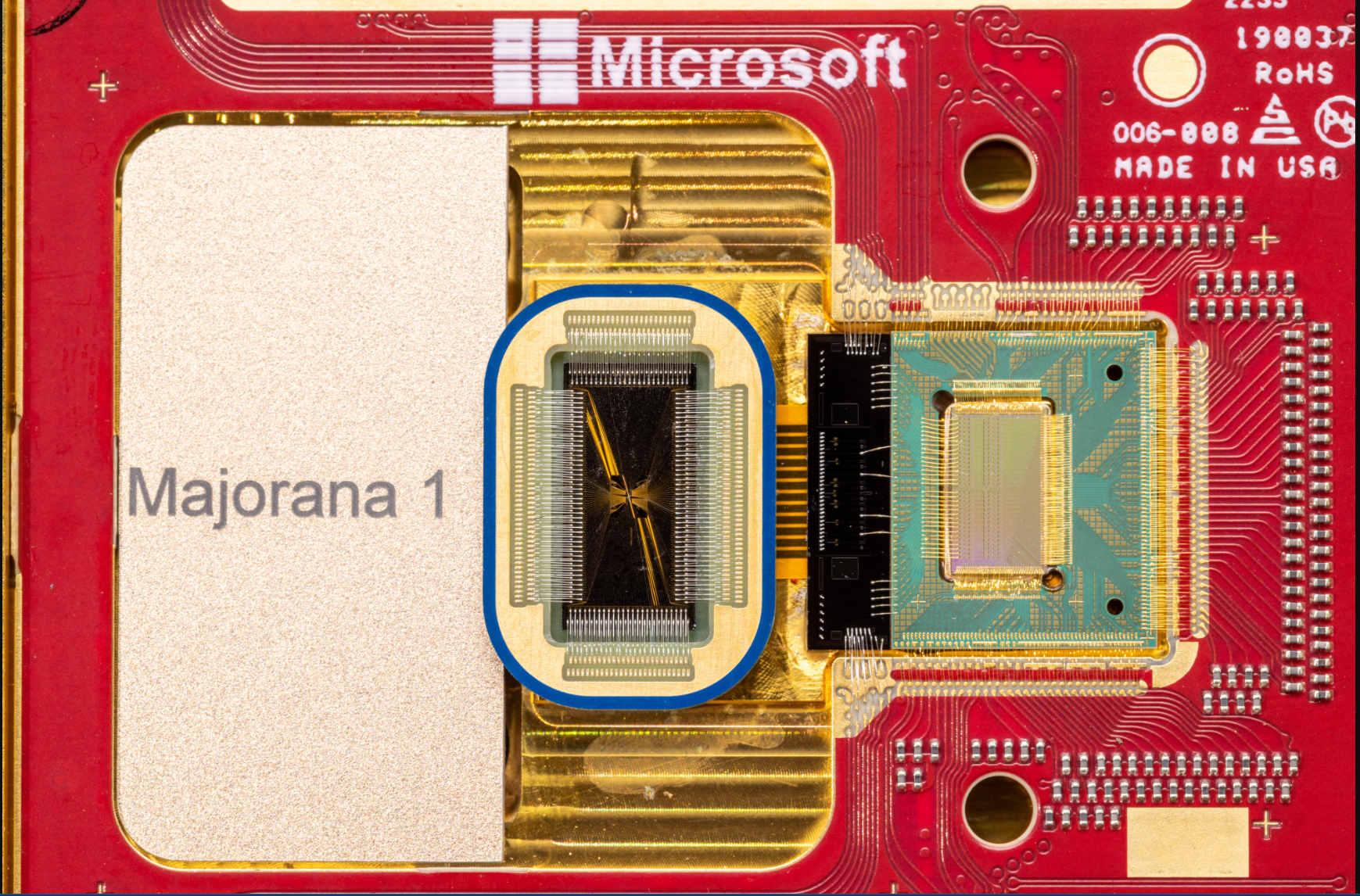

Microsoft unveils Majorana 1, aims to scale quantum computing

Microsoft launched Majorana 1, a quantum computing chip with a Topological Core architecture.

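Topological quantum computing relies on exotic quasiparticles called "anyons," which can be arranged into patterns that encode qubits. Microsoft likens the approach to the role semiconductors played in classical computing. A topological superconductor, which Microsoft calls a "topoconductor," is a material that can create a new state of matter. Microsoft harnesses that state to create a more stable qubit that can be digitally controlled.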

According to Microsoft, Majorana 1 is built on a breakthrough material that can observe and control Majorana particles to create more reliable and scalable qubits. Chetan Nayak, a Microsoft Technical Fellow, said the goal for Majorana 1 was to invent "the transistor for the quantum age."

Microsoft is betting that Majorana 1 will be a more fault-tolerant way to scale quantum computing. According to Microsoft, the architecture used in Majorana 1 creates a path to fit 1 million qubits on a chip that fits in the palm of a hand.

There are various flavors of quantum computing in addition to the approach Microsoft is using:

- Superconducting qubits are seen as general-purpose quantum computing options. Vendors in this category include IBM, Google and Rigetti Computing.

- Trapped-ion quantum computing offers high fidelity and long coherence times. IonQ is a major player in this category, along with Quantinuum, which was created by the merger of Honeywell's quantum unit and Cambridge Quantum.

- Neutral-atom quantum computing has the potential to scale better; QuEra is a player here.

- Quantum annealing is designed for optimization problems rather than general-purpose computing, and D-Wave has championed this approach.

Microsoft said Majorana 1 has eight topological qubits on a chip and can scale from there. Microsoft is building its own hardware as well as partnering with the likes of Quantinuum and Atom Computing.


Years or decades?

Quantum computing has been caught in a debate over whether it will become useful in years or decades. Nvidia CEO Jensen Huang said in January that quantum computing was 15 to 30 years away from being useful. Microsoft said its approach will scale quantum computing "within years, not decades."


Microsoft outlined Majorana 1 in a paper in Nature.

Microsoft said that, under a program with DARPA, it will build the world's first fault-tolerant prototype based on topological qubits.

Nayak said Microsoft's plan now revolves around "making more complex devices," starting with Majorana 1, its first QPU with a topological core. Nayak said Microsoft "can scale to a million qubits on a chip the size of a watch face."

Given Microsoft's developments, Quantinuum's move to combine quantum computing with generative AI, and various hybrid HPC and quantum efforts, enterprises need to start preparing potential use cases.

Indeed, cloud vendors, which will deliver quantum instances, have been busy setting up services to get enterprises quantum-ready. Judging by these milestones, quantum computing is developing quickly.

Constellation Research analyst Holger Mueller said:

"Right when you think quantum computing approaches were set we have a new approach and vocabulary to learn with topological qubits and Majorana. It is good to see that alternate approaches are feasible, promising and could accelerate the path to quantum, but it does leave a few question marks for other quantum vendors scaling out alternate approaches. What will matter for CxOs will be a consistent software layer across platforms to traverse quantum platforms. But first we need to see the viable quantum platforms."

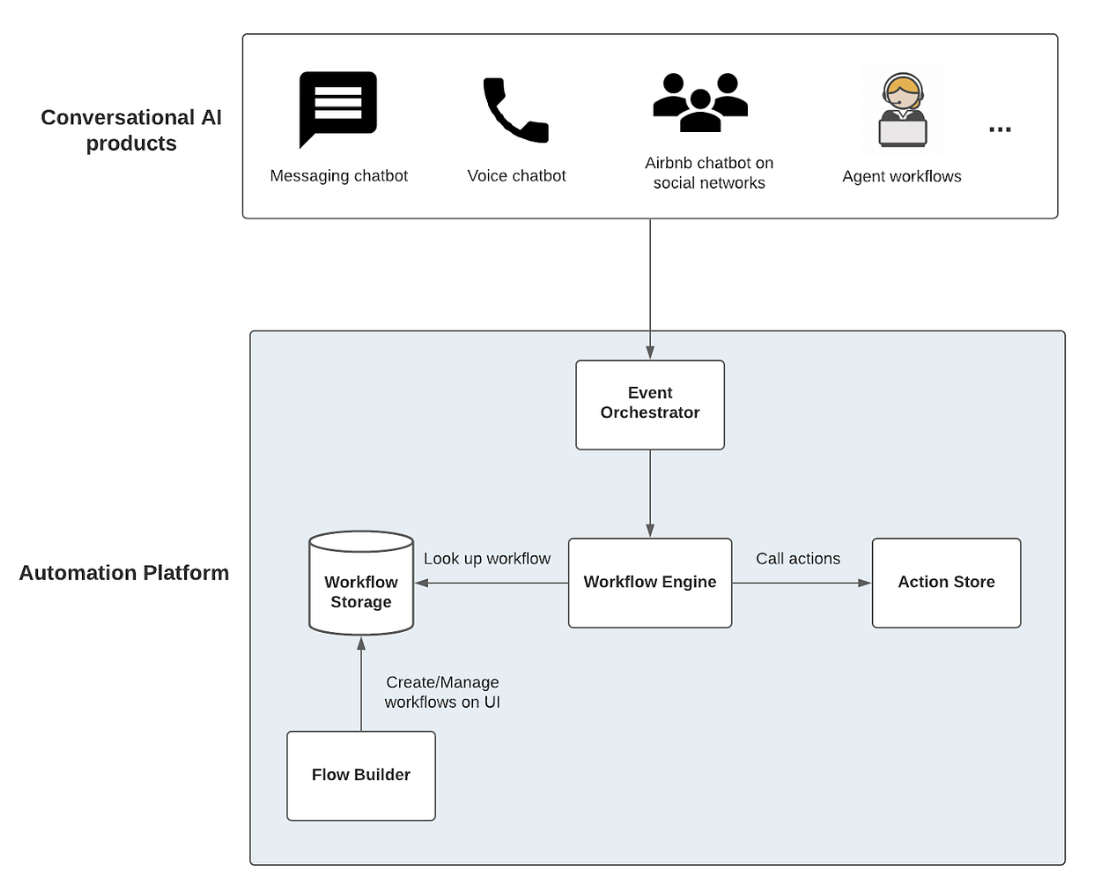

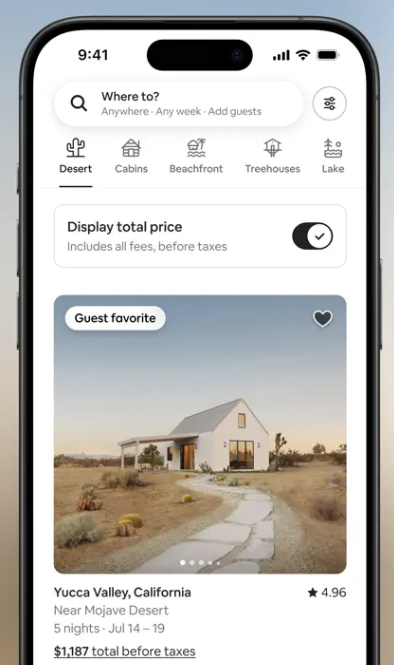

