Atlassian: A look at its system of work strategy, enterprise uptake, AI approach

Atlassian is exiting its second quarter on a $5 billion annual revenue run rate as its "System of Work" strategy is landing large enterprises that want to connect their technology and business teams.

The company's goal is to reach a $10 billion annual revenue run rate, and its ability to leverage AI across its platform is resonating with large enterprises. Atlassian landed a record number of deals with more than $1 million in annual contract value in the second quarter.

Atlassian CEO Mike Cannon-Brookes said on an earnings conference call:

"Our cloud platform with AI threaded throughout is delivering. With more than 20 years of data and insights on how software, IT, and business teams plan, track, and deliver work, we're uniquely positioned to help teams across every organization on the planet work better together. Today, more than 1 million monthly active users are utilizing our Atlassian Intelligence features to unlock enterprise knowledge, supercharge workflows, and accelerate their team collaboration. We're seeing the number of AI interactions increase more than 25x year-over-year."

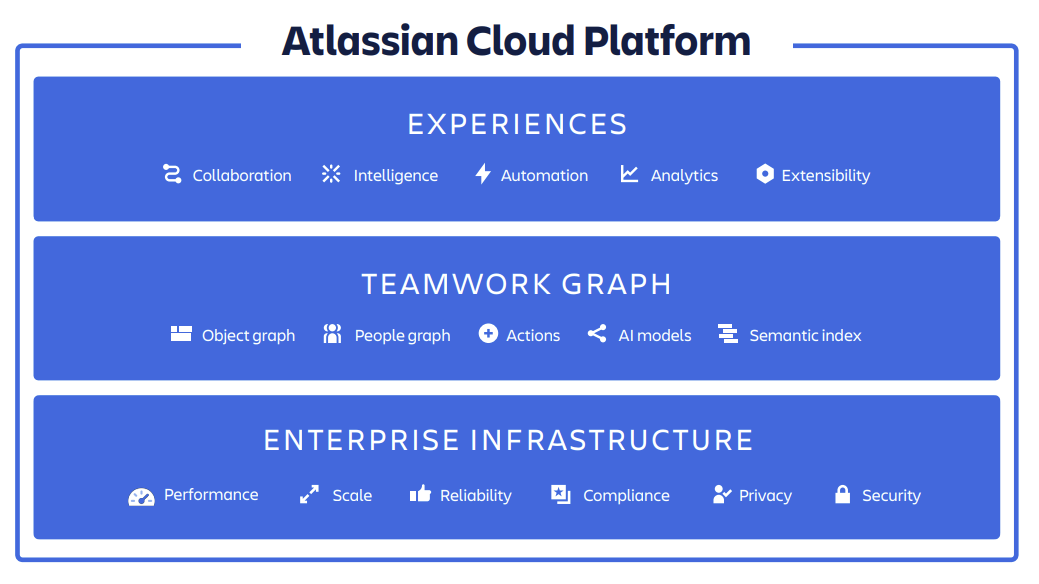

Atlassian reported a second quarter net loss of $38.2 million, or 15 cents a share, on revenue of $1.29 billion, up 21% from a year ago. Non-GAAP second quarter earnings were 96 cents a share, 20 cents ahead of expectations.

As for the outlook, Atlassian projected third quarter revenue of $1.34 billion to $1.35 billion with cloud revenue growth of 23.5%. For fiscal 2025, Atlassian projected revenue growth of 18.5% to 19% with cloud revenue growth of 26.5%.

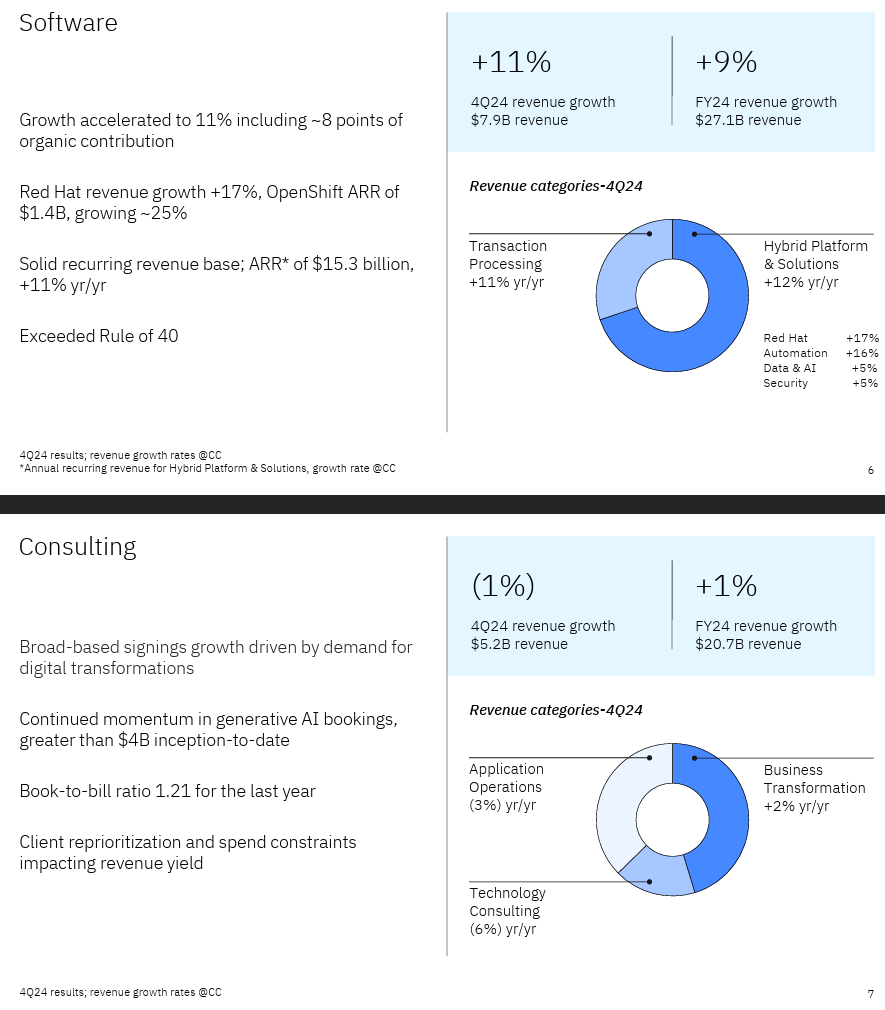

The strategy

Atlassian's strategy is to expand broadly into a system of work. This work system has the potential to break down information silos and align software development, service management and work management to create what could be referred to as an alignment engine.

In a nutshell, Atlassian wants to be the system enterprises use to plan, track and execute every collaboration workflow wall-to-wall.

Here's Atlassian's plan, based on its Investor Day last year. The company said:

"As business becomes more complex, we’re seeing a rapid rise in teams like HR, marketing, and finance working with their counterparts in development and IT. Management wants to see a single system of work, teams want seamless collaboration."

This is a somewhat different spin than the work operating system category occupied by Monday.com, Asana and Smartsheet, but the efforts rhyme. Like ServiceNow, Atlassian sees its platform as something that can go well beyond software development and service management into every corporate function. In fact, Atlassian sees a $67 billion total addressable market, with $18 billion of that sum sitting in its existing customer base.

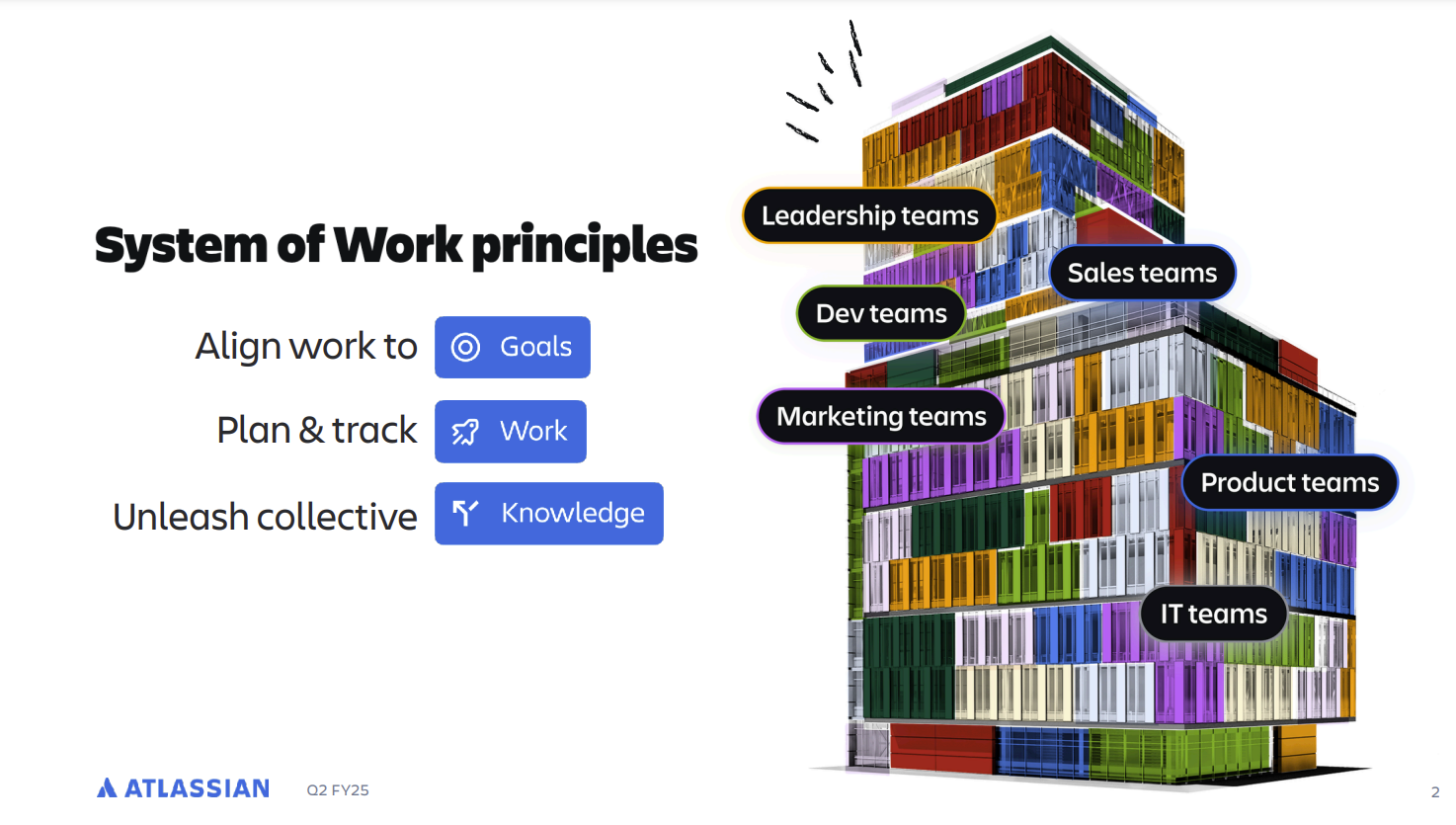

Atlassian is betting it can win because its software team collaboration tools put it in pole position for digital transformation and AI. The company can also land and expand. More importantly, Atlassian has one platform where it can leverage AI across its products, plus 1,800 marketplace partners to extend it.

On the product front, Atlassian is doing the following:

- Doubling down on IT service management with Jira Service Management.

- Moving its entire customer base to the cloud. In the second quarter, cloud revenue was $847 million, up 30%. Data Center revenue, which comes from deployments that enterprises manage themselves, was $362.3 million, up 32%.

- Growing large enterprise accounts.

- Layering AI and Atlassian Intelligence throughout the platform. Atlassian is in a natural position to turbocharge software team processes with AI.

- Using Rovo, a human-AI collaboration tool that distributes knowledge to teams and their workflows, to land more cross-functional enterprise teams. Rovo is Atlassian's AI agent play, and it's early days for uptake. Atlassian said most customers are still in the Rovo proof-of-concept stage.

Can Atlassian move upstream?

Cannon-Brookes said in a shareholder letter that 10% of Atlassian's revenue in the second quarter was from large customers. The big question on the earnings call was whether Atlassian can expand in large enterprises.

According to Cannon-Brookes, the combination of Jira Work Management and Jira Software gives the company the opportunity to touch more employees and use cases in large companies.

Atlassian is betting that large enterprises will gravitate toward platforms that can enable innovation.

Cannon-Brookes said:

"The CIOs and CEOs I speak to continue to want to form a deeper strategic relationship with Atlassian, not because of any single product we have, but because of our R&D speed, the innovation we're delivering. AI is just the latest example of that, but also the breadth of the platform, the amount of things they can see it improving from their goals all the way down to the day-to-day work that they do."

Atlassian did note some uncertainty in the macroeconomic environment but said the risks are manageable.

Atlassian on AI agent hype, multiple foundation models

Another open question is how large enterprises will react to the barrage of AI agents being pitched at them. Atlassian's Rovo is also in that mix.

Cannon-Brookes was asked about the competitive dynamic in agentic AI. He had some interesting things to say.

"There's no doubt we've been through these technology transformations before. And when we go through them, you run through the hype cycle up and down, and there are certain words that mean something and then mean nothing and then end up meaning something. I think agents is probably squarely in that camp. The word is used everywhere suddenly for all sorts of things that I would argue aren't agents, but you can't control how the world uses a word."

Atlassian's definition of an AI agent goes like this:

- AI agents have a goal, are aimed at outcomes, and have "some sort of personality."

- They have a set of knowledge and can take action.

- There are control parameters.

- AI agents act like a virtual teammate.

"Atlassian agents are unique in that basically anywhere that a human being can be used in our software, an agent can do the same sorts of things. You can assign them issues, you can give them certain sets of knowledge, you can give them permission to certain actions. So that's pretty differentiated to other people who are building either a chatbot or fundamentally just something they're calling an agent," said Cannon-Brookes.

The ultimate barometer for enterprise AI vendors is their ability to pivot R&D quickly. Cannon-Brookes said the AI market is moving fast and vendors have to move with it.

"Our ability to build, deploy, get customer feedback and learn in a loop is really important in order to navigate these transitions," said Cannon-Brookes. "Anyone who tells you they know where this is going to be three years from now is a fool. What I can tell you is that we have to be able to learn really fast and move really fast and take the latest and greatest innovations and deploy them and get them to customers quickly. That is the best strategic path to gain that value over time."

In addition, enterprises will need vendors that rely on multiple models. Agentic AI is going to depend on a series of different models. "Atlassian Intelligence needs to be able to keep adapting modern models as fast as possible. Again, we're running more than 30 models from more than seven different vendors today. We continue to evaluate new models," said Cannon-Brookes. "It's also about all the data you have, the quality, the ability to search and ability to connect it."

"Ultimately, customers and users don't use an AI model, they use a piece of software, they use some high-level technology to interact with an agent," he added.
