Editor in Chief of Constellation Insights

Constellation Research

About Larry Dignan:

Dignan was most recently Celonis Media’s Editor-in-Chief, where he sat at the intersection of media and marketing. He is the former Editor-in-Chief of ZDNet and has covered the technology industry and transformation trends for more than two decades, publishing articles in CNET, Knowledge@Wharton, Wall Street Week, Interactive Week, The New York Times, and Financial Planning.

He is also an Adjunct Professor at Temple University and a member of the Advisory Board for The Fox Business School's Institute of Business and Information Technology.

Constellation Insights does the following:

Cover the buy side and sell side of enterprise tech with news, analysis, profiles, interviews, and event coverage of vendors, as well as Constellation Research's community and…


AWS customers are increasingly focused on using cloud management approaches on-premises, optimizing GPU costs and modernizing mainframe infrastructure.

Those were some of the customer takeaways from AWS re:Invent 2024's first day. AWS' news flow starts in earnest on Tuesday so it's worth highlighting a few tales from the buy side today.

Merck on using cloud approaches on-prem

Merck's Jeff Feist, Executive Director, Hosting Solutions, is in charge of the pharma giant's cloud and on-premises environments. Feist said the company wants to simplify its hybrid infrastructure and lower total cost of ownership.

Feist also added that the company is focused on transformation with an effort called BlueSky.

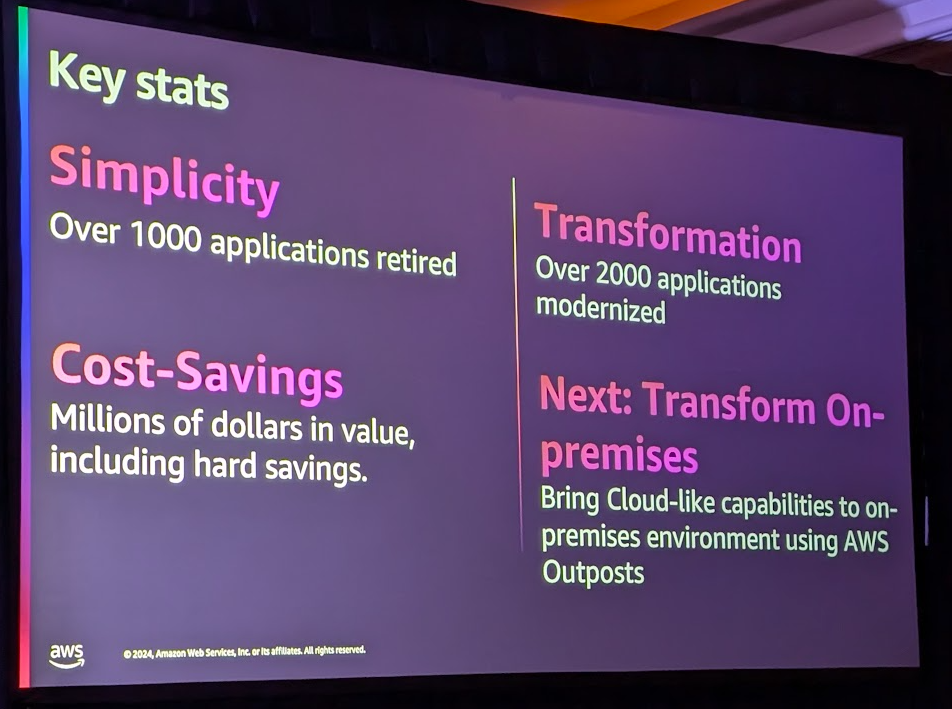

"My role has been focusing on the landing zones, developing automated governance controls, making sure that we have a safe, secure and agile environment to leverage the benefits that cloud offers," said Feist. BlueSky includes the following:

- Roll out infrastructure as code, automated deployments and APIs with software defined configurations.

- Establish a culture that's agile. "It's probably more important than the technology itself," said Feist. "We need the culture of the company to embrace the model cloud way of working."

- Training.

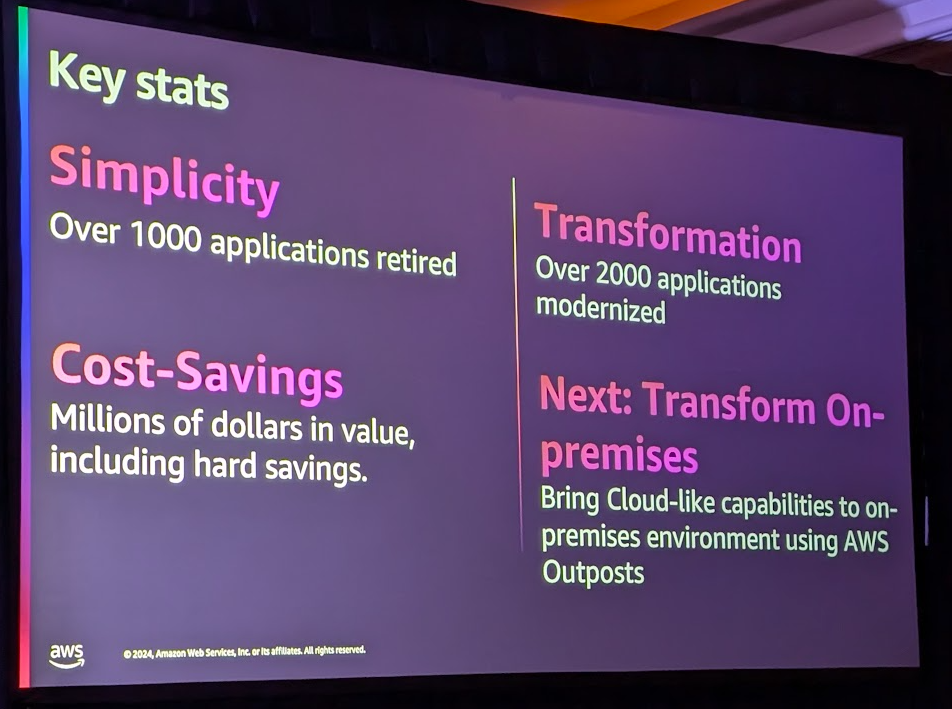

- Focus on delivering business value. The company has modernized more than 2,000 applications with cloud native services. Merck retired more than 1,000 applications.

Going forward, Feist said the company is using AWS Outposts to bring cloud operating models to its on-premises environments. Feist said Merck is adopting a simpler management interface where AWS is responsible for maintenance.

In a nutshell, Merck is looking to make its on-prem infrastructure run like the public cloud setup it has with AWS.

Capital One on optimization and tracking cloud costs

Ed Peters, Vice President, Distinguished Engineer at Capital One, leads an ongoing transformation to create a bank that can use data and insight to disrupt the industry. Capital One has been an AWS customer since 2016.

Capital One is focused on optimizing its AWS infrastructure for cost. "We have a robust FinOps practice," said Peters. "We take the billing data and marry it up with the telemetry tracking and resource tagging information."

Peters said Capital One has saved millions of dollars with optimization. He said:

"We tag everything in our AWS cloud, down to billing units, individual teams, applications. I have a dashboard I can have access to that. I can tell you the monthly spend on any given application. We can drive very, very useful insight into the usage of the cloud, and we can focus our optimization on where it needs to be."
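The approach Peters describes, joining billing data with resource tags to get spend per application, can be sketched roughly as follows. This is a minimal illustration and not Capital One's implementation: the record layout, the resource IDs, the tag keys, the dollar figures and the `monthly_spend_by_tag` helper are all hypothetical.

```python
from collections import defaultdict

# Hypothetical billing line items: (resource_id, month, cost_usd).
billing = [
    ("i-001", "2024-11", 120.0),
    ("i-002", "2024-11", 80.0),
    ("db-001", "2024-11", 200.0),
    ("i-003", "2024-11", 50.0),  # has no tags in the inventory below
]

# Hypothetical tag inventory keyed by resource ID.
tags = {
    "i-001": {"application": "payments", "team": "core"},
    "i-002": {"application": "payments", "team": "core"},
    "db-001": {"application": "ledger", "team": "finance"},
}


def monthly_spend_by_tag(billing, tags, tag_key):
    """Sum cost per (month, tag value); untagged resources are grouped together."""
    totals = defaultdict(float)
    for resource_id, month, cost in billing:
        value = tags.get(resource_id, {}).get(tag_key, "untagged")
        totals[(month, value)] += cost
    return dict(totals)


spend = monthly_spend_by_tag(billing, tags, "application")
for (month, application), cost in sorted(spend.items()):
    print(f"{month} {application}: ${cost:.2f}")
```

Grouping untagged resources into their own bucket makes gaps in tagging coverage visible, which matters when the goal is to attribute every dollar to a team or application.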

The company is also using Graviton to save money.

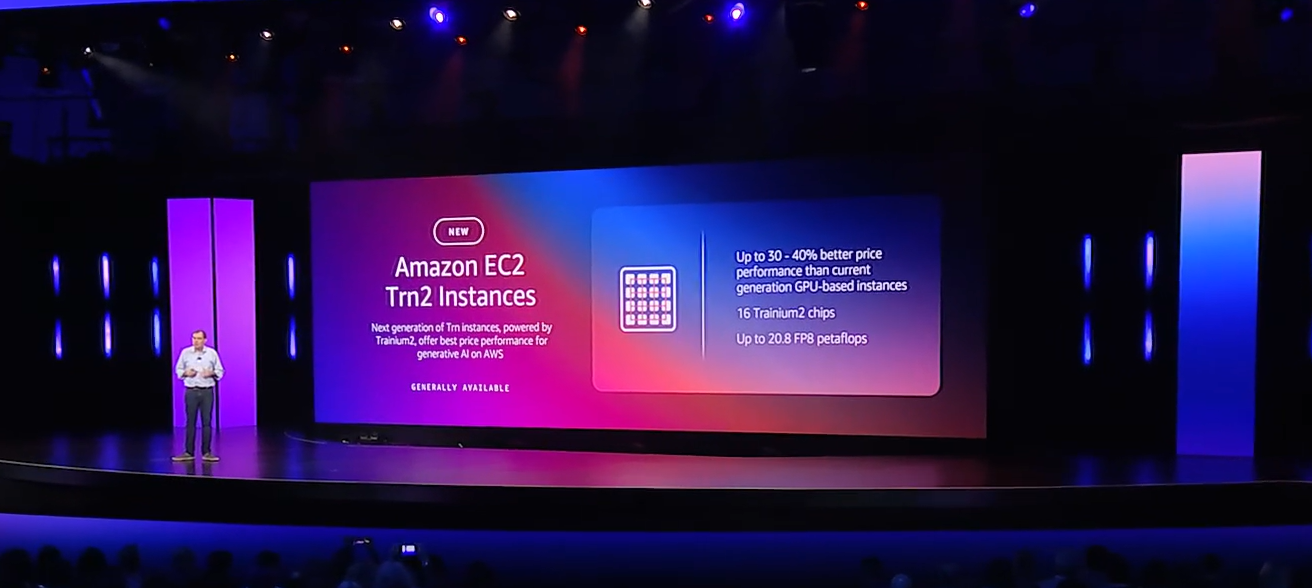

Going forward, Capital One is focused on generative AI workloads and building out an infrastructure that can be optimized and automated. Peters said Capital One is in a working group with AMD and Nvidia to optimize GPU workloads.

"We will continue to push forward in generative AI and its application in financial services," said Peters.

Capital One is also focused on transferring more of its operations including financial ledgers and business operations to the cloud.

Sprinklr on benchmarking GPU costs, smaller models

Jamal Mazhar, Vice President of Engineering at Sprinklr, said the company invested in AI early and has been focused on scaling its data ingestion and processing in a cost-efficient way.

"We have thousands of servers and petabytes of data," he said.

As a result, Mazhar said Sprinklr has been experimenting with instances that offer a good cost ratio for compute and storage. Mazhar said his company has optimized on Graviton and is scaling its Elasticsearch workloads.

Mazhar said he has also been focused on smaller large language models and cutting GPU overhead. He said:

"A lot of times people use GPUs for AI workloads. But what we found out is that several of our inference models, which are very small in size, there's an overhead of using GPUs. For smaller models, you can do quite well with compute intensive instances."

Mazhar said Sprinklr has been benchmarking its inference models and other AI workloads. He added that the company has seen a 20% to 30% cost reduction. He said:

"When you try to use a more expensive chip, you feel like you're going to get better performance. Just benchmarking the workload makes you realize that the GPU is not necessarily overkill. You're not using it properly."
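The kind of comparison Mazhar describes comes down to normalizing instance price by measured throughput. Here is a minimal sketch, assuming you have already benchmarked a model on each candidate instance. The instance names, hourly prices and throughput numbers are made up for illustration and are not Sprinklr's data.

```python
def cost_per_million(hourly_price_usd, throughput_per_second):
    """Cost in USD to serve one million inferences at a sustained throughput."""
    if throughput_per_second <= 0:
        raise ValueError("throughput must be positive")
    inferences_per_hour = throughput_per_second * 3600
    return hourly_price_usd / inferences_per_hour * 1_000_000


# Hypothetical benchmark results for one small inference model.
candidates = {
    "gpu_instance": {"hourly_price_usd": 1.20, "throughput_per_second": 400.0},
    "cpu_instance": {"hourly_price_usd": 0.36, "throughput_per_second": 160.0},
}

costs = {name: cost_per_million(**spec) for name, spec in candidates.items()}
cheapest = min(costs, key=costs.get)

for name, cost in sorted(costs.items(), key=lambda item: item[1]):
    print(f"{name}: ${cost:.3f} per million inferences")
print(f"cheapest: {cheapest}")
```

In this made-up example the GPU instance is faster in absolute terms, yet the CPU instance is cheaper per inference because the small model does not use enough of the GPU to justify its price.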

Goldman Sachs: Modernizing mainframes

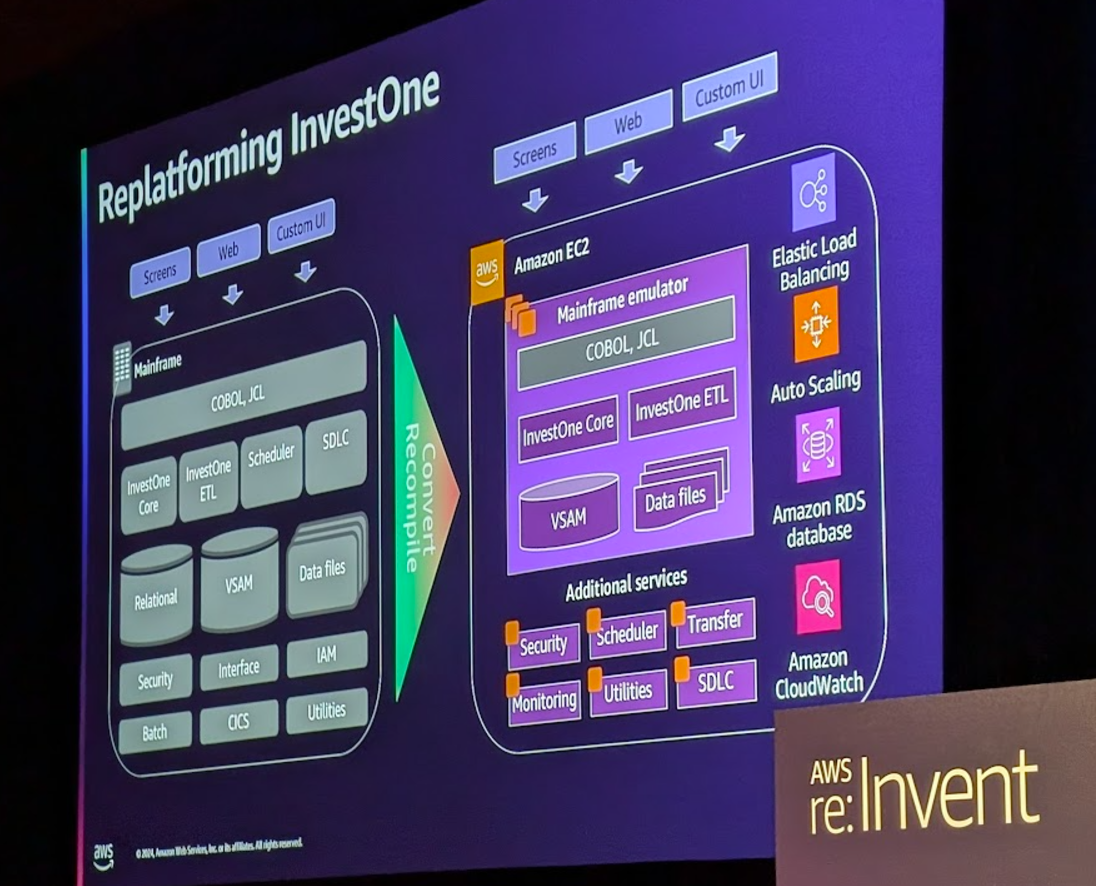

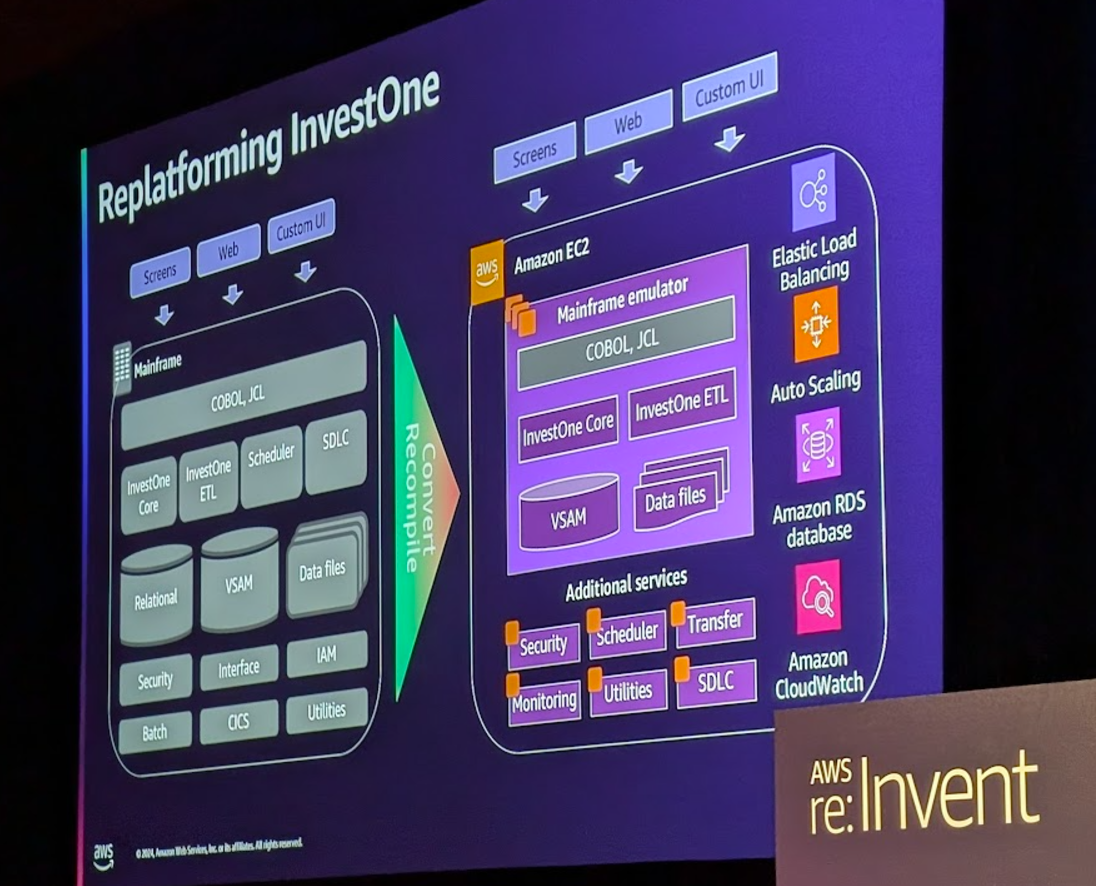

Victor Balta, Managing Director at Goldman Sachs, said the investment firm was focused on moving its mainframe software, which was licensed from FIS decades ago and heavily customized. FIS has said it will no longer support the mainframe that underpins Goldman Sachs' InvestOne platform.

InvestOne is Goldman Sachs' investment book of record and sits in Goldman Sachs Asset Management, which oversees $2 trillion in assets. The mainframe architecture was costing more than $6 million a year to support and had limited scaling ability and integration options.

Balta said Goldman Sachs created an emulator that would allow its COBOL-based system to run on AWS. Goldman Sachs also decoupled components such as data streaming, real-time integrations and batch processing to reduce costs.

"Currently we have a team of more than 20 global engineers supporting the platform," said Balta. "It's very expensive to run on mainframe with the complexity and integration. You don't have the same number of APIs or data connects to integrate with the mainframe. We're very limited on what we do. And sourcing high skilled COBOL engineers with that financial background is difficult."

Simply put, Goldman Sachs had 30 years of custom COBOL code. Rewriting it quickly wasn't possible, so the firm decided to lift and shift with an emulator and go from there.

Going forward, Goldman Sachs Technology Fellow Yitz Loriner said the company will begin to reinvent its system so it can scale and create a new software development lifecycle.

"The emulator is just the first step because we wanted to reduce the blast radius of changing the infrastructure without changing the existing interface," said Balta. "It's a pragmatic approach."
