Editor in Chief of Constellation Insights

Constellation Research

About Larry Dignan:

Dignan was most recently Celonis Media’s Editor-in-Chief, where he sat at the intersection of media and marketing. He is the former Editor-in-Chief of ZDNet and has covered the technology industry and transformation trends for more than two decades, publishing articles in CNET, Knowledge@Wharton, Wall Street Week, Interactive Week, The New York Times, and Financial Planning.

He is also an Adjunct Professor at Temple University and a member of the Advisory Board for the Fox School of Business's Institute of Business and Information Technology.

Constellation Insights does the following:

Cover the buy side and sell side of enterprise tech with news, analysis, profiles, interviews, and event coverage of vendors, as well as Constellation Research's community and…


The gap between generative AI's "build it and they will come" folks and the enterprises looking for actual business value may be widening. The next year will be interesting for generative AI trickledown economics.

First, let's touch on the boom market.

- Nvidia will report third quarter earnings Nov. 20, and rest assured it'll be the most important report of the year (again). Funny how we say that about Nvidia every quarter. Demand will remain off the charts, and the hyperscalers and countries are begging to spend billions on Nvidia's AI accelerators. Analysts are looking for non-GAAP third quarter earnings of 74 cents a share on revenue of $32.94 billion, up from $18.12 billion a year ago.

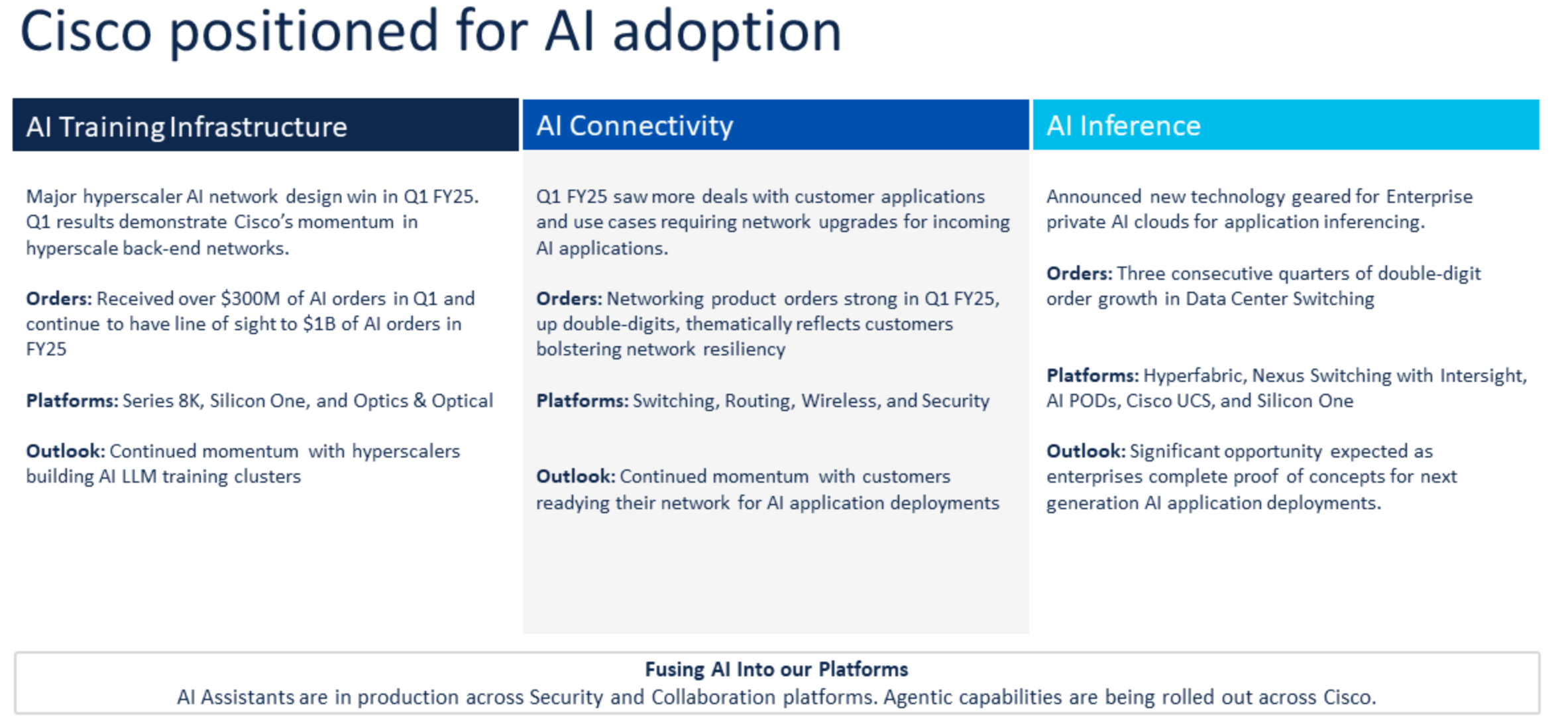

- CoreWeave, the AI hyperscale cloud provider, just closed a minority investment round of $650 million led by Jane Street, Magnetar, Fidelity and Macquarie Capital, with additional participation from Cisco Investments, Pure Storage, BlackRock, Coatue, Neuberger Berman and others. CoreWeave is valued at about $23 billion, according to Reuters.

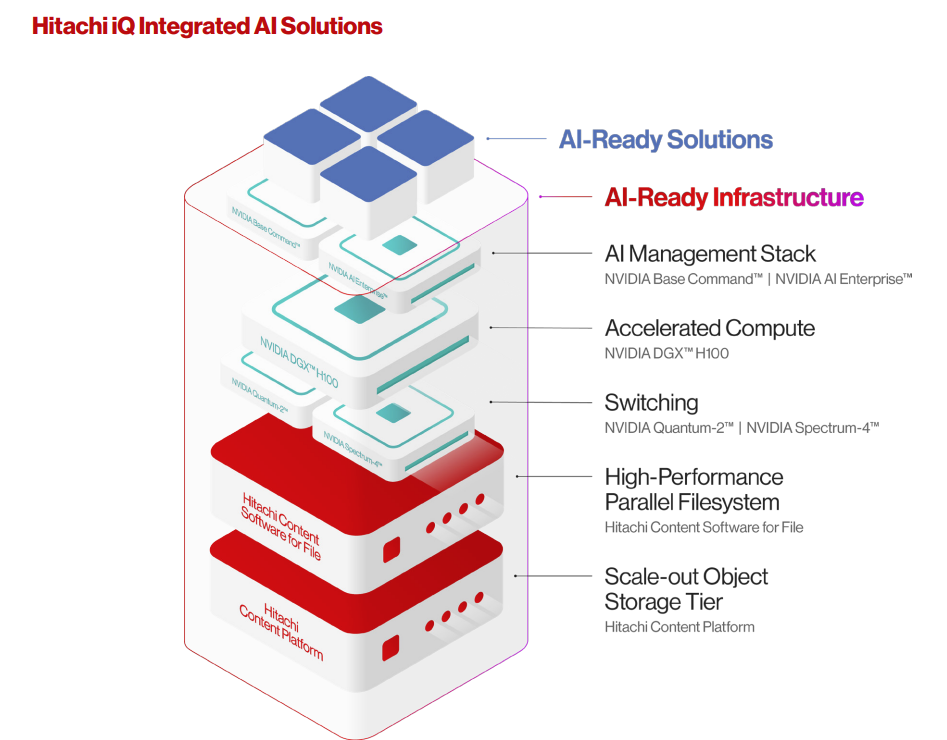

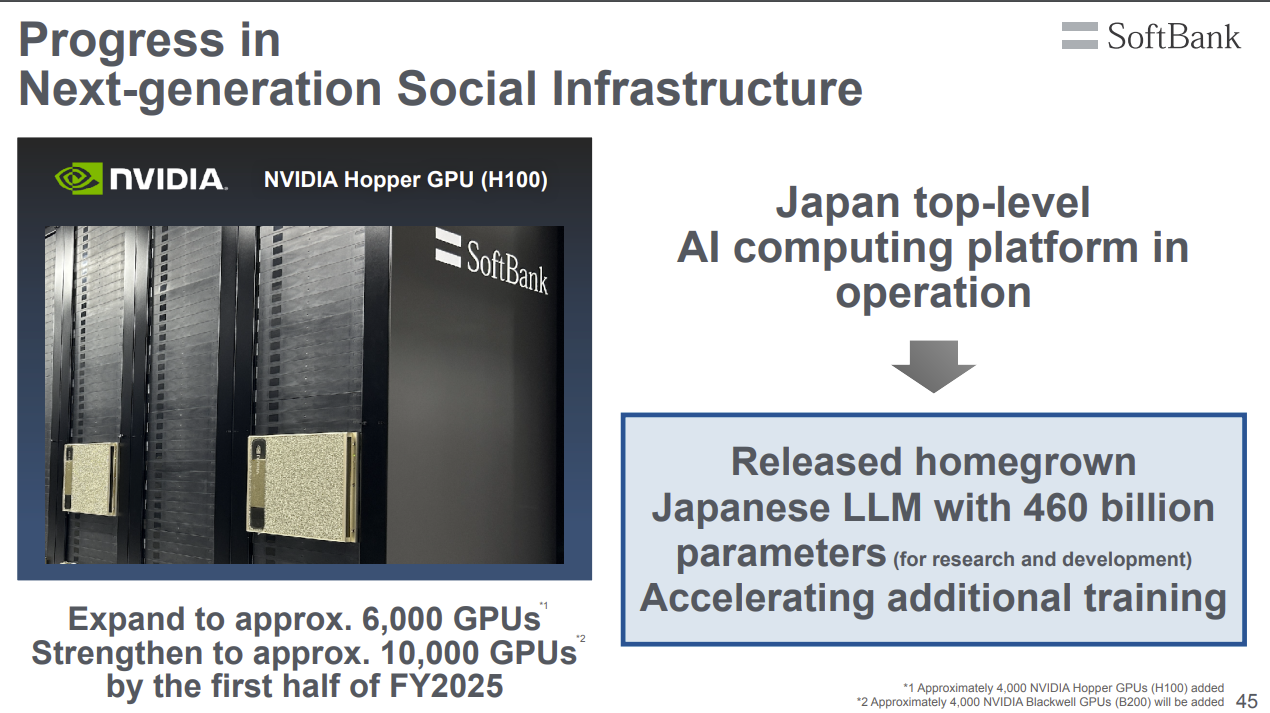

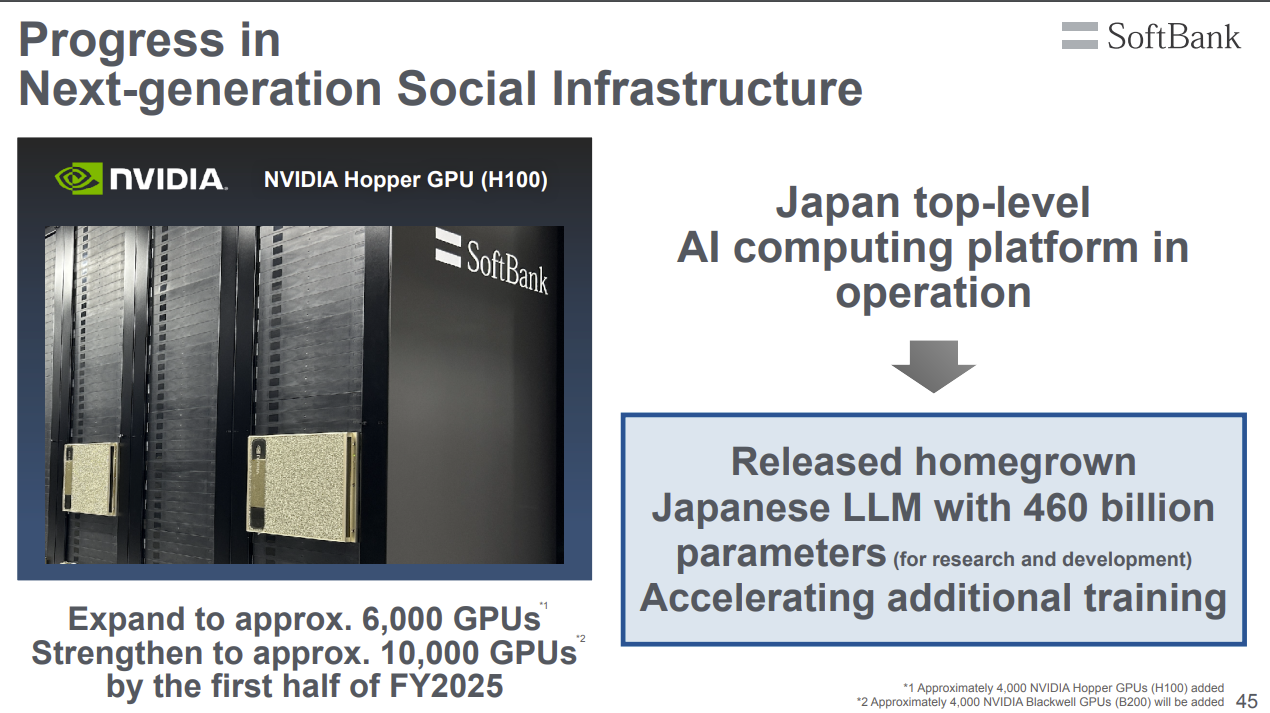

- SoftBank Corp. will be among the first to build an AI supercomputer using Nvidia's Blackwell platform. SoftBank will receive the first Nvidia DGX B200 systems and plans to build an Nvidia DGX SuperPOD supercomputer. SoftBank floated debt to be first in line.

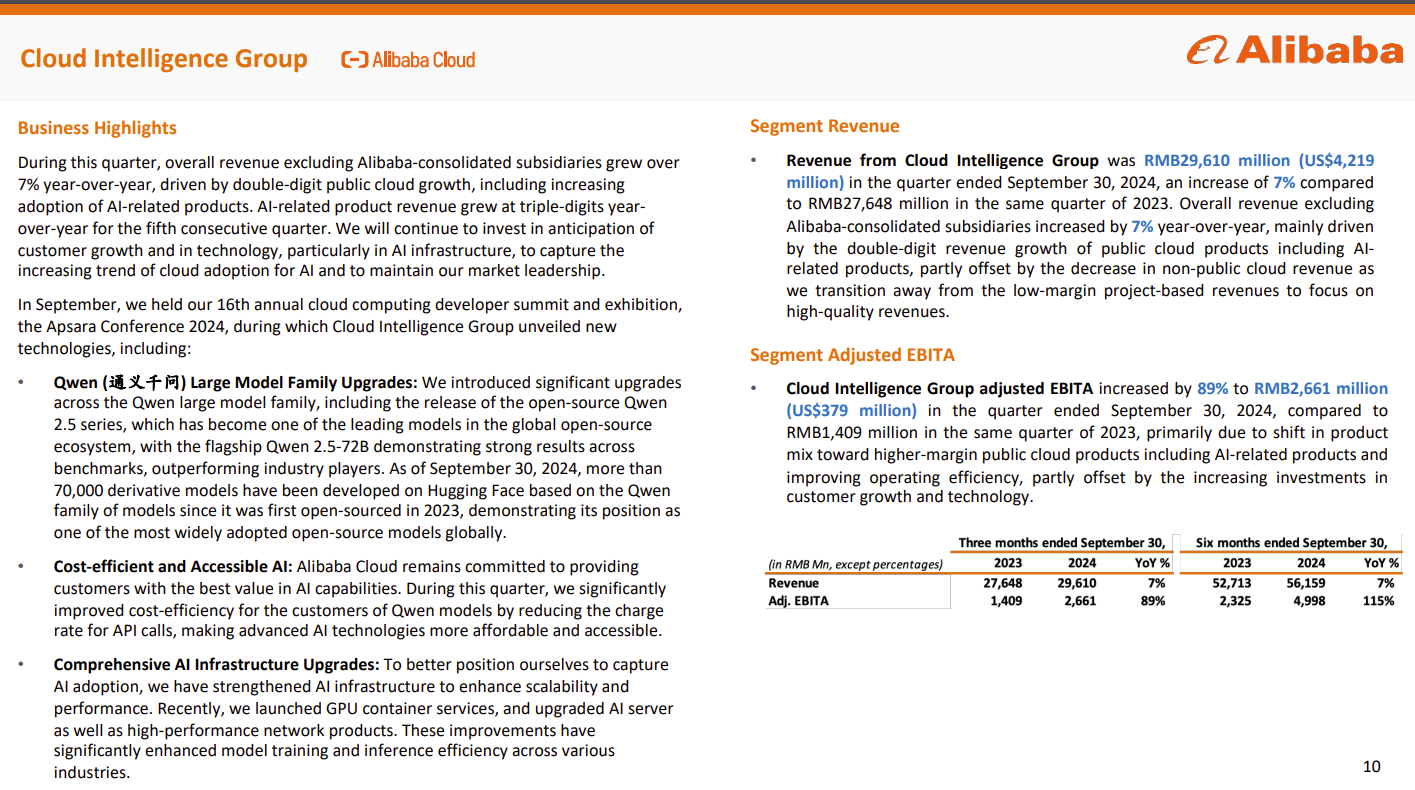

And on it goes. You could round up the AI building boom weekly. All of the hyperscalers are spending big on AI factories. Microsoft, Amazon, Meta and Alphabet all said capital spending on AI will continue to surge.

Microsoft CFO Amy Hood said:

"Roughly half of our cloud and AI-related spend continues to be for long-lived assets that will support monetization over the next 15 years and beyond. The remaining cloud and AI spend is primarily for servers, both CPUs and GPUs, to serve customers based on demand signals."

I couldn't help but think of what Oracle CTO Larry Ellison said on the company's most recent earnings call: "I went out to dinner with Jensen (Huang) and Elon (Musk) at Nobu in Palo Alto. I would describe the dinner as begging Jensen for GPUs. Please take our money. In fact, take more of it. You're not taking enough of it. It went well. The demand for GPUs and the desire to be first is a big deal."

We know the drill. The tech industry is betting that the real risk is not plowing billions (if not trillions) into the AI buildout. I'd recommend reading a contrarian argument about irrational AI data center exuberance, but your guess is as good as mine on the timing.

The disconnect between the AI buildout side and the business value side may be widening. 2025 is going to be the year of business value, following 2024, which was about moving genAI into production after the proofs of concept of 2023. Yes, enterprises are going to need real genAI budgets, change management and returns in 2025.

Bottom line: The trickledown economics of generative AI at the beginning of 2024 hasn’t exactly trickled down beyond the infrastructure layer.

Can vendors monetize genAI value?

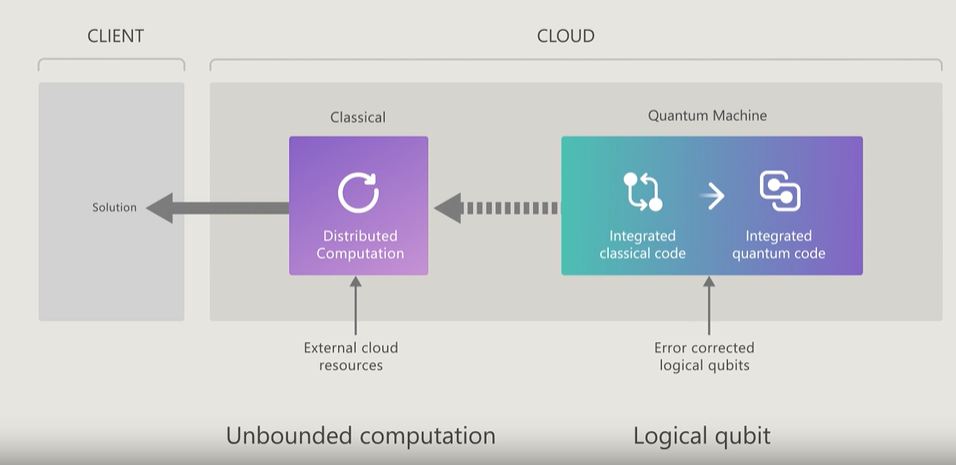

For real genAI value to occur, the application layer will need to be built out. LLM players, think Anthropic and OpenAI, are going to need apps to go with their models. Vendor monetization models are a bit fuzzy now. ServiceNow is clearly benefiting, but other software companies are seeing mixed results. Here's a sampling of recent comments, and there will be a bunch more as SaaS earnings season kicks off soon.

Monday.com co-CEO Eran Zinman:

"Total AI actions grew more than 250% in Q3 compared to Q2. And the AI blocks grew 150% from Q2. So overall, we see more and more customers adopt those blocks, people incorporate them into their automation. They create a lot of processes within the product that involves AI within that. And over time, we are planning to roll out the monetization tied with AI, where we're going to generate clear and efficient value for our customers."

Zinman was then asked whether 2025 will be the year for AI monetization. "We don't have a specific date, but it might be in 2025," said Zinman. "But we can't commit to that."

Translation: Monday.com needs to show value to get the money.

Microsoft's Hood danced around monetization but did say that analysts need to think about the long game.

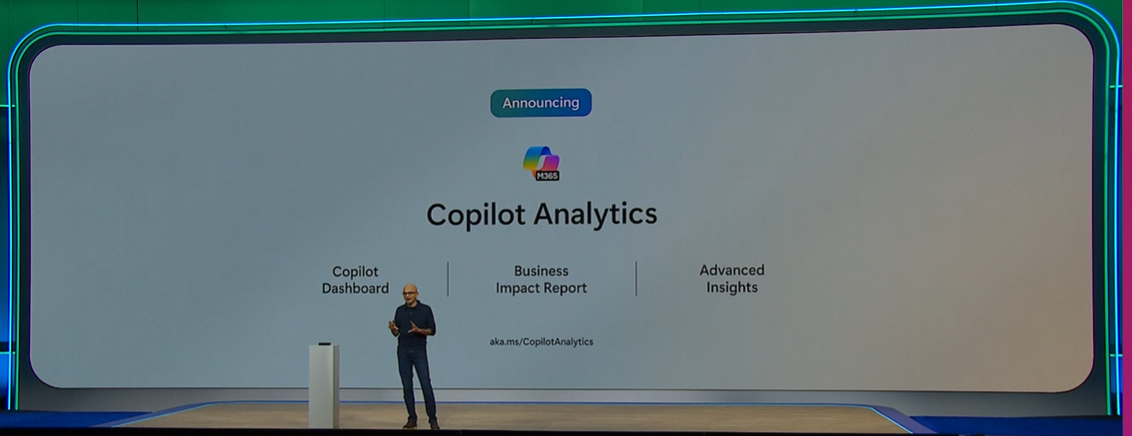

"We remain focused on strategically investing in the long-term opportunities that we believe drive shareholder value. Monetization from these investments continues to grow, and we're excited that only 2.5 years in, our AI business is on track to surpass $10 billion of annual revenue run rate in Q2. This will be the fastest business in our history to reach this milestone."

Infosys CEO Salil Parekh said:

"Any of the large deals that we’re looking at, there’s a generative AI component to it. Now, is it driving the large deals? Not in itself, but it’s very much a part of that large deal."

SAP CEO Christian Klein said about 30% of SAP's cloud orders included AI use cases.

Simply put, if you follow the money, you trip once you get past the infrastructure layer to the applications. Enterprise software vendors haven’t figured out what customers will pay for.

The beginning of genAI user fatigue?

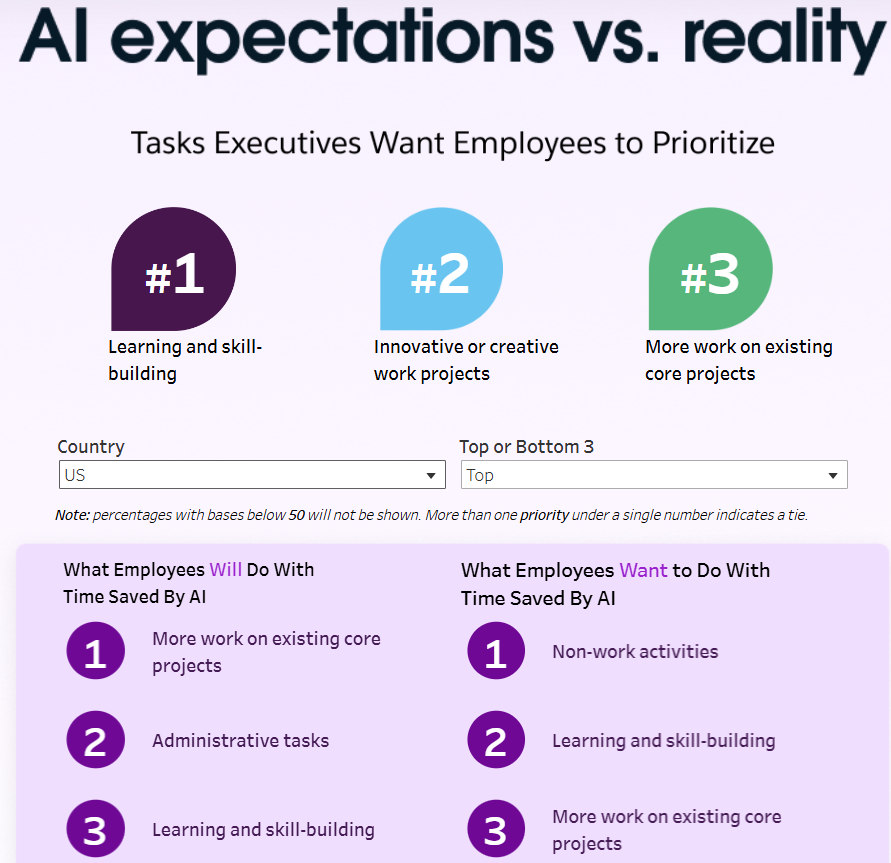

What about the users? Well, that genAI love affair has become tiresome too.

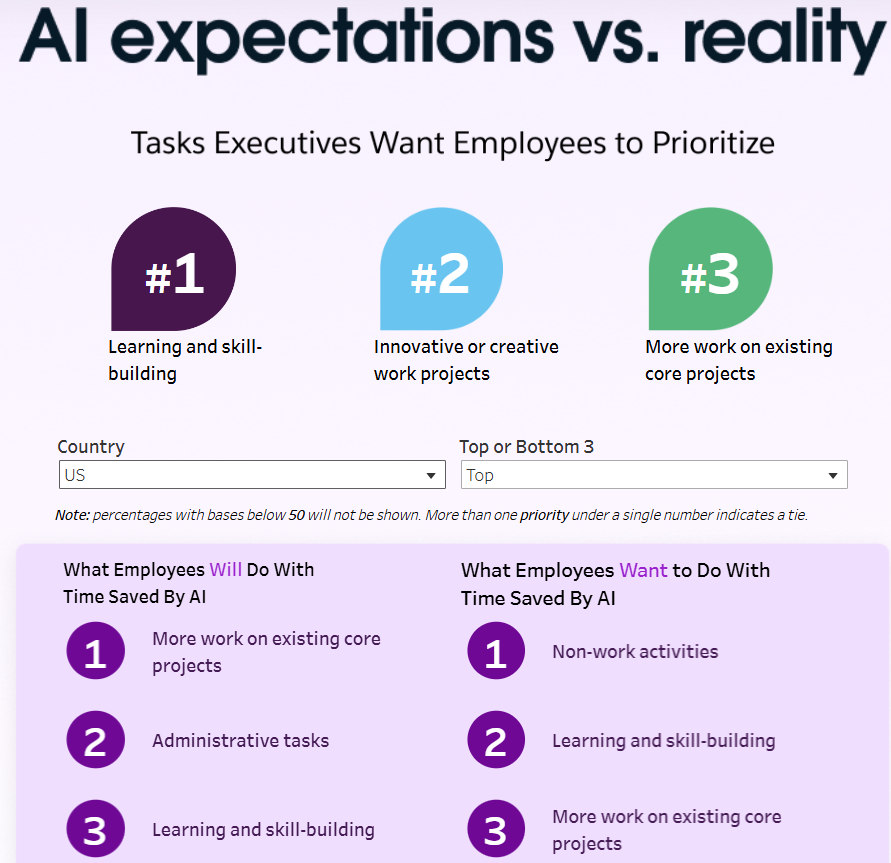

A Slack survey found that generative AI adoption among desk workers went from 20% in September 2023 to 32% in March 2024 and then hit a wall. Today, 33% of desk workers are using generative AI, according to Slack. The survey also found that excitement around AI is cooling, dropping from 47% to 41% between March and August. Slack cited uncertainty, hype and a lack of AI training as reasons for the decrease. Another possible reason I'll throw in: Copilot sprawl is adding to costs for the enterprise and distraction for the worker.

Employee genAI adoption is a bit of a chicken-or-egg problem. If enterprises balk at spending on various copilots, they're going to limit access. Or there's just not enough value for employees yet. I can't tell you how many times I've tuned out Microsoft Copilot, Google Gemini and other overly helpful AI. Dear LLM, if you're useful, I'll reach out. Until then, don't annoy me.

It’s on you, CxOs

These moving parts (genAI to agentic AI, FOMO, data strategies, vendor promises and change management) are going to be challenging for CxOs to navigate. I mined my recorded conversations in 2024 to surface common AI themes from CxOs. Here’s a look:

GenAI is a tool instead of a magic bullet. CxOs are looking to integrate AI into processes and workflows and use the technology as an excuse to revamp them. Agentic AI is promising, but full automation will require orchestration and process groundwork.

Change management is everything. Change management has turned up in multiple conversations throughout 2024. Implementing AI is really about transforming how people work and interact with the technology. GenAI will also create organizational challenges, and CxOs need strong change management approaches to address employee fears about job displacement.

Governance and control matter. Governance is becoming a key theme as genAI projects move to production.

Data strategy. Enterprises are still working on their ground games when it comes to data. That work will continue for many in 2025.

Costs. Enterprises will begin to focus more on the cost of compute, open source models, small language models and the use cases that drive the most value for the money. There will be some hard conversations between enterprises and software vendors.

Training. Everyone is talking about upskilling and training, but it is unclear whether this education is happening.

Iterative implementation. The mantra with genAI has been to "just get started," but that approach has already led to AI debt in the form of copilot sprawl, difficulty changing models and user dissatisfaction. Will 2025 be better for the slower movers that spent 2024 refining their data strategies?

Employee-AI collaboration. CxOs are trying to solve for human-in-the-loop approaches so employees feel more empowered working with AI.

This genAI cognitive dissonance is worth watching in 2025. Either the value reaches vendors and enterprises, or we're going to have a massive AI buildout hangover.
