Editor in Chief of Constellation Insights

Constellation Research

About Larry Dignan:

Dignan was most recently Celonis Media’s Editor-in-Chief, where he sat at the intersection of media and marketing. He is the former Editor-in-Chief of ZDNet and has covered the technology industry and transformation trends for more than two decades, publishing articles in CNET, Knowledge@Wharton, Wall Street Week, Interactive Week, The New York Times, and Financial Planning.

He is also an Adjunct Professor at Temple University and a member of the Advisory Board for The Fox Business School's Institute of Business and Information Technology.

Constellation Insights does the following:

Cover the buy side and sell side of enterprise tech with news, analysis, profiles, interviews, and event coverage of vendors, as well as Constellation Research's community and…

AWS CEO Matt Garman at re:Invent 2024 elaborated on the company's strategy to serve up foundational building blocks, Intel's future, model choices, sustainability and why storylines about Trainium competing with Nvidia are misplaced.

Most of Garman’s comments were follow-ups on the happenings at re:Invent. Here’s the news stack:

- AWS unveils next-gen Amazon SageMaker in bid to unify data, analytics, AI

- AWS aims to make Amazon Bedrock your agentic AI point guard

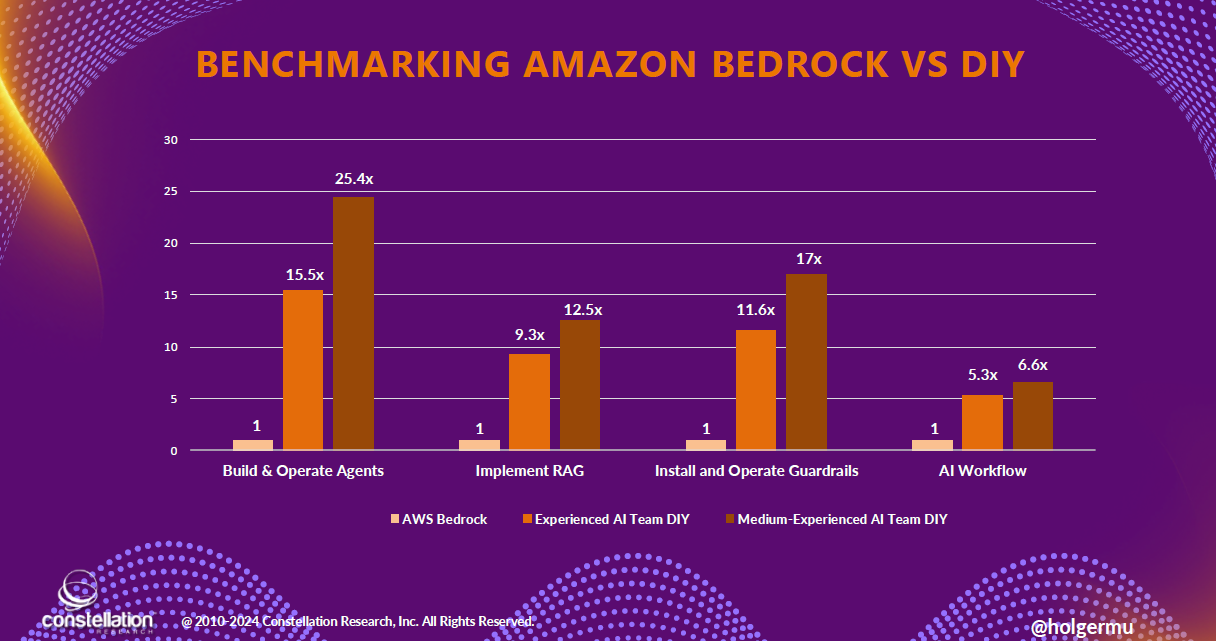

- Amazon Bedrock vs. DIY approaches benchmarked (full report)

- AWS adds capacity sharing, training plans to SageMaker HyperPod, marketplace for Bedrock

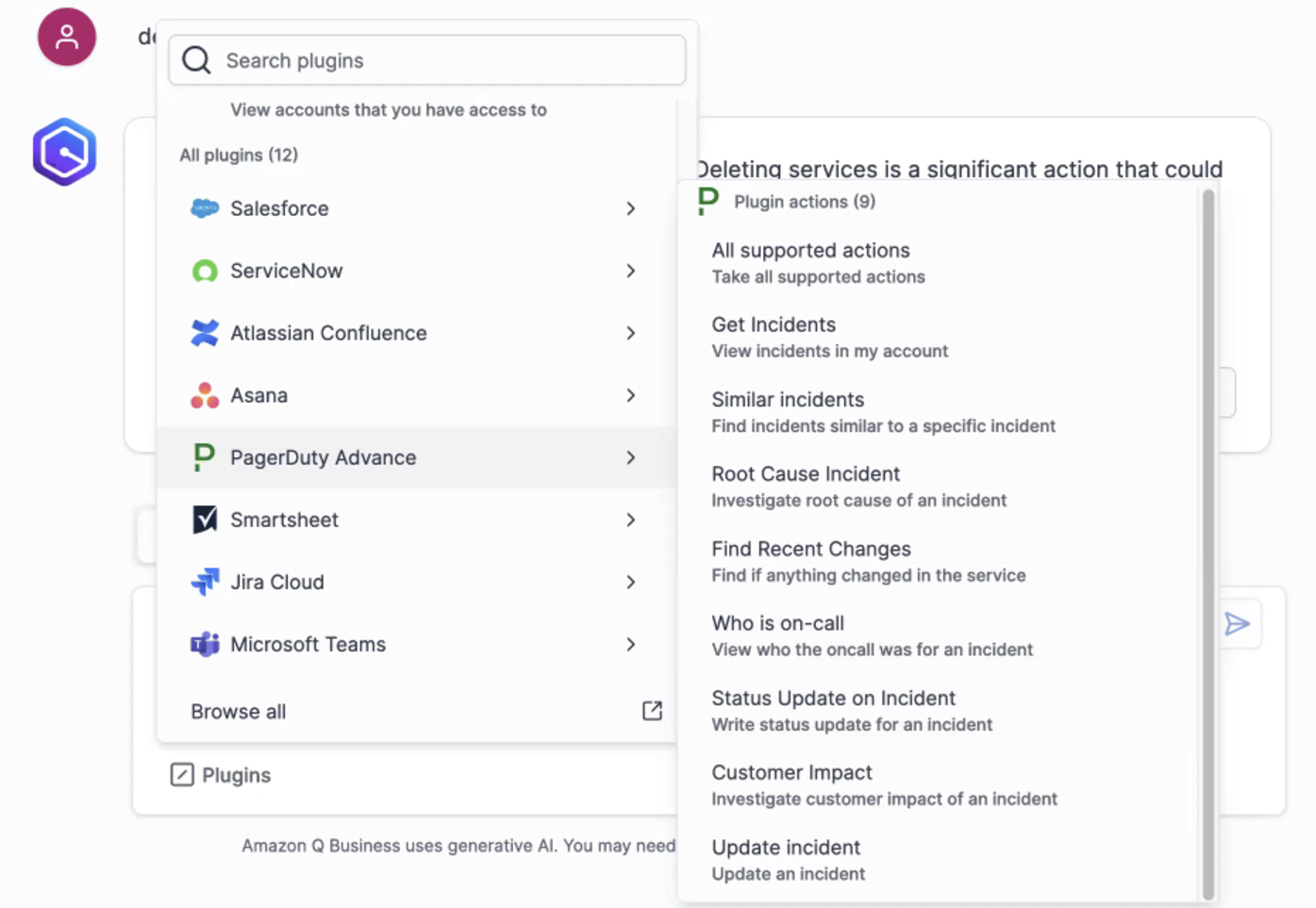

- Amazon Q Business gets a story at AWS re:Invent 2024

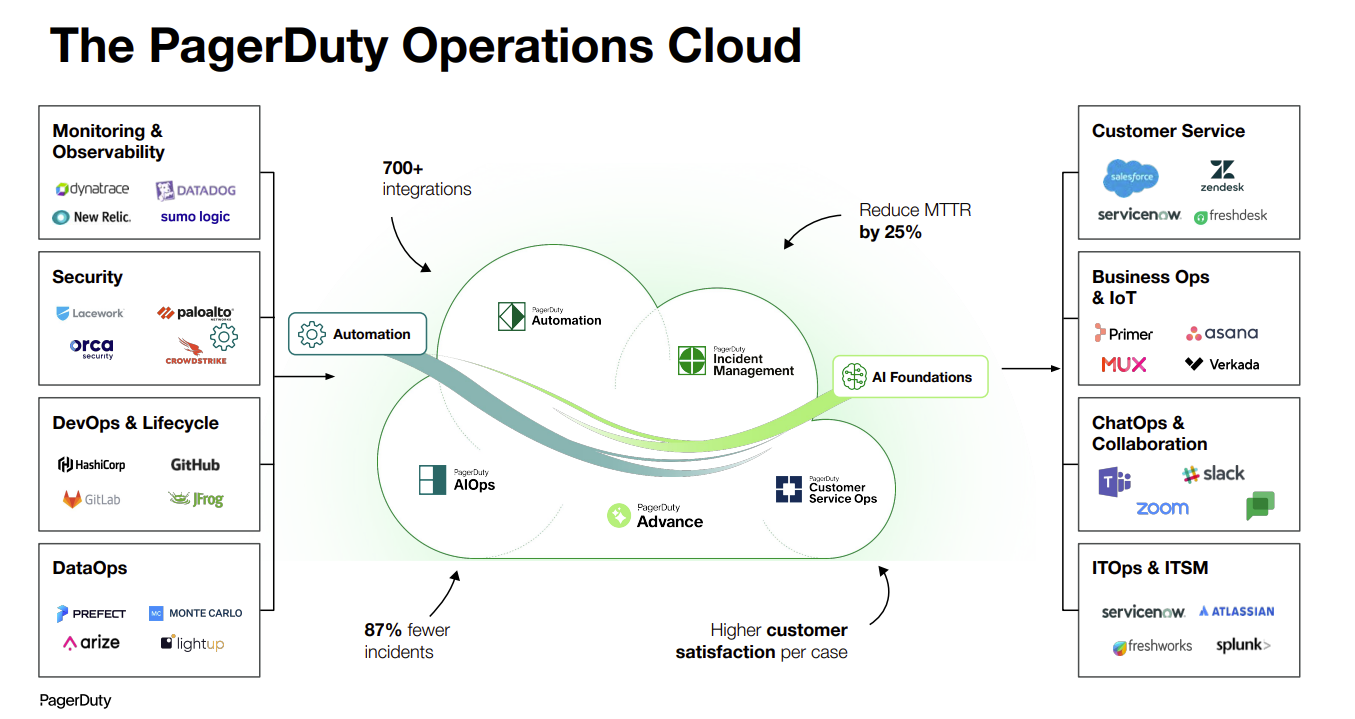

- PagerDuty integrates with Amazon Bedrock, Q Business: Will it boost large enterprise traction?

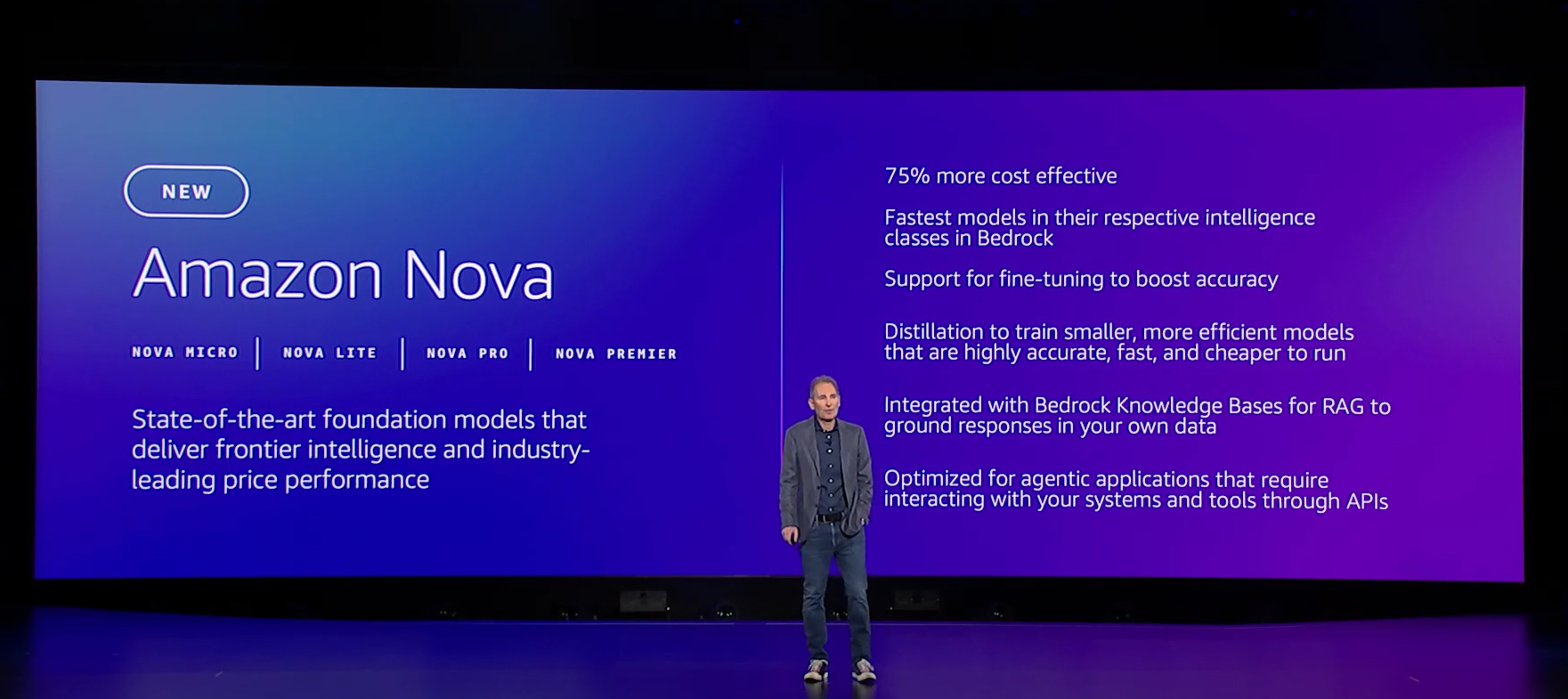

- AWS launches Amazon Nova foundation models in commoditization play

- AWS revamps S3, databases with eye on AI, analytics workloads

- AWS scales up Trainium2 with UltraServer, touts Apple, Anthropic as customers

- AWS outlines new data center, server, cooling designs for AI workloads

- AWS re:Invent 2024: Four AWS customer vignettes with Merck, Capital One, Sprinklr, Goldman Sachs

- Oracle Database@AWS hits limited preview

In a Q&A with analysts, Garman covered the following points:

Customers leveraging foundation models. Garman said customers will build, buy and fine-tune a wide selection of models. He said:

"Customers are going to use a wide range of models, and they'll fine tune some of the models in Bedrock, and they will build their own. The answer is evolving. You'll find it easier to build a model from scratch with your own proprietary set of data. There’s going to be a lot of customers who are continuing to do that on SageMaker. We're seeing no amount of slowing down of customers doing that."

Nova models. AWS launched its new Nova models to replace Titan. "Nova will be replacing the Titan model as it's such a leap forward from where we were and wanted a whole new brand around them."

Intel. Garman said that manufacturing in the US is critical and he's hopeful that the company can get to being a leading foundry. Garman said:

"They're incredibly important to the country, and so I think I'm hopeful that they get to a good place. Not sure that I would like to fund them, necessarily, but I think it's super important. I'm hopeful that Intel can get back to being a leading foundry.

"I think having all of the leading edge foundries in one location in Taiwan is probably not the best for just the global supply chain."

Power and sustainability. Garman said AI will need a lot more electricity, but hyperscalers will have to become much more efficient. Garman said:

"The compute is getting much more efficient. Every cycle of compute today is getting more and more efficient. I would love for the computers to get as efficient as the human brain. We haven't invented that technology yet. In the meantime, we're planning for the electricity needs for the next decade that we project. It's not just us. There's much more demand for power.

"It's important for us to keep pushing towards carbon zero power. And so, we continue to make really large investments in renewable energy. We're making investments in nuclear. It's just a part of the portfolio of power that we're going to need."

Garman noted that Amazon has commissioned multiple renewable energy projects.

The balance between abstraction and primitives. Garman said AWS will aim to improve compute, storage and database as well as the abstraction layer. Garman said:

"I don't think that it's either or. I think we're absolutely focused on the primitives and improving things like storage and database. We think there's tons of innovation and custom silicon and compute and networking. I think inference is a core building block. We're investing a lot in services that are more abstract and help customers be more efficient in their jobs. We'll do both of those things. And I think there's enough room for innovation across all those different levels."

Cybersecurity approach. Garman was asked why AWS doesn't try to monetize cybersecurity. He said AWS spends billions of dollars on cybersecurity that's hopefully invisible to most customers. "There's a lot of great partners out there and they do a fantastic job," said Garman. "We're happy to partner with them."

Product approach. Garman was asked about AWS' approach of having separate product teams create different building blocks. He said that approach has driven innovation because teams aren't dependent on each other. "If you have 50 teams that all have to be in lockstep to deliver something you're going to move slow," said Garman. "Our strategy has been to let those teams invent and move fast. I appreciate that approach introduces some complexity for customers and we're moving to cover that."

He noted that SageMaker Studio is an example of an effort to bring those building blocks together seamlessly. "SageMaker is an elegant example of making it easier to operate with a whole set of tools on common data sets," said Garman. "We can do that because we have all these core components behind the scenes. We will keep innovating."

Simply put, there won't be fewer services from AWS, like, ever.

GenAI model choice. Garman said choice is important to AWS, but the company will have "to keep getting better about helping customers choose the right thing."

"We'll have to figure out new ways to help and thinking about model routing, thinking about how you do AB testing and which model is giving customers better outcomes," he said.

Garman was asked about Amazon's Anthropic investment and he noted that the companies are close partners that learn a lot from each other.

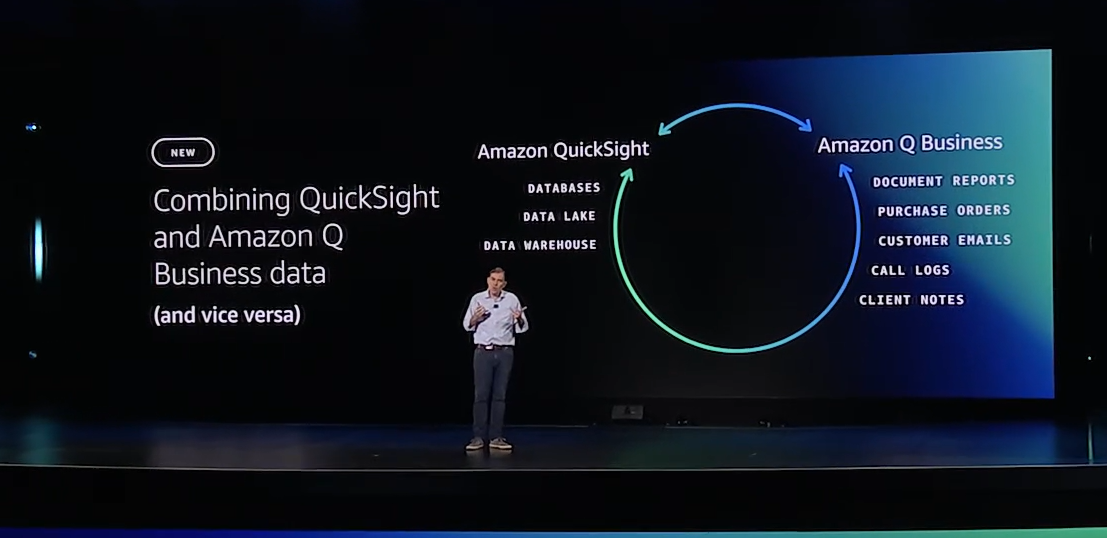

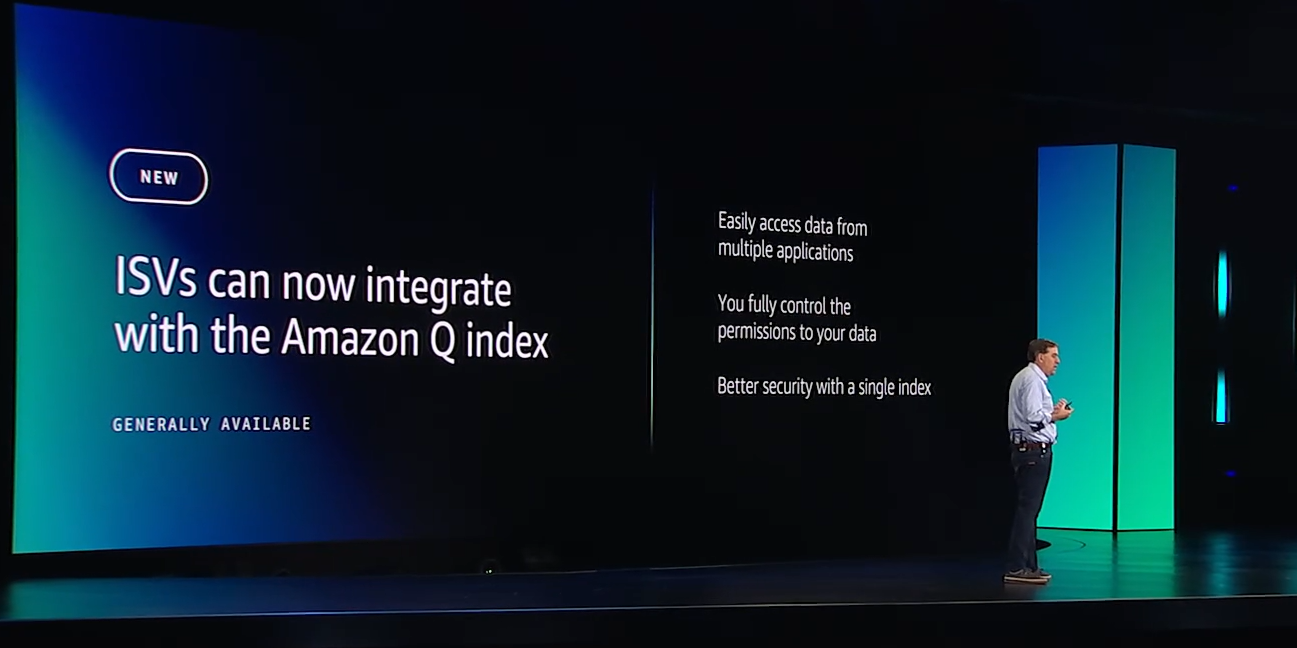

Amazon Q. Garman said that Amazon Q can have a wide reach from a more neutral position. "The real power with Q Business is this Q Index that can index data across your different SaaS providers. AWS has recognition as a trusted source there and bringing data together," he said.

Garman said that Amazon Q can democratize data and analytics for business roles as Q Apps serve as an abstraction layer.

The false narrative of Trainium vs. Nvidia. Garman was asked about competition with Nvidia and he noted that there wasn't any story there. He said:

"It's about more options for customers. If we can lower costs and more inference is done it's not going to be at Nvidia's expense. Nvidia is an incredibly important partner. I think the press wants to make it us vs. them but it's just not true."

Garman also noted that Graviton didn't take workloads from Intel or AMD.

On-prem AI workloads. Garman said the scale of genAI requires the cloud because the systems become complicated so quickly. He said:

"I think the scale required means AI is a cloud workload. You can take these smaller models and run them on premise today, and I do think that there's maybe some interesting things there. If you think about distilling smaller models or running inference at the edge, I do think that that is an interesting idea. But kind of training big models and things like that is a cloud centric thing. It's just not practical."
