Digital Business Distributed Business and Technology Models Part Two; The Dynamic Infrastructure

Vice President and Principal Analyst, Constellation Research

Andy Mulholland is Vice President and Principal Analyst focusing on cloud business models. Formerly the Global Chief Technology Officer for the Capgemini Group from 2001 to 2011, Mulholland successfully led the organization through a period of mass disruption. Mulholland brings this experience to Constellation’s clients seeking to understand how Digital Business models will be built and deployed in conjunction with existing IT systems.

Previous experience:

Mulholland co-authored four major books that chronicled the change and its impact on Enterprises, starting in 2006 with the well-recognised book ‘Mashup Corporations’ with Chris Thomas of Intel. This was followed in…...

The Digital Business model, with its dynamic, adaptive capability to react to events with intelligently orchestrated responses formed from Services, requires a very different enabling infrastructure to that of current Enterprise IT systems. As the Enterprise itself decentralizes into fast-moving, agile operating entities working under an OpEx management model (costs allocated to actual use), so must the Infrastructure support it with a similar functional structure.

The Technology that creates and supports Digital Business does not resemble that deployed in support of Enterprise Client-Server IT systems. Neither is it a rehash of the standard Internet Web architecture. Instead, a combination of Cloud Technology, both at the center and increasingly at the edge, running Apps in the form of Distributed Apps, linked by massive-scale IoT interactions and, increasingly, various forms of AI-driven intelligent reactions, represents a wholly different proposition.

In existing Enterprise IT the arrangement and integration of the technology complexities is defined by Enterprise Architecture; the term has deliberately not been used above, to highlight the difference. In contrast with the enclosed, defined Enterprise IT environment, where it is necessary to determine the relationships between a finite number of technology elements, a true Digital Enterprise operates dynamically between an infinite number of technology elements, internally and externally.

Enterprise IT, for the most part, supports Client-Server applications, as evidenced in ERP, and is focused on ensuring that the outcomes of all transactions maintain the common State of all data. To do this, the dependencies of all technology elements have to be identified in advance and integrated in fixed, close-coupled relationships. It is important to remember that Enterprise Architecture was developed to deploy the Enterprise Business model defined by Business Process Re-engineering (BPR).

It is vital to recognize that the Enterprise Business model and the Technology model are, or should be, two sides of the same coin, working coherently together to enable the Enterprise to compete in its chosen market and manner. The introduction of a Digital Business model introduces a completely different set of technology requirements and, importantly, reverses accepted IT Architecture by requiring Stateless, Loosely-Coupled orchestrations to support Distributed Environments.

These simple statements cover some very complicated issues, and before going further the three important terms should be identified and clarified within the context used here:

- Stateful means the computer or program keeps track of the state of interaction, usually by setting values in a storage field designated for that purpose. Stateless means there is no record of previous interactions and each interaction request has to be handled based entirely on information that comes with it. Reference http://whatis.techtarget.com/definition/stateless

- Tightly-Coupled…hardware and software are not only linked together, but are also dependent upon each other. In a tightly coupled system where multiple systems share a workload, the entire system usually would need to be powered down to fix a major hardware problem, not just the single system with the issue. Loosely-Coupled describes how multiple computer systems, even those using incompatible technologies, can be joined together for transactions, regardless of hardware, software and other functional components. References http://www.webopedia.com/TERM/T/tight_coupling.html http://www.webopedia.com/TERM/L/loose_coupling.html

- Digital Business is the creation of new business designs by blurring the digital and physical worlds. ... in an unprecedented convergence of people, business, and things that disrupts existing business models. Reference https://www.i-scoop.eu/digital-business
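The difference between the first pair of definitions can be made concrete with a minimal sketch. All names here (`StatefulCounterService`, `stateless_handle`, the `running_total` field) are hypothetical and chosen purely for illustration; they are not taken from any product or from the references above. The stateful version remembers each client between calls, while the stateless version works only from what arrives in the request and hands the updated state back to the caller.

```python
from dataclasses import dataclass, field


@dataclass
class StatefulCounterService:
    """Stateful: keeps track of each interaction in a field set aside for it."""
    totals: dict = field(default_factory=dict)

    def handle(self, client_id: str, amount: int) -> int:
        # The answer depends on what this instance remembers about the client.
        self.totals[client_id] = self.totals.get(client_id, 0) + amount
        return self.totals[client_id]


def stateless_handle(request: dict) -> dict:
    """Stateless: uses only the information that comes with the request."""
    new_total = request["running_total"] + request["amount"]
    # The updated state travels back to the caller; nothing is stored here.
    return {"client_id": request["client_id"], "running_total": new_total}


if __name__ == "__main__":
    service = StatefulCounterService()
    service.handle("sensor-1", 5)
    print(service.handle("sensor-1", 3))

    first = stateless_handle(
        {"client_id": "sensor-1", "running_total": 0, "amount": 5}
    )
    second = stateless_handle({**first, "amount": 3})
    print(second["running_total"])
```

Because `stateless_handle` holds nothing between calls, any copy of it on any node could serve the second request, which is what makes the stateless style a natural fit for a distributed environment.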

Clearly there is a need for something to act as an equivalent of Enterprise Architecture, and indeed there is no shortage of activities to create ‘Architectural’ Models for IoT. There is a fundamental challenge in the sheer breadth of what constitutes a Digital Market connected through IoT in different industry sectors. Though it might seem that the approach for a Smart Home is not likely to have much in common with Self-driving cars, other than both being part of a Smart City, at the level of the supporting infrastructure there are minimal differences.

The result is an overwhelming abundance of standards bodies, technology protocols and architectural models that will, in the short term, confuse rather than assist. A read through the listings covering each of those areas here will prove this point. Whilst there is no doubt the devil is in the detail and these things matter, IoT deployments should be driven from the Digital Business model outlined in the previous blog post.

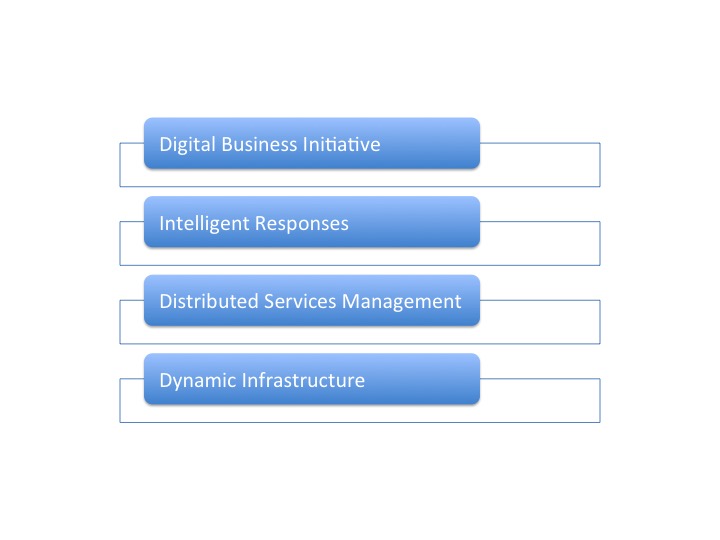

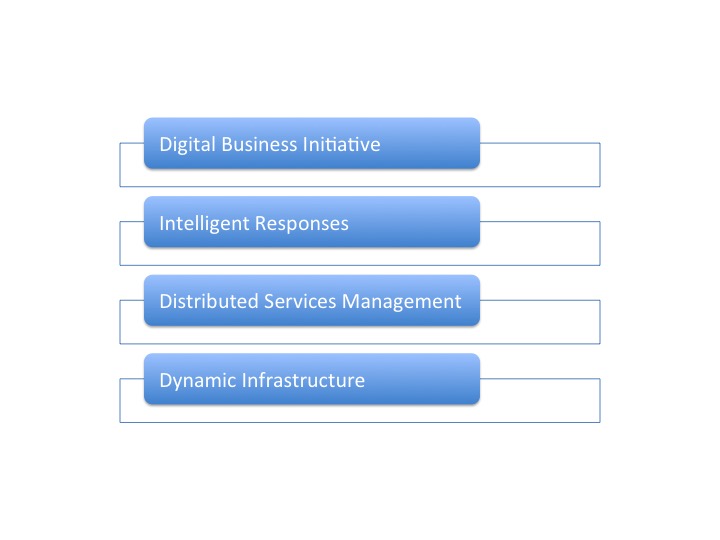

A blog is not the format to examine this topic in detail; instead the aim is to provide an overall understanding of a workable approach, and to make use of the views and solution sets available from leading Technology vendors to provide greater detail. The manner of breaking down the ‘architecture’ into the four abstracted conceptual layers illustrated below matches almost exactly with the Technology vendors’ own focus points.

Enterprise Architecture methodologies start with a conceptual stage, an approach designed to clarify the overall solution and outcome. This is necessary to avoid the distraction of specific product details, which often introduce unwelcome dependencies, at the first stage of shaping the solution/outcome vision.

The four layers illustrated correspond to the major conceptual abstractions present in building, deploying, and operating the necessary Technology model for a Digital Business. This blog focuses on the Dynamic Infrastructure and each of the following blogs in the series will focus on one of the abstracted layers.

The following concentrates on the role of the Dynamic Infrastructure, and in particular on Enterprise owned and operated infrastructure. The same basic functionality could be provided by a Cloud Services operator. However, there are significant issues around latency and risk in certain areas, ‘real time’ machinery operations being one example, that will lead to the selection of an on-premises Dynamic Infrastructure capability. It is most likely that a mix of external and internal Dynamic Infrastructure will be deployed in most Enterprises, with the Distributed Services Technology Management layer providing the necessary cohesive integration, a point made in the following Part 3b of this series.

The Dynamic Infrastructure shares many of the core traits of Internet and Cloud Technology in providing capacity, as and when required, in response to demand. The development of the detailed specification started in 2012 with the publication by Cisco of a white paper calling for a new model of distributed Cloud processing across a network. Under the name ‘Fog Computing’, this concept became increasingly important as the development of IoT redefined requirements.

In November 2015 a group of leading Industry Vendors (ARM, Cisco, Dell, Intel and Microsoft) founded the OpenFog Consortium. Today there are 56 members, including a strong representation from the Telecoms Industry. Cisco has developed its products and strategy in tune with the vision statement of the OpenFog Consortium, which states the requirement to be:

“Fog computing is a system-level horizontal architecture that distributes resources and services of computing, storage, control and networking anywhere along the continuum from Cloud to Things. By extending the cloud to be closer to the things that produce and act on IoT data, fog enables latency sensitive computing to be performed in proximity to the sensors, resulting in more efficient network bandwidth and more functional and efficient IoT solutions. Fog computing also offers greater business agility through deeper and faster insights, increased security and lower operating expenses.”

It is worth pointing out there are subtle, but important, differences between Fog Computing and pure Edge Based Cloud Computing. Edge Based solutions more closely resemble a series of closed activity pools with relatively self-contained computational requirements, whereas Fog Computing processing is more interactive and distributed, using a greater degree of high-level service management from the Network. Naturally the two definitions overlap, and this, together with other terms, can be confusing. In practice, it is important to note that “Fog” certainly includes “Edge”, but the term Edge is often used to indicate a more standalone functionality.
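The idea of performing latency-sensitive computing close to the sensors, and saving network bandwidth as a result, can be illustrated with a small sketch. This is an assumption-laden toy rather than anything drawn from the OpenFog specification or a vendor product: the function name `fog_node_process`, the reading format and the `shutdown:` action strings are all hypothetical. The node reacts locally to readings above a threshold and forwards only a compact summary upstream instead of every raw reading.

```python
from statistics import mean


def fog_node_process(readings, alarm_threshold):
    """Process raw sensor readings near their source (hypothetical fog node).

    Returns (local_actions, cloud_message): actions decided immediately at
    the node, and the compact summary that is all that gets sent upstream.
    """
    if not readings:
        return [], {"count": 0}

    # Latency-sensitive decision: made locally, with no round trip to the cloud.
    local_actions = [
        f"shutdown:{r['sensor']}" for r in readings if r["value"] > alarm_threshold
    ]

    # Bandwidth saving: one small summary replaces the full list of readings.
    values = [r["value"] for r in readings]
    cloud_message = {"count": len(values), "mean": mean(values), "max": max(values)}
    return local_actions, cloud_message


if __name__ == "__main__":
    sample = [
        {"sensor": "pump-1", "value": 70.0},
        {"sensor": "pump-2", "value": 95.0},
        {"sensor": "pump-3", "value": 75.0},
    ]
    actions, summary = fog_node_process(sample, alarm_threshold=90.0)
    print(actions)
    print(summary)
```

In the terms used above, a standalone Edge device would stop at the local action, while a Fog deployment would also coordinate the summaries and policies of many such nodes from the network.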

Three Technology vendors have focused their products and solution capabilities around providing such an infrastructure, with its mix of connectivity and processing triggered by a sophisticated management capability. Each vendor uses different terminology and has published its own definitions of what it identifies as the challenges and requirements.

Constellation Research would like to thank Cisco, Dell and HPE for contributing the following overviews that describe their point of view in respect of building and operating a Dynamic Infrastructure. Each vendor also provided links to enable a more detailed evaluation to be made of their approach and products.

Cisco’s Digital Network Architecture

At Cisco, we are changing how networks operate into an extensible, software-driven model that makes networks simpler and deployments easy. Customer requirements for digital transformation go beyond technology such as IoT and require that the network can handle changes, security, and performance in a policy-based manner designed around the application and business need.

Digital Network Architecture (DNA) is the framework for that network change, moving from a highly resource-intensive and time-consuming way of deploying network services and segments to a model built to speed up these processes and reduce cost. With DNA, we are focusing on automating, analyzing, securing and virtualizing network functions. Networks need to be more than just a utility; they need to drive the business and be secure in both the proactive and reactive sense. To do this, Cisco is building on our industry-leading security products combined with our industry-leading access products (including SD-WAN, wireless, and switching). We are helping customers change how they fundamentally work and embrace digital transformation.

Some examples of our continued innovation in this space include products like APIC-EM, the central engine of our Cisco DNA. APIC-EM delivers software-defined networking capabilities with policy and a simple user interface. It offers Cisco Intelligent WAN, Plug and Play for deploying Cisco enterprise routers, switches, and wireless controllers, Path Trace for easy troubleshooting, and Cisco Enterprise Service Automation.

Cisco is more than a networking vendor; we partner with our customers at all levels. We strive to understand not only what customers need at a technical and IT level, but what they need as a business. Cisco brings consistent and long-term investment into its products and services, adding value and features constantly. Nobody in the networking market invests in R&D and listens to customers like Cisco does. Cisco knows that the changing face of IT is to help bridge the gap to cloud and make sure that business needs are met with agile solutions that enhance the business. With Cisco DNA, CIOs, managers, and administrators all get what they need to move forward with digital transformation and IoT.

The details of the Cisco range of products and solutions can be found in three places: One, Two, Three

Dell Technologies Internet of Things Infrastructure

With the industry’s broadest IoT infrastructure portfolio together with a rapidly growing ecosystem of curated technology and services partners, Dell Technologies cuts through the complexity and enables you to access everything you need to deploy an optimized IoT solution from edge to core to cloud. Dell and its curated partners also provide proven, use-case-specific solution blueprints to help you achieve faster ROI. Dell has strong credibility to play in Industrial IoT from its origins in the supply of computing to the Industrial sector, as an early leader in sensor-driven automation, and through the EMC acquisition, which adds additional expertise in storage, virtualization, cloud-native technologies, and security and system management. Further, Dell Technologies is leading multiple open source initiatives to facilitate interoperability and scale in the market, because the difficulty of getting access to the myriad data generated by sensors, devices, and equipment is currently slowing down IoT deployments.

The challenge with IoT is to securely and efficiently capture massive amounts of data for analytics and actionable insights to improve your business. Dell Technologies enables the flexibility to architect an IoT ecosystem appropriate for your specific business case with analytics, compute, and storage distributed where you need it from the network’s edge to the cloud.

Part of Dell’s net-new investment in IoT is a portfolio of purpose-built Edge gateways with specific I/O, form factor and environmental specifications to connect the unconnected, capturing data from a wide variety of sensors and equipment. The Dell Edge Gateway line offers processing capabilities to start the analytics process and cleanse the data, as well as comprehensive connectivity to ensure that the critical data can be integrated into digital business systems where insights can be created and business value generated. These gateways also offer integrated tools for both Windows and Linux operating systems to ensure that the distributed architecture can be secured and managed. Reference here

Further, Dell EMC empowers organizations to transform business with IoT as part of the digitization initiative. Dell EMC’s converged solutions, including Vblock Systems, VxRack Systems, VxRail Systems, PowerEdge and other Dell EMC products, are prevalent in core data centers for enterprise applications, big data and video management software (VMS), as well as for cloud native applications. Dell simplifies how businesses can tap IoT as part of their digital assets: from the edge, with Dell’s Edge Gateways tied to sensors and operational technology, to the core data center and hybrid cloud, where Dell EMC plays a crucial role in blending historical and real-time analytics, processing and archival. The Dell EMC Native Hybrid Cloud Platform, a turnkey digital platform, accelerates time to value by simplifying the use of IoT as part of cloud native app deployment. Included in this portfolio is the Analytic Insights Module, a fully-engineered solution combining self-service data analytics with cloud-native application development in a single hybrid cloud platform, eliminating the months it takes to build your own.

The details of Dell’s range of products and solutions can be found here

HPE’s Hybrid IT

HPE believes that there are a number of dimensions to dynamic infrastructure. It is estimated that 40-45% of IoT data processing will occur “at the edge”, close to where the sensors and actuators are. This is why they have created their “EdgeLine” range of edge compute devices. HPE calls this the first dimension of Hybrid IT: getting the right mix of edge and core compute.

While “real-time” processing of IoT data will occur both at the edge and at the core, the “deep analytics” that a digital world requires, such as design simulations and deep learning, may need specialised computers because Moore’s law is running out of steam. HPE believes another dimension to Hybrid IT is the mix of conventional versus specialised compute. HPE’s specialised compute includes their SuperDome and SGI ranges.

Digitization is forcing a change in the architecture of applications. Gone are the three-tier applications running from web client to app server to database. These are replaced by application and service meshes: meshes of services that applications can call. This is why microservices and containers are becoming so popular (Docker has been downloaded over 4 billion times, for example). HPE built its Synergy servers with this new application architecture in mind:

- CPU, storage and fabric can be treated as independently scalable resource pools. This scaling can be applied to both physical infrastructure (for containers running directly on top of the hardware) and virtual machines.

- Infrastructure desired state can be specified in code. This allows the infrastructure on which an application is run to be put under source control with the source code.

- Because containers carry their required infrastructure specification with them, this specification can be given directly to the Synergy server for provisioning before containers are layered on top.
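The notion of specifying desired infrastructure state in code can be sketched generically. The following is not HPE Synergy’s API or file format; `plan_changes`, `DESIRED_STATE` and the resource names are hypothetical, used only to show the principle. A desired state is kept as data alongside the application source, and a small function compares it with the actual state of each independently scalable resource pool to work out what needs to change.

```python
# Hypothetical desired state, kept under source control with the application.
DESIRED_STATE = {"cpu_cores": 16, "storage_tb": 4, "fabric_ports": 2}


def plan_changes(desired: dict, actual: dict) -> list:
    """Compare desired and actual resource counts and list the steps needed.

    Each resource pool is treated independently, so one pool can be scaled
    up while another is scaled down or left alone.
    """
    steps = []
    for resource in sorted(set(desired) | set(actual)):
        want = desired.get(resource, 0)
        have = actual.get(resource, 0)
        if want > have:
            steps.append(("add", resource, want - have))
        elif want < have:
            steps.append(("remove", resource, have - want))
    return steps


if __name__ == "__main__":
    # Assumed current allocation, as a provisioning system might report it.
    actual_state = {"cpu_cores": 8, "storage_tb": 6, "fabric_ports": 2}
    for step in plan_changes(DESIRED_STATE, actual_state):
        print(step)
```

Because the desired state is plain data, a change to the infrastructure becomes a reviewable change in the same repository as the code that depends on it.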

Full details on HPE Infrastructure products can be found here.

Addendum

A distributed system is a model in which components located on networked computers communicate and coordinate their actions by passing messages. The components interact with each other in order to achieve a common goal. Three significant characteristics of distributed systems are: concurrency of components, lack of a global clock, and independent failure of components. Distributed computing also refers to the use of distributed systems to solve computational problems. In distributed computing, a problem is divided into many tasks, each of which is solved by one or more computers, which communicate with each other by message passing. Reference https://en.wikipedia.org/wiki/Distributed_computing
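The definition above, a problem divided into tasks solved by components that communicate only by passing messages, can be illustrated on a single machine. In this sketch threads stand in for networked computers and in-memory queues stand in for the network, which is a simplifying assumption; the names `worker` and `solve_distributed` are hypothetical. Each worker shares nothing with the others and sees only the messages it receives.

```python
import queue
import threading


def worker(inbox: queue.Queue, outbox: queue.Queue) -> None:
    """Solve tasks received as messages; stop when a None sentinel arrives."""
    while True:
        task = inbox.get()
        if task is None:
            break
        # The 'problem' here is just squaring a number, as a stand-in.
        outbox.put((task, task * task))


def solve_distributed(tasks, n_workers=3):
    """Divide the tasks among workers and collect their answers by message."""
    inbox, outbox = queue.Queue(), queue.Queue()
    threads = [
        threading.Thread(target=worker, args=(inbox, outbox))
        for _ in range(n_workers)
    ]
    for t in threads:
        t.start()
    for task in tasks:
        inbox.put(task)
    for _ in threads:
        inbox.put(None)  # one stop message per worker
    for t in threads:
        t.join()

    # All workers have finished, so the outbox now holds every answer.
    results = {}
    while not outbox.empty():
        task, answer = outbox.get()
        results[task] = answer
    return results


if __name__ == "__main__":
    print(solve_distributed([1, 2, 3, 4]))
```

The workers run concurrently and complete tasks in no guaranteed order, which is why the answers are keyed by task rather than collected as an ordered list.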

A Smart System is a distributed, collaborative group of connected Devices and Services that reacts to continuously and dynamically changing conditions by invoking individual Smart Services, or groups of them, to deliver optimized outcomes. The term originated in industrial automation, and therefore the current Wikipedia definition seems somewhat limited in its scope when compared to the wider IoT use of the term.

New C-Suite

Innovation & Product-led Growth

Tech Optimization

Future of Work

AI

ML

Machine Learning

LLMs

Agentic AI

Generative AI

Analytics

Automation

B2B

B2C

CX

EX

Employee Experience

HR

HCM

business

Marketing

SaaS

PaaS

IaaS

Supply Chain

Growth

Cloud

Digital Transformation

Disruptive Technology

eCommerce

Enterprise IT

Enterprise Acceleration

Enterprise Software

Next Gen Apps

IoT

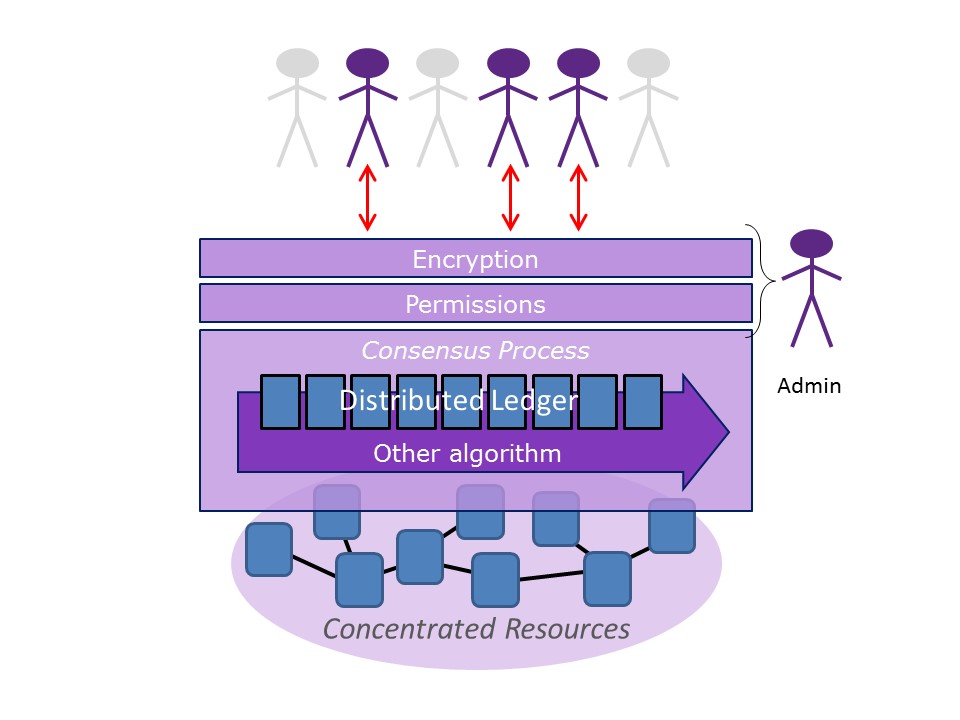

Blockchain

CRM

ERP

Leadership

finance

Customer Service

Content Management

Collaboration

M&A

Enterprise Service

Chief Information Officer

Chief Technology Officer

Chief Digital Officer

Chief Data Officer

Chief Analytics Officer

Chief Information Security Officer

Chief Executive Officer

Chief Operating Officer