Digital Business Distributed Business and Technology Models Part 3a; Distributed Service Management (Technology)


Service Management is a very broad term, and in the framework of Digital Business it plays a particularly broad and crucial role. A further complication is that the role is split in two: the Service Management of the enabling technologies, overlaid by the Service Management of distributed Business interactions and transactions. In the short term, Distributed Service Technology Management will dominate, and it must support and integrate both on-premise, Enterprise-owned infrastructure and external ‘as a Service’ Cloud Service provider infrastructure.

However, for a Digital Business, as defined in Part 1 of this series, to trade in the Digital Economy, it must also implement an external Distributed Service Business Management capability. Part 3b of this series provides a briefing on this requirement and, in particular, on the potential role of Blockchain technologies.

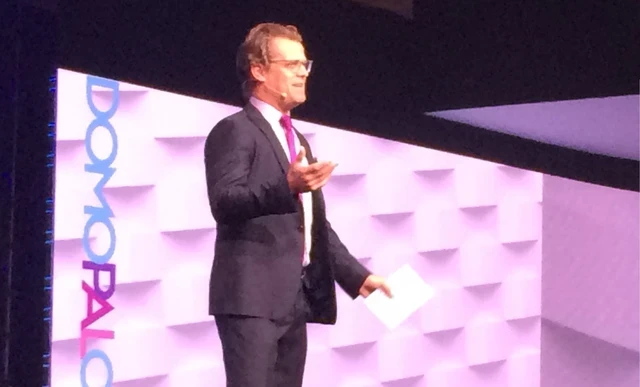

The preceding Part Two (see the appendix for details) focused on the first layer of a simple, four-layer abstracted framework defining the technologies that support a Digital Enterprise. Dubbed ‘Dynamic Infrastructure’, this base layer provides on-demand access to networking and computational resources, together with the Service Management directly associated with provisioning them. The Distributed Service Technology Management layer sits above it, providing and managing a wide range of sophisticated functions that enable the delivery of Business value.

The separation between the Service Management of the Dynamic Infrastructure and the Distributed Service Technology Management may seem odd, but it is important. The provisioning infrastructure may be provided by the enterprise, but increasingly a large portion will be provided ‘as a Service’ by market-leading vendors such as AWS, Google or Microsoft, amongst many others. Enterprises can expect to operate across both Private and Public Infrastructure, so their Distributed Service Technology Management must operate seamlessly across both, regardless of who supplies the underlying capacity.

Distributed Service Technology Management should be understood as the set of functions that must operate independently above the Dynamic Infrastructure layer. The overused popular term ‘Platform’ is frequently applied to these capabilities, but the differences between various Platforms are so large as to render the term meaningless as a requirement definition.

Comparing ‘Platform’ products is difficult due to the wide range of functions contained in this layer, some of which are very focused on a particular aspect. In addition, most Platforms are continually developing in line with deployment experience and market demands. Discussions on standards may be actively underway, but it will take both time and market maturity before they have significant impact. The definition of an IoT Platform started around the connectivity of sensors, with associated functionality for data management, but today Platforms are increasingly seen as an integral part of CAAST (Clouds, Apps, AI, Services & Things). High-function Platforms from leading technology vendors support the integrated operation of these technologies as the enabler for Digital Business.

Specialized Platforms, particularly for final-mile IoT connectivity, are still required, and as a further complication these are usually designed to connect into the sophisticated high-function Platforms. Such wide diversity in capabilities makes the term ‘Platform’ effectively meaningless as a capability definition. To gain an insight into the number of products described as an ‘IoT Platform’, visit a product-listing site such as Postscapes.

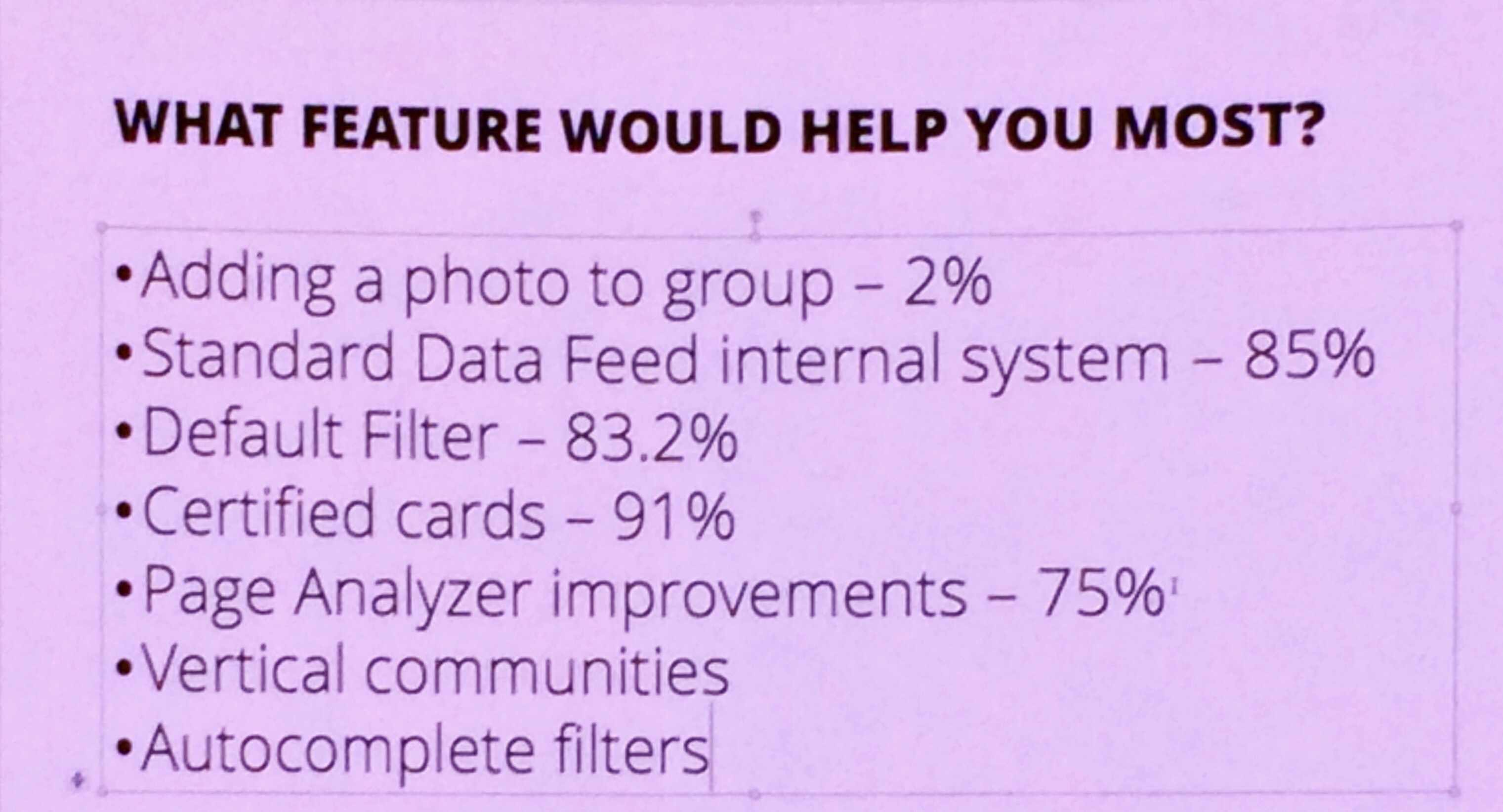

Platforms can be broken into four major groupings, which makes it easier to identify the positioning of major technology vendors in line with their core market focus;

- Enterprise operated Dynamic Infrastructure; examples; Cisco, Dell, HPE

- Cloud Services providers+; examples; AWS, Google, IBM, Microsoft, Salesforce, SAP

- IoT ‘final mile’ focused; examples; Libelium, PTC, ThingWorx

- Open Source Development*; examples; AllJoyn, GE Predix, OpenIoT

+IBM, Salesforce and SAP all offer Platforms that connect to their respective Clouds, but their focus is on providing Business Apps, not Cloud capacity. They have been included to avoid the questions that would arise if their names were omitted.

*See a list of 21 Open Source Projects here

As is usually the case in the initial stages of a new technology market, vendor-proprietary solutions are likely to be the most attractive for the requirements of first-generation deployments. Such deployments tend to be focused and do not need the full range of functions that will be required later, when maturity and scale drive product selection. Not unnaturally, there will be concern about vendor lock-in and/or restrictions on the development of a fully functional Distributed Services Technology Management layer, but this may be less concerning than it might seem.

For any Digital Enterprise, a crucial success factor is the implementation of an independent Distributed Service Technology Management layer, able to integrate ‘any to any’ combinations of Private or Public Dynamic Infrastructure into advanced operational Services that support the higher business layers.
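To make the ‘any to any’ idea more concrete, the following is a minimal Python sketch, not a product design, of a management layer that presents a single provisioning call to the higher layers while drawing on either Private or Public Dynamic Infrastructure. All class names (`InfrastructureProvider`, `PrivateDataCentre`, `PublicCloud`, `ServiceTechnologyManager`) are hypothetical, and the ‘private first, then public’ fallback rule is an assumed policy chosen only for simplicity.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field


@dataclass
class Resource:
    """A provisioned unit of capacity (hypothetical model)."""
    provider: str
    kind: str
    size: int


class InfrastructureProvider(ABC):
    """Hypothetical adapter contract that every infrastructure source implements."""

    name: str

    @abstractmethod
    def provision(self, kind: str, size: int) -> Resource:
        """Return a Resource, or raise RuntimeError if the request cannot be met."""


class PrivateDataCentre(InfrastructureProvider):
    """Stand-in for Enterprise-owned, on-premise capacity with a fixed limit."""

    def __init__(self, capacity: int) -> None:
        self.name = "private"
        self.capacity = capacity

    def provision(self, kind: str, size: int) -> Resource:
        if size > self.capacity:
            raise RuntimeError("insufficient on-premise capacity")
        self.capacity -= size
        return Resource(self.name, kind, size)


class PublicCloud(InfrastructureProvider):
    """Stand-in for an 'as a Service' provider, treated here as unlimited."""

    def __init__(self, name: str) -> None:
        self.name = name

    def provision(self, kind: str, size: int) -> Resource:
        return Resource(self.name, kind, size)


@dataclass
class ServiceTechnologyManager:
    """Single provisioning entry point for the higher business layers."""
    providers: list[InfrastructureProvider]  # in order of preference
    inventory: list[Resource] = field(default_factory=list)

    def provision(self, kind: str, size: int) -> Resource:
        # Try each provider in preference order; fall back to the next on failure.
        for provider in self.providers:
            try:
                resource = provider.provision(kind, size)
            except RuntimeError:
                continue
            self.inventory.append(resource)
            return resource
        raise RuntimeError("no provider could satisfy the request")


# Usage: on-premise capacity is used first, then demand overflows to a public cloud.
manager = ServiceTechnologyManager([PrivateDataCentre(capacity=10), PublicCloud("public-cloud-a")])
first = manager.provision("vm", 8)
second = manager.provision("vm", 8)
print(first.provider, second.provider)  # private public-cloud-a
```

Because the higher layers only ever call `ServiceTechnologyManager.provision`, a provider can be added or swapped without changing them, which is the kind of independence from the Dynamic Infrastructure layer described above.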

A great deal of Technology attention is focused upon the architecture and standards necessary to achieve this because, by definition, the Distributed Service Management layer must be based on standardized principles to ensure ‘open’ operation. Leading technology vendors are active in addressing the requirement for standards. Almost all references to IoT Architecture are in reality references to the Architecture of the Distributed Services Technology Management layer, and have relatively little to contribute to the remaining three layers of the Digital Business framework.

If the number of Platforms available in the market, each with different features, is confusing, the confusion is made worse when the number of communities developing architectural models and standards is taken into consideration. This is not the place to examine, or even list, each one individually. This blog aims to provide an informed overview that builds understanding of the considerations necessary for enterprise deployment and product evaluation.

Commercially sponsored standards activities often have a scope, or point of view, driven by the market positioning and products of particular vendors. This often fragments the overall architecture required and makes it difficult to use for objective evaluation. Those charged with managing the introduction of Distributed Services Technology Management into their enterprise need a comprehensive future framework to ensure that their various tactical deployment choices come together in a cohesive transformation of capabilities.

Perhaps the best example of an independent approach, with a scope covering the entire architectural framework, comes from the IoT Forum. This body took over the EU's work on IoT Architecture and extended its reach to be global, as well as making it more inclusive through a series of events held around the world. EU funding has reduced reliance on technology vendor sponsorship, enabling the production of a detailed report on what is required, and why, published as an introduction to the ‘Architectural Reference Model’, or ‘ARM’.

The IoT Forum work on ARM provides an excellent background to understanding this complex requirement, as well as offering a strategic definition that can serve as a longer-term target for the development of an Enterprise Distributed Services Technology Management layer. Current deployment requirements can be assessed against this framework to establish requirement definitions for product choice. This is particularly useful given the lack of reliable standards to guide choice.

The work on Architectural Frameworks by various bodies across the Technology industry currently provides valuable guidance on incorporating the first standards. However, in determining how the Technology elements will support interworking, internally and externally, it is easy to lose sight of the real question: the technology is there to support and enable the Distributed Services Business Management capabilities.

The Digital Business Enterprise only exists because it is part of the Digital Economy, conducting business by exchanging Services with its industry ecosystem of partners. In this continuously dynamic model, with ever-changing Business partners and transactions, a distributed and decentralized commercial transaction recording capability is a necessity.

In a decentralized, distributed Digital Business ecosystem operating in a loosely coupled, stateless manner, existing forms of transaction management, based on predefined, closely coupled relationships and managed state, cannot be applied. The huge interest in Blockchain technology stems from its potential to provide this new and radically different capability.
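The property that makes Blockchain attractive for transaction recording can be illustrated with a minimal Python sketch of a hash-linked, append-only ledger: each record carries the SHA-256 digest of its predecessor, so altering any earlier transaction becomes detectable. This is a deliberately simplified example under stated assumptions; it runs on a single node and omits the distribution, consensus and digital signatures that a real decentralized Blockchain depends on. The `Ledger` and `Block` names, the partner names and the transaction fields are all hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    index: int
    transaction: dict
    prev_digest: str
    digest: str


def block_digest(index: int, transaction: dict, prev_digest: str) -> str:
    # Canonical JSON (sorted keys) so identical content always yields the same digest.
    payload = json.dumps({"index": index, "tx": transaction, "prev": prev_digest}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class Ledger:
    """Append-only, hash-linked record of transactions (single-node sketch, no consensus)."""

    def __init__(self) -> None:
        genesis_prev = "0" * 64
        self.chain = [Block(0, {}, genesis_prev, block_digest(0, {}, genesis_prev))]

    def append(self, transaction: dict) -> Block:
        prev = self.chain[-1]
        index = prev.index + 1
        block = Block(index, transaction, prev.digest, block_digest(index, transaction, prev.digest))
        self.chain.append(block)
        return block

    def is_valid(self) -> bool:
        # Recompute every digest and check that each block points at its predecessor.
        for i, block in enumerate(self.chain):
            if block.digest != block_digest(block.index, block.transaction, block.prev_digest):
                return False
            if i > 0 and block.prev_digest != self.chain[i - 1].digest:
                return False
        return True


# Usage: record two Service exchanges between hypothetical partners, then tamper with one.
ledger = Ledger()
ledger.append({"from": "partner-a", "to": "partner-b", "service": "shipping", "amount": 120})
ledger.append({"from": "partner-b", "to": "partner-c", "service": "insurance", "amount": 40})
print(ledger.is_valid())  # True
ledger.chain[1].transaction["amount"] = 999  # alter a recorded transaction
print(ledger.is_valid())  # False
```

Hash-linking only makes tampering evident; it is the replication of the ledger across independent partners, and agreement between them, that removes the need for a central, closely coupled transaction manager.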

It should be noted that Bitcoin, often quoted as an example of Blockchain, is not indicative of the overall capabilities that can be developed using Blockchain technologies. Bitcoin is one particular implementation that uses the technology in a specific manner, with corresponding limitations.

Part 3b of this series provides a briefing on decentralized Distributed Services Business management.

Summary; Background to this series

This is the third part in a series on Digital Business and the Technology required to support the ability of an Enterprise to do Digital Business. The series explains why a simple definition, shown in the diagram below, is adopted to classify the technology requirements rather than attempting any form of conventional detailed Architecture, and gives a fuller explanation of the Business requirements.

Part One - Digital Business Distributed Business and Technology Models;

Understanding the Business Operating Model

Part Two - Digital Business Distributed Business and Technology Models;
