Digital Business Distributed Business and Technology Models Part 4: Augmented Intelligence and Machine Learning

Vice President and Principal Analyst, Constellation Research

Constellation Research

Andy Mulholland is Vice President and Principal Analyst focusing on cloud business models. Formerly the Global Chief Technology Officer for the Capgemini Group from 2001 to 2011, Mulholland successfully led the organization through a period of mass disruption. Mulholland brings this experience to Constellation’s clients seeking to understand how Digital Business models will be built and deployed in conjunction with existing IT systems.

Coverage Areas

Consumerization of IT & The New C-Suite: BYOD,

Internet of Things, IoT, technology and business use

Previous experience:

Mulholland co-authored four major books that chronicled the change and its impact on Enterprises, starting in 2006 with the well-recognised book ‘Mashup Corporations’ with Chris Thomas of Intel. This was followed in…


Business has constantly pushed for better ‘intelligence’ to support improved decision-making in the continued drive towards greater competitiveness. Cloud Services, by reducing cost and improving the availability of capacity, together with the rise of Big Data from Web and Social activity, have taken analytics and Business Intelligence to new highs. But are these the capabilities needed to support Digital Business, with its massively increased data loads, constantly shifting perspectives, and the collapsed time frames required to achieve ‘immediate’ dynamic optimization? Part 4 of this series explores the new requirement for ‘Intelligence’ in a Digital Business, as defined in Part 1 of this series.

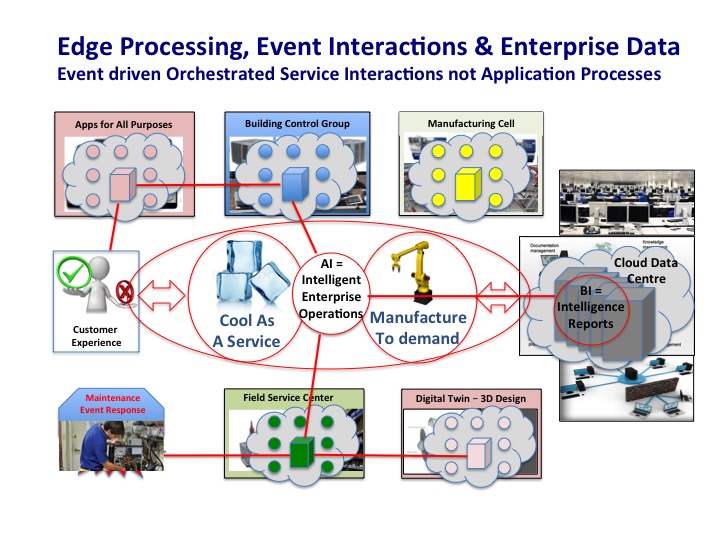

At the heart of Digital Business is the use of IoT sensing technology to convert physical objects and events into digital representations, so that Augmented Intelligence and Machine Learning can be applied to create Business benefit. The result is a flow of data into the Enterprise, in volumes and of types that, together with constantly changing market dynamics, simply defy traditional BI reporting methods. The challenge is both to analyze these data flows and to make optimized decisions within the limited time frames that optimized responses require.

AI and IoT are the two new core technologies at the heart of the CAAST technology model of Clouds, Apps, AI, Services and Things, which, when used together in new integrated frameworks, create the capabilities of Digital Business.

The first blog in this series, Part 1, outlines the business model architecture of a Digital Enterprise, and it is recommended reading before going further. A notable feature of the Business activities of a Digital Enterprise is a constant series of dynamic and innovative adjustments in response to the conditions of its Digital Ecosystem of partners. This market-led optimization is a startling reversal of current, traditional Business models, which are built on optimizing Enterprise assets, with a stable operating model as a key factor.

This reversal in the Business model driver unsurprisingly also affects the current, traditional approach to implementing a Business-driven requirement. IT Enterprise architecture methods start with the business requirement, which is assumed to be an Enterprise Application, and proceed down the technology stack. At each layer a technology, or product, is selected based on the requirements of the Enterprise Application. As many Enterprise Applications were written for particular operating systems and other specific technologies, the result is a custom implementation.

Increased awareness of standards and a trend towards standardization, including the benefits of hypervisors with Cloud technology, have improved commonality in recent years. Fortunately, the complication and cost of maintaining custom, individual technology deployments under Enterprise Applications have been kept low in the past, because the Enterprise Business model relied on stability and so expected Business applications to have a life of many years, spreading that cost over a long period.

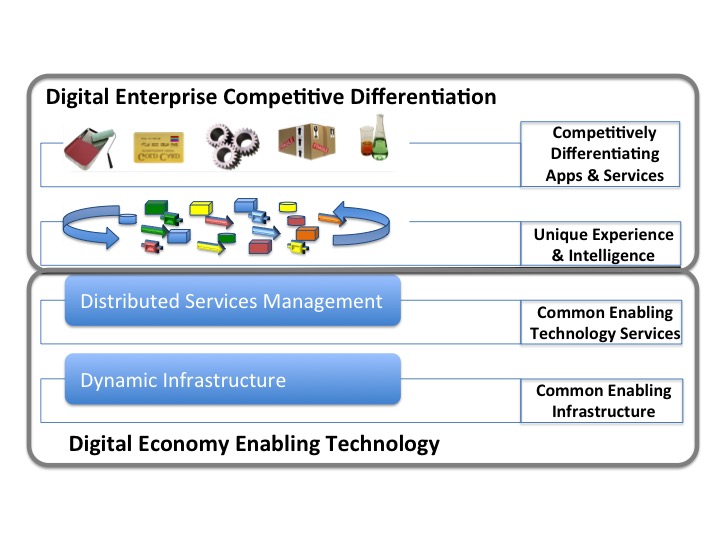

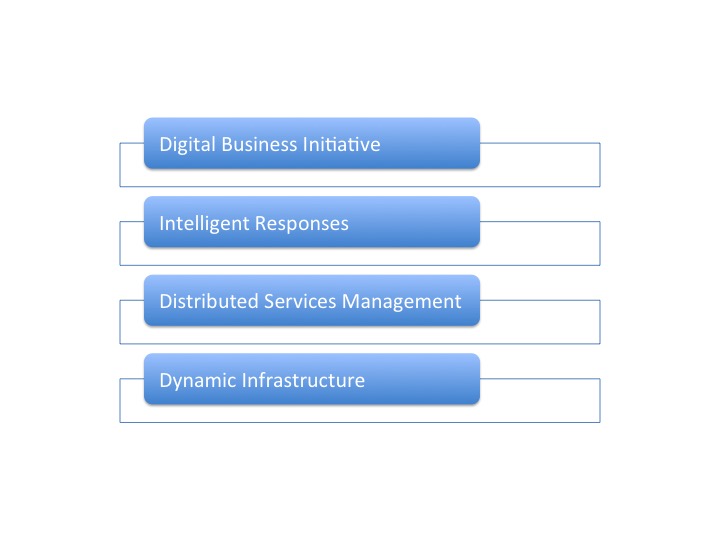

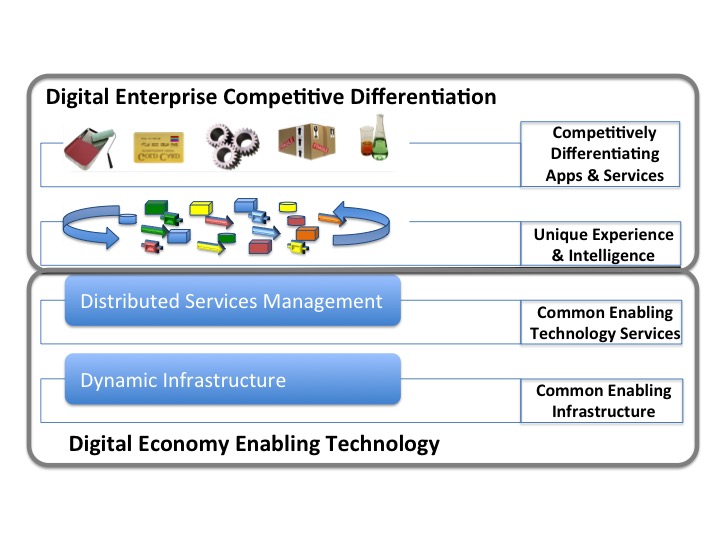

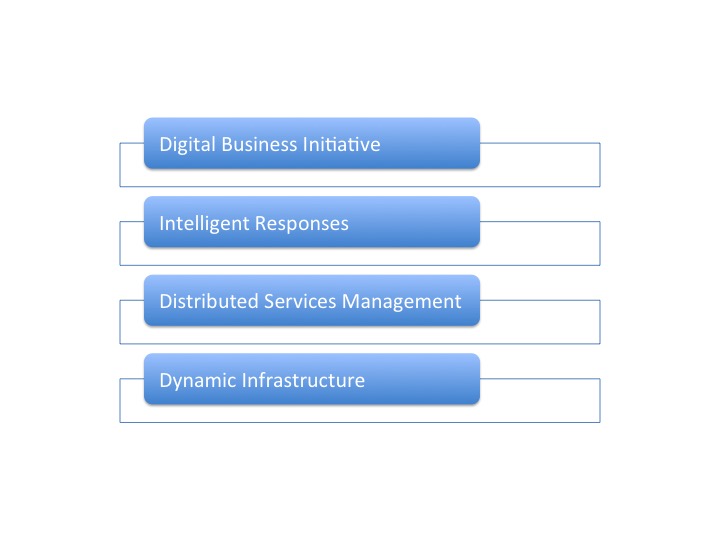

Digital Business models are built on dynamic responsiveness to markets and opportunities, and are delivered through quickly built Apps rather than monolithic applications; accordingly, delivery relies on deploying over a common set of enabling technologies. These capabilities span both internal and external infrastructure and, relating to the two common, shared infrastructure layers, are described in more detail in Parts 2, 3a and 3b, titled Dynamic Infrastructure, Distributed Services Technology Management and Distributed Services Business Management respectively.

It is in the final two layers, Augmented Intelligence and Machine Learning, covered here, and Business Apps and Services, covered in the concluding part, that a Digital Business competitively differentiates itself. Aligned to the fast-moving, lightweight nature of rapidly deployed Apps is an Enterprise organizational model that reflects the same shift away from centralized, monolithic processes and departments. The dynamic, innovative Digital Enterprise has become a fast-moving, decentralized structure able to take decisions and act swiftly.

The centralized, structured Enterprise IT data environment, which uses historic analysis and reporting to deliver Business Intelligence, or BI, is not present in the activities and environment that relate to Digital Business.

Enterprise IT incorporating BI reporting remains vitally important for the processes that support key commercial functions, including compliance, where stability and ongoing comparisons remain key. However, where Digital Business models are implemented, the transformation in both Business and Technology models not surprisingly calls for an equal transformation in the approach to ‘Intelligence’.

The following diagram illustrates the two layers of Intelligence and of Business Apps and Services, with the diversity of the Apps and Services layer producing a constantly changing demand for intelligent responses from the Intelligence layer below. Note: the term App is used to indicate a deployed business capability whose functionality is fully controlled by a single Enterprise. The term Service is used to indicate an orchestration of functional elements from different Enterprises, created either dynamically in response to an event or to build a Business offer to the market, and therefore not totally controlled by a single Enterprise.
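The distinction between an App and a Service turns on control. As a minimal sketch, assuming each functional element can be tagged with the single Enterprise that controls it, the test reduces to counting distinct owners. The names `FunctionalElement`, `classify`, ‘Acme’ and ‘PartnerCo’ are hypothetical illustrations, not part of any product or of the series itself.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class FunctionalElement:
    """Hypothetical building block of a deployed business capability."""
    name: str
    owner: str  # the Enterprise that controls this element


def classify(elements: List[FunctionalElement]) -> str:
    """Return 'App' if one Enterprise controls every element, else 'Service'."""
    owners = {e.owner for e in elements}
    if not owners:
        raise ValueError("a capability needs at least one functional element")
    return "App" if len(owners) == 1 else "Service"


if __name__ == "__main__":
    ordering = [FunctionalElement("catalogue", "Acme"),
                FunctionalElement("checkout", "Acme")]
    repair = [FunctionalElement("fault detection", "Acme"),
              FunctionalElement("engineer dispatch", "PartnerCo")]
    print(classify(ordering))  # App
    print(classify(repair))    # Service
```

In practice control is rarely this binary, but the sketch captures the rule as defined above: a single controlling Enterprise makes an App, more than one makes a Service.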

One of the key traits that defines Digital Business is the ability to ‘Read and React’ to the data flow arising from the events and circumstances instrumented by IoT. The data volumes to be analyzed, and the time frames in which to do so, are one part of the challenge. The other is to use human experience and machine learning to automate the ‘react’ decision-making based on the analysis.
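The ‘Read and React’ pattern can be sketched in a few lines. This is a minimal illustration only, assuming a single stream of numeric IoT sensor readings: ‘read’ is a rolling-window analysis and ‘react’ is a callback fired when a reading departs sharply from recent behaviour. The class name `ReadAndReact`, the window size and the deviation threshold are hypothetical choices, not part of any vendor product.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Callable, Deque, List


class ReadAndReact:
    """Hypothetical sketch: 'read' a sensor stream, 'react' to outliers."""

    def __init__(self, window: int, threshold: float,
                 react: Callable[[float, float], None]) -> None:
        self.readings: Deque[float] = deque(maxlen=window)  # recent history only
        self.threshold = threshold  # standard deviations that count as an event
        self.react = react          # action taken when an event is detected

    def ingest(self, value: float) -> bool:
        """Read one value; return True if it triggered a reaction."""
        triggered = False
        if len(self.readings) == self.readings.maxlen:
            mu = mean(self.readings)
            sigma = pstdev(self.readings)
            # Flag readings far outside recent behaviour (skip if history is flat).
            if sigma > 0 and abs(value - mu) > self.threshold * sigma:
                self.react(value, mu)
                triggered = True
        self.readings.append(value)
        return triggered


if __name__ == "__main__":
    alerts: List[float] = []
    monitor = ReadAndReact(window=5, threshold=3.0,
                           react=lambda v, mu: alerts.append(v))
    for reading in [20.1, 20.3, 19.9, 20.0, 20.2, 20.1, 35.0, 20.0]:
        monitor.ingest(reading)
    print(alerts)  # [35.0]
```

Only the 35.0 spike triggers a reaction. A real deployment would also have to decide whether outliers should enter the history window, which this sketch does not address.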

AI is used as a convenient term to cover the huge range of technologies and methods being developed to address these challenges. For the immediate future, the major Technology vendors broadly agree that Augmented Intelligence is the key: the goal is to use, or augment, Human Intelligence to work in this challenging environment, rather than trying to replace human experience with entirely computer-generated responses. Information Week published an excellent article defining this topic in response to US Government concerns.
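One simple way to express the ‘augment, not replace’ goal in software is a confidence threshold: the system acts alone only when it is sure, and otherwise hands its recommendation to a person. The sketch below assumes a model that attaches a confidence score to each proposed action; `Recommendation`, `route` and the 0.9 threshold are hypothetical illustrations, not taken from any of the vendor approaches discussed here.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Recommendation:
    """A machine-generated proposal for a 'react' decision (hypothetical)."""
    action: str
    confidence: float  # model's own estimate, 0.0 to 1.0


def route(recs: List[Recommendation],
          auto_threshold: float) -> Tuple[List[Recommendation], List[Recommendation]]:
    """Split proposals into those executed automatically and those sent to a person.

    High-confidence decisions are automated; everything else goes to a human
    expert, whose judgement the machine augments rather than replaces.
    """
    automated = [r for r in recs if r.confidence >= auto_threshold]
    for_review = [r for r in recs if r.confidence < auto_threshold]
    return automated, for_review


if __name__ == "__main__":
    proposals = [
        Recommendation("reorder spare part", 0.97),
        Recommendation("reroute delivery", 0.62),
        Recommendation("adjust thermostat", 0.91),
    ]
    auto, review = route(proposals, auto_threshold=0.9)
    print([r.action for r in auto])    # ['reorder spare part', 'adjust thermostat']
    print([r.action for r in review])  # ['reroute delivery']
```

Lowering the threshold shifts work from people to automation; where to set it is a business decision rather than a technical one.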

Given time and the right information, an experienced human mind can work out a reasonable solution to the requirements for ‘read and react’ responses, but not at the frequency and volumes that Digital Business requires. This is comparable with the drivers that created Industrial Automation, where ever-increasing production volumes pushed speeds beyond human operators’ capabilities. Some, perhaps many, repetitive Office-based roles face similar pressures, and though human interaction with computers can be improved, ultimately the answer is increasingly likely to be Office Automation.

Even a focus on Augmented Intelligence and Machine Learning technology introduces a big and complex subject that is beyond the scope of this blog. Here the focus remains on using the technologies of CAAST (Clouds, Apps, AI, Services and Things) to build solutions for Digital Business. To learn more about AI and Augmented Intelligence, the following provide good primers on the topic: first The Verge’s explanation of common terms, then Wired’s more in-depth explanation of Machine Learning, which includes this useful paragraph:

AI is a branch of computer science attempting to build machines capable of intelligent behavior, while Stanford University defines machine learning as “the science of getting computers to act without being explicitly programmed”. You need AI researchers to build the smart machines, but you need machine-learning experts to make them truly intelligent.

(Quote from Wired; see link above.)

Information Week published ‘Why AI should stand for Augmented Intelligence’, drawing on an interview with IBM about its approach. PC Mag considered how Salesforce approached AI, noting how, and where, it fits in relation to the Apps above it and the Data flow handling and infrastructure below it. The importance and role of Machine Learning come up in these articles, with ZDNet discussing SAP’s views on Machine Learning as a closing view for the Technology background reading.

The interviews all point to the major technology vendors sharing, and working on, similar definitions and capabilities for adding intelligence and automated processing. But it will be quite a while before the technology matures enough to allow interworking between vendors. As Augmented Intelligence and Machine Learning call for in-depth experience, knowledge and focus on a particular element, an Enterprise will face using different vendors for different business deployments.

The Digital Enterprise is made up of a series of high-business-value activity ‘pools’ operating in a semi-autonomous manner to make rapid, innovative, competitive moves in response to changing events and operational activities. This is a complete reversal of current Business Models, which rely on centralized conformity around a set of optimized processes to reduce costs.

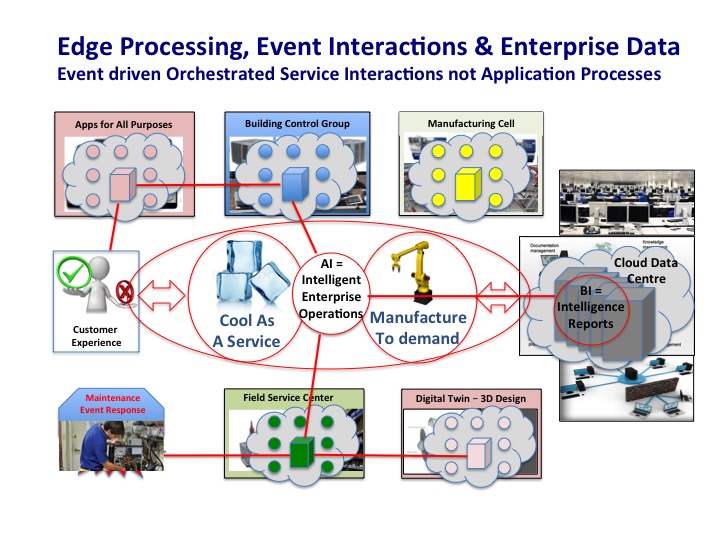

The accompanying diagram, which also appears in Part 1, Understanding the Digital Business Operating Model, illustrates this point. The independent enterprise activity ‘pools’ are shown, with the red lines illustrating orchestrations between the activity pools in response to an external event in a customer building. This same reversal of the Business Model applies equally to the Technology Model, shifting the architecture from close-coupled, stateful alignment with fixed enterprise processes to loosely coupled, stateless orchestrations in response to the events of Digital Business.
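The shift from close-coupled processes to loosely coupled, stateless orchestrations can be illustrated with a small event-routing sketch. It assumes, purely for illustration, that each activity pool can be represented as a stateless function and that pools subscribe to event types rather than calling one another directly. The pool names, event fields and the `orchestrate` function are hypothetical and are not drawn from the Part 1 diagram.

```python
from typing import Callable, Dict, List

# Hypothetical event: a plain dict describing something sensed in a customer building.
Event = Dict[str, str]
# Each activity pool is modelled as a stateless function: same event in, same response out.
Pool = Callable[[Event], str]


def maintenance_pool(event: Event) -> str:
    return f"schedule inspection at {event['site']}"


def logistics_pool(event: Event) -> str:
    return f"ship part {event['part']} to {event['site']}"


def billing_pool(event: Event) -> str:
    return f"open service ticket for {event['customer']}"


# Loose coupling: pools register interest in event types instead of calling each other.
SUBSCRIPTIONS: Dict[str, List[Pool]] = {
    "equipment_fault": [maintenance_pool, logistics_pool, billing_pool],
    "low_stock": [logistics_pool],
}


def orchestrate(event_type: str, event: Event) -> List[str]:
    """Compose a response from whichever pools subscribe to this event type.

    No state is kept between calls; each orchestration is assembled afresh
    from the event that triggered it.
    """
    return [pool(event) for pool in SUBSCRIPTIONS.get(event_type, [])]


if __name__ == "__main__":
    fault = {"site": "Building 7", "part": "HVAC fan", "customer": "Acme"}
    for step in orchestrate("equipment_fault", fault):
        print(step)
```

Adding a new pool means adding a subscription, not rewiring an existing process, which is the practical meaning of loose coupling in this context.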

Augmented Intelligence starts with deployments that improve the operations of a particular activity. This allows a technology vendor to be selected either for its specialist knowledge of the activity or to maximize the impact of Augmented Intelligence/Machine Learning on the existing technology installation. In time, as a second phase with increasing maturity, Augmented Intelligence/Machine Learning will move to optimizing, at Enterprise level, the interconnections between the activity pools.

The ability to start business-beneficial deployments around specific activities, rather than waiting for full enterprise-wide maturity, should do much to reduce risks and difficulties for early adopters.

A commercial decision on where, and why, to initially deploy Augmented Intelligence/Machine Learning should rest on three conditions: 1) the importance of the activity pool and the scope for its operational improvement; 2) it must be possible to use IoT sensing to provide digital data of the quality needed to operate Augmented Intelligence/Machine Learning; 3) the output must be capable of being channeled to ‘react’ Apps that can deliver the Business benefit as a ‘real time’ optimized operational improvement.
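The three conditions can be read as a screening step. The sketch below is one hypothetical way to apply them: it treats the data-quality and ‘react’ App conditions as pass/fail gates and ranks the surviving activity pools by a simple product of importance and improvement scope. The 1 to 5 scores, the multiplication and the pool names are illustrative assumptions, not a method given in this series.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ActivityPool:
    name: str
    business_importance: int   # hypothetical 1 to 5 score (condition 1)
    improvement_scope: int     # hypothetical 1 to 5 score (condition 1)
    iot_data_available: bool   # can IoT sensing supply data of sufficient quality? (condition 2)
    react_app_available: bool  # can the output drive a 'react' App in real time? (condition 3)


def shortlist(pools: List[ActivityPool]) -> List[str]:
    """Apply the three conditions and rank the pools that pass.

    Conditions 2 and 3 are hard gates; condition 1 ranks the remaining
    pools by importance multiplied by scope for improvement.
    """
    eligible = [p for p in pools if p.iot_data_available and p.react_app_available]
    eligible.sort(key=lambda p: p.business_importance * p.improvement_scope,
                  reverse=True)
    return [p.name for p in eligible]


if __name__ == "__main__":
    candidates = [
        ActivityPool("field maintenance", 5, 4, True, True),
        ActivityPool("invoicing", 3, 2, True, True),
        ActivityPool("product design", 4, 5, False, True),
    ]
    print(shortlist(candidates))  # ['field maintenance', 'invoicing']
```

‘Product design’ scores highly on importance and scope but is excluded because, in this made-up example, IoT sensing cannot supply the data it would need.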

Summary: The Digital Enterprise Business is, by definition, a business that has created a full digital representation of its principal Business assets and activities in order to use computational facilities to optimize business operations. Though IoT sensing may initially seem to be at the core, Augmented Intelligence/Machine Learning represents the other half of the transformation.

Links to Information on Augmented Intelligence/ Machine Learning

The following is not intended to be an exhaustive listing and is presented alphabetically. It is provided for informative purposes, and selection is based on Client and Press interest. Inclusion in, or absence from, the listing does not imply any significance.

Amazon AWS - https://aws.amazon.com/machine-learning/

Google - https://research.google.com/pubs/MachineIntelligence.html

IBM Watson - https://www.ibm.com/watson/

Microsoft - https://news.microsoft.com/features/microsofts-ai-vision-rooted-in-research-conversations/

Salesforce - https://www.salesforce.com/uk/products/einstein/overview/

SAP - https://www.sap.com/uk/solution/machine-learning.html

Summary: Background to this series

This is the fourth part in a series on Digital Business and the Technology required to support an Enterprise’s ability to do Digital Business. The series explains why it adopts the simple definition shown in the diagram below to classify the technology requirements, rather than attempting any form of conventional detailed Architecture, and gives a fuller explanation of the Business requirements.

Part One - Digital Business Distributed Business and Technology Models:

Understanding the Business Operating Model

Part Two - Digital Business Distributed Business and Technology Models:

The Dynamic Infrastructure

Part Three - Digital Business Distributed Business and Technology Models:

- Distributed Services Technology Management

- Distributed Services Commercial Management
