IoT: where two, or even three, possibly four, Worlds collide. Or, Operational Technology meets Information Technology

Vice President and Principal Analyst, Constellation Research


Andy Mulholland is Vice President and Principal Analyst focusing on cloud business models. Formerly the Global Chief Technology Officer for the Capgemini Group from 2001 to 2011, Mulholland successfully led the organization through a period of mass disruption. Mulholland brings this experience to Constellation’s clients seeking to understand how Digital Business models will be built and deployed in conjunction with existing IT systems.


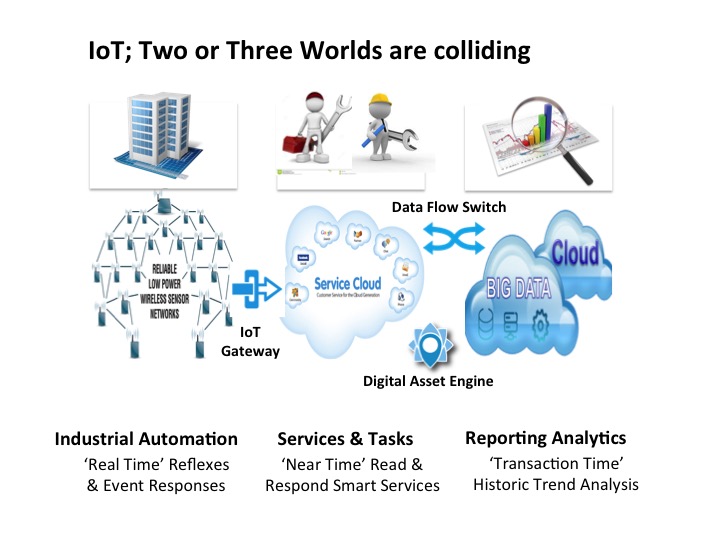

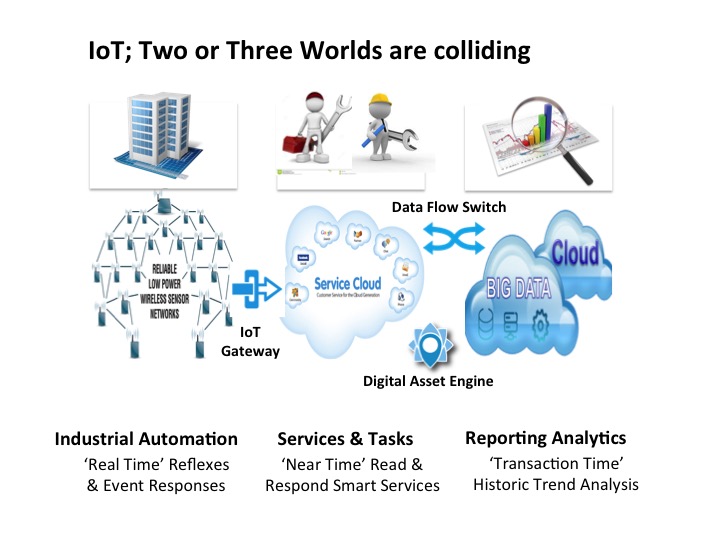

The title originates from a chapter heading, ‘IoT; Two Worlds collide’, in a recent Telefonica report on IoT Security. The chapter draws attention to technology vendors, as well as enterprise staff, coming from two different backgrounds with very little in common. In reality it’s perhaps slightly worse: there are three distinctly different functional zones in a mature IoT architecture, and connectivity is split between mobility and more conventional wired, or wireless, networking.

The technology market, and in-house enterprise expertise, is split between Information Technology, operating the business administration, and Industrial Automation, long-time users of sensing technology, supporting Operational Technology. Each subdivides its skills further; as an example, there are separate IT groups for Web/Services, Cloud, and Mobility technology specializations.

The recent Mobile World Congress in Europe devoted considerable session time and exhibition space to IoT, in contrast to its traditional focus on Mobile telephony. Of course, in reality a Smart Phone is an IoT device, as many exhibition features were keen to demonstrate, along with Wearable technology and other more specialized 4G IoT sensors and devices. One of the most compelling fully integrated IoT business value demonstrations showed automated farm management of IoT-tagged cows.

Many are surprised to learn that Precision Farming, as it is called (see Constellation Blog IoT Market in 2016), continues to be a showcase for business, and sector, transformation through the adoption of IoT. Why doesn’t this register? Almost certainly it is due to the lack of anything in common that would bring it to the attention of IT, and OT, practitioners. Farms are neither office nor factory based, and they have moving machinery and animals at the center of operations. Accordingly, Precision Farming has been driven by Telecom vendors providing a Mobility connected Architecture.

Equally, those engaged with Precision Farming would find the Industrial vendors’ approach to Machine-to-Machine IoT on the factory floor, or in Buildings, incomprehensible. However, the real problem is the extent to which it is also incomprehensible to IT practitioners. The converse charge can be made in respect of OT practitioners grasping the principles of IT architecture.

The diagram below is at the heart of understanding the title and the opening comment concerning multiple worlds colliding. The left and right hand sides are pretty clearly delineated by current technology vendors’ positioning and products, but it’s the center that is the new zone where much of the new business value around Smart Services will be created.


It’s not the possession of IoT data that creates value; what matters is the business valuable action, or outcome, that the data produces. In each of the three zones in the diagram the type of action, and its value, is different. An enterprise can benefit from the specific Business value delivered in each of the three zones, but a real ‘transformation’ requires integration across all three.

Industrial Automation companies, focussed on the left hand side of the diagram, include the suppliers of the heating, cooling, lighting, and mass of utility equipment that is built into a modern multi-story office building. The number of sensors already deployed is currently in the hundreds, but it is rising fast as cheap battery powered wireless sensors are added in increasing numbers.

In one example, a new-build ‘smart’ forty-floor office in London, the plans anticipate in excess of 20,000 building sensors producing more than 3 petabytes of data annually. The IT community will see this as the ultimate requirement for Big Data analytical tools, but can they really handle 20,000 individual inputs of a few Kbits each when more than 75% of the traffic will merely confirm that the status quo is maintained?

The Industrial Automation vendors working with the OT community see a very different picture, with those data flows being used to trigger ‘reflex actions’ such as increasing a selected heating output in reaction to a developing ‘cold spot’. Even more important would be the reaction to a fire alarm: releasing fire doors, setting off sprinklers and shutting off power.
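The ‘reflex action’ idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the setpoint, the deadband, the sensor names and the action string are all hypothetical and do not come from any vendor’s product. A reading inside the comfort band produces no action at all, which is the ‘status quo’ traffic described above.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical comfort band; real setpoints would come from the building system.
SETPOINT_C = 21.0
DEADBAND_C = 1.5


@dataclass
class Reading:
    sensor_id: str
    temperature_c: float


def reflex_action(reading: Reading) -> Optional[str]:
    """Return a local action for a developing cold spot, or None for status quo."""
    if reading.temperature_c < SETPOINT_C - DEADBAND_C:
        return f"increase_heating:{reading.sensor_id}"
    return None  # reading merely confirms the status quo


# Illustrative readings only (made-up values).
readings = [
    Reading("floor12-east", 21.2),
    Reading("floor12-west", 18.9),
    Reading("floor13-east", 20.4),
]

# Only out-of-band readings lead to an action; the rest are dropped locally.
actions = [a for r in readings if (a := reflex_action(r)) is not None]
```

The point of the sketch is that the decision is made next to the sensor, in the M2M layer, rather than after a round trip through an analytics platform.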

This mass of low value building sensors will be interconnected by low capacity, and low power, Grid Networks, such as ZigBee, using a master node to distribute the processing tasks. In short there is little, maybe nothing, that relates to the Network, Processing, or even data model of IT systems. This is a pure Machine-to-Machine, or M2M, environment. However, as machines can’t repair themselves (though ‘smart’ automation can limit the impact by bypassing failed equipment), there is a need to connect events with ‘Services’ that can call out Engineers and initiate repairs.

Cloud based Services that support, empower and improve the efficiency of people are the prime functionality and business value delivered by the middle of the three zones. These should not be confused with the current Building Management and Service Engineering applications already provided by IT.

Building Management is a very ‘hot’ market for IoT currently; immediately recognizable is the interest around energy consumption, with energy becoming increasingly expensive and subject to ‘green’ regulation. Yet energy is typically only 2 to 3% of the overall building management costs, against machinery maintenance running at around 8 to 10%. The biggest reward lies in shifting to preventative maintenance, using IoT sensing to track each piece of equipment’s operating efficiency to decide when, and what type of, optimized action is required, rather than recording planned, time-based processes in the traditional manner.
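As a rough illustration of deciding ‘when, and what type of’ action from tracked efficiency, the Python sketch below compares recent efficiency readings with a baseline. The thresholds (5% and 15%), the action names and the function itself are assumptions made for the example, not figures from any maintenance standard or product.

```python
from typing import Sequence

# Hypothetical thresholds for relative efficiency loss against the baseline.
INSPECT_DROP = 0.05
REPAIR_DROP = 0.15


def maintenance_action(baseline: float, recent: Sequence[float]) -> str:
    """Choose an action from the average relative drop in operating efficiency."""
    average = sum(recent) / len(recent)
    drop = (baseline - average) / baseline
    if drop >= REPAIR_DROP:
        return "schedule_repair"
    if drop >= INSPECT_DROP:
        return "inspect"
    return "no_action"


# Illustrative values only: a unit rated at 0.90 efficiency.
healthy = maintenance_action(0.90, [0.88, 0.89, 0.90])
drifting = maintenance_action(0.90, [0.84, 0.85, 0.83])
failing = maintenance_action(0.90, [0.75, 0.74, 0.76])
```

A time-based schedule would treat all three units the same; the condition-based rule sends an engineer only to the units whose readings justify it.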

Salesforce is recognized for its longtime focus on cloud based ‘Services’ to make customer-facing people more responsive to real time activities, and that includes Service Engineers. Over the last year Salesforce have supported IoT sensor event data inputs to their Services, and in the last month SAP has introduced a Preventative Engineering capability linked to their SAP IoT Initiative. Exactly how the integration links these capabilities, and those of the final zone of traditional IT, with IoT sensors and grid networks has been examined in detail in previous blogs, most recently in the importance of ‘Final Mile’ architecture in pilots.

Both the Industrial Automation and Service Management zones share the common trait that the time to read and respond with a successful outcome is as near ‘real-time’ as possible. Indeed ‘timely optimization’ is the critical element in creating the business value. This is in contrast to traditional IT, which is largely based on recording what has happened and analyzing historic data to find value by identifying trends that have already occurred.

The integration with the third zone shown on the right hand side of the diagram, that of traditional IT, is made by methods familiar to IT practitioners around data. As the Data formats and protocols are often different, API engines are frequently required. An important point is that this is consolidated data, collected and collated from the action outcomes made in the first and second zones. Analyzing, over a period, the events that led to ‘outcomes’, and ignoring null reports, produces management reporting. Similarly, Service contract actions can be captured and recorded within existing applications.
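The consolidation step described above can be sketched as follows. The event records, zone names and outcome labels are hypothetical; the only idea taken from the text is that null reports are dropped and the remaining outcomes are collated for reporting.

```python
from collections import Counter

# Hypothetical event log: outcome is None when a report only confirmed the status quo.
events = [
    {"zone": "floor12", "outcome": None},
    {"zone": "floor12", "outcome": "heating_increased"},
    {"zone": "floor13", "outcome": None},
    {"zone": "floor12", "outcome": "heating_increased"},
    {"zone": "floor13", "outcome": "engineer_dispatched"},
]


def summarize_outcomes(records):
    """Count outcomes per (zone, outcome) pair, ignoring null reports."""
    return Counter(
        (r["zone"], r["outcome"]) for r in records if r["outcome"] is not None
    )


summary = summarize_outcomes(events)
```

Only the compact summary, not the raw sensor traffic, would then need to cross into the traditional IT zone.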

Though OT is equally failing to appreciate the implications of applying analytics at scale to operational Industrial Automation systems, the issues facing IT, which is expected to be at the center of an Enterprise’s use of technology, are more concerning.

Few IT practitioners appreciate the massive numbers of small sensing devices currently being deployed, or the use of new types of networks and protocols rather than the IP based networks that IT understands. Add to this the overwhelming volume of extremely small data packets that contain no contextual information, such as location or type of sensor, and so depart from the expected ‘Big Data’ models.
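To make the ‘no contextual information’ point concrete, the Python sketch below shows one possible way a gateway could attach context to a bare packet by looking up the sensor in a registry. The registry, the sensor identifiers and the packet layout are all assumptions for illustration; the text does not prescribe this approach.

```python
from typing import Optional

# Hypothetical registry mapping sensor ids to the context the packet itself lacks.
SENSOR_REGISTRY = {
    "a1f3": {"type": "temperature", "location": "floor 12, east wing"},
    "b7c9": {"type": "smoke", "location": "floor 3, plant room"},
}


def enrich(packet: dict) -> Optional[dict]:
    """Attach sensor type and location to a bare packet; None if the id is unknown."""
    context = SENSOR_REGISTRY.get(packet["id"])
    if context is None:
        return None
    return {**packet, **context}


# A bare packet carries only an id and a value (made-up example).
enriched = enrich({"id": "a1f3", "value": 18.9})
unknown = enrich({"id": "zzzz", "value": 1.0})
```

Without some step of this kind, a value such as 18.9 means nothing to an analytical tool that expects self-describing records.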

Unimagined numbers of IoT devices flooding minuscule data packets with no contextual data simply do not suit current big data analytical tools, nor will the traffic be welcomed on critical enterprise networks. Neither can processing be carried out in time frames that address the key IoT sensing business benefit of optimized real time events and outcomes. None of these challenges is any more insurmountable than those of past waves of technology innovation, but they do require more recognition and understanding of what adding and integrating IoT and IoT Smart Services within the enterprise involves.

IT should be leading the path towards a new enterprise architecture that will again unite new business and technology capabilities, just as in the past, when the advent of Client-Server, Web and Mobility technologies all imposed similar changes.

It’s time to get beyond the current myopic views of the different elements, or zones, of IoT and start to form enterprise wide working parties to pilot, around proven Business beneficial requirements, in an integrated manner!

