SAP Bets On Cloud For Analytics, BPC Optimized for S/4HANA

Former Vice President and Principal Analyst

Constellation Research

Doug Henschen is a former Vice President and Principal Analyst at Constellation Research, where he focused on data-driven decision making. Henschen’s Data-to-Decisions research examines how organizations employ data analysis to reimagine their business models and gain a deeper understanding of their customers. Henschen's research acknowledges that innovative applications of data analysis require a multi-disciplinary approach, starting with information and orchestration technologies, continuing through business intelligence, data visualization, and analytics, and moving into NoSQL and big-data analysis, third-party data enrichment, and decision-management technologies.

Insight-driven business models are of interest to the entire C-suite, but most particularly chief executive officers, chief digital officers,…...


The SAP Financials 2016 event highlighted a comprehensive cloud platform and real-time analysis. Here’s why SAP Cloud for Analytics and BPC Optimized for S/4HANA Finance are getting attention.

Two next-generation products stood out at the March 15-16 SAP Financials 2016 and GRC 2016 event in Las Vegas: SAP Cloud for Analytics and SAP BPC (Business Planning and Consolidation) Optimized for S/4HANA Finance. There are, in fact, several paths forward in SAP’s vast financial planning and analysis (FP&A) portfolio, but these two are seeing the most aggressive development and, not surprisingly, the most interest. Here’s why.

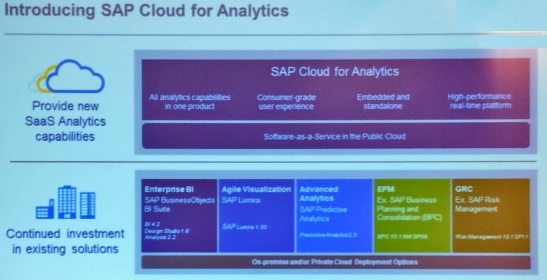

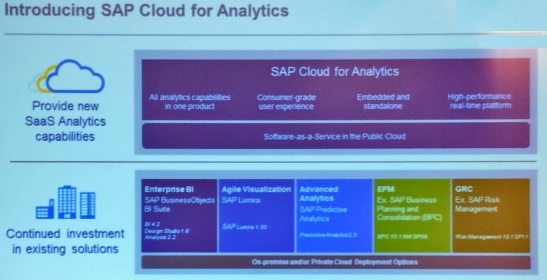

Introduced last fall and based on what was originally called SAP Cloud For Planning, SAP Cloud For Analytics is a comprehensive product spanning planning, business intelligence (BI), and, coming later this year, predictive analytics. Long-range plans also call for Governance, Risk and Compliance (GRC) functionality, but that part won’t show up in 2016.

SAP has offered many analytics products over the years, including Lumira and BusinessObjects Web Intelligence (Webby), and you could even add BPC to the list. But where each of those products was built for a specific type of analysis and deployed mostly on-premises, the goal with Cloud for Analytics is to cover all the bases in a single, consistent product that runs on the SAP HANA Cloud Platform.

A key selling point is broad data connectivity combined with real-time, in-memory analysis. Connection points include on-premises instances of SAP HANA and SAP BW, plus a bidirectional connection to BPC. You can also connect to the (private) HANA Enterprise Cloud, Salesforce and Google Enterprise Apps. Soon to be added are connections to SAP ECC, BusinessObjects Universes and SAP SaaS apps (Ariba, Concur, Hybris, SuccessFactors, etc.), as well as live connections with write-back capabilities to on-premises instances of SAP BW.

Though it addresses planning, BI and, soon, predictive analytics, Cloud for Analytics is not a monolithic, all-or-nothing proposition. You can subscribe to just planning, just BI and so on. There are also different subscription levels for different types of users, ranging from basic consumers of reports or plans to advanced analysts and developers.

Another emerging constituency for Cloud for Analytics is business unit leaders, CXOs and even board members, all of whom are supported through an optional Digital Boardroom application. The idea is to help executives see where the business stands and where it’s headed. Because the business data, data visualization and planning capabilities all live in the cloud, it’s an accessible option.

At SAP Financials 2016 (and also at the recent SXSW event in Austin, TX), SAP offered a Digital Boardroom virtual demo that showed how executives can traverse a Value-Driver view and plug in new planning assumptions for what-if scenario analysis. Plug in new cost figures for key raw materials, for example, and you can see the impact on costs and margins. Or plug in an increase in sales staffing in a fast-growing market to gauge the impact on sales and profitability.

The Digital Boardroom app will become even more powerful as Cloud for Analytics gains predictive capabilities later this year, letting executives run simulations and get predictive recommendations on best actions. You can do this executive-level planning and analysis on a single screen with a mouse and keyboard, but the Digital Boardroom option also supports a slick, three-screen conference room setup using giant touch-screen displays (hardware not included).

MyPOV: It’s early days for Cloud for Analytics, and the roadmap reveals that there’s plenty of functionality that has yet to be added. On the planning front, it’s up against fast-growing, cloud-based performance management rivals such as Anaplan, Host Analytics and Adaptive Insights. These products are also more mature, offering prebuilt apps in areas such as workforce planning as well as financial consolidation, something not yet on the roadmap for Cloud for Analytics.

On the BI front there are myriad competitors, from cloud-native BI systems like Birst, Domo and GoodData, to newish cloud offerings from Qlik and Tableau, to emerging as-a-service offerings from Amazon Web Services, IBM and Oracle. The predictive analytics capabilities SAP will bring to Cloud for Analytics from its KXEN acquisition could be a real differentiator, but it’s unclear to me how deep this functionality will go and how soon it will be generally available.

For now I’d say that the breadth of SAP Cloud for Analytics is very promising, but the depth in each area of analysis has yet to be realized. It will clearly be most attractive to SAP customers who want real-time access to, and analysis of, on-premises data in the cloud.

BPC Optimized for S/4HANA Finance

BPC has been a cornerstone FP&A option for SAP customers for nearly a decade. A key differentiator versus the rival Oracle Hyperion portfolio is that BPC combines planning and consolidation in a single product. BPC has evolved to include several permutations, including BPC NetWeaver Standard, BPC for Microsoft and BPC Embedded (as in, an embedded component of SAP HANA).

BPC Optimized for S/4HANA Finance is seeing growing interest because it gives the many companies that have implemented BPC a path to HANA in-memory performance without having to reimplement on HANA. BPC gets direct, real-time access to ERP data from an S/4HANA Finance instance that sits alongside a conventional deployment of the SAP Business Suite.

For now, the real-time advantage of BPC Optimized for S/4HANA Finance is limited to the planning side of the product, but that alone is attractive to many because you get on-the-fly slicing and dicing against up-to-the-minute data. Future plans call for sophisticated, real-time analyses of sales and profitability, investments, liquidity, product costs, tariffs and more.

The product’s appeal will grow in the third quarter, when it gains support for real-time consolidation. This will enable business units and corporate to predict and close end-of-period results that much more quickly, a crucial advantage.

MyPOV: For net-new customers who are implementing from scratch, BPC Embedded is the likely path forward because there’s no legacy to worry about and you can develop from the start to gain the real-time analysis advantages of SAP HANA. But for the many existing customers who have content they want to leverage, BPC Optimized for S/4HANA Finance is a more practical path to real-time planning and consolidation. It’s also a path to the S/4HANA Finance Universal Journal, which promises single-table access to all cost and account information, including customer and vendor data. Combine universal access to real-time data with (coming) what-if simulation and recommendations, and we’re talking state-of-the-art capabilities that can change the way you see and do business.

