Salesforce AI Agents, Talent Acquisition, Post-Breach Resilience | ConstellationTV Episode 87

In ConstellationTV episode 87, NEW co-hosts Martin Schneider and Larry Dignan unpack recent enterprise tech news, including Salesforce's introduction of two new AI agents: a sales development agent and a sales coaching agent, aimed at enhancing lead qualification and sales coaching by leveraging multi-channel communication and AI-driven insights.

Then Larry interviews SuperNova finalist Cari Bohley from Peraton about the importance of AI in talent management, highlighting Peraton's use of AI to improve retention and career development.

Finally, Constellation analyst Chirag Mehta unpacks the impact of AI on cybersecurity, emphasizing the need for post-breach resilience and the challenges of securing generative AI models.

00:00 - Meet the Hosts

00:21 - Enterprise Tech News updates (Salesforce AI, earnings, market trends)

08:08 - Challenges and Surprises in AI Talent Acquisition | Interview with Cari Bohley

24:10 - Cybersecurity Trends for the Fourth Quarter | Interview with Chirag Mehta

34:14 - Bloopers!

ConstellationTV is a bi-weekly Web series hosted by Constellation analysts. Tune in live at 9:00 a.m. PT / 12:00 p.m. ET every other Wednesday!

__

ConstellationTV Episode 87 transcript (News segment): Disclaimer - This transcript is not edited and may contain errors

Well, one of the most interesting things that popped over the last week was Salesforce really advancing a lot of their AI vision. Interesting that they're announcing this ahead of Dreamforce, but they've really kind of taken the next step. You know, they started out with kind of Einstein Analytics, and they went to their Gen AI stuff with the Einstein 1 copilots. Now they're bringing out what they're calling agents, AI agents, right? They've got two that they're going to be announcing and bringing out at Dreamforce. One is a sales development agent, so an SDR, a virtual SDR, think of that. And then the other one's kind of a sales coaching agent. Really interesting for these two choices, because you're looking at, on the one hand, with the sales dev, a lot of kind of repetitive, you know, question-based concepts of, like, how do you qualify leads? Is there a budget? Are you a decision maker? All these types of things, but also being able to do that in a really interesting way, across channels, right? So where most SDRs, the human ones, are making phone calls and maybe just sending out a bunch of, you know, blast-and-hope emails, these can be done through WhatsApp and through other channels, and, you know, maybe even Slack over time, right? Because it's a Salesforce property, right? And you're looking at this ability to actually go out there, asynchronously and in interesting ways, qualify leads and then route them using Salesforce workflow to the appropriate sales rep, right? So instead of kind of batching it, doing it with a lot of, like, "hey, during business hours, we can call a bunch of people," this can be 24/7, asynchronous, multi-channel. Really interesting there, right?

And then on the coaching side, what you're seeing is a really interesting play where, you know, sales coaches, sales methodologies, all these types of things, and even guided selling, AI started to get into that, right? But when you actually have this kind of one-on-one coach, really following everything you do, because a human coach really can't understand every call you make, every email you send. You know, what is your cadence? You know, what is the white space between when you engage, when you don't engage, when you should be engaging, right? It really can understand that better than any human, and at scale. So I think they really picked two really smart and really strong, you know, first agent choices there to go out with. And the great thing is, again, with Salesforce as a platform, you're going to be able to kind of build these on your own, leveraging their tool set, and make your own agents for different purposes, jobs to be done, all that kind of stuff. So it's just stage one. It's interesting that they're announcing it well ahead of Dreamforce, but I think they're taking a really interesting first-mover advantage among a lot of the CRM players out there. So it's pretty interesting.

What's interesting about that to me is this is sort of the second time in, like, a week and a half where I've heard talk about Gen AI as, like, a behavior coach that walks you through difficult conversations and coaches you up for sales and things like that. I mean, I assume there'll be ROI metrics for those kind of sales coaching agents. But yeah, it's interesting stuff. The stuff I'm looking at when it comes to Salesforce is, are they going to grow? And their earnings are about to land on a day that brings Salesforce, Nvidia, CrowdStrike and a few others. Why they don't pace these out better is beyond me. But, you know, there's been some grumbling about Salesforce trying to, you know, sell multiple clouds and all that kind of stuff. So I'm really going to just look closely for the growth. You know, Salesforce is sort of in that land of single-digit growth right now, and it's really unclear to me what's going to juice those sales. I mean, I assume it would be Data Cloud, but, you know, it's a little tricky to see how that company's going to accelerate growth without an acquisition, which also gets the activist investors all...

Well, sure, yeah. I think one of the interesting things they pointed out on a briefing the other day was they're seeing, you know, the customers that they have that are utilizing AI well are seeing, like, 30% revenue growth, like, they're hitting that high double-digit growth. So again, it comes down to value and value receipt. So if they can build an ROI model out there where, like, their AI vision, combined with their ability to really either keep flat your human costs, but also be more productive, there's a lot of ROI in that that they can be providing downstream, and proving that out, well, finally, in a way that, like, commodified sales automation rarely could do, in a really, like, non-dotted-line way, right? Like, really a straight line. So it could be an interesting tipping point for them to really see some growth again, because they're actually going to be able to show some value. Big if, but it could be huge for them.

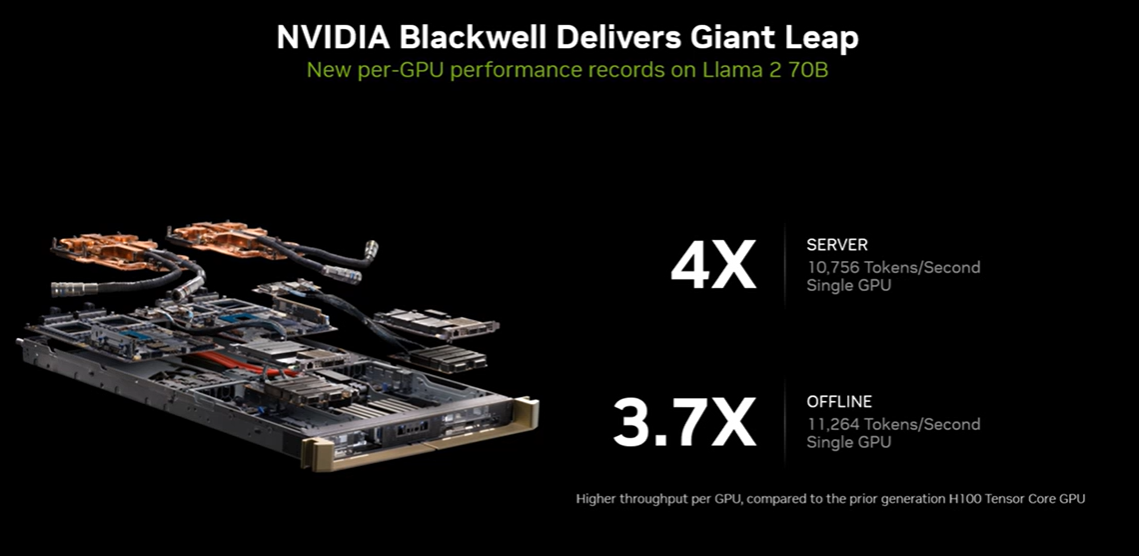

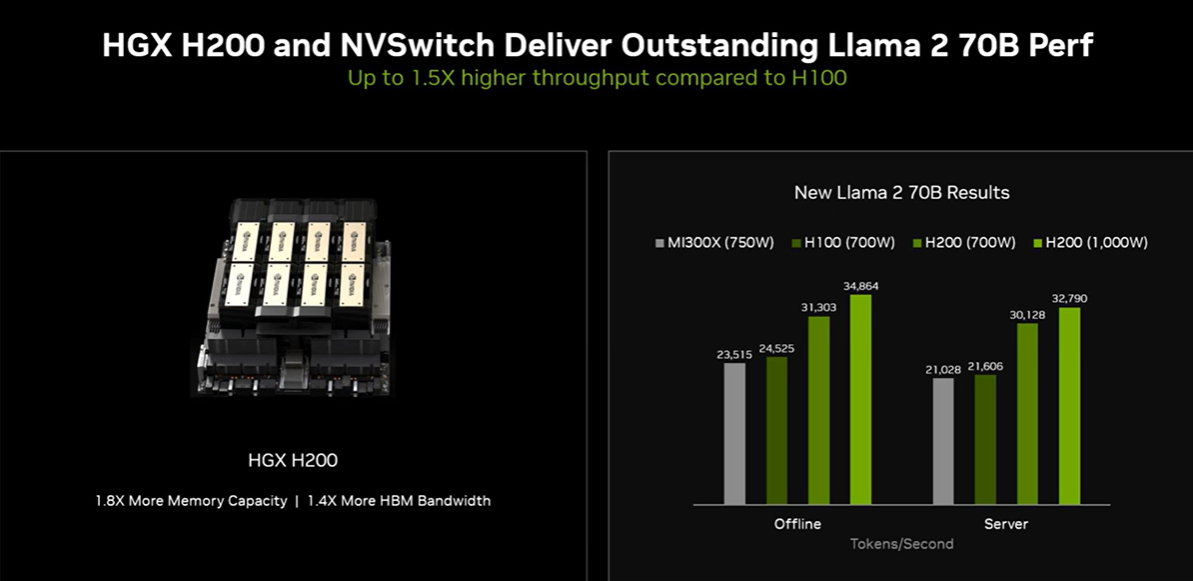

The other earnings I'm watching is CrowdStrike. When they report, it's basically, what are these lawsuits going to cost? It sounds like a lot of it was not insured, per se, but the damages that you could hit CrowdStrike with are kind of limited, right? There's the one, you know, the big Delta, CrowdStrike, Microsoft spat. I've been reading the docs going back and forth on that, but, you know, there's gonna be a bunch of those lawsuits popping up. So that's the thing to look for there. The other one, naturally, the whole market's waiting for it, and that's Nvidia's earnings, right? It's basically all about Nvidia. Nvidia is probably the only company to date that's raked in a ton of dough on Gen AI, sure. And, you know, they do have some delays, and they're in the latest chips and all that. But I'm not sure it's gonna matter too much. And, you know, AMD made this acquisition the other day, building out, you know, their integrated system designs and things like that. So, you know, the competition's coming up to Nvidia, but right now, I think it's kind of free sailing for them.

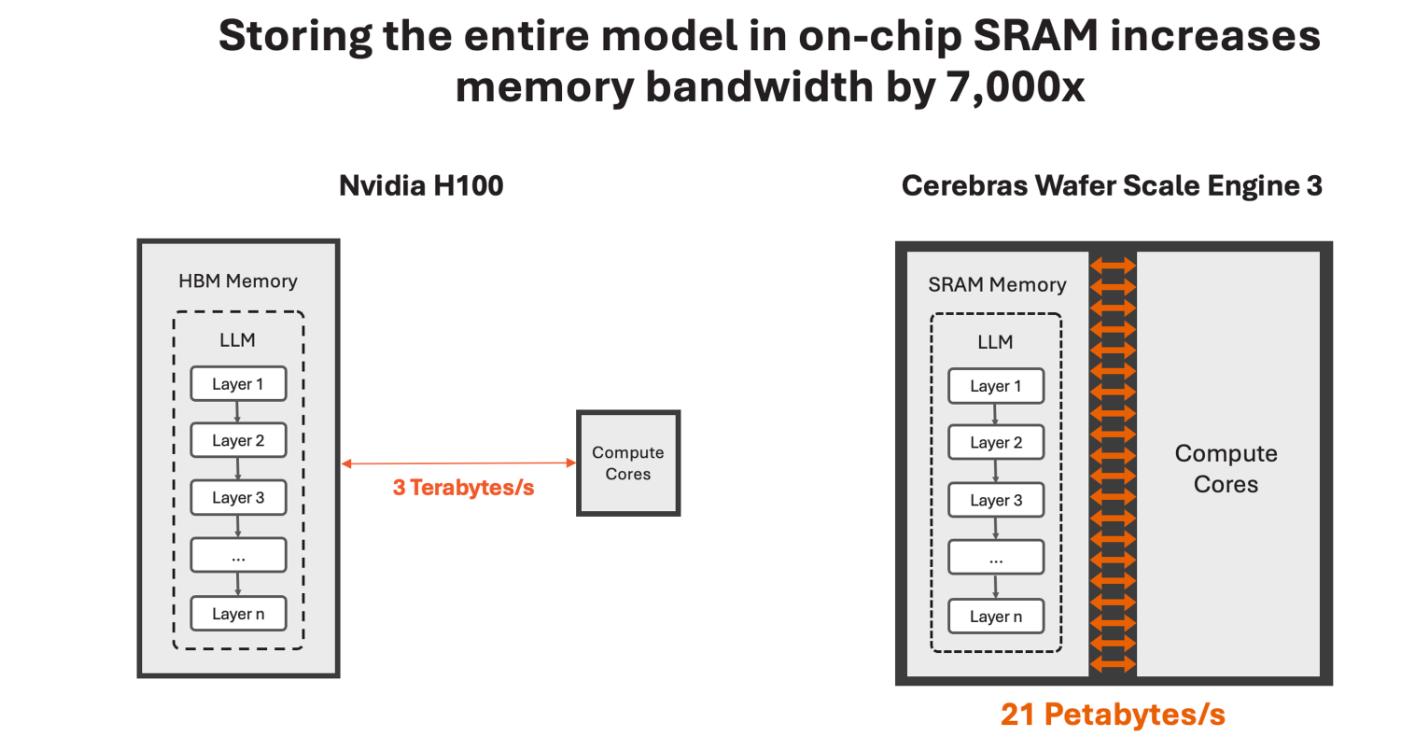

The problem is just that they're priced for perfection.

Well, of course, and that's an issue. But what you're seeing is interesting. Like, when you actually look at, like you said, AMD and ZT, you're also seeing Rebellions and Sapeon in Korea merging. Again, like, everyone is going to take a run at Nvidia, and, like, how do you, you know, how do you become the value provider, right? Like, where's the commoditization of AI powering? Because, you know, let's, like, take a look back at Amazon, how many years ago, right, when it was, like, commodifying cloud, right, when people were doing a lot of that themselves, right? You know, like, think about what Salesforce could have been if it had an Amazon 20 years ago, right? So who's going to go and take that incredible disruption role there, right? Because, like you said, right now it's so expensive, and look, they've been reaping the benefits, but someone's going to disrupt it, right?

Exactly, all right.

Well, thanks for the news, Martin. And then coming up next, we have a talk with SuperNova Award finalist Cari Bohley. She's vice president of talent management at a company called Peraton, and it's really a cool company that does all the scientific engineering. DoD is a customer, and it was kind of built by merger, and now they're basically combining the talent pool and trying to, you know, find the right skill sets for what they're doing. So it looks pretty cool there. And then to end the show, we're going to talk to Chirag Mehta about some of the cybersecurity stuff you need to think about for the fourth quarter.

View the full ConstellationTV Episode 87 transcript here

On ConstellationTV <iframe width="560" height="315" src="https://www.youtube.com/embed/G_wc0EvvCdE?si=o-BNEVOaMCkkNX9v" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture; web-share" referrerpolicy="strict-origin-when-cross-origin" allowfullscreen></iframe>

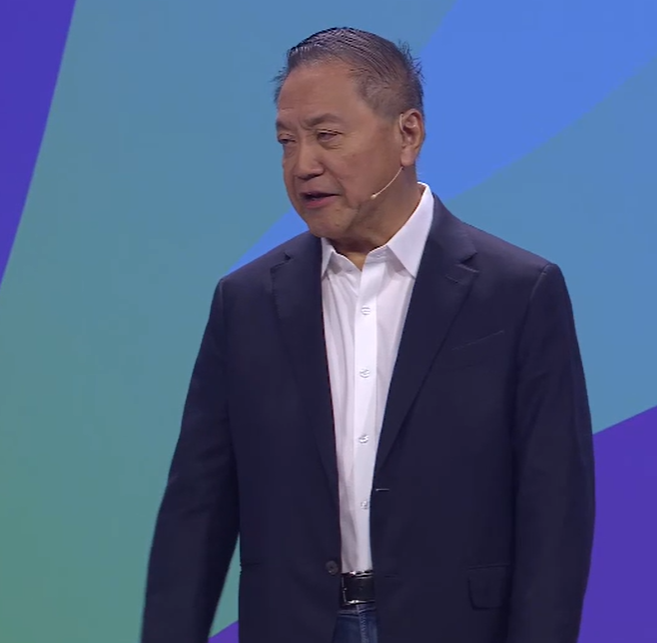

