Creatio’s “Energy” Release Fuels its Continued Disruption of the CRM Space

Creatio has been taking a “no code” approach to building a midmarket- and enterprise-focused CRM platform and set of applications for about a decade now. The company offers a competitive suite of CRM tools spanning marketing automation, sales automation, and customer service automation, all resting on a solid workflow engine that reflects its roots (the company was previously named “BPMonline”).

With that solid foundation in workflow, recent innovations in AI give Creatio a unique opportunity. It can let customers supercharge their existing workflow-oriented CRM with generative and agentic AI tools that drive user productivity, reduce costs, and deliver an enhanced customer experience. That is just what its latest release, dubbed “Energy,” aims to do.

Embedded AI to Drive CRM Adoption and ROI

The highlights of the Energy release include a new “AI Command Center,” which aims to combine prescriptive, generative, and agentic AI in a single destination. It allows admins to design, deploy, and refine AI skills in one place, both to optimize the performance of AI implementations and to better audit and moderate AI usage across the organization.

The AI builder tools in Energy are impressive and bring Creatio’s AI vision closer to parity with most CRM providers. However, because many of its customers are small and midsize businesses that may lack the resources to build their own AI skills, Creatio is also releasing a slew of pre-built AI tools. The initial 20 pre-built tools cover marketing, sales, and support use cases. For example, users can segment target lists more quickly, use generative AI to create personalized digital engagements, and take advantage of integrated predictive analytics to refine offers and promotions and improve conversion rates.

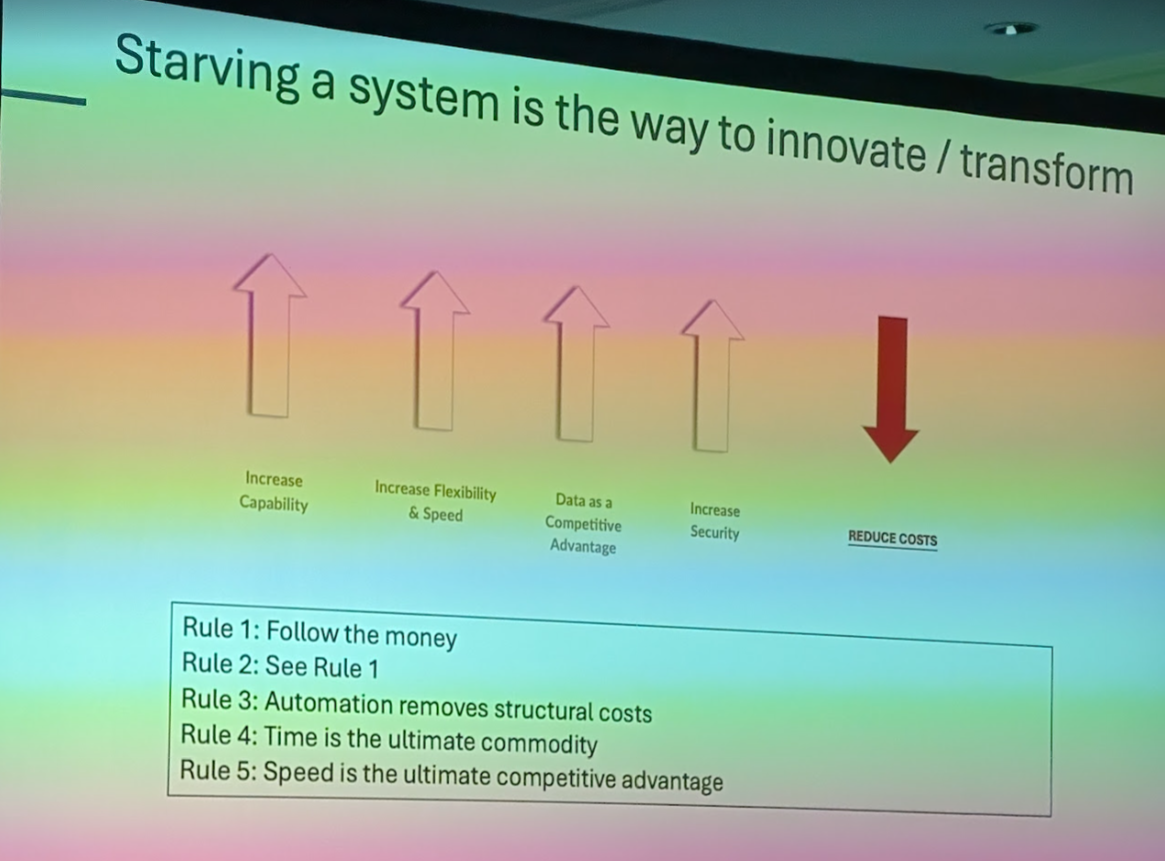

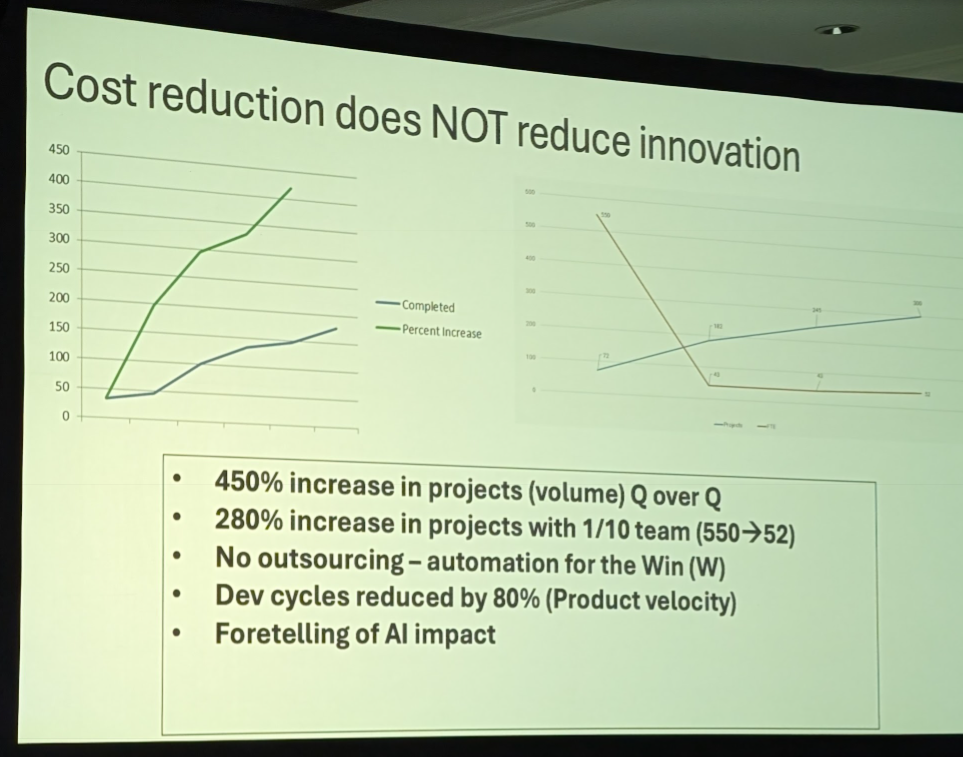

The AI advancements build on the strong copilot and other generative AI tools already inside Creatio’s offerings. These tools have provided inline, contextual insights on leads and accounts, and even predictive and prescriptive insights within the reporting and analytics tools. With the agentic AI advancements, Creatio users can now use AI to automate common tasks in the system. This drives productivity, enables growing but resource-constrained teams to “do more with less,” and helps them manage growth efficiently without constantly adding headcount. And by providing a more streamlined and effective employee experience, organizations can potentially retain more employees, since staff can be more productive and are less likely to experience burnout.

Out-of-the-box AI tools and other enhancements in the release cover the following areas:

- Sales: Meeting Scheduling, Conversion Score Insights, Lead and Opportunity Summaries

- Marketing: Bounce Responses Analysis, Enhanced Email Subject Lines, Text Rewriting, Text Translation

- Service and Support: Case Resolution Recommendations, Case Performance Analysis, Case Summaries, Knowledge Base Articles

- General CRM: a brand new drag-and-drop Email Designer, revamped Product Catalog, redesigned pages for Order and Contract Management, improved navigation panels, Ada AI chatbot integration, embedded Google Analytics integration, and many others.

Flexible Pricing for Growing Businesses

At a recent launch event for the Energy release, Creatio showcased customers who are using the platform and described how they plan to use the new AI capabilities to drive productivity and foster growth without significant new hires. What was notable, though, was the size of Creatio’s reference customers. These were manufacturers and other firms with more than a thousand CRM users across marketing, sales, and support. Creatio has also landed marquee enterprise reference customers including AMD, Howdens, Colgate-Palmolive, and MetLife, to name a few. In short, Creatio is proving itself a legitimate platform that can scale to meet enterprise customers’ needs as their businesses grow.

But as businesses grow and their needs change, traditional CRM pricing can actually stymie an organization’s ability to succeed in its CRM initiatives. High per-user pricing in legacy CRM offerings, as well as arbitrary silos between application functions (for example, a sales automation user cannot use any aspect of marketing automation without a significant increase in spend), have forced line-of-business and IT leaders to make hard decisions about who has access to the system and what functionality they can use.

Creatio is trying to solve this with a far more flexible pricing model that better meets the needs of growing businesses. The company offers Growth, Enterprise, and Unlimited platform access plans that let customers build CRM deployments that fit their unique business needs, along with low-cost ($15 per user/month) feature set access for its core sales, marketing, and support tools. Platform plans start at $25 per user/month, making the platform compelling to businesses of all sizes. In addition, Creatio includes its AI capabilities, including the new features in Energy, in the base price. This lets customers both experiment with and deploy AI without much risk in terms of cost, complexity, or security.

For businesses looking to deploy their first packaged CRM system, Creatio should be on any shortlist. The competitive platform fees, the ability to give every employee access to data and functionality as needed without premium SaaS prices, and the fact that the AI capabilities carry no additional fees make Creatio a compelling alternative to more premium-priced offerings.

Add to that the fact that the free AI tools can help smaller businesses expand without adding headcount, and the return on investment could far outweigh the cost of ownership. Beyond pricing, the workflow-oriented platform, the no-code approach, and the well-integrated marketing, sales, and support applications offer growing and midsize enterprises a tool they can truly “own.” They can use it to create a differentiated employee and customer experience without much IT assistance and without engaging VARs or SIs for every change to the system, resulting in a CRM deployment that is both more effective and more efficient.
