AI - Enterprise Scale Intelligence with C3 IoT

Business expectations for AI, and for the associated Digital Business technologies, may well run ahead of what the Technology staff currently have the experience to deploy. Pilots, and even deployments that succeed in business terms, are by necessity focused on targeted and contained deliveries. The technologies, methods and experience will all have been chosen to reflect the requirements of that single project.

The likely outcome, with some additional challenges, will be a dysfunctional Enterprise, unable to integrate all of its resources, assets and intelligence at an Enterprise level in a cohesive manner that optimizes responses to markets and operating conditions. Sadly, as has too often been the case, Business Management will be frustrated by the failure of Technology investments to deliver on expectations.

Why is this happening again? From the start of Enterprise IT in the mid-eighties, through the succession of Internet, Web, Mobility, Social Tools, and Apps, localized project successes have had a nasty tendency to turn into Enterprise level barriers. Yet eager Business managers and technology leaders are once again embarking on project level deployments, often supported by C Level executives. Why is the apparently accepted Enterprise Business Strategy of Digital Business transformation failing to set an appropriate Enterprise Digital Technology strategy?

Once again there is a Business and IT alignment issue, but this time it is not occurring in the usual manner. Digital Business operating models focus on ‘unbundling’, or de-centralization, of activities to support flexibility in what business is done and how. Not surprisingly, Technology managers believe they should align to this business model by optimizing individual project deployments.

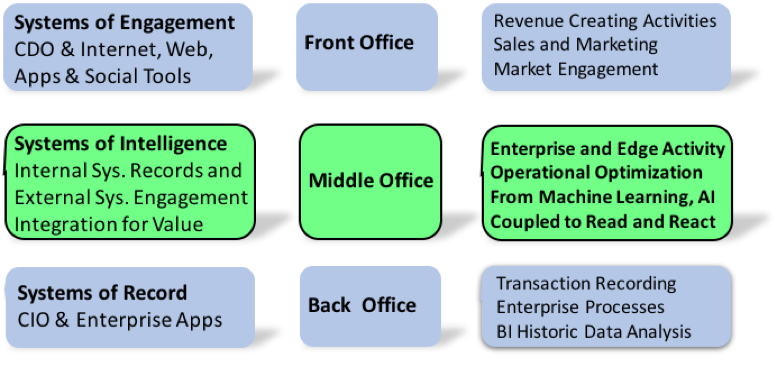

It is perfectly understandable, even a necessity at one level, given the new diversity of requirements for ‘Systems of Engagement’, (as distinct from ‘Systems of Record’ provided by current IT systems). However, to make this work there has to be a parallel, and conscious, Digital Business Technology strategy for the use of data from these new systems to develop Enterprise ‘Systems of Intelligence’, (Business Insights from Machine Learning and AI).

The words ‘use of data’ are the key to understanding what is required. This is not a call for an Enterprise Data strategy, or even Big Data analytics, as commonly understood in support of the current IT systems and their associated Business Intelligence analytics. Nor, for that matter, will the current methods of Enterprise Architecture deliver integrity and integration in this new Digital Business and Technologies environment. To be successful, a Digital Business and Technology strategy requires three individual elements, combined and integrated at the Enterprise level:

Enterprise IT Systems of Record (existing Enterprise Applications) create a centralized record of transactions as ‘stateful’ historic data, held in defined formats that arise from an architecture of closely coupled Enterprise Applications. Enterprise scale challenges usually refer to the size of the resulting databases, together with their management and analytics.

Digital Business Systems of Engagement (Apps from IoT, Mobility, Social tools, etc.) refer to the loosely coupled integration of sets of activities on the Edge of the Business, producing huge flows of ‘stateless’ data on real events as they actually happen. This data is the foundation of the new era of Digital Business and has little in common with Systems of Record. The challenge of Scale refers to the data volumes flowing from connected devices and systems, at levels that overwhelm traditional methods of storage and analytics.

Enterprise Systems of Intelligence use ‘stateless’ data to provide the fundamental properties that enable Digital Business insights and read-and-react capabilities, and that support the introduction of Machine Learning and AI. Digital Business stateless data has the power to corrupt Enterprise IT stateful data, and the role of Systems of Intelligence is to provide a successful integration and processing capability that results in the genuine innovation that Machine Learning and AI bring to Enterprise operations.

The Constellation Research Digital Transformation Survey of October 2017 identified that whilst Enterprise CxO management had a clear commitment to enacting a Digital Transformation, there was a distinct lack of clarity as to what this actually meant. The reality is that many Enterprises are still struggling to come to terms with the successful deployment of IoT driven projects, the modernization of their existing IT systems to run on the Cloud, or the integration of Social CRM.

These ‘Digital Strategies’, driven by the very real sense of the speed with which market and competitive change is occurring, are creating intense activity and investment. Unfortunately, they are also a breeding ground for internal Digital Disruption of operations, which will see Enterprise management struggling to reconnect and rebuild their internal operational coherence in order to face the all too real external Digital Disruption of their markets.

A genuine joint Enterprise Business and Technology strategy is required to ensure Systems of Engagement develop to empower the Enterprise Systems of Intelligence that are the key to Digital Transformation of Enterprise capabilities.

In this, the Digital Technology era, a Cloud Platform would appear to be the immediate answer, but as in so many other aspects of the Digital Transformation, some care needs to be taken over the casual use of terminology. The traditional definition of a ‘Platform’ refers to the technology capability to provide a set of common technology functions that simplify the tasks of integration and processing. As such, it is the common route chosen by Technology management. Though a Platform is inherently a technology choice, many also offer some Business value, but the technology simplification is usually offset by constraints on Business requirements.

N.B. Internal Integration Platforms should not be confused with external Business Ecosystems Platforms, though similar care must be exercised in choices. See Constellation blog Open Business Ecosystems or Closed Technology Platforms.

Systems of Intelligence are constantly discovering and creating new, innovative business insights by continually refining dynamic, unpredictable event inputs with historic data. Much of this new insightful value comes from finding previously unforeseen, or unplanned, relationships in the data flows. That is a very different processing activity from traditional Systems of Record deployments using data Integration and analytical tools.

This new requirement, which combines a simplified abstraction of core technology elements with complex integration, processing, and automation of responses, is more closely aligned to the definition of a Software Engine than to that of an Integration Platform. As Software development has been simplified over recent years, often by the use of a Platform in one form or another, the thoughtful, in-depth functional specification that evokes the requirement for an ‘Engine’ to power a series of complex software elements has become far less common.

The following description of a Software Engine fits the requirement for Systems of Intelligence more closely, whereas the requirements for Systems of Engagement integration and connectivity are well suited to Platform technology.

An ‘Engine’ is defined as an application program that coordinates the overall operation of other programs. Systems of Intelligence are the conglomeration of the dynamic environment of Business defined values and insights, integrated, regardless of technology definitions and formats, into cohesive, optimized Enterprise and/or Edge outputs.

Perhaps it would be better to think of an Enterprise as requiring a Digital Business Engine to operate successfully as an intelligent Digital Enterprise, by providing the necessary continuous business ‘agility’ from Systems of Intelligence.

The first wave of Digital Pioneers, or early adopters, has already reached the stage where these issues have become clear and finding a solution has become necessary. Interestingly, many of those who are the standard business case references for Digital Transformation have started deployments with C3 IoT.

The following is based on a briefing by C3 IoT to Constellation Research

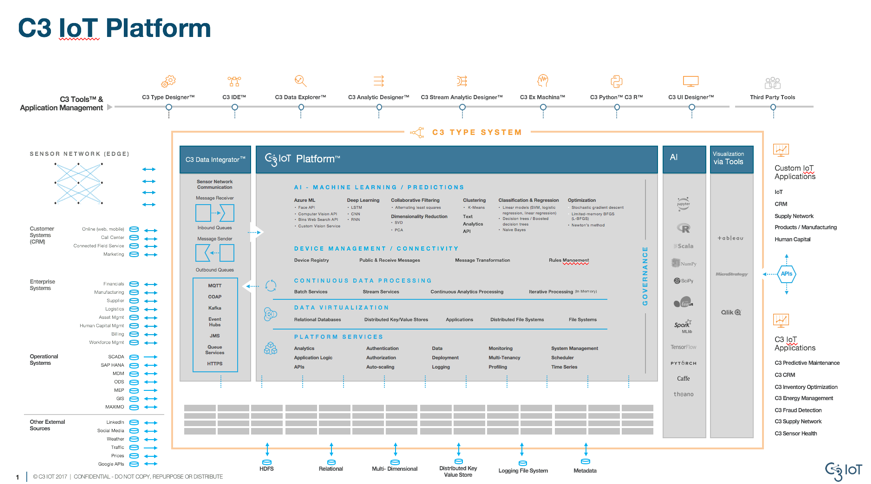

The C3 IoT Platform™ is a platform as a service (PaaS) for the design, development, deployment, and operation of next-generation AI and IoT applications and business processes. The applications apply advanced machine learning to recommend actions based on real-time analysis of petabyte-scale data sets, dozens of enterprise and extraprise data sources, and telemetry data from tens of millions of endpoints.

C3 IoT provides a suite of pre-built, cross-industry applications, developed on its platform, that facilitate IoT business transformation for organizations in energy, manufacturing, aerospace, automotive, chemical, pharmaceutical, telecommunications, retail, insurance, healthcare, financial services, and the public sector. C3 IoT cross-industry applications are highly customizable and extensible. Pre-built applications are available for predictive maintenance, sensor health, enterprise energy management, capital asset planning, fraud detection, CRM, and supply network optimization. Customers can also use the C3 IoT Platform to build and deploy their own custom applications.

Operationalizing IoT is much harder than it looks. Many IoT platform development efforts to date – internal development projects as well as industry-giant development projects – are attempts to develop a solution via acquisition of multiple piece parts or from the many independent software components that are collectively known as the open-source Apache Hadoop stack. Despite the marketing claims surrounding these projects, a close examination suggests that there are few examples, if any, of enterprise production-scale, elastic cloud, big data, artificial intelligence, and machine learning IoT applications that have been successfully deployed in any vertical market except for applications addressed with the C3 IoT Platform.

Companies often expect that installing the Apache Hadoop open-source stack will enable them to establish a “data lake” and build from there. However, the investment and skill level required to deliver business value from this approach quickly escalates when developers face hundreds of disparate software components in various stages of maturity, designed and developed by more than 350 different contributors using different programming languages while providing incompatible software interfaces. A loose collection of independent, open-source projects is not a true platform, but rather a set of independent technologies that need to be somehow integrated and maintained by developers. Instead, companies need a comprehensive AI and IoT application development platform. To avoid this increasingly common pitfall, C3 IoT leverages a model-driven architecture approach.

Model-Driven Architecture

The architecture requirements for the Systems of Intelligence that are the key to the digital transformation of enterprises are uniquely addressed through a Model-driven architecture. Model-driven architectures define software systems using platform independent models, which are translated to one or more platform specific implementations. The C3 IoT Platform is a proven model-driven architecture.

This architecture abstracts application and machine learning code from the underlying platform services and provides a domain-specific language (annotations and expressions) to support highly declarative, low-code application development.

The model-driven approach provides an abstraction from the underlying technical services (for example, queuing services, streaming services, ETL services, data encryption, data persistence, authorization, authentication) and simplifies the programming interface required to develop AI and IoT applications to a Type System interface.

The model is used to represent all layers of an application, including the data interchange with source systems, application objects and their methods, data aggregates on those objects, complex features representing business and application logic, AI and machine learning algorithms that use these features, and the application user interface. Each of these layers is also accessible as a microservice.
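The general idea of a model-driven architecture, one platform-independent model translated into several platform-specific artifacts, can be illustrated with a minimal Python sketch. This is not C3 IoT's implementation or syntax. The names `Field`, `EntityModel`, `to_sql_ddl` and `to_json_schema` are hypothetical and exist only for this illustration.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Field:
    name: str
    kind: str  # one of "string", "integer", "number" (assumed vocabulary)


@dataclass(frozen=True)
class EntityModel:
    """Platform-independent description of one application object."""
    name: str
    fields: Tuple[Field, ...]


# Mapping from the model's abstract kinds to one specific platform (SQL).
SQL_TYPES = {"string": "TEXT", "integer": "INTEGER", "number": "REAL"}


def to_sql_ddl(model: EntityModel) -> str:
    """Translate the model into a relational table definition."""
    columns = ", ".join(f"{f.name} {SQL_TYPES[f.kind]}" for f in model.fields)
    return f"CREATE TABLE {model.name} ({columns});"


def to_json_schema(model: EntityModel) -> dict:
    """Translate the same model into a JSON Schema for a REST payload."""
    return {
        "title": model.name,
        "type": "object",
        "properties": {f.name: {"type": f.kind} for f in model.fields},
        "required": [f.name for f in model.fields],
    }


# A hypothetical sensor reading, defined once and rendered for two targets.
sensor = EntityModel(
    "sensor_reading",
    (Field("sensor_id", "string"), Field("reading", "number")),
)
print(to_sql_ddl(sensor))
print(to_json_schema(sensor))
```

The application author only edits `sensor`. Changing the storage or interface technology means adding another translator, not rewriting the application objects.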

The C3 IoT Platform is an example of a proven model-driven AI platform. It allows small teams of five to ten application developers and data scientists to collaboratively develop, test and deploy large-scale production AI applications in one to three months. The platform is proven in 30 large-scale deployments across industries including energy, manufacturing, aerospace and defense, healthcare, financial services, and more. A representative large-scale C3 IoT Platform deployment processes AI inferences at a rate of a million messages per second against a petabyte-sized unified federated cloud data image aggregated from 15 disparate corporate systems and a 40-million sensor network. Global 1000 organizations have used the platform to reach full-scale production deployment in six months, and to complete enterprise-wide digital transformations with more than 20 AI applications in 24- to 36-month timeframes.

Time to market is critical as next-generation computing platforms emerge. C3 IoT is a proven, scalable, production and development environment with dozens of large-scale IoT applications deployed in the market, managing tens of millions of smart, sensor-enabled devices. The time-to-market advantage of a proven, scalable architecture can be leveraged to gain early network effects and competitive differentiation in the next big wave of computing and industrial automation.

C3 Type System – a detailed review provided by C3 IoT

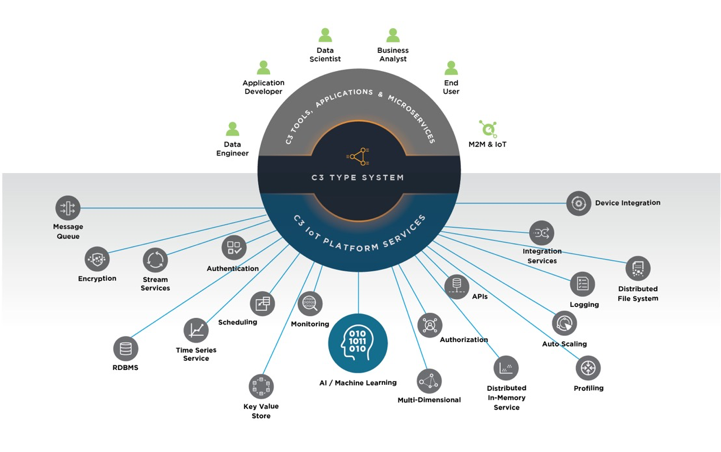

The C3 Type System is a data object-centric abstraction layer that binds the various C3 IoT Platform components, including infrastructure and services. It is both sufficient and necessary for developing and operating complex predictive analytics and IoT applications in the cloud.

The C3 Type System is the medium through which application developers and data scientists access the C3 IoT Platform, C3 Data Lake, C3 Applications, and applications and microservices. Examples of C3 Types include data objects (e.g., customer, product, supplier, contract, or sales opportunity) and their methods, application logic, and machine learning classifiers.

The C3 Type System allows programs, algorithms, and data structures (written in different programming languages, with different computational models, making different assumptions about the underlying infrastructure) to interoperate without knowledge of the underlying physical data models, data federation and storage models, interrelationships, dependencies, or the bindings between the various structural platform or cloud infrastructure services and components (e.g., RDBMS, NoSQL, ETL, Spark, Kafka, SQS, Kinesis, object models, classifiers, and data science tools). The C3 Type System provides RESTful interfaces and programming language bindings to all underlying data and functionality.

Leveraging the C3 Type System, application developers and data scientists can focus on delivering immediate value, without the need to learn, integrate, or understand the complexities of the underlying systems. The C3 Type System enables programmers and data scientists to develop and deploy production big data, predictive analytics, and IoT applications in one-tenth the time at one-tenth the cost of alternative technologies.

To improve manageability, Types support multiple object inheritance, allowing objects to inherit characteristics from one or more other objects. For example, a mixed-use building might have characteristics of both a residential and commercial use building.
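The mixed-use building example can be sketched with ordinary Python classes standing in for Types. This is an analogy only, not C3 Type System syntax. The class names and the attributes `dwelling_units` and `leasable_area_m2` are assumptions made for the illustration.

```python
class Building:
    def __init__(self, address, **kwargs):
        super().__init__(**kwargs)
        self.address = address


class ResidentialBuilding(Building):
    def __init__(self, dwelling_units=0, **kwargs):
        super().__init__(**kwargs)  # pass remaining arguments up the chain
        self.dwelling_units = dwelling_units


class CommercialBuilding(Building):
    def __init__(self, leasable_area_m2=0.0, **kwargs):
        super().__init__(**kwargs)
        self.leasable_area_m2 = leasable_area_m2


class MixedUseBuilding(ResidentialBuilding, CommercialBuilding):
    """Inherits the characteristics of both parent types."""


tower = MixedUseBuilding(
    address="1 Example Street",
    dwelling_units=40,
    leasable_area_m2=1200.0,
)
print(tower.address, tower.dwelling_units, tower.leasable_area_m2)
```

Because every constructor forwards unknown keyword arguments with `super().__init__(**kwargs)`, the shared `Building` initializer runs exactly once even though it is reachable through both parents.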

The Type System, through inherent dataflow capabilities, automatically triggers the appropriate processing of data changes by tracing implicit dependencies between objects, aggregates, analytic features, and machine learning algorithms in a directed acyclic graph.
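Dependency-driven reprocessing over a directed acyclic graph can be sketched with Python's standard `graphlib` module (Python 3.9 or later). How the C3 Type System does this internally is not described in the briefing, so the graph contents, the node names and the `affected_by` helper below are all hypothetical.

```python
from graphlib import TopologicalSorter

# Each node maps to the set of nodes it depends on (hypothetical names).
DEPENDS_ON = {
    "meter_reading": set(),
    "weather": set(),
    "hourly_usage": {"meter_reading"},                 # aggregate
    "usage_vs_weather": {"hourly_usage", "weather"},   # analytic feature
    "failure_score": {"usage_vs_weather"},             # ML algorithm output
}


def affected_by(changed: str) -> list[str]:
    """Return the changed node and everything downstream, in processing order."""
    dirty = {changed}
    grew = True
    while grew:  # repeat until no further downstream nodes are found
        grew = False
        for node, deps in DEPENDS_ON.items():
            if node not in dirty and deps & dirty:
                dirty.add(node)
                grew = True
    # Order only the dirty nodes so that each comes after its dependencies.
    subgraph = {node: DEPENDS_ON[node] & dirty for node in dirty}
    return list(TopologicalSorter(subgraph).static_order())


# A new weather record leaves hourly_usage untouched:
print(affected_by("weather"))
# ['weather', 'usage_vs_weather', 'failure_score']
```

Only the nodes reachable from the change are recomputed, which is the practical benefit of tracing dependencies rather than reprocessing everything on each data update.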

The Type System is accessible through multiple programming language bindings (i.e. Java, JavaScript, Python, Scala, and R) and Types are automatically accessible through RESTful interfaces allowing interoperability with external systems.

Model-Driven Architecture Abstracts Underlying Platform Services Through a Simple Type System Interface

Summary

The C3 IoT Platform (and the associated C3 Type System) is a unique high-productivity, low-code application PaaS for rapidly developing and deploying AI and IoT applications at scale across an enterprise. The C3 IoT Platform has been developed and hardened through numerous large-scale deployments over 9 years at an investment of $300 million. The C3 IoT Platform is proven to support Enterprise Digital Transformations.

Capitalizing on the potential of AI and IoT requires a new kind of technology stack that can handle the volume, velocity, and variety of big data and operationalize machine learning at scale. Existing attempts to build an IoT technology stack from open-source components have failed—due to the complexity of integrating hundreds of software components developed with disparate programming languages and incompatible software interfaces. C3 IoT has successfully developed a comprehensive technology stack from scratch for the design, development, deployment, and operation of next-generation applications and business processes.

Developing AI applications on a model-driven platform requires less code to be written, and less code to be debugged and maintained, significantly reducing delivery risk and total cost of ownership. Using the C3 IoT Platform, a company’s investment in application code is abstracted from the underlying infrastructure and platform services and is future-proofed against rapidly evolving software technologies, avoiding lock-in to those technologies. Further, the C3 Type System provides enterprise leverage: any published code (entity, method, aggregate, feature, ML algorithm, or UI) is instantly available to the rest of the organization.

C3 IoT believes its platform is the industry’s only IoT platform proven in full-scale production, with hundreds of millions of sensors under management and more than 20 enterprise customers reporting measurable ROI, including improved fraud detection, increased uptime as a result of predictive maintenance, improved energy efficiency, and stronger customer engagement.
