Event Report: Dreamforce 2017 - Quip's Digital Canvas Brings Context to the Salesforce Platform and Beyond

From Monday, Nov 6 to Thursday, Nov 9, Salesforce held their annual Dreamforce conference in San Francisco. This massive gathering of customers and developers covers the full range of Salesforce offerings, from Sales to Marketing to Customer Service and much more. Constellation Research had a team of analysts in attendance, and my focus was on the collaboration components of the event. Below I will discuss three key areas: Quip, Community Cloud, and the Salesforce and Google partnership announcement.

For more information, please read the posts by my colleagues Cindy (marketing and sales), Doug (data and analytics), Holger (infrastructure and application development), Andy (IoT) and Dion (leadership and business transformation).

Quick Summary

- Quip: Introduced Live Apps, which enable people to embed a variety of content onto a Quip page, turning it into a digital canvas for collecting information in context on a single page.

- Community Cloud: Introduced Lightning Flow and Einstein Answers.

- Salesforce and Google partnership: Access Salesforce information in Gmail and Google Sheets. Salesforce customers can trial G Suite for free for a year (several restrictions apply).

Below is a short video where I discuss these items

Quip - The Digital Canvas That Glues Salesforce (and many other things) Together

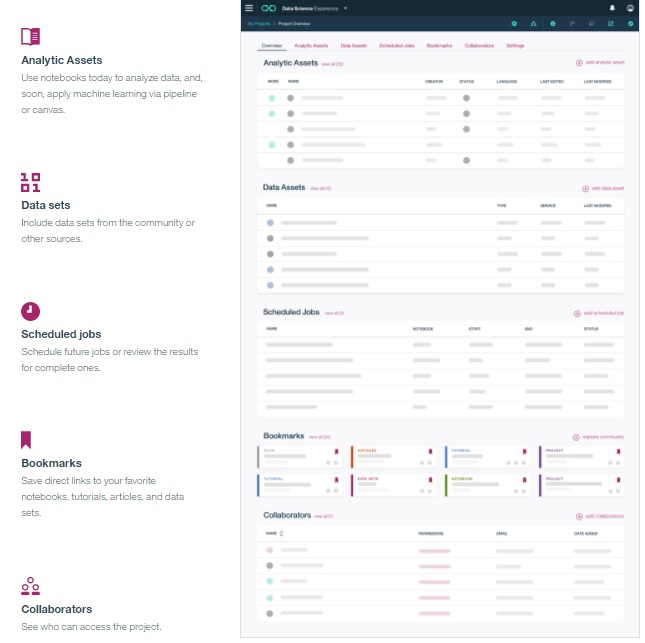

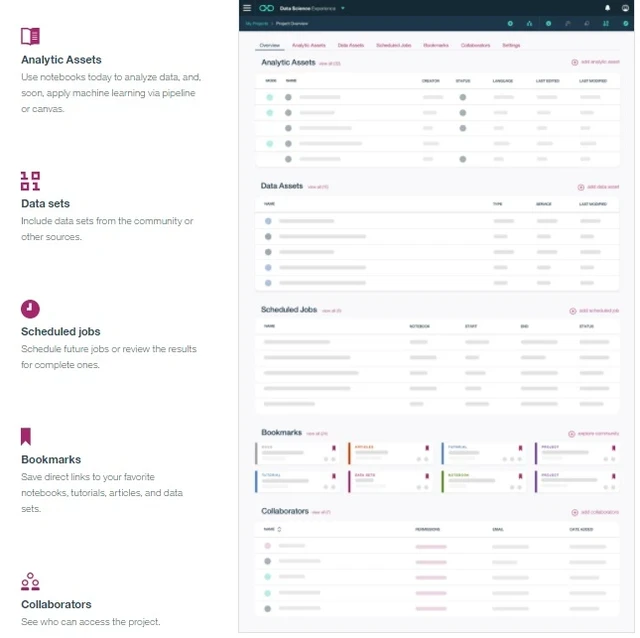

For those unfamiliar with Quip, it started as an online "word processor" that combined documents, spreadsheets and chat into a seamless experience. Quip was acquired by Salesforce in August 2016, and since then the team has been rapidly expanding the variety of content that can be embedded onto a page. This culminated in the announcement at Dreamforce of what they are calling Live Apps. Live Apps fall into three categories:

- Native features (tables, images, kanban boards, countdown timers, progress bars, Salesforce records, etc.)

- Business partner integrations (currently: Atlassian Jira, Workplace by Facebook, New Relic, Lucidchart, Smartsheet, DocuSign)

- Customer applications using the API (an example was shown where 20th Century Fox embedded a custom video player)

MyPOV

As the number of applications and websites people use to get work done grows, so too does the complexity and the lack of context. Enter the concept of a "digital canvas", where multiple sources of content can be embedded together in a single place. When conversations can take place in context around these combined canvases, and updates to the content occur in real time, work becomes more productive, effective and efficient. Constellation Research sees Quip as the way Salesforce can tie together content not only from their own services but from their partners' services as well. For example, a Quip canvas could show information from a Salesforce customer record along with all of that customer's open service tickets, tweets about their products, support documents and files, the marketing campaigns they are part of, product images and a task list. It could also let the account team hold conversations about all of these objects in a single place.

Quip faces a few obstacles to mass adoption. First, the concept of a "digital canvas" is new to most employees, so explaining what it does and how it works can be difficult. Does Quip compete with products like Box Notes and Dropbox Paper, or Microsoft Word and Google Docs, or Evernote and Microsoft OneNote, or Confluence and Socialtext? Second, Salesforce needs to reconcile (and communicate) the long-term roadmap for Quip and Salesforce Chatter. While there are differences between them, customers still need to know when and why to use each product. Third, licensing, purchasing and administration need to become a seamless part of the overall Salesforce experience for Quip to grow within large enterprises.

Example of a Quip document I used while working on this blog post.

Community Cloud - Enabling Conversations, Knowledge Sharing and Commerce

The Salesforce Community Cloud provides digital experiences for use both externally with customers and internally with employees. At Dreamforce 2017 they made several announcements which can be seen in this keynote.

With respect to personal productivity, collaboration and getting work done... the two most significant items are Lightning Flow and Einstein Answers.

- Lightning Flow (MyPOV: conspicuously named similarly to Microsoft Flow) enables people to easily create rules that automate common actions inside communities. For example, if someone selects a certain topic, they could automatically be added to a certain marketing campaign. It will be interesting to see what templates Salesforce (and their partners) provide to help guide people in creating flows.

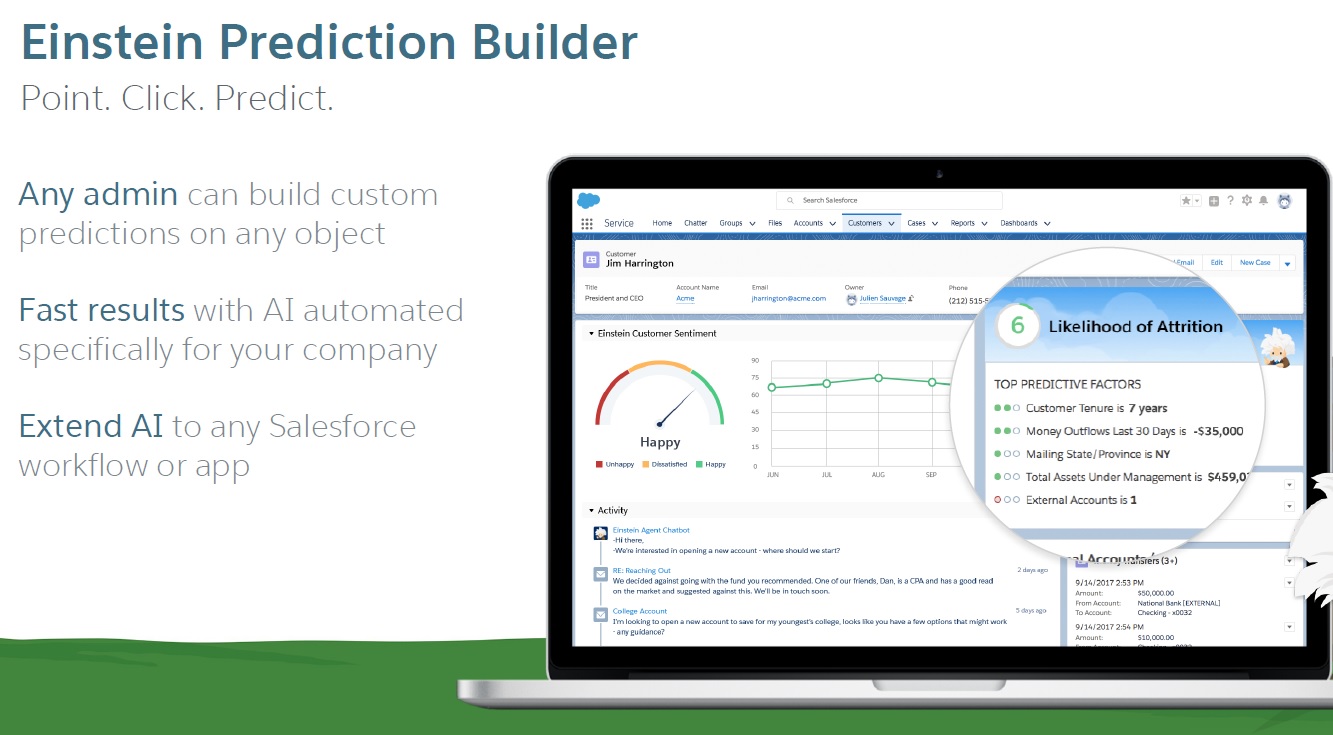

- Einstein Answers leverages Salesforce's artificial intelligence engine to enable community members to ask questions and have the most relevant answers returned to them, as well as recommended subject matter experts, without the need for human intervention.

MyPOV

With partner integrations for everything from ecommerce to video conferencing, custom branding, integration with the rest of the Salesforce portfolio, and new features like Lightning Flow and Einstein Answers, Salesforce Community Cloud provides a very robust platform for both internal and external communities. With one of their main competitors, Jive Software, struggling in 2017, the market is ripe for Community Cloud to gain traction with new customers and to expand its usage within existing Salesforce accounts. I would like to see Salesforce push the intranet use case more strongly than they currently do. I believe getting employees hooked can lead to expansion into other areas, especially collaboration via Quip and Chatter.

Salesforce and Google - The Enemy of My Enemy is My Friend

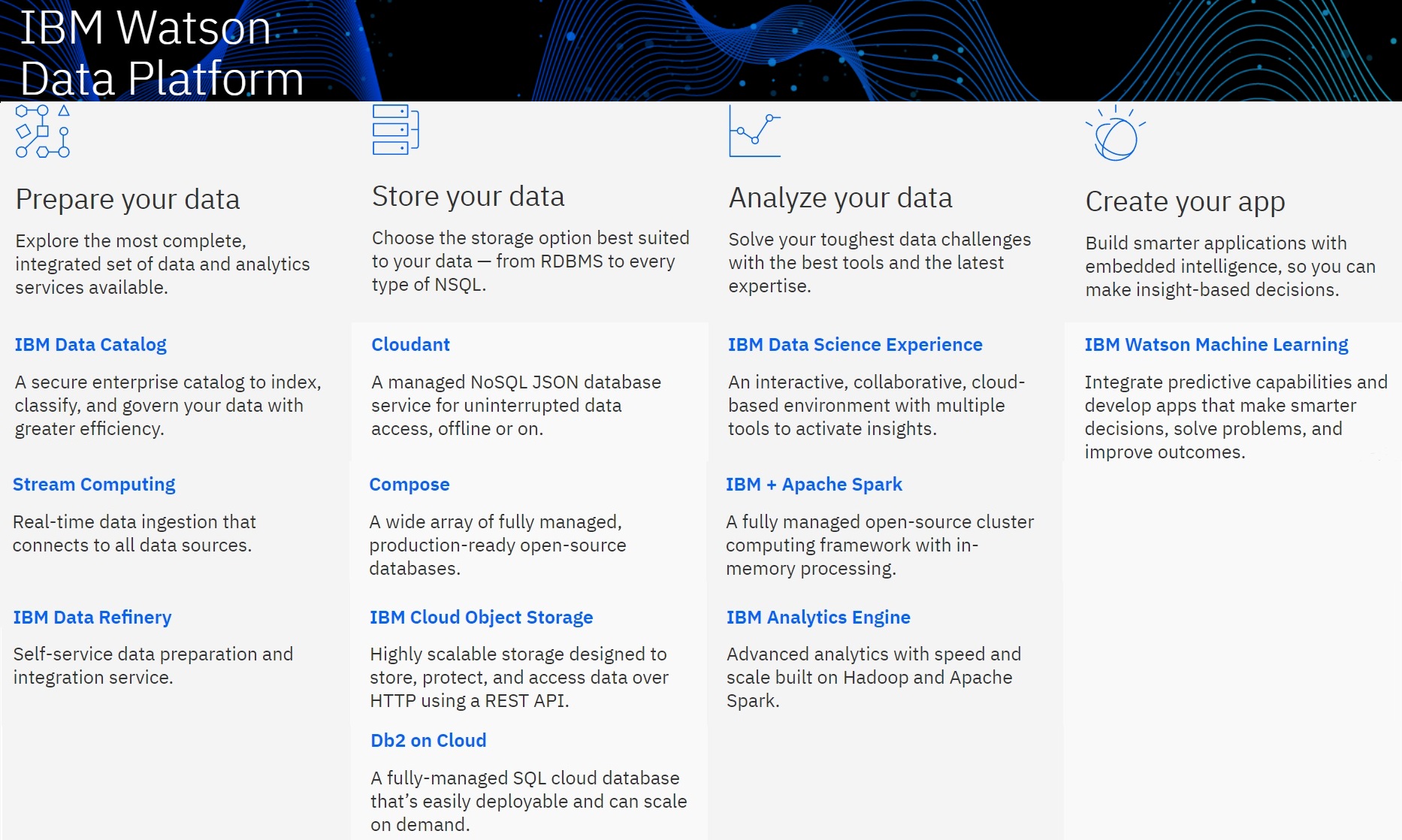

One of the "surprise announcements" at Dreamforce was a tighter partnership with Google. The announcement spans several areas, so I will focus on the productivity and collaboration tools. My colleague Cindy will cover Marketing and Sales Cloud integration with Google Analytics 360, and Holger will discuss Salesforce's use of Google's data centers.

At Dreamforce 2015, Salesforce and Microsoft announced several strategic partnerships. However, since Microsoft acquired LinkedIn in 2016, the future of that partnership has been in question. The new announcements hint at a new (but not exclusive) direction for Salesforce with Google's products. The new partnership includes:

- Salesforce Lightning for Gmail: Users can surface relevant Salesforce CRM data in Gmail, and interactions from Gmail directly within Salesforce.

- Salesforce Lightning for Google Sheets: Users will be able to auto-update data between Salesforce records or reports and Google Sheets.

- Quip Live Apps for Google Drive and Google Calendar: Teams will be able to embed and access Drive files (e.g., Google Docs, Slides, Sheets) or their Google Calendar inside Quip.

- Salesforce for Hangouts Meet: Within Hangouts Meet, users will be able to surface relevant Salesforce customer and account details, service case history, and more.

Also, Salesforce customers who are not currently Google G Suite customers will be able to trial G Suite for up to a year. There are several restrictions and details to this offer, which can be viewed here.

MyPOV

Stronger integration between Google's and Salesforce's products will be very welcome to their joint customers. It could also influence customers choosing between Microsoft Office 365 and Google G Suite, and similarly customers choosing between Salesforce CRM and Microsoft Dynamics. It will be interesting to see where this partnership stands in a year, including how many customers have taken advantage of the trial and how far the product integration has progressed.

Conclusion

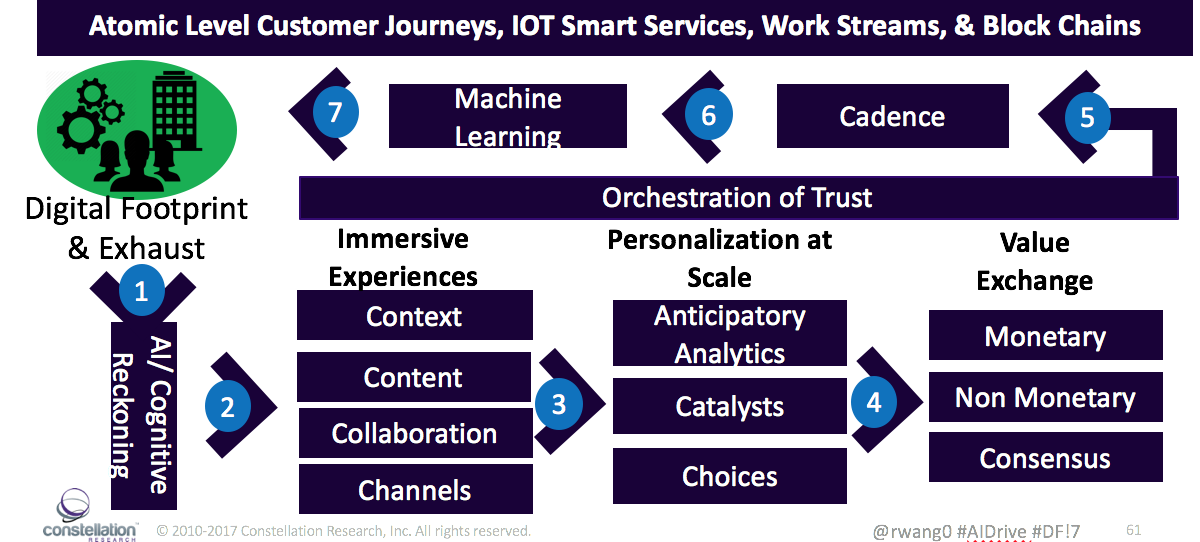

Salesforce Dreamforce is one of the "can't miss" tech events of the year. This year there was no major product announcement like in previous years (e.g., the introduction of Salesforce Lightning or Salesforce Einstein), but rather an overall "maturing" of the product lines. This includes new features, new integrations, new customers and new partners... which is a good thing. Sometimes incremental improvements mean more to customers than a big bang. For my coverage of personal productivity and collaboration, the introduction of Quip's Live Apps is clearly the most significant news. The ability to bring context to content and conversations via a seamless "digital canvas" is going to play an important role in the Future of Work.
