What Does IBM’s Cognitive Computing Era Mean For Collaboration?

One of the most common complaints from employees is that they can’t keep up with the vast amounts of information available to them. Many of you have heard me say that the issue is not email overload, nor even information overload, but rather input overload. We have too many places to look for, or respond to, people, content and conversations.

But what if computers could do that for you? What if they could eliminate the need to know where content lives, which items are most relevant, what needs your attention now and what you can avoid working on? Even better, what if computers could go beyond helping you prioritize and filter, and start to actually take actions on your behalf?

With ideas like these in mind, IBM has just announced their new strategic initiative, Cognitive Business.

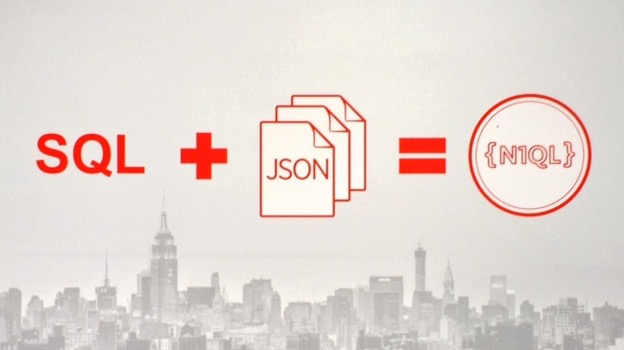

You may have heard of IBM Watson, the computer that beat two Jeopardy grand champions. Well, that system was designed to do a lot more than just play trivia games! Watson does not just know what it’s programmed to know; it learns and adapts, similar to the way humans do. This is called cognitive computing.

While IBM's marketing is currently focused on large industry initiatives like healthcare and finance, cognitive computing could also one day help individuals and teams collaborate more effectively. IBM’s collaboration platform, IBM Connections, and their new email client, IBM Verse, could one day leverage the cognitive capabilities of IBM Watson to help people make sense of the vast amounts of information they are currently flooded with. In addition to helping people filter and prioritize information, one day the tools could recommend actions and even proactively respond.

Let’s call this next generation of personal productivity and teamwork Cognitive Collaboration.

What Customers Should Consider

IBM is not the only company applying artificial intelligence to teamwork. While IBM will argue they are the only company with true "cognitive computing" (Watson), to the average person the technology behind the scenes does not matter as much as the end result. Other vendors are also adding digital assistant capabilities to their tools, such as Microsoft with Cortana and Google with Google Now. Salesforce also recently released Salesforce IQ, which proactively helps sales professionals organize their pipelines. Customers should speak with these vendors to get a list of current capabilities as well as future roadmaps for how their platforms will help employees get work done. Note that most of these cognitive capabilities only work in cloud-based deployments, as the computing power for these systems is not offered on-premises.

What Partners Should Consider

The success of any platform is determined by the strength of its ecosystem. For collaboration software, it's critical that tools integrate with other enterprise applications and expose their features so that third-party developers can create add-ons and additional features. IBM Watson has a very strong focus on developers, and IBM has been rapidly expanding the set of cognitive capabilities that are available for use in other applications. I look forward to seeing whether the IBM partner ecosystem comes up with interesting ways to use Watson to help people collaborate.

Moving in the Right Direction

I really like the new “Outthink” messaging. It bridges IBM’s long-standing message of “Think” with this new cognitive era. I've not been a fan of the previous company-wide mantra "Be Essential", but I think trying to get every employee to rally around cognitive computing is a good move. I look forward to seeing how IBM ties together their collaboration tools and the Watson platform.