Event Review: Infosys Confluence 2017

In the following 4 minute video I discuss Infosys's artificial intelligence (AI) platform NIA and Infosys.Digital.

And here is a Storify review of my Tweets about the event

Future of Work


The agreement gives AT&T global access to Oracle’s cloud portfolio offerings both in the public cloud and on AT&T’s Integrated Cloud. This includes Oracle’s IaaS, PaaS, Database-as-a-Service (DBaaS), and Software-as-a-Service (SaaS), which will help increase productivity, reduce IT costs and enable AT&T to gain new flexibility in how it implements SaaS applications across its global enterprise. AT&T has also agreed to implement Oracle’s Field Service Cloud (OFSC) to further optimize its scheduling and dispatching for its more than 70,000 field technicians. With OFSC, for example, AT&T will combine its existing machine learning and big data capabilities with Oracle’s technology to increase the productivity, on-time arrivals and job duration accuracy of AT&T’s field technicians.

MyPOV – This is an impressive demonstration of the ‘chip to click’ Oracle stack creating value from the IaaS layer all the way to SaaS. For AT&T to throw critical Field Service into the overall mega deal is a key sign that the Oracle synergies across the stack work. AT&T’s machine learning and big data capabilities rely on Oracle and therefore move with the Oracle systems. Industry rumors had it that AT&T tried a number of alternatives (or even any alternative) to staying with Oracle, but could not find anything that fit on both capabilities and TCO. This is a key lighthouse deal for Oracle. The vendor’s shot at becoming a key player in cloud infrastructure is to capture more deals like this, and with them the bulk of the load that the Oracle DB carries on premises, for its cloud IaaS and Database as a Service business.

As a first step, Trek set out to streamline the customer claims process and engagement with its dealers. Using Oracle Mobile Cloud Service, a key component of Oracle Cloud Platform, Trek developed an innovative new mobile application that enables dealers to submit repair claims using their mobile devices and a few taps of a button. With the new claims application, dealers will simply snap a picture of the customers’ bikes on their mobile device, connect directly to Trek’s supply chain applications through an API, and create a claim in just a couple of minutes. The new claim submission application will also include optical character recognition (OCR) technology that allows service professionals to pull up customer service histories simply by scanning a bike’s serial number. It also allows Trek to better service their bikes and better support existing customers.

MyPOV – A good example of how enterprises need to build software to truly differentiate. PaaS matters for that, and Trek built an application that changes the supply chain from closed to open, making the Trek stores active users of the system. Making claims easier and faster to process is great for customers, who get a more hassle-free repair experience; great for the Trek dealer, who is more productive and has a happy customer; and great for Trek, which has happy dealers and customers. It is also likely a competitive differentiator in the market.

Continuing its commitment to cloud customers through extensive engineering and infrastructure investments, Oracle today announced enhancements to the Oracle Cloud EU Region in Germany with the addition of modern infrastructure as a service (IaaS) architecture and new IaaS and platform as a service (PaaS) cloud services. German-based modern IaaS will enable organizations to build and move mission-critical workloads to the cloud with uncompromised security and governance at a significant price performance advantage both over existing on-premises infrastructure and competitive cloud offerings. Oracle’s expanded infrastructure footprint is a result of tremendous customer demand with non-GAAP cloud revenue up 71 percent in Q3FY17 to $1.3 billion. The Oracle Cloud EU Region in Germany builds on the previously announced Oracle Cloud UK Region. The Oracle Cloud EU Region in Germany is expected to come online in the second half of this calendar year.

MyPOV – Good to see the investment, though it is required to make Oracle a cloud IaaS player. Oracle follows the traditional rollout of the IaaS competitors, starting in the UK and then moving to Europe’s #1 economy, Germany. Frankfurt makes additional sense as a data center location because it is one of the Internet backbone exchanges. No surprise this will be a ‘Gen 2’ Oracle region – which usually means 3 data centers (though Oracle would not confirm). That would raise the ‘game’ over the IaaS competitors that are also based in Germany. But then it is half a year out, and many things can change till then. Still, good to see Oracle spend the CAPEX, which it has to in order to catch up with the ‘Big 3’ of IaaS.

The Oracle Cloud delivers nearly 1,000 SaaS applications to customers in more than 195 countries around the world. With NetSuite now part of Oracle, more than 25,000 organizations around the world now leverage Oracle Cloud Applications to transform critical business functions and embrace modern best practices. To accelerate this growth and help organizations of all sizes transform business processes, Oracle recently announced a series of NetSuite expansions and innovations. It is also introducing artificial intelligence-based enterprise applications. Oracle Adaptive Intelligent Apps blends first-party data with third-party data, and then applies Oracle's decision science and machine learning capabilities to create cloud applications that adapt and learn.

MyPOV – The NetSuite acquisition is a huge jump in cloud customers for Oracle, albeit on a different and older platform than the rest of the Oracle cloud applications. But it is still an Oracle platform, hence the number of investments that Oracle announced at NetSuite’s recent SuiteWorld event (see my take here). And no surprise to see AI mentioned; Oracle calls these applications Adaptive Intelligent Apps. The potential of merging third-party data with enterprise data is substantial and we will see how well Oracle can exploit this opportunity. In the following list of Oracle Cloud suites, CRM was notably missing, though Oracle CX / HCM / ERP Cloud were mentioned. An unusual omission that raises questions. But overall good traction for the Oracle SaaS portfolio.

VMware is the first mobile application management provider to manage and secure hundreds of Oracle business applications and custom applications built on the OMCS. As such, enterprise IT organizations can manage their Oracle application suite on a single unified platform together with their other business-critical applications and devices. Users who count on Oracle’s business applications to make better decisions, reduce costs and increase performance can benefit by being able to access these applications through a simple digital workspace environment, be it from a mobile device, laptop or desktop, with VMware Workspace ONE™ and AirWatch.

MyPOV – Always good to see when customers can get vendors to partner and bring their offerings closer together; here it is Oracle and VMware. VMware Workspace ONE and VMware AirWatch have reached a critical usage level in enterprises, so these enterprises want to use the same pane of glass to manage Oracle mobile applications. Good to see the partnership is a win / win / win for customers and both vendors.

Oracle FastConnect addresses one of the most important issues that impacts migration to a cloud service: the unpredictable nature of the Internet. With FastConnect, customers can create a high-throughput and low-latency connection that delivers the benefits of a hybrid cloud setup by providing an easy, cost effective way to create dedicated and private connectivity to Oracle Cloud. In addition, customers can address common concerns with security and performance issues associated with cloud technologies. This is especially true for mission-critical enterprise workloads that frequently demand high levels of availability, security and performance.

MyPOV – Network connectivity remains a crucial component for hybrid and migratory scenarios. Good to see Oracle expanding the program with Equinix, joining Megaport, Verizon, BT and NTT Communications.

The Hyperledger blockchain consortium is continuing to gain momentum, adding eight new members including Deloitte and Ernst & Young. Hyperledger now has 142 members, up substantially from the initial 30 who joined the project at launch in February 2016. Here are the key details from Hyperledger's announcement:

“These new members have joined Hyperledger bringing a diverse set of skills at a crucial time,” said Brian Behlendorf, Executive Director, Hyperledger. “Consensus is a great platform for our members to set the stage and speak to what’s happening in our community, as production blockchain deployments increase.”

Hosted at the Linux Foundation, Hyperledger encompasses a set of open-source projects around various aspects of blockchain technology. The arrival of Deloitte and Ernst & Young should help further its mission of finding vertical applications for blockchain. Other new members announced this week include Alphapoint, Change Healthcare, CITIC, Clause Inc, FZG360 Network Co. Ltd and Schroder Investment Management Limited.

Hyperledger also announced that two projects, Sawtooth and Iroha, have graduated from incubation to "active" status at the Linux Foundation. The first focuses on scalability and security issues, while the latter seeks to create a componentized blockchain framework whose parts can be used in other implementations.

In March, Hyperledger announced that its Fabric project was the first to achieve active status. While the ranking doesn't denote a production-ready version 1.0, among other criteria it means the project has achieved enough diversity of support to survive any one company dropping out.

Overall, this week's news is good for the Hyperledger effort, as well as the future of business, says Constellation Research VP and principal analyst Andy Mulholland.

"In order for digital business ecosystems and markets to function in a frictionless manner, they must have the commercial capability provided by a distributed ledger to allow any to any business transactions to be recorded," he says. "The quality of the membership of the HyperLedger project says a great deal about the business value well-known enterprises are placing upon its success."

24/7 Access to Constellation Insights

Subscribe today for unrestricted access to expert analyst views on breaking news.

Qlik highlights upgrades and the roadmap to high-scale, hybrid cloud and ‘augmented intelligence.’ Here's my take on the long-range plans.

Big data scalability, hybrid cloud flexibility and smart “augmented” intelligence. These are the three plans that business intelligence and analytics vendor Qlik officially put on its roadmap at the May 15-18 Qonnections conference in Orlando, Florida.

Qlik also highlighted six important upgrades coming in the Qlik Sense June 2017 release – one of five annual updates now planned for the company’s flagship product (reflecting cloud-first pacing, though on-premises customers can choose whether and when to make the move). The June upgrade highlights include:

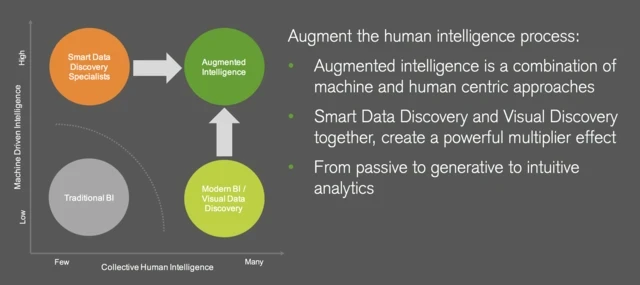

Qlik is promising "augmented intelligence" said to combine the best of machine intelligence with human interaction and decisions.

Most of these upgrades earned hearty applause from the more than 3,200 attendees at the Qonnections opening general session, but the sexiest and most visionary announcements were the ones on the roadmap. Here’s a rundown of what to expect, along with my take on what’s coming.

Building Toward Big Data Analysis

Qlik’s key differentiator is its associative QIX data-analysis engine, which is at the heart of the company’s platform and is shared by its Qlik Sense and QlikView applications. QIX keeps the entire data set and rich detail visible even as you focus in on selected dimensions of data. If you select customers who are buying X product, for example, you’ll also see which customers are not buying that product. It’s an advantage over drill-down analysis, where you filter out information as you explore.
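To make the contrast with drill-down filtering concrete, here is a minimal Python sketch of the associative idea. It is not Qlik's QIX implementation; the `Sale` record and the `associative_select` function are hypothetical names invented for illustration. The point is only that a selection returns both the associated values and the excluded ones, instead of discarding the latter.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sale:
    # Hypothetical record type, assumed for this illustration only.
    customer: str
    product: str


def associative_select(sales, product):
    """Return (customers buying `product`, customers not buying it).

    A plain drill-down filter would return only the first set; keeping
    the second set is what keeps the non-selected data visible.
    """
    all_customers = {s.customer for s in sales}
    selected = {s.customer for s in sales if s.product == product}
    excluded = all_customers - selected
    return selected, excluded


sales = [
    Sale("Ann", "X"),
    Sale("Bob", "Y"),
    Sale("Cy", "X"),
    Sale("Cy", "Y"),
]
buying, not_buying = associative_select(sales, "X")
print(sorted(buying))      # ['Ann', 'Cy']
print(sorted(not_buying))  # ['Bob']
```

Bob never bought product X, so a filter on X would hide him entirely; the associative view surfaces him as a customer who is not buying.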

There have been limits, however, in how much data you can analyze within the 64-bit, in-memory QIX engine. Qlik has a workaround whereby you start with aggregated views of large data sets. Using an On-Demand App Generation capability you can then drill down to the detailed data in areas of interest. But the drawback of this approach is that you lose the powerful associative view of non-selected data.

The Associative Big Data Index approach announced at Qonnections will create index summaries of large data sets, drawn from sources such as Hadoop or high-scale distributed databases. A distributed version of the QIX engine will then enable users to explore the fine-grained detail within slices of the data without losing sight of the summary-level index of the entire data set.

MyPOV on Qlik big data capabilities: What I like about the Associative Big Data Index is that it will leave data in place, whether that’s in the cloud or in an on-premises big data source. It brings the query power to the data, eliminating time-consuming and costly data movement. The distributed architecture also promises performance. In a demo, Qlik demonstrated nearly instantaneous querying of a 4.5-terabyte data set. Granted, it was a controlled, prototype test, so we’ll have to wait and see about real-world performance.

Speaking of waiting, on big data, as on the hybrid cloud and augmented intelligence fronts, Qlik senior vice president and CTO Anthony Deighton set conservative expectations, telling customers they would see progress by next year’s Qonnections event. He didn’t rule out the possibility of an earlier release, but nor did he promise that any of the new capabilities would be generally available by next year’s event. As has been Qlik’s habit in recent years, it’s responding slowly to demands in emerging areas like big data and cloud.

Preparing for Hybrid Cloud

The business intelligence market has forced a binary, either-or, on-premises or cloud-based choice, said Deighton. He vowed that Qlik will change it to an and/or choice by fostering hybrid flexibility with the aid of microservices, APIs and containerized deployment. The approach will also require sophisticated, federated identity management, which the vendor has developed to support European GDPR data security and privacy compliance requirements set to go into effect next year.

In a prototype preview at Qonnections, Qlik demonstrated workloads being spawned and assigned automatically across Qlik nodes running on Amazon, in the Qlik Cloud and on-premises. The idea is to flexibly send workloads to the most appropriate resources. That could mean spawning public cloud instances on the fly when scale is required. Or it could mean keeping analyses on-premises when regulated data is involved. Qlik is working with big banks and hospitals, among other customers, to master microservices orchestration across on-premises, private-cloud and public-cloud instances.
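The placement logic described above can be sketched as a simple policy function. This is a hedged illustration in Python, not Qlik's orchestration code: the `Workload` fields, the `place` function and the two target labels are all assumptions made for the example. Regulated data stays on-premises; unregulated work bursts to the public cloud only when local capacity is insufficient.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    # Hypothetical description of an analysis job, assumed for illustration.
    name: str
    regulated: bool      # touches data that must stay in-house
    cores_needed: int    # rough measure of required scale


def place(workload, on_prem_free_cores):
    """Pick a deployment target for one workload.

    Rule 1: regulated data never leaves the on-premises environment.
    Rule 2: otherwise, spill to the public cloud when the job needs more
            capacity than is currently free on-premises.
    """
    if workload.regulated:
        return "on-premises"
    if workload.cores_needed > on_prem_free_cores:
        return "public-cloud"
    return "on-premises"


jobs = [
    Workload("patient-records", regulated=True, cores_needed=64),
    Workload("web-clickstream", regulated=False, cores_needed=64),
    Workload("sales-dashboard", regulated=False, cores_needed=4),
]
for job in jobs:
    print(job.name, "->", place(job, on_prem_free_cores=16))
```

A real scheduler would also weigh cost, latency and data locality; the sketch only captures the two cases the demo description mentions.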

MyPOV On Qlik’s cloud plans: As noted above, Qlik made no promises as to when it will deliver on this flexible, cloud-friendly microservices vision, other than to say that we’ll hear more at Qonnections 2018. Qlik’s cloud offerings need these workload-management features, particularly where Qlik Sense Enterprise in the cloud is concerned. Customers want better performance as well as the granular services and APIs they’re used to from leading SaaS vendors. I believe it’s more important for Qlik to deliver quickly on this front than on any other, so let’s hope it’s something introduced before Qonnections 2018.

Augmenting Intelligence

There have been many announcements about “smart” capabilities this year. A few of the capabilities have actually launched (like those covered in my detailed reports on Salesforce Einstein and Oracle Adaptive Intelligent Apps), but most are works in progress. Some are conservatively described as automated predictive analytics or machine learning while others are billed as “artificial intelligence.”

Over the past year, Deighton and other Qlik executives have charged that competitive AI and cognitive offerings tend to remove humans from decision making. In keeping with this theme, the company announced that it’s working on “augmented intelligence” that will “combine the best” of what machines can do with human input and interaction. The approach will eschew automation in favor of machine-human interaction that will bring context to data and promote better-informed machine learning, said Deighton.

The general idea is for humans to interact with concise lists of computer-generated suggestions. This will happen through computer-augmented interfaces at various stages in the data-analysis lifecycle. When users bring data together, for example, data-analysis algorithms will be applied to suggest how the data might be correlated. In the analysis stage, algorithms will suggest the best analytical approaches. And once results are generated, data-visualization algorithms will be applied to suggest best-fit visualizations. Humans will interact with the suggestions and make the final selections at every stage. Deighton promised something that will neither dump too many possibilities on users, at one extreme, nor create “trust gaps” by automating and removing human input from decisioning.
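As a rough illustration of that interaction pattern (and not of anything Qlik has shipped), the following Python sketch separates the two roles: the machine ranks options and trims them to a short list, and a human makes the final pick. The function names, the scores and the limit of three suggestions are all assumptions for the example.

```python
def shortlist(scored_options, limit=3):
    """Return the `limit` highest-scoring option names, best first.

    The machine only proposes; it never picks a winner on its own.
    """
    ranked = sorted(scored_options.items(), key=lambda kv: kv[1], reverse=True)
    return [name for name, _ in ranked[:limit]]


def decide(suggestions, human_choice):
    """Record the human's final selection from the suggested list."""
    if human_choice not in suggestions:
        raise ValueError(f"{human_choice!r} was not among the suggestions")
    return human_choice


# Hypothetical best-fit scores for visualizing one result set.
scores = {
    "bar chart": 0.9,
    "line chart": 0.7,
    "scatter plot": 0.6,
    "pie chart": 0.2,
}
suggestions = shortlist(scores)
print(suggestions)                        # ['bar chart', 'line chart', 'scatter plot']
print(decide(suggestions, "line chart"))  # line chart
```

Capping the list addresses the "too many possibilities" extreme, while requiring an explicit human choice addresses the "trust gap" extreme.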

MyPOV on Qlik Augmented Intelligence: Based on conversations with Qlik executives, I’d say we’re in the early stages of Qlik’s augmented intelligence initiative. It all sounds good, but the details were sketchy. I heard a bit about analytic libraries and potential partnerships on the machine learning and neural net front. But executives weren't ready to name partners or predict availability. In short, we may see the beginnings of Qlik’s augmented intelligence capabilities at Qonnections 2018, but Qlik execs were up front in describing the initiative as something that may take a few years to mature.

Qlik’s most direct competitors, including Tableau, Microsoft, SAP and IBM, are all working on smart data exploration, basic prediction and “smart” recommendation features of one stripe or another. IBM is actually on the second-generation of its cloud-based IBM Watson Analytics service. Yet we’re still in the very earliest phases of bringing advanced analytics, machine learning and artificial intelligence to the broad business intelligence market. I think 2017 may mark the end of the beginning. By 2018 and beyond, we’ll start to see vendor selections based on smart features rather than the maturing trend toward self-service capabilities.

RELATED READING:

Qlik Gets Leaner, Meaner, Cloudier

Inside Salesforce Einstein Artificial Intelligence

Tableau Sets Stage For Bigger Analytics Deployments

It's not often that a single technical hire at a vendor merits news coverage, but when it involves a tech luminary of the likes of Java "father" James Gosling, proper attention should be paid. Gosling announced this week on his personal Facebook page that he has taken a position with Amazon Web Services, after a stint as chief software architect of Liquid Robotics.

Gosling co-created Java along with Mike Sheridan and Patrick Naughton while the three worked at Sun Microsystems in the early 1990s. After Oracle bought Sun in 2010, taking control of Java, Gosling left the company, later citing issues with his salary, job responsibilities and micromanagement.

Liquid Robotics has developed an autonomous robot that traverses oceans, collecting and transmitting information about water temperature, wind speeds and other metrics. The startup's technology is used by energy companies and military organizations, among others.

It's not clear whether Gosling will be doing anything of the sort for AWS, although his background at Liquid Robotics would seem to have some synergies with IoT (Internet of Things) projects in general.

Gosling's title at AWS is distinguished engineer, a distinction handed out to relatively few people overall at the company. Distinguished engineer positions are not sinecures and AWS will undoubtedly have him working on something highly strategic.

Gosling himself declined to reveal what he'll be doing at AWS, yet suggested as much, in a Facebook post:

I've been getting a lot of questions on what I'll be working on at Amazon. Sadly, I can't say: it's Amazon's policy to be quiet. As much as secrecy can be annoying, it usually makes sense. ...Years ago I worked at IBM for a while and had to go through "confidential information" training. When I came back grumpy my manager smiled and said "IBMs biggest secret is that it has nothing worth keeping secret". Doesn't apply at Amazon. It looks like it'll be a fun ride.

AWS supports and relies upon Java for many of its services, particularly on the back end. Meanwhile, a great many on-premises enterprise workloads—ones AWS is keen to migrate—are Java-based. It's conceivable that Gosling could help lead efforts to improve Java services and tooling for AWS, and his name on the marquee won't hurt in convincing conservative enterprises to place bets with the company.

Gosling could also serve as an evangelist to the coding community. "AWS needs luminaries for the developers to look up to and he definitely is one," says Constellation Research VP and principal analyst Holger Mueller. "So this is a good move."


I rarely write a blog based on personal opinion, as my engineering background always favors researched factual reporting. Opinion pieces are, by necessity, based on subjective views; to have value they must be insightful. Having prepared and published research presenting the ‘Big Picture’ view of Digital Enterprises functioning in the Digital Economy, it became all too clear that while individual technologies might be understood by IT, their deployment into Digital Business was not.

In fact the alignment between IT and Business management in the deployment of IoT as a core element in a Digital Enterprise was somewhere between absent, and poor. Industrial companies with Operational Technology departments had moved swiftly forward, usually with limited reference to the Information Technology operation. This blog provides a simple summary of the main points, in my opinion, that IT Professionals have to understand to see the ‘Big Picture’ of Digital Business and how it will transform their Enterprise.

As the above is controversial, but easy to prove with evidence, I am going to start with an explanation of why researching a Big Picture report gives a different view from reporting on particular elements in a specialized manner. Why and how can the work produce some insightful views in addition to the factual report itself?

An Analyst interacts with many different people, with a wide range of roles, experiences and employers, to build the ‘big picture’ view across an entire market spanning products, companies and deployments. Each discussion revolves around an in-depth microcosm that is one part of the whole picture; in a mature market the integration into the whole is part of the discussion.

In an immature market where both the Business deployment and the Technology products are still rapidly emerging the degree of consensus in the views is a critical issue. The concepts and practices of ‘Digital’ make for not just a big Picture, but for the entire redesign of Business, and the enabling Technology Frameworks into a new inclusive Digital Economy over the coming years.

A great deal of research, with the active cooperation of vendors and users was required to produce the final report, which can be found here as an abbreviated set of Blogs under the title Distributed Business and Technology models. (The title aims to be ‘neutral and inclusive’ avoiding terms with strong individual definitions). The resulting report defines the aspirational target for both users planning their business/technology adoption strategy, and vendors their product/services features development.

Most recognized markets, whether business or technology, usually have a reasonable match between Business user expectations and Technology vendors’ product capabilities. Sadly, in Digital Business this is not so; there are serious mismatches between Business Managers, those Deploying and the Vendors of the Technology. The goal of this blog is to identify what I believe are some of the biggest gaps, or issues that need to be addressed.

Currently both the Business management and the Technology Management are simultaneous drivers of deployment, but for completely different benefits and reasons. The vision of Digital Business is all too often remote from that of the IT department. In manufacturing, or certain industry sectors like Buildings, or Medical, the presence of Operational Technology departments has helped to overcome the ‘gap’ and resulted in these sectors accelerating rates of adopting the new practices.

Cloud is an excellent example of this; the Information Technology department will be advanced in reducing cost through using centralized large-scale data centers whilst Business Management and Operational Technology see Cloud as the technology that supports the distributed low latency edge computing requirements of IoT in a Digital Business.

Sadly, many of the following comments seem to target the IT department, and its need to come to terms with a Business and Technology transformation of a type last experienced in the early 90s that led to the creation of the Enterprise IT department. The following comments assume that IT professionals and departments are keen to identify their new, or additional, role in the Digital Enterprise.

After twenty-five years of Client-Server based Enterprise IT driven by the Close Coupled, Stateful architecture model, a move to an altogether different Business model using a Loose Coupled, Stateless architecture is a radical technology change. After all, the current architecture has proved able to be adjusted to accommodate the inclusion of the Internet, Web, Mobility, and Clouds, so why not IoT, and AI, as well?

The following, gathered from a wide range of sources, are in my opinion the key points where misunderstandings around the Digital Business model and its enablement with Technology are most common. And it starts with the fundamental question of what Digital Business is.

Digital Business, the Digital Economy, and similar terms that are widely used, often interchangeably, are not really understood as to their real definition, and are seen as part of the current Internet/Web economy. The prevailing view is Digital Business relates to an extension of the current model, with added new technology capabilities to support increased volumes of business. There is widespread failure to really grasp that Digital in this context refers to a new generation of Commerce that changes Business models, and Enterprise organizational structures.

The enabling technologies for the Digital Enterprise that make up CAAST (Clouds, Apps, AI, Services & Things), even if bearing a familiar name such as Cloud, are deployed in a different approach to enable Digital Business. Digital Business deploys these technologies to optimize continuous changes in opportunities to do business that will gain increased revenues and margin improvements. Digital Business is more than a Front Office activity, running throughout the Enterprise Business and Operating model.

The contrast with the current role of IT, as a predominantly Back Office activity providing the administrative functions necessary to record transactions through stable processes, could not be greater. Information Technology needs to grasp the difference from its current role, and understand Operational Technology, learning from the leadership this discipline has already shown in operating ‘real-time’ event optimization. The role of IT is necessary for compliance, and will continue, but is likely to become subordinate, even increasingly outsourced, as attention turns to investing in Digital Business.

IoT is all too often seen either as a consumer wave, or as an interesting way to add more data to current IT processes. The Digitized representation of the Physical World, by using IoT, to allow assets and events to be ‘read’ and to create dynamic optimized ‘react’ is at the heart of Digital Business. IoT should be understood as a group of technologies that create the crucial digital data to extend the use of computers into new areas of the Enterprise Business operating model.

Business Managers have come to realize that continuous innovation and dynamic optimization require the decentralization in Enterprise operations that is a core feature of Digital Business models. After years of using IT to support ever more centralized business activities and processes, this is counterintuitive to many IT professionals, who fear for the ‘Stateful’ synchronization of Enterprise data.

The IT role is to manage the integration of Systems in known relationships, Close Coupled, to maintain the single version of the truth, the Stateful data model. Digital Business requires a new role to ensure whatever Device can communicate whenever it needs, Loose Coupled, and align data flow to activities in a Stateless Model.

The Loose Coupled, Stateless nature of Digital Business coupled to the translation of the physical World into Digital Models, massively increases the volumes of data to be ‘read’. Simultaneously the time available to ‘respond’ in order to be able to influence the outcome is decreased thereby making the introduction of automation necessary.

Adopting Digital Business by Technology correctly creates the environment in which to deploy AI (standing for Augmented Intelligence). As with Digital Business versus current online Business, AI is not Analytics and BI taken to the next level; it is a new approach requiring an investment in time to understand how to deliver the ‘augmentation’ of human capacity to manage the vast increases in data and decisions Digital Business brings.

In summary IT departments cannot muddle through and expect that as before they will be able to assimilate and adopt this latest round of technology change. Technology professionals as ever will be in great demand to deliver what Business requires. Currently IT professionals in IT departments need to conduct serious strategic knowledge building exercises.

Equipped with this knowledge the IT department becomes able to fulfill a wider role, perhaps in conjunction with the existing Operational Technology department, and certainly to bring Technology skills to the Enterprise Business strategy.

Interesting Links

1) ASUG, (Association of SAP User Groups) has had a long running educational program in the form of Webinars given by a range of Experts with Case Studies. The views are not limited to, or constrained by SAP and its products, and offer distinctly practical advice. The current series can be found at https://www.asug.com/news/saps-journey-to-the-cloud-and-you-part-i

2) The Internet of Things World – Europe event provides an illustration of the scale of IoT to Digital Business adoption in the numbers of speakers from many World-class enterprises, but also demonstrates the almost total absence of IT departments and professionals from the program. See speaker lists and the program details here

May 18, 2017 – Johannesburg, South Africa – Today Microsoft revealed plans to deliver the complete, intelligent Microsoft Cloud for the first time from datacenters located in Africa. This new investment is a major milestone in the company’s mission to empower every person and every organization on the planet to achieve more, and a recognition of the enormous opportunity for digital transformation in Africa.

MyPOV – Good to see the intent, in line with the general Microsoft Azure (or is it now the Microsoft Cloud?) value pitch of being the intelligent cloud.

Expanding on existing investments, Microsoft will deliver cloud services, including Microsoft Azure, Office 365, and Dynamics 365, from datacenters located in Johannesburg and Cape Town, South Africa with initial availability anticipated in 2018. The new cloud regions will offer enterprise-grade reliability and performance combined with data residency to help enable the tremendous opportunity for economic growth, and increase access to cloud and internet services for organizations and people across the African continent. MyPOV – So 2018 will see the go-live. No surprise that there is an Office 365 and Dynamics 365 angle, as these are two products that have to comply with data privacy and data residency legislation.

“We’re excited by the growing demand for cloud services in Africa and their ability to be a catalyst for new economic opportunities,” said Scott Guthrie, Executive Vice President, Cloud and Enterprise, Microsoft Corp. “With cloud services ranging from intelligent collaboration to predictive analytics, the Microsoft Cloud delivered from Africa will enable developers to build new and innovative apps, customers to transform their businesses, and governments to better serve the needs of their citizens.” MyPOV – Good quote from Guthrie, which also hints at the next generation application angle for developers and the government angle. Governments usually require data residency.

Expanding Access & Opportunity: Currently many companies in Africa rely on cloud services delivered from outside of the continent. Microsoft’s new investment will provide highly available, scalable, and secure cloud services across Africa with the option of data residency in South Africa. With the introduction of these new cloud regions, Microsoft has now announced 40 regions around the world – more than any major cloud provider. The combination of Microsoft’s global cloud infrastructure with the new regions in Africa will connect businesses with opportunity across the globe, help accelerate new investments, and improve access to cloud and internet services for people and organizations from Cairo to Cape Town. MyPOV – South Africa is an island from an IaaS perspective. A large economy, but far away from a connectivity perspective, it is in a situation similar to Australia’s, only that the IaaS vendors made it to Australia much earlier. With 40 regions Microsoft currently leads competitors AWS and Google, but Microsoft does not share how many data centers are in a region. To deliver reliable services in a geography, there need to be at least two data centers. Microsoft will have two regions, with one each in Cape Town and Johannesburg. That should be a good answer for any HA (High Availability) concerns, though Oracle has moved the 'standard' quickly to three data centers per location / region.

“We greatly value Microsoft’s commitment to invest in cloud services delivered from Africa. Standard Bank already relies on cloud technology to provide our customers with a seamless experience,” says Brenda Niehaus, Group CIO at Standard Bank. “To achieve success as a business, we need to keep pace with market developments as well as customer needs, and Office 365 empowers us to make a culture shift towards becoming a more dynamic organization, whilst Azure enables us to deliver our apps and services to our customers in Africa. We’re looking forward to achieving even more with the cloud services available here on the continent.” MyPOV – Always good to have launch customers, and good to have them provide a quote in a press release announcing products / services they will only use in the future.

Investing in African Innovation: This announcement expands on ongoing investments in Africa, where organizations are using currently available cloud and mobile services as a platform for innovation in health care, agriculture, education, and entrepreneurship. Microsoft has been working to support local start-ups and NGOs, unleashing innovation that has the potential to solve some of the biggest problems facing humanity, such as the scarcity of water and food, and economic and environmental sustainability. One start-up, M-KOPA Solar, provides affordable pay-as-you-go solar energy to over 500,000 homes using mobile and cloud technology. AGIN has built an app connecting 140,000 smallholder farmers to key services, enabling them to share data and facilitating $1.3 million per month in finance, insurance and other services. MyPOV – Always good to show the potential and upside – and Africa has a lot of both. It’s not clear what M-KOPA and AGIN are or will be using from Microsoft.

Across Africa, Microsoft has brought 728,000 small and mid-size enterprises (SMEs) online to help them transform and modernize their businesses, and over 500,000 are now utilizing Microsoft cloud services, with 17,000 using the 4Afrika hub to promote and grow their businesses. The Microsoft Cloud is also helping Africans build job skills, with 775,000 trained on subjects ranging from digital literacy to software development. We anticipate the Microsoft Cloud from Africa will fuel extensive new opportunities for our 17,000 regional partners and customers alike. MyPOV – Impressive numbers. The consumer and educational aspect of the Microsoft product and services portfolio has a lot of potential in Africa. On the other hand, it also requires Microsoft to invest in infrastructure in Africa, and this is a first step.

“This development broadens the options available to us in our modernization journey of Government ICT infrastructure and services. It allows us to take advantage of new opportunities to develop innovative government solutions at manageable costs, as well as drive overall improvements in operations management, while improving transparency and accountability,” says Dr. Setumo Mohapi, CEO at SITA. MyPOV – Again – good to see a current / future customer quote – covering the government aspect and potential.

The Microsoft Trusted Cloud: Microsoft has deep expertise protecting data, championing privacy, and empowering customers around the globe to meet extensive security and privacy requirements. With Microsoft’s Trusted Cloud principles of security, privacy, compliance, transparency, and the broadest set of compliance certifications and attestations in the industry, Microsoft’s cloud infrastructure supports over a billion customers and 20 million businesses around the globe. […] MyPOV – Good to see Microsoft stressing the security aspect. As in every new geographic region where the cloud arrives, there is a large group of skeptical CxOs, and security concerns are at the top of their list of reasons why they cannot move to the cloud. These concerns need to be addressed. There is no reason, though, why they cannot be addressed in South Africa as they have been in the rest of the world… with a broadly favorable outcome for the cloud.

Expands key capabilities of iPaaS to include end-to-end data management MyPOV – Great summary: it describes what is happening and bridges from the well-known iPaaS to microservices and AI, introducing the CLAIRE engine.

Advances productivity with CLAIRE – metadata-driven Artificial Intelligence

Scales enterprise-wide to manage complex hybrid data environments

Adheres to the broadest security certifications MyPOV – A fair summary. These press releases are a bit too long when the PR folks realize they need a bullet list of content items at the beginning.

Informatica World, SAN FRANCISCO, Calif., May 16, 2017 – Informatica, the Enterprise Cloud Data Management leader accelerating data-driven digital transformation, today announced Informatica Intelligent Cloud Services, the most advanced Integration Platform as a Service (iPaaS) solution available for end-to-end enterprise cloud data management. Informatica Intelligent Cloud Services will feature a next-generation user experience based on a modern API-based microservices architecture, powered by Informatica’s innovative enterprise unified metadata intelligence - known as CLAIRE Engine.

Informatica Intelligent Cloud Services expands Informatica’s leading application and data integration iPaaS capabilities to now include critical end-to-end cloud data management. This includes industry leading and enterprise-class data integration, API management, application integration, data quality and governance, master data management and data security, all re-imagined for the cloud. Informatica Intelligent Cloud Services is built on the Informatica Intelligent Data Platform™, and has reimagined the front- and back-end experience for the modern cloud environment, enabling organizations to efficiently unleash the power of all their data, wherever it resides, to fuel successful digital transformation initiatives. MyPOV – OK, more detail, and a reminder that it is all on one single platform – the Informatica Intelligent Data Platform.

“As the industry’s number one iPaaS leader, we are driving innovation in this market,” said Amit Walia, executive vice president and chief product officer, Informatica. “With this launch, we are completely re-inventing iPaaS. We are delivering the industry’s broadest end-to-end data management solution for the cloud, with a next-generation user experience running on an API-based microservices architecture. Powered by metadata and Artificial Intelligence, we help enterprises accelerate their cloud-powered digital transformations.” MyPOV – Good quote from Walia. Everybody wants to re-invent things these days, but when put into action, AI truly has the potential to do things differently. My concern is that Informatica uses ‘old’ terms such as metadata. No need to talk about metadata in the AI era. More below.

Data Management Reimagined for Cloud MyPOV – OK, this is the third, and different, collection of what the iPaaS does, maybe with a data management angle.

Informatica Intelligent Cloud Services moves past the traditional definition of iPaaS to include cloud data integration, cloud application and process integration, API management and connectivity. Informatica Intelligent Cloud Services delivers the industry’s first, and only, family of clouds that provides industry-leading data management capabilities, powered by CLAIRE from Informatica.

The family of clouds available in Informatica Intelligent Cloud Services include: MyPOV – Another collection of capabilities; it would be good to have an example or customer proof point.

Informatica Integration Cloud – Modern digital strategies require a variety of integration approaches and patterns. Integration Cloud greatly expands the traditional definition of iPaaS to include advanced, unique functionality, such as Integration Hub and B2B. This also includes application integration, data integration and API management. For example, Cloud Integration Hub provides pub-sub-hub integration capabilities for hybrid data management.

Informatica Data Quality & Governance Cloud – Modern digital strategies require trusted data. Data Quality & Governance Cloud includes functionality that delivers the data quality and governance foundation for all cloud projects and initiatives. For example, Cloud Data Quality Radar provides the ability to assess and fix data quality issues within cloud applications, such as Salesforce and Marketo.

Informatica Master Data Management Cloud – Modern digital strategies require authoritative data. Master Data Management Cloud provides single, complete and accurate views across all forms of master data, in a single source of truth. For example, Cloud Customer 360 for Salesforce provides cloud MDM capabilities that scale to the most demanding enterprise requirements with a laser focus on business self-service and self-management of master data.

Future Clouds – Additional, modular data management clouds, products and solutions will be seamlessly added to Informatica Intelligent Cloud Services over time. MyPOV – So what were once products are now ‘clouds’. The separation between integration, data management, and data quality and governance makes sense, as these are the natural organizational break points along which data handling organizations are set up. Good to see Informatica leaving the door open for future clouds, aka products.

Adopting cloud and using data to drive disruption requires excellence in data management. The innovative combination of a modern user experience and API-based microservices architecture, built on the industry’s only Intelligent Data Platform powered by CLAIRE, enables Informatica Intelligent Cloud Services to deliver increased productivity and address new use cases, at scale.

Next-Generation Experience and Architecture: Innovative Approach for Maximum Productivity MyPOV – Always good to mention suite level benefits – and this time making them tangible with examples. Consistency and synergies are what enterprises want to see when they buy multiple, suite integrated products from the same vendor.

All the clouds that comprise Informatica Intelligent Cloud Services share a consistent, next-generation user experience across the entire spectrum of data management capabilities. The API-based microservices architecture delivers common services (e.g., user authentication, workflow creation, asset management, search, tagging, and more) that not only look the same, but also operate exactly the same wherever they are invoked across the cloud. This user experience dramatically reduces the learning curve for new tools and drives self-service across the environment.

The reimagined next-generation user experience includes a single, personalized home page with tiles for items such as personal tasks and connections, plus tiles that are dashboards for the projects that person has underway in each of the data management clouds. This home page gives them visibility and access to all the data management projects they may have underway across all the clouds of Informatica Intelligent Cloud Services. MyPOV - It's never enough to offer great capability and functionality behind the scenes; it has to be seen and experienced by the user. Good to see the UX progress, which frankly was an area where Informatica has been challenged in the past, particularly in terms of consistency. Good to see the tile approach for UX - which has worked well across the industry to bring information together consistently for multiple user roles.

Additionally, the new microservices are based on open REST APIs. This will enable a continued rapid pace of innovation for Informatica, allowing the company to bring new services and advance existing services at a rapid pace. It will also enable quick integration with customer and partner reference architectures. MyPOV - An overdue move, good nonetheless. REST has won and it's time for vendors to adopt it.

Powered by CLAIRE: Intelligence Throughout the Cloud MyPOV – Good intro and explanation of CLAIRE, though vague on detail with regard to machine learning algorithms, platform, pricing and so on – but it is early days.

CLAIRE—with clairvoyance in mind and AI in the center—is the industry’s most advanced metadata-driven Artificial Intelligence (AI) technology and is embedded in the Informatica Intelligent Data Platform. CLAIRE delivers intelligence to the entire portfolio of Informatica data management solutions that includes data integration, master data management, data quality and governance, data security, cloud data management, and big data management capabilities. CLAIRE delivers AI by applying machine learning to technical, business, operational and usage metadata across the entire enterprise. This scale and scope of metadata is transformational and allows CLAIRE to help data and integration developers by partially or fully automating many tasks, while business users find it easier to locate and prepare the data they are looking for from anywhere in the enterprise. Meanwhile, data scientists gain a faster understanding of data and data stewards find it easier to visualize data relationships.

MyPOV – We live in a world of multi-cloud and hybrid systems – integration in general and MDM in particular need to span across them – so it is good to see Informatica providing a single pane of glass and tools on a common platform.

Enterprise-wide Management for a Hybrid World

Informatica Intelligent Cloud Services is built to enable enterprises to run complex hybrid environments. It provides operational insights delivered through a single dashboard for monitoring and managing all data management clouds and products and their data. Customers benefit from easy connectivity to all cloud, on-premise and big data sources across the enterprise using pre-built Informatica connectors.

Cloud with Industry-leading Security and Trust

Informatica Intelligent Cloud Services is built for the enterprise with security as a core design principle. It has the following certifications and standards for industry leading security:

AICPA SOC 2 Type 2 and SOC 3 attestations.

Externally audited HIPAA compliance.

ISO 27000-aligned Information Security Management System, and EU-US Privacy Shield and compliance security program.

Member of Cloud Security Alliance and Salesforce AppExchange certified.

The KNOW Identity Conference in Washington DC last week opened with a keynote fireside chat between tech writer Manoush Zomorodi and Edward Snowden.

Once again, the exiled security analyst gave us a balanced and nuanced view of the state of security, privacy, surveillance, government policy, and power. I have always found him to be a rock-solid voice of reason. Like most security policy analysts, Snowden sees security and privacy as symbiotic: they can be eroded together, and they must be bolstered together. When asked (inevitably) about the “security-privacy balance”, Snowden rejects the premise of the question, as many of us do, but he has an interesting take, arguing that governments tend to surveil rather than secure.

The interview was timely for it gave Snowden the opportunity to comment on the “Wannacry” ransomware episode which affected so many e-health systems recently. He highlighted the tragedy that cyber weapons developed by governments keep leaking and falling into the hands of criminals.

For decades, there has been an argument that cryptography is a type of “Dual-Use Technology”; like radio-isotopes, plastic explosives and supercomputers, it can be used in warfare, and thus the NSA and other security agencies try to include encryption in the “Wassenaar Arrangement” of export restrictions. The so-called “Crypto Wars” policy debate is usually seen as governments seeking to stop terrorists from encrypting their communications. Even if crypto export control worked, it doesn’t address security agencies’ carelessness with their own cyber weapons.

But identity was the business of the conference. What did Snowden have to say about that?

When pressed, Snowden said actually he was not thinking of blockchain (and that he saw blockchain as being specifically good for showing that “a certain event happened at a certain time”).

Now, what are identity professionals to make of Ed Snowden’s take on all this?

For anyone who has worked in identity for years, he said nothing new, and the identerati might be tempted to skip Snowden. On the other hand, in saying nothing new, perhaps Snowden has shown that the identity problem space is fully defined.

There is a vital meta-message here.

In my view, identity professionals still spend too much time in analysis. We’re still writing new glossaries and standards. We’re still modelling. We’re still working on new “trust frameworks”. And all for what? Let’s reflect on the very ordinariness of Snowden’s account of digital identity. He’s one of the sharpest minds in security and privacy, and yet he doesn’t find anything new to say about identity. That’s surely a sign of maturity, and that it’s time to move on. We know what the problem is: What facts do we need about each other in order to deal digitally, and how do we make those facts available?

Snowden seems to think it’s not a complicated question, and I would agree with him.

Cloud computing is projected to increase from $67B in 2015 to $162B in 2020 attaining a compound annual growth rate (CAGR) of 19%.

Cloud platforms are enabling new, complex business models and orchestrating more globally-based integration networks in 2017 than many analyst and advisory firms predicted. Combined with Cloud Services adoption increasing in the mid-tier and small & medium businesses (SMB), leading researchers including Forrester are adjusting their forecasts upward. The best check of any forecast is revenue. Amazon’s latest quarterly results released two days ago show Amazon Web Services (AWS) attained 43% year-over-year growth, contributing 10% of consolidated revenue and 89% of consolidated operating income.
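
As a quick sanity check on the headline projection, the quoted CAGR can be recomputed from the two endpoint figures. The sketch below assumes the standard CAGR formula and a five-year span (2015 to 2020); the function name `cagr` is only illustrative and does not come from the forecast itself.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


# Endpoint figures from the projection: $67B (2015) growing to $162B (2020).
rate = cagr(67.0, 162.0, 2020 - 2015)

# Prints the rate as a percentage with one decimal place.
print(f"Implied CAGR: {rate:.1%}")
```

Under these assumptions the result rounds to the 19% stated in the projection, so the endpoint figures and the growth rate are consistent with each other.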

Additional key takeaways from the roundup include the following: