Why enterprise AI leaders need to bank on open-source LLMs

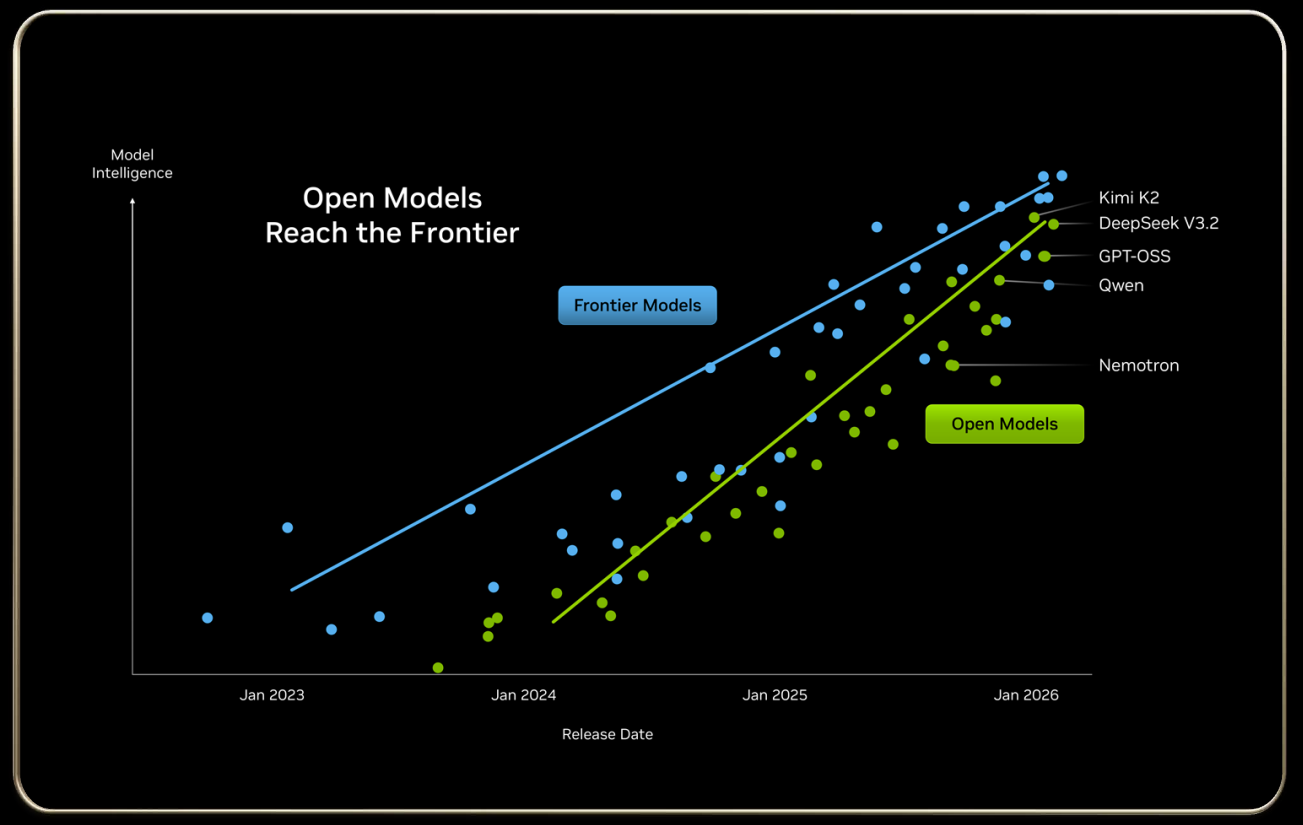

Nvidia, which is quickly becoming the US champion of open-source AI models, argues that open models are roughly six months behind more expensive proprietary frontier models. If that's the case, CxOs should base nearly all of their AI plans around open-source models.

In Nvidia CEO Jensen Huang's CES 2026 keynote, there was a lot of talk about agentic AI systems, physical AI and robotics, but his open-source comments stuck with me. He said the following:

"We now know that AI is going to proliferate everywhere with open-source and open innovation across every single company and every industry around the world is activated at the same time. We have open model systems all over the world of all different kinds and they have also reached the frontier. Open-source models are solidly six months behind the frontier models, but these models are getting smarter and smarter."

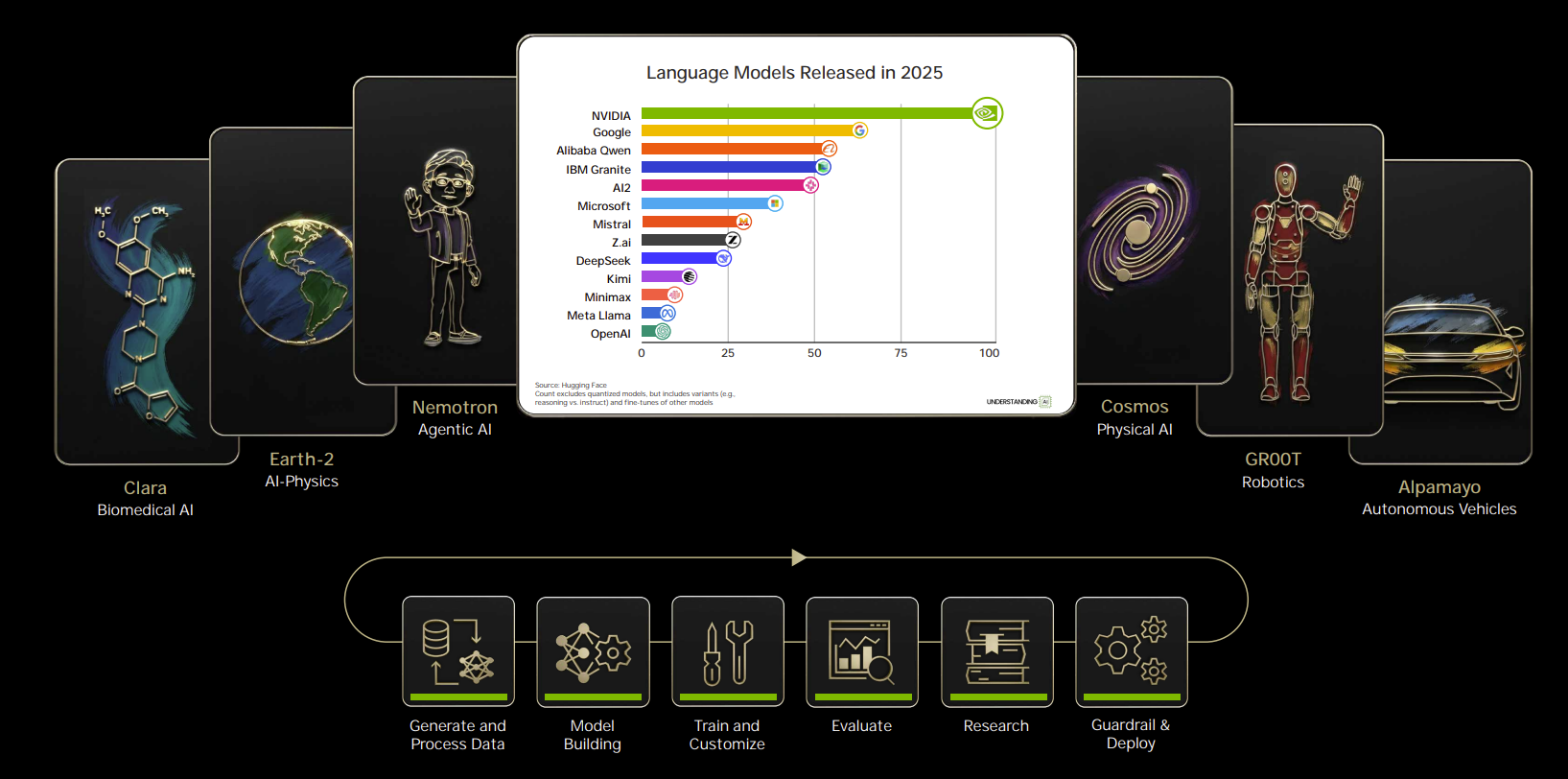

Nvidia backed up its open-model case with releases of new Nvidia Nemotron models (speech, RAG, safety) for agentic AI, Cosmos models for physical AI, Alpamayo for autonomous vehicles, GR00T for robotics and Clara for biomedical.

The companies using Nvidia's open-source models are familiar names, including ServiceNow, Cadence, CrowdStrike, Caterpillar and a bevy of others. "Not only do we open source the models, but we also open source the data we used to train those models. Only in that way can you truly trust how those models came to be," Huang said. “That's something Meta never did with its Llama model.”

Huang said 80% of startups are building on open models, and that a quarter of OpenRouter tokens are generated by open models.

With the gap between open-source and proprietary AI models closing, why would an enterprise bet on a frontier model that will hold only a six-month lead?

What's in it for Huang? Nvidia will supply the GPUs and AI stack for training and inference either way, and its software stack also dominates AI. Simply put, Nvidia's business model doesn't depend on selling a model. In other words, commodity LLMs are fine for Nvidia and for most use cases, including yours.

Good enough and cheap enough

Huang's comments aren't that surprising given that enterprises are customizing commodity models with their proprietary data. One of the bigger announcements out of AWS re:Invent revolved around easy customization of its Nova models. Nvidia’s software and models are being integrated into Palantir, ServiceNow and Siemens. ServiceNow used Nvidia Nemotron to build its Apriel Nemotron 15B reasoning model, aimed at lower-cost, lower-latency agentic AI. Siemens expanded its Nvidia partnership, which includes integration of Nemotron models.

“We built on Nvidia Nemotron for the next generation of our platform, which enables customers to do extraordinary things with large language model power at a fraction of the big model cost, zero latency, total security, no hallucination and a cost-effective ROI,” said ServiceNow CEO Bill McDermott, on the company’s third quarter earnings call.

Although software vendors are leveraging Nvidia’s Nemotron models, making most CxOs users by default, there are also signs that enterprises are adopting Nvidia and open models directly. Caterpillar outlined AI plans that include Nvidia Nemotron and Qwen3 models, as did PepsiCo with its digital twin efforts via Siemens. Hyundai said last year that it was leveraging Nvidia Nemotron models.

Salesforce CEO Marc Benioff also noted that LLMs are commoditizing. He said in December: "We use all of the large language models. They're all great. We love all of them. We love all of our children, but they're also all just commodities, and we can have the choice of choosing whatever one we want, whether it's OpenAI or Gemini or Anthropic or there's other open-source ones. They're all very good at this point. So, we can swap them in and out. The lowest cost one is the best one for us, making us basically the top user of these foundation models."

Benioff’s mantra applies to enterprises too: The lowest-cost one is the best one.

What's in it for you?

I'd argue that few, if any, enterprise use cases will require a bleeding-edge LLM. And if you can wait six months for an open-source option to catch up (likely from Nvidia at this point), why would you blow your cost curve on a high-end model?

You can use a series of open models to form an agentic system. The whole is greater than the sum of its parts, and the parts need to be cheaper.

You'll obviously have to evaluate open-source options, commoditized LLMs and cheaper models, and gauge how easily each can be customized with your data, but there should be a high bar for going proprietary, where you just might get locked in.

It's unclear what this will mean for the likes of OpenAI, Anthropic or Google with Gemini, but that's not your problem. Your job is to drive AI returns, and that will increasingly mean open-source and commoditized models.