AI Forum Washington, DC 2025: Everything we learned

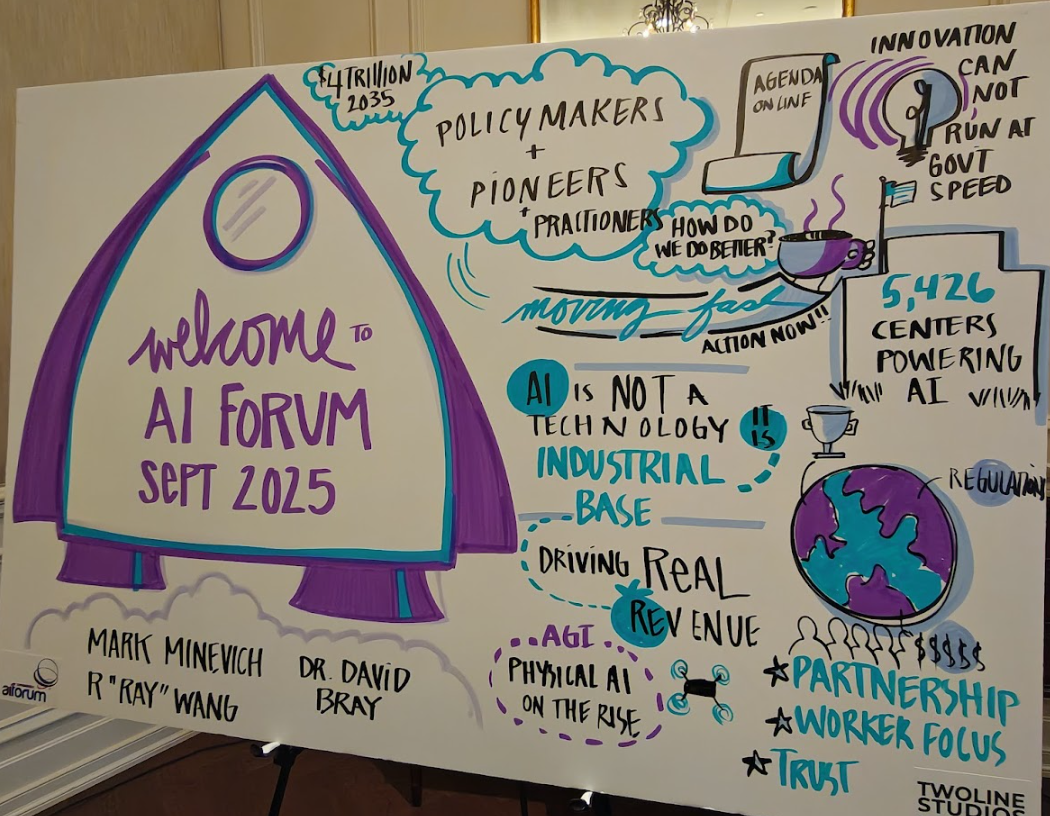

Constellation Research’s AI Forum in Washington DC featured 19 sessions, a lineup of AI thought leaders and practitioners, and a community drinking from a firehose.

Here’s a look at the takeaways.

- For AI agents to work, focus on business outcomes, ROI not technology

- Google DeepMind’s Danenberg on emerging LLM trends to watch

- Soul of the Machine: AI proof of concepts a waste of time

AI as a 1960s-ish moonshot?

As you would expect at an AI Forum held in Washington DC, there was a good bit of talk about AI as a battle between the West and China and the need for more power and less regulation.

Key points from the sessions:

- Data centers are becoming critical national infrastructure, with AI workloads expected to consume one-third of capacity. The global race for compute capacity requires 300 gigawatts in 4.5 years, with power demand doubling every 100 days. Countries need comprehensive digital transformation strategies to remain competitive.

- AI is viewed as "a new industrial base for the United States" that will determine whether America maintains global leadership or surrenders it. Speakers emphasized that leadership in AI is "never permanent" and the pace has gone "supersonic," making this a defense perimeter issue rather than just an economic opportunity.

- The fragmented regulatory approach in the US contrasts with Europe's more restrictive AI Act, which is driving companies to relocate operations. The need for balanced policies that encourage innovation while providing appropriate guardrails remains a central challenge.

- Multiple panelists agreed that AI will affect jobs and that no government has an answer for the resulting job losses.

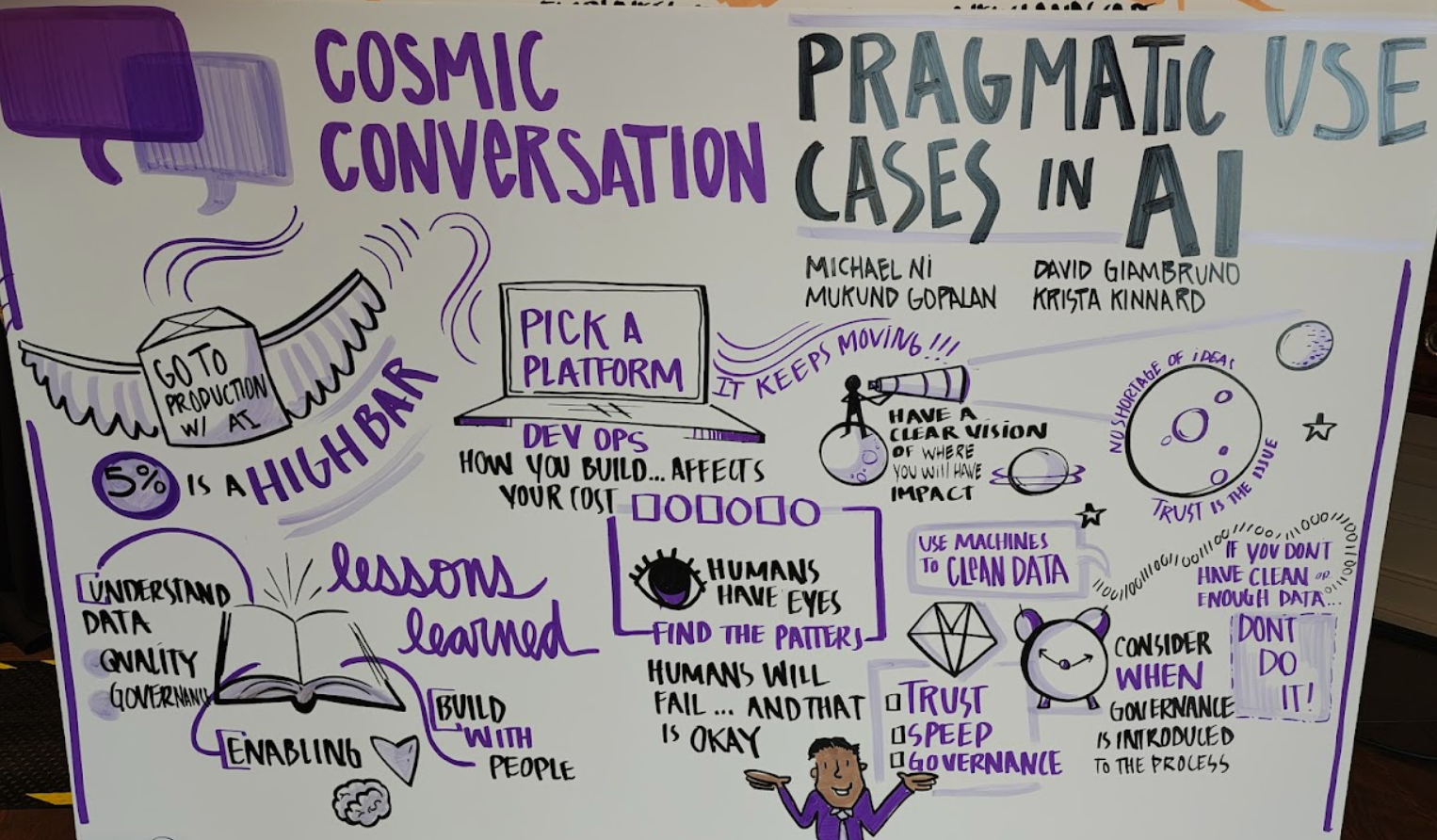

Pragmatic use cases

David Giambruno, CEO of Nucleaus, has seen his share of technology transformations. We covered Giambruno's approach to cutting IT costs last year.

To roll out real AI use cases, you have to speak to value first, said Giambruno. You also have to figure out how you're going to build and on what platform.

"How you build matters both in cost, speed to value, and how much glass you want to chew," he said. In other words, pick one platform and operating model and run.

Once that platform is picked, give developers a safe place to experiment and see what's possible.

Mukund Gopalan, Global Chief Data Officer at Ingram Micro, said every use case for AI needs to have "a clear line of sight to the top line or bottom line."

Gopalan said every use case is different, but the guiding principle is that each one needs to save costs, drive revenue or save time.

Scott Gnau, Vice President of Data Platforms at InterSystems, cited a use case that did all three: ambient listening AI that plugs into electronic health record workflows. "A physician could have a conversation, look a patient in the eye and have everything captured and get a list of recommendations from an AI agent," said Gnau. "This use case takes an existing process and makes it fully optimized yet human."

Use cases that turned up on a panel:

- Data cleansing and finding out where sensitive data resides.

- Data engineering.

- Pulling logic out of stored procedures.

- Transforming legacy applications with AI.

- Knowledge management applications.

Nilanjan Sengupta, SVP / Industry Market Director, Public Sector and Healthcare, Americas at Thoughtworks, said the software development lifecycle is a clear use case for AI agents. "The main trend we're seeing is legacy modernization across the entire enterprise," he said.

Anand Iyer, Chief AI Officer at Welldoc, said his company has created a large sensor model that takes sensor data and uses it to predict glucose values in the hours ahead. "When we think about where healthcare is headed, a lot of us are trying to get to the prevention piece," said Iyer.

Peter Danenberg, a senior software engineer at Google's DeepMind who leads rapid prototyping for Gemini, said enterprises have been expanding the use case roster. According to Danenberg, companies have shifted from reluctance to adoption in how they use foundation models. Companies are focusing on low-hanging fruit for use cases, but these add up. "Anything where you need to extract structured data from unstructured data is beautiful low hanging fruit you can get started with," he said.

Proofs of concept are widely panned

If there was a punching bag at AI Forum Washington DC, it was the proof of concept.

CxOs repeatedly panned POCs as an excuse for not doing the work upfront, a drain on funds and a source of rabbit holes. "Before you get to the POC we often trip over ourselves with understanding our data," said one federal government AI leader. "Rather than doing POCs, do a discovery sprint for AI and it will quickly unveil where the holes in your data are."

Sunil Karkera, founder of Soul of the Machine, is leveraging agentic AI to outpace much larger companies. "We solve boring problems and it's exciting," said Karkera. He also doesn't believe in proofs of concept and pilots. Prototypes can be created in the first customer meeting and move rapidly to production. "We are using an entirely end to end AI toolchain," said Karkera. "Vibe coding is about 10% to 20% in the prototyping phase. Then it's basically deep architecture. Engineering AI is really hard because most of the work is context engineering and it's not straightforward."

Are chief AI officers a thing?

Take a room with a few chief AI officers and ask them whether there's staying power in their titles and you're likely to get some interesting answers.

The takeaways from a panel:

- The chief AI officer role is needed now but will eventually be absorbed into the organization's structure.

- The CAIO role revolves around a centralized approach and will go away once AI is decentralized across an organization.

- CIOs will need to work with CAIOs for the foreseeable future on frameworks, tools, platforms and governance.

- Enterprises with CAIOs need to balance business acumen and technical proficiency.

- It's not a vanity title...yet.

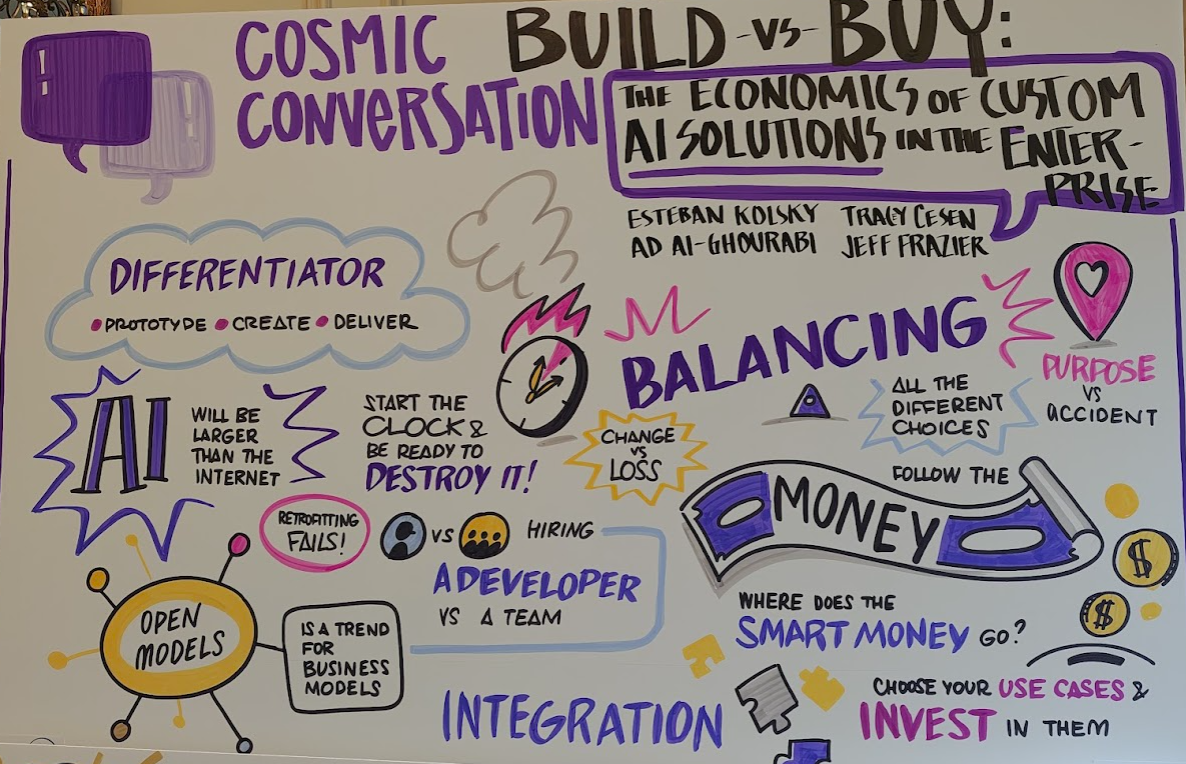

Build vs. Buy

When it came to building AI applications, CxOs at the AI Forum were split on build vs. buy. AD Al-Ghourabi, a senior technology leader, said the right answer is to build and buy. "Buy for parity and build for differentiation," he said. "A lot of AI capabilities and LLMs are now commodities, but anything between your data and a decision is your differentiator and core."

In recent years, buying from a big vendor offered more predictability than building or assembling best-of-breed tools. AI has changed that equation. You can prototype, test, build and deliver in the time it takes to get through a large tech vendor's procurement cycle.

Others argued that enterprises should buy and push their vendors to innovate, noting that there's a huge gap between prototype and production.

Tracey Cesen, Founder & CEO of Forever Human.ai, said the problem with building is that "software is a living, breathing organism so it requires care and feeding." That care and feeding also means you continuously question whether it should have been built.

In the end, the buy vs. build debate boils down to flexibility. Don't get locked into an "ERP data prison" or a single delivery model with one cloud or AI vendor. Enterprise buyers need to take a portfolio approach and acquire components that let them stay flexible and experiment. They should also tier vendors based on approaches and business priorities.

Nicolai Wadstrom, Partner, Co-Head of Ares Venture Capital and AI Innovation Groups at Ares Management, said the answer to the buy vs. build debate is really to do both.

"Build vs buy is wrong because you need to build, partner and buy," said Wadstrom. "You want to buy things commoditized. You want to build things where you can pour in proprietary knowledge and build a moat. And when you need higher skills you partner. The complacent thinking of a traditional CIO approach where I buy someone else's technology roadmap is over. You're not going to be competitive. Understand the drivers and competitors, define your problem, opportunity landscape and drive a technology roadmap."

On-premises still matters

CxOs noted that the conversation around AI often assumes cloud computing. The reality is that on-premises deployments may deliver better returns as inference becomes the main AI workload.

"Don't discard on-premises. A lot of people are doing AI on-premises and focused on it," said one CxO.

Why you shouldn't discount on-premises AI:

- It's more effective for small models.

- Cloud costs can add up.

- On-premises AI may make more sense in terms of operations, privacy and security.

- Edge use cases are likely to become a bigger part of the AI landscape.

- There's a continuum of AI deployment models.

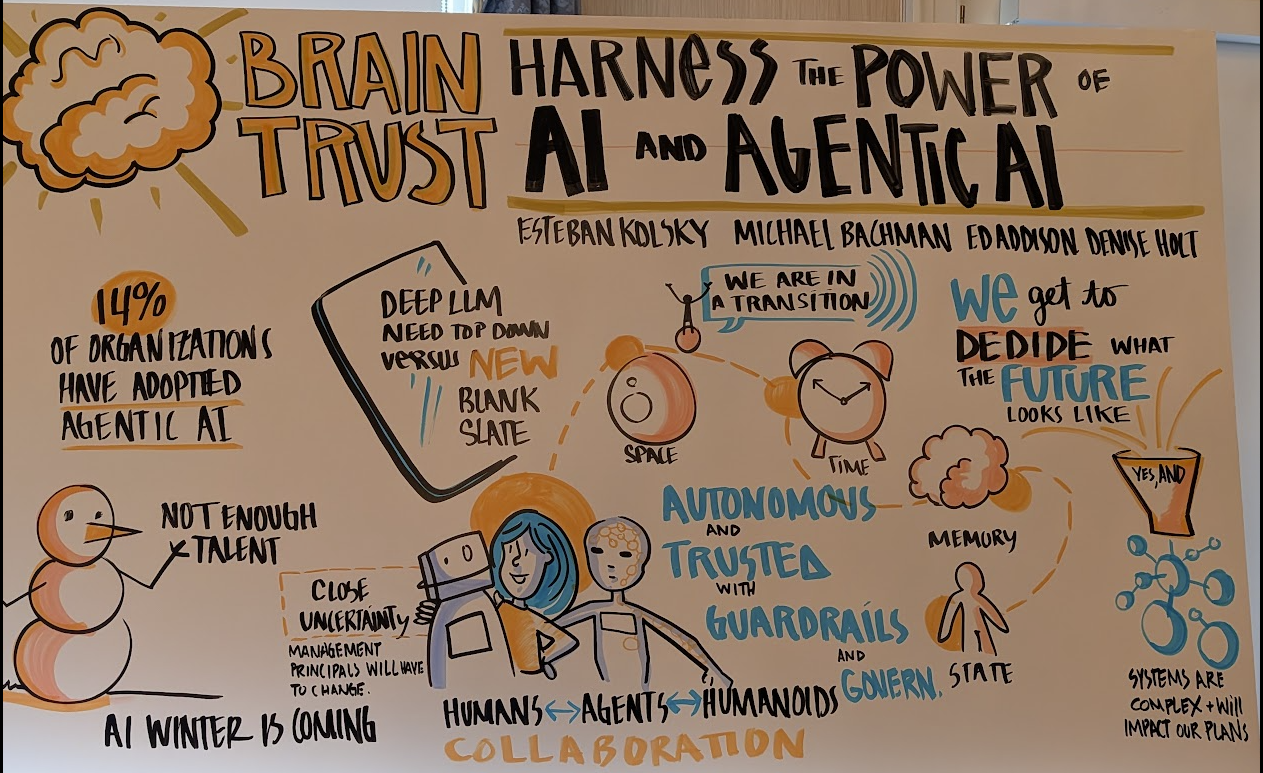

Future of work

The future of work was hotly debated. Most CxOs agreed that corporations will use fewer employees and more AI agents and robots. The impact on education, income and society will be large.

A few moving parts to ponder:

- New management structures will need to emerge to manage humans and digital workers.

- Governments aren't addressing AI's impact on jobs but will need to soon, within the next 12 to 18 months.

- Education will have to evolve and public institutions aren't prepared. Look for personalized education programs to emerge powered by AI.

- Professional training will become more important than university education.

- Some attendees noted that humans have always found new roles amid new technology trends.

- If AI uplevels the workforce you’ll see two side effects: First, everyone will move to the median in terms of performance. Second, you’ll have a shortage of experts since few workers will actually have the 10,000 hours required to be an expert.

Looming questions

In the conversations between panels, a few looming questions surfaced. These are worth some rumination.

- Have LLMs hit the wall? In a few talks, it was noted that LLMs have already ingested all publicly available human data. Synthetic data leads to degradation over time as it becomes further removed from the original.

- Are we in an AI bubble? We covered this one before, but the worries aren’t going anywhere. See: Enterprise AI: It's all about the proprietary data | Watercooler debate: Are we in an AI bubble?

- Is our brute force compute approach misguided? In the US, the running assumption is that trillions of dollars, data centers the size of Manhattan and millions of GPUs are the way to get to AI nirvana. However, there has to be more elegant engineering and innovation out there. Are we mired in AI factory groupthink?