Sakana AI: Think LLM dream teams, not single models

Enterprises may want to start thinking of large language models (LLMs) as ensemble casts that can combine knowledge and reasoning to complete tasks, according to Japanese AI lab Sakana AI.

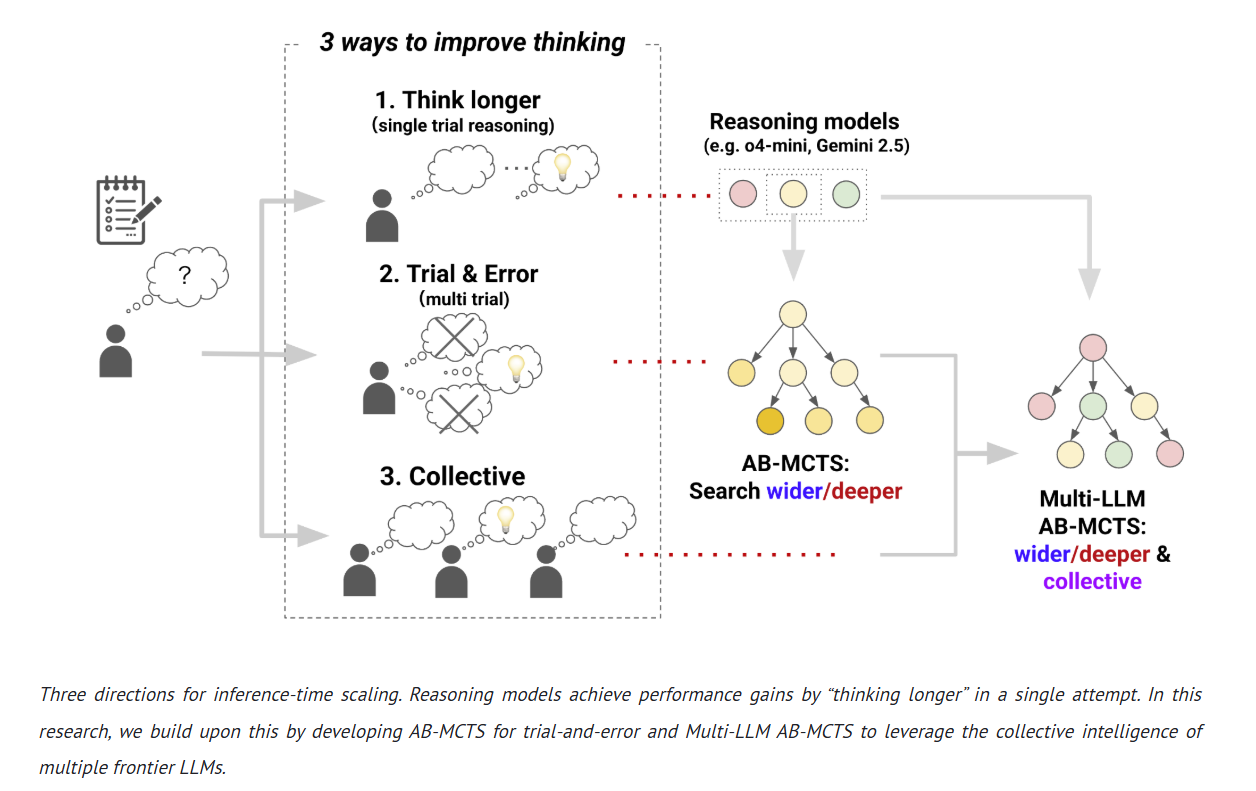

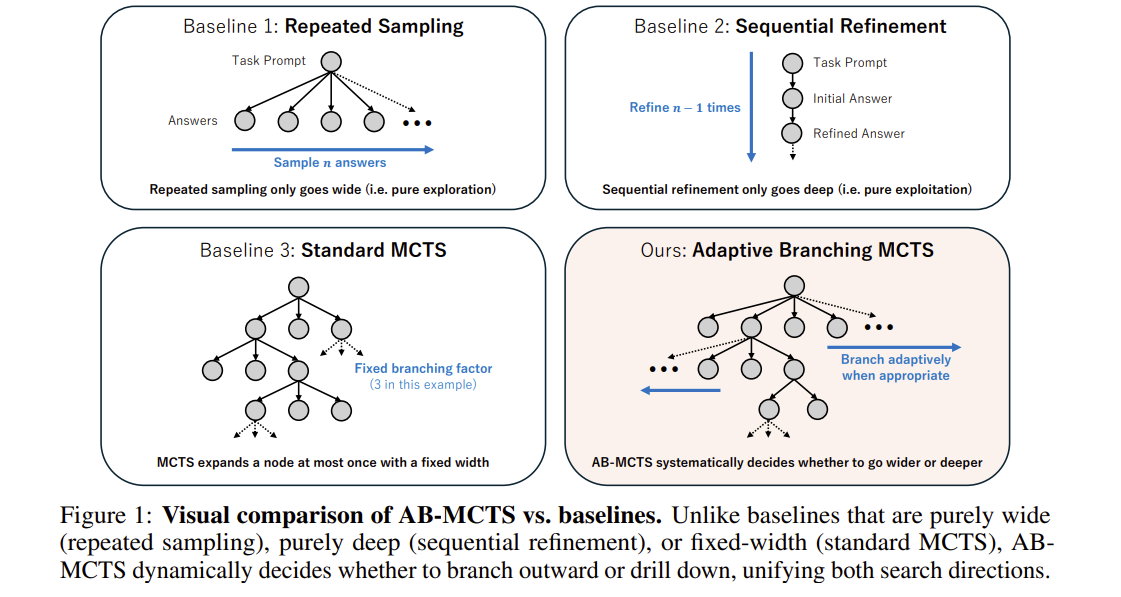

In a research paper, Sakana AI outlined a method called Multi-LLM AB-MCTS (Adaptive Branching Monte Carlo Tree Search) in which a collection of LLMs cooperate, work by trial and error and leverage each other's strengths to solve complex problems.

In a post, Sakana AI said:

"Frontier AI models like ChatGPT, Gemini, Grok, and DeepSeek are evolving at a breathtaking pace amidst fierce competition. However, no matter how advanced they become, each model retains its own individuality stemming from its unique training data and methods. We see these biases and varied aptitudes not as limitations, but as precious resources for creating collective intelligence. Just as a dream team of diverse human experts tackles complex problems, AIs should also collaborate by bringing their unique strengths to the table."

Sakana AI said AB-MCTS is a method for inference-time scaling that enables frontier AIs to cooperate and revisit problems and solutions. Sakana AI released the algorithm as an open-source framework called TreeQuest, which has a flexible API that lets users apply AB-MCTS to tasks with multiple LLMs and custom scoring.
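To make the idea concrete, here is a minimal toy sketch of the loop described above: several models, trial and error, and a custom scoring function. It is not TreeQuest's API and not Sakana AI's implementation. The "models" are hypothetical stub functions standing in for LLM calls, the task (finding a hidden number) is invented for illustration, and the use of Thompson sampling to pick both the model and whether to go "wider" (a fresh attempt) or "deeper" (refine the best answer so far) is an assumption about how such a choice could be made.

```python
import random
from dataclasses import dataclass
from typing import Callable, Optional

TARGET = 42  # toy task (hypothetical): find this hidden number


def score(answer: int) -> float:
    """Custom scoring function in [0, 1]; 1.0 means the task is solved."""
    return max(0.0, 1.0 - abs(answer - TARGET) / 100)


# A "model" takes an optional earlier answer to build on and returns a new one.
Model = Callable[[Optional[int], random.Random], int]


def bold_guesser(parent: Optional[int], rng: random.Random) -> int:
    # Stub for a model that ignores earlier attempts and tries something new.
    return rng.randint(0, 100)


def careful_refiner(parent: Optional[int], rng: random.Random) -> int:
    # Stub for a model that is weak from scratch but good at improving a hint.
    if parent is None:
        return 0
    step = rng.randint(1, 10)
    return max((parent - step, parent + step), key=score)


MODELS: dict[str, Model] = {"bold": bold_guesser, "careful": careful_refiner}


@dataclass
class Node:
    answer: int
    score: float
    model: str
    parent: Optional["Node"] = None


def search(models: dict[str, Model], budget: int, seed: int = 0) -> list[Node]:
    rng = random.Random(seed)
    # One bandit arm per (model, direction) pair, each with a Beta(1, 1) prior.
    arms = [(name, mode) for name in models for mode in ("wider", "deeper")]
    alpha = {arm: 1.0 for arm in arms}
    beta = {arm: 1.0 for arm in arms}
    nodes: list[Node] = []
    for _ in range(budget):
        # Thompson sampling: draw once from each posterior, take the best draw.
        name, mode = max(arms, key=lambda a: rng.betavariate(alpha[a], beta[a]))
        best = max(nodes, key=lambda n: n.score, default=None)
        # "deeper" refines the best node so far; "wider" starts from scratch.
        parent = best if mode == "deeper" else None
        answer = models[name](parent.answer if parent else None, rng)
        s = score(answer)
        # Treat the score as a fractional success for the arm that produced it.
        alpha[(name, mode)] += s
        beta[(name, mode)] += 1.0 - s
        nodes.append(Node(answer, s, name, parent))
    return nodes


nodes = search(MODELS, budget=30)
best = max(nodes, key=lambda n: n.score)
print(best.model, best.answer, round(best.score, 2))
```

Following `best.parent` back through the tree shows which model produced each intermediate answer, which is where one model's imperfect attempt serving as another model's starting point would show up. In a real setup the stubs would be replaced by calls to different LLM providers and `score` by a task-specific evaluator.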

What's interesting is that Sakana AI gets out of that zero-sum LLM argument. The companies behind LLM training would like you to think there's one model to rule them all. And you'd do the same if you were spending so much on training models and wanted to lock in customers for scale and returns.

Sakana AI's deceptively simple solution can only come from a company that's not trying to play LLM leapfrog every few minutes. The power of AI is in the ability to maximize the potential of each LLM. Sakana AI said:

"We saw examples where problems that were unsolvable by any single LLM were solved by combining multiple LLMs. This went beyond simply assigning the best LLM to each problem. In (an) example, even though the solution initially generated by o4-mini was incorrect, DeepSeek-R1-0528 and Gemini-2.5-Pro were able to use it as a hint to arrive at the correct solution in the next step. This demonstrates that Multi-LLM AB-MCTS can flexibly combine frontier models to solve previously unsolvable problems, pushing the limits of what is achievable by using LLMs as a collective intelligence."

A few thoughts:

- Sakana AI's research, and its move to emphasize collective intelligence over one LLM and stack, are critical to enterprises that need to create architectures that don't lock them into one provider.

- AB-MCTS could play into what agentic AI needs to become to be effective, and could complement emerging standards such as Model Context Protocol (MCP) and Agent2Agent.

- If combining multiple models to solve problems becomes frictionless, the costs will plunge. Will you need to pay up for OpenAI when you can leverage LLMs like DeepSeek combined with Gemini and a few others?

- Enterprises may want to start thinking about how to build decision engines instead of an overall AI stack.

- We could see a scenario where a collective of LLMs achieves superintelligence before any one model or provider. If that scenario plays out, can LLM giants maintain valuations?

- In the long run, the value in AI may not be in the infrastructure or foundational models, but in the architecture and approaches.
