OpenAI's open-weight models gain AWS distribution: Why it matters

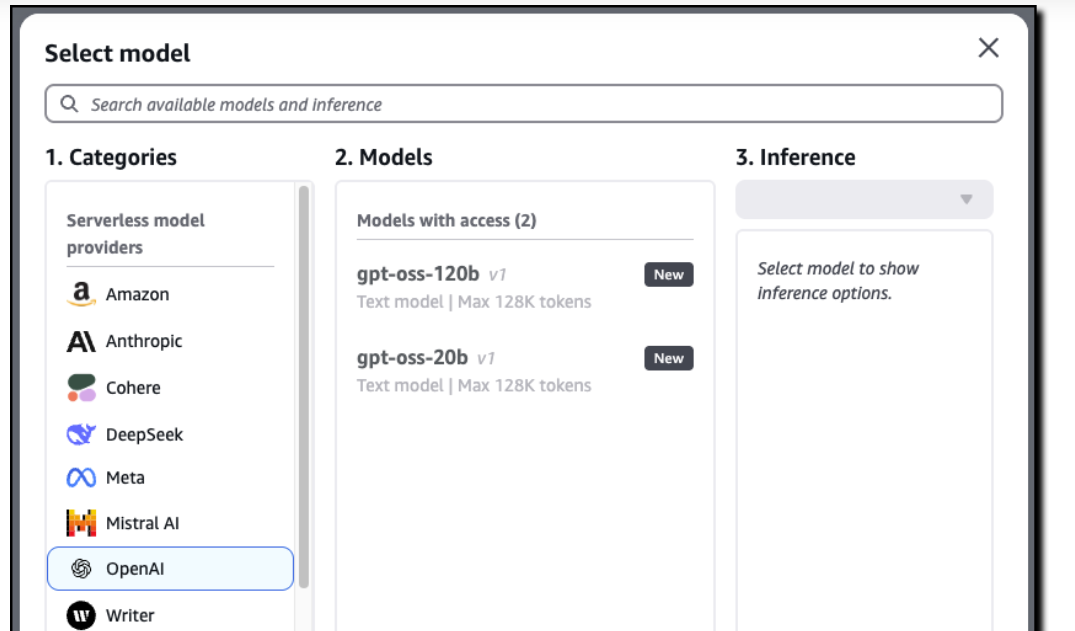

OpenAI released two open-weight models, gpt-oss-120b and gpt-oss-20b, but the real news is distribution. The two models are widely available, and that includes Amazon Web Services, which is offering OpenAI models for the first time.

The new OpenAI models, which can run locally, on-device and through third-party providers, are, not surprisingly, available on Microsoft Azure. They are also available on Hugging Face, vLLM, Ollama, llama.cpp, LM Studio, AWS, Fireworks, Together AI, Baseten, Databricks, Vercel, Cloudflare and OpenRouter.

For good measure, OpenAI said it worked with Nvidia, AMD, Cerebras and Groq to optimize the models.

In a blog post, AWS said gpt-oss-120b and gpt-oss-20b will be available in Amazon Bedrock and Amazon SageMaker JumpStart. The models will also be available in frameworks for AI agent workflows such as AWS' Strands Agents.

The models and why they matter

According to OpenAI, the gpt-oss-120b model "achieves near-parity" with OpenAI o4-mini on core reasoning benchmarks while running on a single 80 GB GPU. The gpt-oss-20b model delivers results similar to OpenAI o3-mini and can run on devices with 16 GB of memory for local inference.

OpenAI also outlined its safety efforts and early development work with AI Sweden, Orange and Snowflake. The model card has all the details, but the primary takeaway is that OpenAI's open-weight models perform well.

You'd be forgiven if your eyes glazed over a bit at OpenAI's latest models. Alibaba's Qwen team just released a new image model, Anthropic released Claude Opus 4.1, an upgrade to Opus 4, and Google DeepMind launched Genie 3, which can generate 3D worlds.

Simply put, it's another day, another model improvement complete with various charts of benchmarks.

The big picture for OpenAI revolves around distribution and enterprise throughput. The AWS availability is a win for OpenAI: it may open some doors and, more importantly, gives enterprises another option.

The LLM game has become one of spheres of influence. Microsoft Azure offers plenty of model choices but is clearly aligned with OpenAI. Amazon is a big investor in Anthropic. Google Cloud is all about Gemini but also offers a lot of choice, including Anthropic's models. To date, OpenAI models have been available either directly from OpenAI or through Microsoft.

Add it up and OpenAI has to be available on multiple clouds, so the open-weight move makes a lot of sense.

Here's why: OpenAI can't afford to lose the enterprise, because that's what will pay the bills. Sure, OpenAI may upend Google in search. Yes, there are even OpenAI devices on tap for consumers. But the real dough will be in the enterprise.

The problem? Enterprises are going to value price-performance and practical applications with real guardrails. That's why I find AWS' practical approach so interesting. It may cause some consternation among analyst types, but the practical appeal is what CxOs want and need if they're going to take AI agents to production.

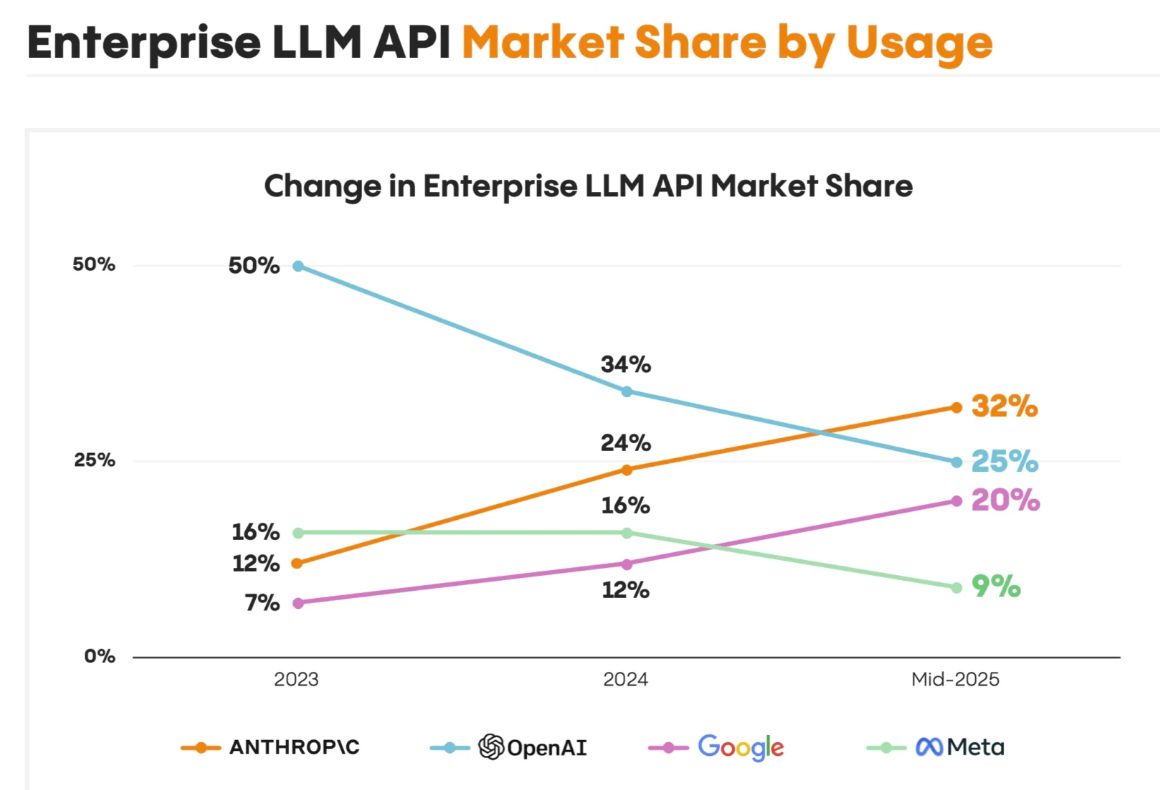

According to Menlo Ventures, Anthropic has a 32% share of enterprise AI, followed by OpenAI at 25% and Google at 20%. Google's Gemini models are also spreading across the enterprise as Google Cloud makes a big play to be the AI cloud layer. In a few months, it's highly likely that enterprise model choices will revolve around Anthropic and Google's Gemini family. We're in the era of good-enough LLMs, and enterprises simply want the best model for the use case.

OpenAI's launch of open-weight models with broader distribution can keep it in the game. Also keep in mind that enterprise AI spending is going to revolve around inference.

We can haggle over benchmarks and performance all day, but, as with all things enterprise, distribution matters.