Arm launches Lumex, an AI platform for devices with data center implications

Arm launches Lumex, its latest platform for on-device AI and a big bet that inference will move from the cloud to the edge in hybrid deployments.

Lumex is designed for premium mobile devices, but will also play into AI data center workloads. The more AI inferencing can be moved to the devices in your pocket, the less enterprises and developers will have to invest in cloud compute and AI infrastructure.

Chris Bergey, VP and GM of Arm's client business, said advances in large language models (LLMs) and agentic AI are turning mobile devices into companions and raising user expectations for the experience.

"We have moved from AI being a parlor trick to influencing how things get done. People of all ages are using these experiences every day, embedded seamlessly into apps, devices and systems they rely on, but we have only started to see how AI will shape our future expectations," said Bergey in a briefing. Bergey also outlined Lumex in a blog post.

He added that AI is "too essential, too interactive and too valuable" to be derailed by network glitches. When instant response is the expectation, local compute matters. "AI has to move to the device. Why? Because relying on Cloud to scale isn't sustainable. It's too expensive for developers and too slow for users and too concerning for privacy," said Bergey.

Arm is targeting smartphones and consumer devices with Lumex, but the company also has broad data center ambitions. Bergey added that the most advanced models will be in the cloud, but workloads that can move down to the device will.

- Softbank buys Ampere for $6.5 billion, says complements Arm, AI infrastructure push

- Qualcomm, Arm cheer cheaper models, AI inference at the edge

Indeed, Geraint North, Fellow of AI and Developer Platforms at Arm, said AI costs are going to matter. North said:

"One of the things with developers is that everyone is in a user acquisition phase right now before they're in the 'we've got to make this profitable' phase. Developers are going to say, 'I can't just spend all this on cloud compute resource' and ask how much they can offload? (Offloading compute) will become increasingly important once people are under pressure for profitability, which is not necessarily the case for many of the AI app developers right now."

The likely evolution is that small models run on-device while advanced models stay in the cloud, with enterprises and developers optimizing the split for performance and cost.
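As a rough illustration of that split, here is a minimal Python sketch of a hybrid router. Everything in it is an assumption made for illustration: the `Request` fields, the `ON_DEVICE_MAX_TOKENS` limit and the `CLOUD_COST_PER_1K_TOKENS` price are hypothetical and do not come from Arm or from any real SDK.

```python
from dataclasses import dataclass

# Hypothetical values, chosen only for illustration.
ON_DEVICE_MAX_TOKENS = 2048       # assumed context limit of a small local model
CLOUD_COST_PER_1K_TOKENS = 0.002  # assumed cloud price in dollars


@dataclass
class Request:
    prompt_tokens: int            # size of the prompt
    needs_advanced_model: bool    # task requires a large cloud-hosted model
    network_available: bool = True


def route(req: Request) -> str:
    """Return 'device', 'cloud' or 'deferred' for a single request."""
    fits_on_device = (
        req.prompt_tokens <= ON_DEVICE_MAX_TOKENS
        and not req.needs_advanced_model
    )
    if fits_on_device:
        return "device"    # no cloud cost, no network round trip
    if req.network_available:
        return "cloud"     # too large or too complex for the local model
    return "deferred"      # cannot run locally and no network right now


def cloud_spend(requests: list[Request]) -> float:
    """Estimate cloud cost for the requests that are routed to the cloud."""
    return sum(
        r.prompt_tokens / 1000 * CLOUD_COST_PER_1K_TOKENS
        for r in requests
        if route(r) == "cloud"
    )
```

In this sketch, every request the local model can handle drops out of `cloud_spend` entirely, which is the cost pressure North describes. A real router would also weigh latency, battery and privacy requirements.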

Lumex tech details

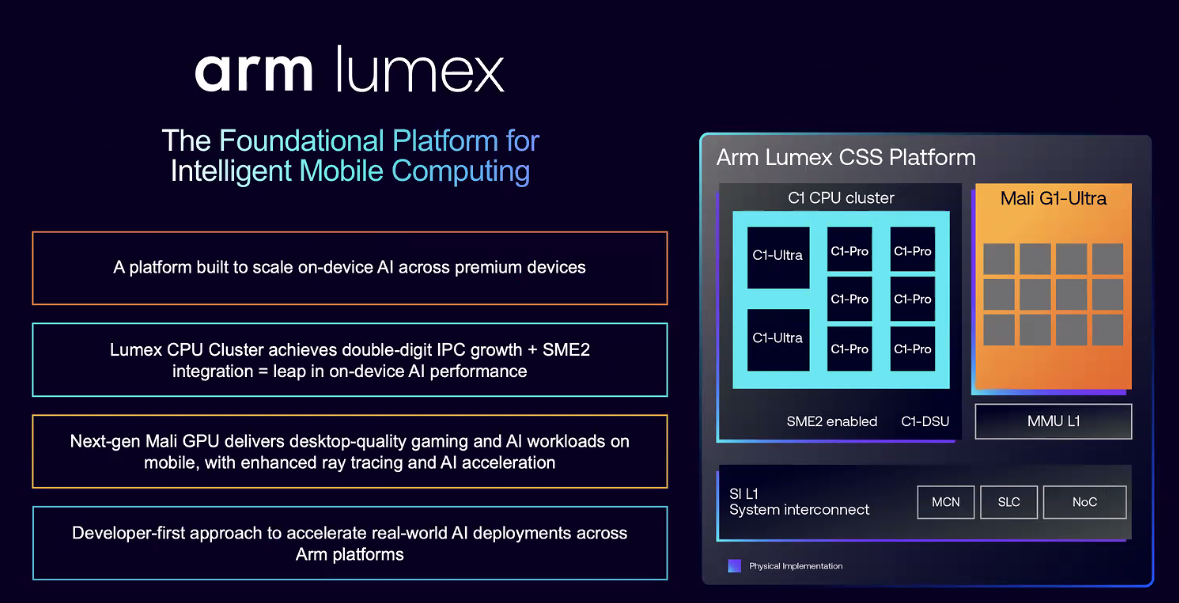

Lumex represents Arm's platform strategy: an "AI-first platform design" that the company says delivers better performance, tighter integration and a more scalable architecture.

"We're talking about tightly integrated compute, software and tools optimized for the next generation of mobile workloads, and it's built for AI with new architectural features and optimized implementations for the best performance," said James McNiven, who leads Arm's product management team for the company's client business.

The platform approach also lets Arm integrate its own technology more quickly.

Key items:

- Lumex has SME2 integration throughout. SME2 (Scalable Matrix Extension 2) is a hardware extension of the Armv9-A architecture that accelerates matrix operations for AI inference, HPC and other intensive workloads.

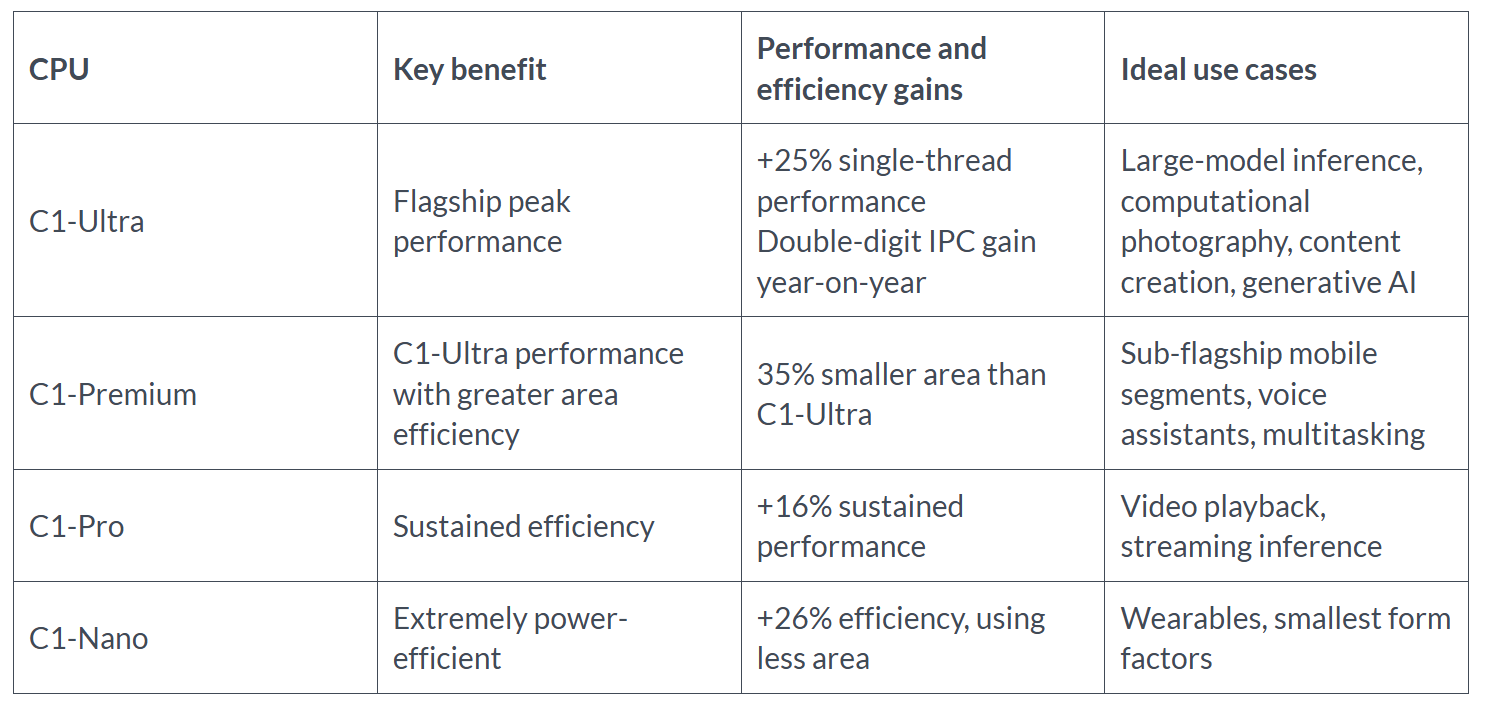

- Arm's C1 Ultra CPU provides a 25% performance gain and its C1 Pro, which is optimized for efficiency, has a 12% improvement in energy usage.

- The Mali Ultra GPU delivers a 20% gain in performance with 9% better energy efficiency as well as 20% faster AI inferencing.

- SME2 integration provides 5x acceleration in AI performance and 3x efficiency for AI experiences.

- Lumex is optimized for 3nm process technologies and can be manufactured at multiple foundries.