Microsoft launches Maia 200 as custom AI silicon accelerates

Microsoft launched its Maia 200 AI accelerator, which is now available in Azure. The company said Maia 200 delivers 30% better performance per dollar than the latest-generation hardware in its fleet today.

The company is the latest cloud hyperscaler to roll out new custom silicon for AI workloads. Google Cloud has seen strong uptake on its TPUs, which were used to train Gemini, and AWS has its Trainium and Inferentia chips. Microsoft launched the Maia 100 in 2023.

According to Microsoft, Maia 200 is deployed in select Azure instances. Highlighting how much custom silicon and price/performance matter, Microsoft said Maia 200 compares favorably to Trainium and TPUs. The upshot is that the choices beyond Nvidia are expanding.

- AWS launches AI factory service, Trainium 3 with Trainium 4 on deck

- AWS launches Graviton5 as custom silicon march continues

- Google Cloud's Ironwood ready for general availability

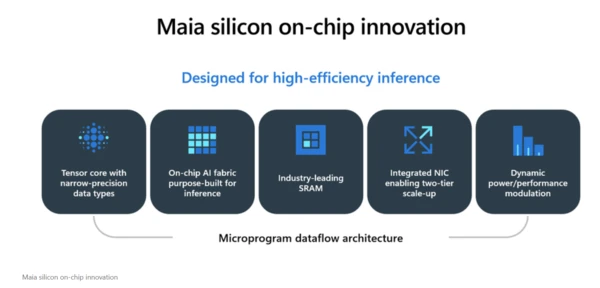

Microsoft said Maia 200 is built on Taiwan Semiconductor's 3nm process and features a redesigned memory system with 216GB of HBM3e at 7 TB/s and 272MB of on-chip SRAM. Maia 200 also includes data movement engines designed to keep the chip's compute units utilized. "This makes Maia 200 the most performant, first-party silicon from any hyperscaler, with three times the FP4 performance of the third generation Amazon Trainium, and FP8 performance above Google’s seventh generation TPU. Maia 200 is also the most efficient inference system Microsoft has ever deployed, with 30% better performance per dollar than the latest generation hardware in our fleet today," said Microsoft in a blog post.

As with most chip benchmarks, it pays to read the fine print. The high-level view is that price/performance for AI inference is critical, and enterprises are going to look to hyperscalers' private-label chips to deliver results.
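One piece of fine print worth working through: "30% better performance per dollar" is not the same as "30% cheaper." A minimal sketch of the arithmetic follows, assuming a fixed workload and treating Microsoft's 30% figure as an exact ratio. The function name `cost_reduction` is hypothetical and used only for illustration.

```python
def cost_reduction(perf_per_dollar_gain: float) -> float:
    """Fractional cost saving for a fixed amount of work.

    perf_per_dollar_gain is the fractional improvement in performance
    per dollar (0.30 means "30% better performance per dollar").
    """
    if perf_per_dollar_gain <= -1.0:
        raise ValueError("gain must be greater than -100%")
    # If each dollar buys (1 + gain) times as much work, the same work
    # costs 1 / (1 + gain) times as much as before.
    return 1.0 - 1.0 / (1.0 + perf_per_dollar_gain)


if __name__ == "__main__":
    maia_gain = 0.30  # Microsoft's stated figure, taken at face value
    print(f"Cost saving for the same work: {cost_reduction(maia_gain):.1%}")
```

Under those assumptions, a 30% gain in performance per dollar works out to roughly a 23% lower bill for the same inference work. The baseline here is Microsoft's own fleet, not a competitor's list price.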

Constellation Research analyst Holger Mueller said:

“Microsoft is back at it - custom silicon for AI - after taking an almost 3 year break. Microsoft has had a big role fueling Nvidia’s financials. Maia 200 hits all the right numbers from performance, memory, liquid cooling and the cloud infrastructure to support it. Now it is all about the rollout speed across the Azure data center landscape.”

Microsoft said Maia 200 will be used with multiple models in Microsoft Foundry and Microsoft 365 Copilot. Microsoft's Superintelligence team will also leverage Maia 200.

The company said Maia 200 is deployed in its US Central datacenter region and US West 3 region with more areas to follow. A software development kit for Maia is in preview.

Other key items to note with Maia:

- Each Maia 200 chip delivers more than 10 petaFLOPS in 4-bit precision (FP4) and over 5 petaFLOPS of 8-bit (FP8) performance within a 750W SoC TDP envelope.

- Maia 200 AI accelerators are optimized for inference, with 2.8 TB/s of bidirectional scale-up bandwidth.

- Clusters can be built with up to 6,144 accelerators.

- Microsoft said Maia 200 can run today's largest models, with headroom to accommodate bigger ones in the future.
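To put the spec sheet in perspective, the per-chip numbers can be combined into a few derived figures. The sketch below is back-of-the-envelope arithmetic only. It assumes the stated "more than" values as lower bounds, uses decimal units, and treats every figure as a theoretical peak, not measured throughput. The function and key names are hypothetical.

```python
# Per-chip figures as stated by Microsoft (peak values, lower bounds).
PFLOPS_FP4 = 10.0        # "more than 10 petaFLOPS" in FP4
PFLOPS_FP8 = 5.0         # "over 5 petaFLOPS" in FP8
TDP_WATTS = 750.0        # SoC TDP envelope
HBM_GB = 216.0           # HBM3e capacity
HBM_TBPS = 7.0           # HBM3e bandwidth
MAX_ACCELERATORS = 6144  # maximum stated cluster size


def derived_figures() -> dict:
    """Combine the stated specs into per-watt and cluster-level figures."""
    return {
        # Peak compute per watt of chip TDP.
        "fp4_tflops_per_watt": PFLOPS_FP4 * 1000 / TDP_WATTS,
        "fp8_tflops_per_watt": PFLOPS_FP8 * 1000 / TDP_WATTS,
        # Peak FP4 FLOPs available per byte read from HBM.
        "fp4_flops_per_hbm_byte": (PFLOPS_FP4 * 1e15) / (HBM_TBPS * 1e12),
        # Aggregates for a maximum-size cluster.
        "cluster_fp4_exaflops": PFLOPS_FP4 * MAX_ACCELERATORS / 1000,
        "cluster_hbm_tb": HBM_GB * MAX_ACCELERATORS / 1000,
        # Chip TDP only: excludes hosts, networking and cooling.
        "cluster_chip_power_mw": TDP_WATTS * MAX_ACCELERATORS / 1e6,
    }


if __name__ == "__main__":
    for name, value in derived_figures().items():
        print(f"{name}: {value:,.2f}")
```

On these assumptions, a full 6,144-accelerator cluster would offer a peak of about 61 exaFLOPS in FP4 and roughly 1.3 PB of HBM3e, with the chips alone drawing about 4.6 MW. Real inference throughput depends on the model, software stack and utilization.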