DigitalOcean CEO Paddy Srinivasan on AI natives, inferencing, agentic AI adoption

DigitalOcean CEO Paddy Srinivasan said that AI inferencing is becoming the dominant workload at the cloud provider, that AI native companies have the potential to scale faster and leaner than ever before, and that agentic AI is in the early innings with a lot of loose ends to tie up.

Those are some of the takeaways from my conversation with Srinivasan. DigitalOcean has more than 640,000 paying customers and most of them are developers. In terms of enterprise reach, Srinivasan said the company is focused on digital native enterprises as well as AI native startups.

- DigitalOcean highlights the rise of boutique AI cloud providers

- DigitalOcean GenAI Platform now available

Srinivasan said the goal is to make AI infrastructure simple with transparent pricing.

Here are some of the takeaways from our chat.

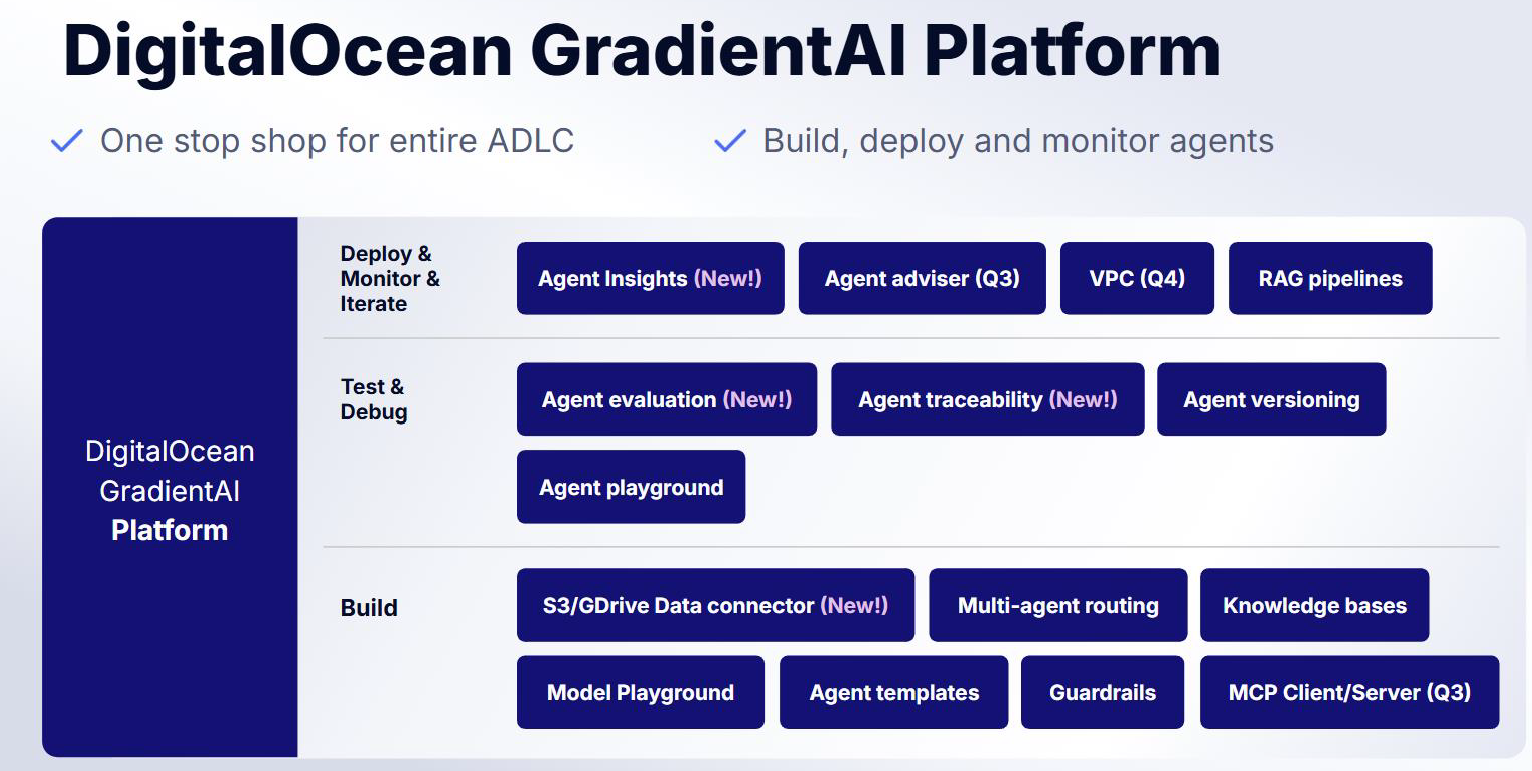

The DigitalOcean stack. Srinivasan said the company has three layers: infrastructure featuring AMD and Nvidia GPUs, its Gradient AI Platform that provides middleware and building blocks for AI, and a set of agents that provide an automated experience.

Focus on AI inferencing. "DigitalOcean focuses on inferencing, which requires a different configuration of GPUs, networking, storage, and compute resources compared to training," said Srinivasan. The CEO noted that the "half-life of GPUs for inferencing is longer, allowing for more diversity in GPU types."

The customer base. Srinivasan said there are two types of companies: AI native and digital native. "AI natives are being born in AI to solve a problem using AI as the centerpiece," he said. "Everyone else is digital native trying to use AI as part of an existing application stack."

The needs of these two types of customers are quite different. AI natives are looking to build, extend or optimize models for specific use cases and to solve business problems. "AI natives need inferencing at scale. They'll take a model, optimize it, run it on an infrastructure that is optimized for AI," said Srinivasan.

Digital natives have an existing business that's viable and are now trying to add AI. These companies are looking to introduce agents and use AI in workflows.

Srinivasan said:

"Digital natives need a platform that not only has LLMs, but also a variety of different ways to inject the data, build agents and evaluate them. They need the ability to version these agents and so forth. When the agents are running, you need the ability to have direct insights on how the agent is working. That's the platform layer."

AI native companies and scale. Srinivasan said AI native companies are scaling rapidly with far fewer developers and other staff required. AI natives can also be disruptive in that they think differently and are likely to upend traditional user interfaces. "They're scaling faster and they're scaling leaner as an AI native company," he said.

Agentic AI implementations. DigitalOcean has released an SRE agent that "can triangulate logs, identify the source of issues, and even pinpoint the specific line of code causing problems." Srinivasan explained how this is "reimagining the developer's interaction with the cloud." In the end, DigitalOcean is trying to eliminate complexity for itself and the customer.

AI agent adoption. Srinivasan said the current stage of agentic AI is "between the top of the first inning and bottom of the second inning." Adoption will happen faster than in previous technology cycles, but many key parts are still being defined in the enterprise.

LLM commoditization. Srinivasan views "the commoditization of LLMs as a catalyst for the industry, driving down the total cost of ownership and increasing adoption." He noted that "open-source LLMs have caught up with closed-source models for general-purpose inferencing needs." More: The rise of good enough LLMs