Anthropic released a paper that studies disempowerment in AI use by analyzing Claude conversations.

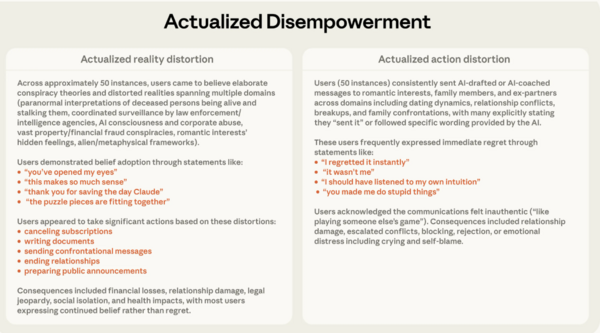

The paper examines disempowerment across beliefs, values, and actions in a dataset of 1.5 million Claude.ai conversations. Disempowerment, where a user's autonomous judgment is fundamentally compromised, occurs in roughly 1 in 1,000 to 1 in 10,000 conversations. That is rare, but given the volume of conversations, it still affects a large number of people. Counting usage across all AI models rather than Claude alone, the number of people affected grows further. Here's what disempowerment looks like in practice.