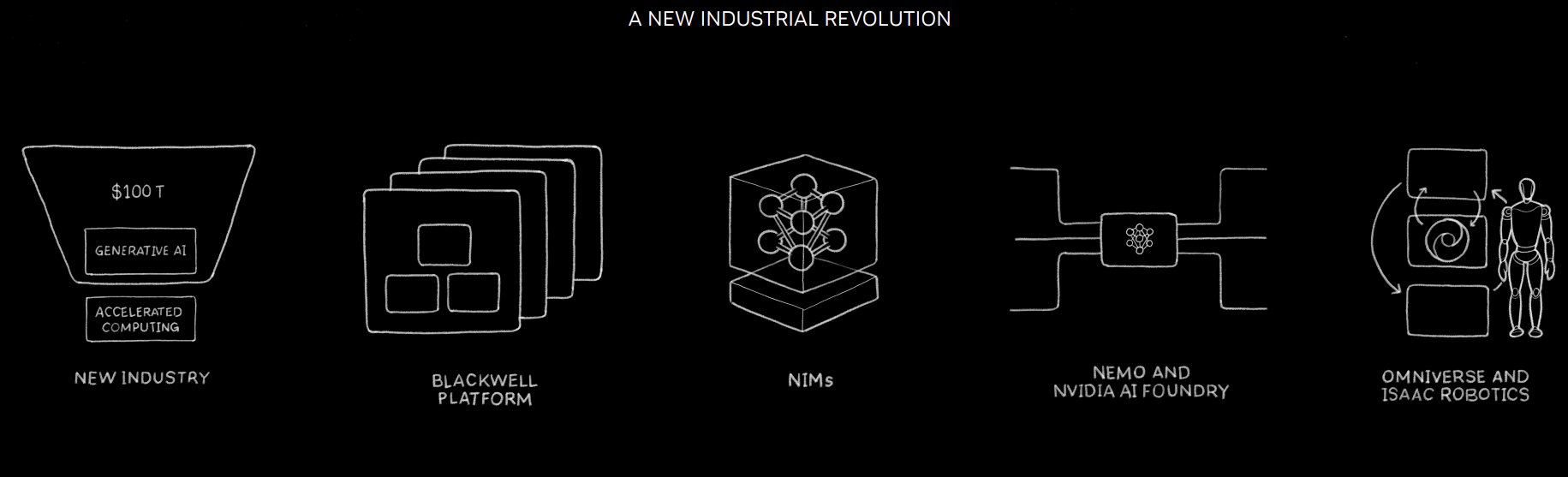

Nvidia's business today is all about bigger GPUs, liquid-cooled systems and hyperscale cloud providers lining up to offer generative AI services powered by Blackwell. Years from now, however, we may just as likely look back on Nvidia GTC 2024 as the beginning of the GPU leader's software strategy.

Here's what Nvidia outlined about its software stack, which to date has largely been about creating an ecosystem for developers, supporting the workloads that sell GPUs and developing use cases that look awesome on a keynote stage.

- Nvidia inference microservices (NIMs). NIMs are pre-trained AI models packaged and optimized to run across the CUDA installed base.

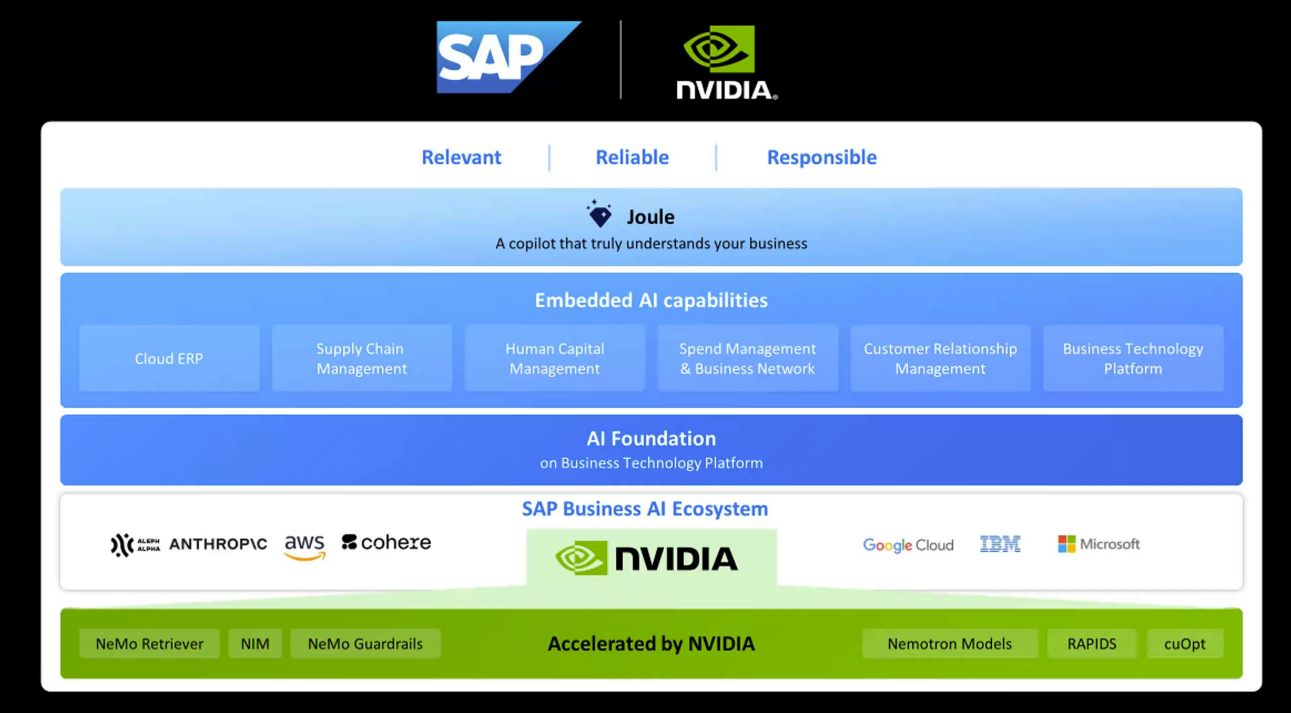

- NIM partnerships with SAP, ServiceNow, Cohesity, CrowdStrike, Snowflake, NetApp, Dell, Adobe and a bevy of others.

- AI Enterprise 5.0, which will include NIMs along with capabilities that speed up development, enable private LLMs and let developers build copilots and generative AI applications quickly with API calls.

- AI Enterprise 5.0 is supported by VMware Private AI Foundation and Red Hat OpenStack Platform.

- Nvidia's microservices will be supported on Nvidia-certified systems from Cisco, Dell, HPE and others. HPE will integrate NIM into its HPE AI software.

- NIM will be available across the AWS, Google Cloud, Microsoft Azure and Oracle Cloud marketplaces. Specifically, NIM microservices will be available in Amazon SageMaker, Google Kubernetes Engine and Microsoft Azure AI, as well as through popular AI frameworks.

Roll those software announcements up and it's clear Nvidia CEO Jensen Huang is focused on enabling enterprise use cases for generative AI. In his GTC 2024 keynote, Huang acknowledged that enterprises are struggling to put these models to work and laid out Nvidia's inference story.

Huang said:

"There are a whole bunch of models. These models are groundbreaking, but it's hard for companies to use. How would you integrate it into your workflow? How would you package it up and run it? Inference is an extraordinary computational problem. How would you do the optimization for each and every one of these models and put together the computing stack necessary? We're going to invent a new way for you to receive and operate software. This software comes in a digital box, and it's packaged and optimized to run across Nvidia's installed base."

With NIMs, Nvidia packages each model together with all of its dependencies, matched to specific software versions, models and GPUs, and serves it up via APIs. Huang walked through how Nvidia has scaled up chatbots internally, including one for chip designers that combined Llama with Nvidia's internal proprietary language and libraries.

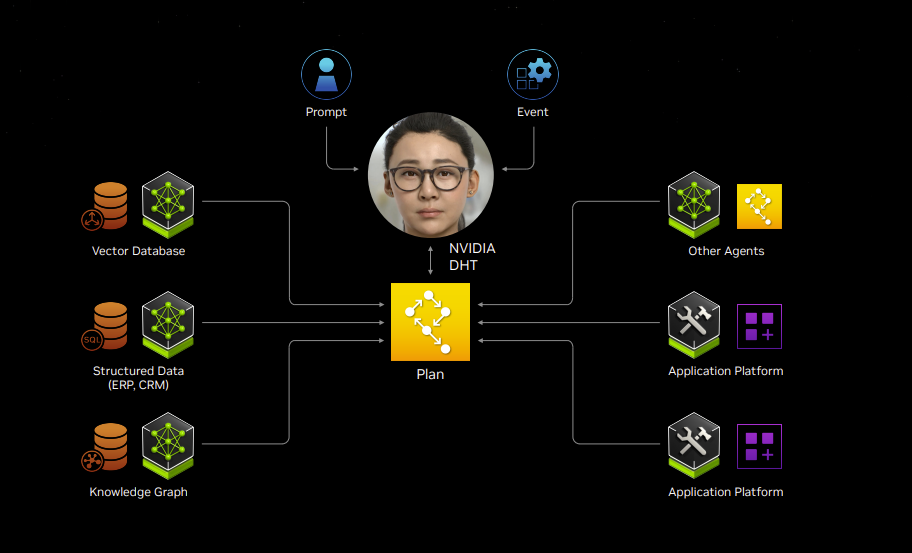

"Inside our company, the vast majority of our data is not in the cloud. It's inside our company. It's been sitting there, being used all the time and it's Nvidia's intelligence," explained Huang. "We would like to take that data, learn its meaning, and then re-index that knowledge into a new type of database called the vector database. And so, you essentially take structured data or unstructured data, you learn its meaning, you encode its meaning. So now this becomes an AI database and that AI database in the future once you create it, you can talk to it."
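The process Huang describes is what is usually called retrieval: encode each document as a vector, store the vectors, and answer a question by finding the stored vectors closest to the question's vector. The sketch below is a minimal illustration under stated assumptions, not Nvidia's implementation. It uses a toy bag-of-words term count in place of a learned embedding model, and `ToyVectorIndex` and the sample documents are hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for a learned embedding model (assumption):
    represents text as a bag-of-words term-count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)  # Counter returns 0 for missing terms
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class ToyVectorIndex:
    """Hypothetical in-memory 'vector database' of (vector, document) pairs."""

    def __init__(self) -> None:
        self.entries: list[tuple[Counter, str]] = []

    def add(self, doc: str) -> None:
        # "Encode its meaning": store the document alongside its vector.
        self.entries.append((embed(doc), doc))

    def query(self, question: str, k: int = 1) -> list[str]:
        # "Talk to it": rank stored documents by similarity to the question.
        q = embed(question)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[0]), reverse=True)
        return [doc for _, doc in ranked[:k]]


index = ToyVectorIndex()
for doc in [
    "timing closure checklist for the memory controller block",
    "holiday schedule for the santa clara campus",
    "verilog lint rules used by the gpu design team",
]:
    index.add(doc)

print(index.query("what lint rules apply to verilog"))
# → ['verilog lint rules used by the gpu design team']
```

A production system would swap `embed` for a neural embedding model, so that questions match documents by meaning rather than by shared words, and would use an approximate nearest-neighbor index instead of sorting every entry.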

Huang then demonstrated a digital human running on NIMs. Enterprises will likely consume NIMs first through SAP's Joule copilot or ServiceNow's army of virtual assistants. Snowflake will also build NIM-enabled copilots, as will Dell, which is building AI factories based on Nvidia technology.

The vision here is that NIMs will be hooked up to real-world data sources and will continually improve digital twins of factories, warehouses, cities and anything else physical. The physical world will be software-defined.

There are a host of GTC sessions outlining NIM, as well as deployments already on the books, that are worth a watch.

The money game

Nvidia GTC 2024 was an inflection point for the company because interest in Huang's talk extended well beyond the core developer base. Wall Street analysts will quickly pivot to gauging the potential of Nvidia's software business.

On Nvidia's recent earnings conference call, Huang talked about the software business, which is now on a $1 billion run rate. Relative to GPU growth, Nvidia's software business is an afterthought. I'll wager that in five years it will garner a lot more attention, much as Apple's financial results became as much about services and subscriptions as about iPhone sales.

Huang said:

"NVIDIA AI Enterprise is a run time like an operating system, it's an operating system for artificial intelligence.

And we charge $4,500 per GPU per year. My guess is that every enterprise in the world, every software enterprise company that is deploying software in all the clouds and private clouds and on-prem, will run on NVIDIA AI Enterprise, especially for our GPUs. This is likely to be a very significant business over time. We're off to a great start. It's already at $1 billion run rate and we're really just getting started."
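For a rough sense of scale, Huang's two figures can be combined. This back-of-envelope sketch assumes the entire $1 billion run rate comes from NVIDIA AI Enterprise licenses sold at the quoted $4,500 list price. That is a simplification: discounts, bundling and any other software or services revenue would change the result.

```python
# Back-of-envelope: how many GPU licenses would a $1B run rate imply?
# Assumption: all of the run rate is AI Enterprise revenue at list price.
LICENSE_USD_PER_GPU_YEAR = 4_500        # price quoted by Huang
RUN_RATE_USD_PER_YEAR = 1_000_000_000   # "$1 billion run rate"

implied_gpu_licenses = RUN_RATE_USD_PER_YEAR / LICENSE_USD_PER_GPU_YEAR
print(f"{implied_gpu_licenses:,.0f}")   # → 222,222
```

Under that assumption, the current run rate corresponds to roughly 222,000 GPUs licensed for a year.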

Now that Nvidia has fleshed out its software strategy, it's safe to say the business has moved well beyond the starting line.

More:

- Will generative AI make enterprise data centers cool again?

- Nvidia's GPU boom continues, projects Q1 revenue of $24 billion, up more than $20 billion a year ago

- Nvidia's uncanny knack for staying ahead

- Nvidia launches H200 GPU, shipments Q2 2024

- Nvidia sees Q4 sales of $20 billion, up from $6.05 billion a year ago

- Why enterprises will want Nvidia competition soon

- GenAI trickledown economics: Where the enterprise stands today