Nvidia CEO Jensen Huang said "accelerated computing has reached the tipping point" across multiple industries as the company launched its Blackwell GPU platform, the NVLink Switch chip, new applications and a developer stack that blends virtual simulation, generative AI, robotics and other computing fronts.

Huang laid out Nvidia's lofty goal during his Nvidia GTC 2024 keynote. "The industry of using simulation tools to create products, and it's not about driving down the cost of computing. It's about driving up the scale of computing. We would like to be able to simulate the entire product that we do completely in full fidelity, completely digitally. Essentially what we call digital twins. We would like to design it, build it, simulate it and operate it completely digitally," said Huang.

A big theme of Huang's talk was Nvidia as a software provider and ecosystem that sits in the middle of generative AI and multiple key categories. And yes, to do what Nvidia wants, it's going to take much bigger GPUs. "We need much, much bigger GPUs. We recognized this early on. And we realized that the answer is to put a whole bunch of GPUs together. And of course, innovate a whole bunch of things along the way," said Huang. "We're trying to help the world build things. And in order to help the world build things, we gotta go first. We build the chips, the systems, networking, all of the software necessary to do this."

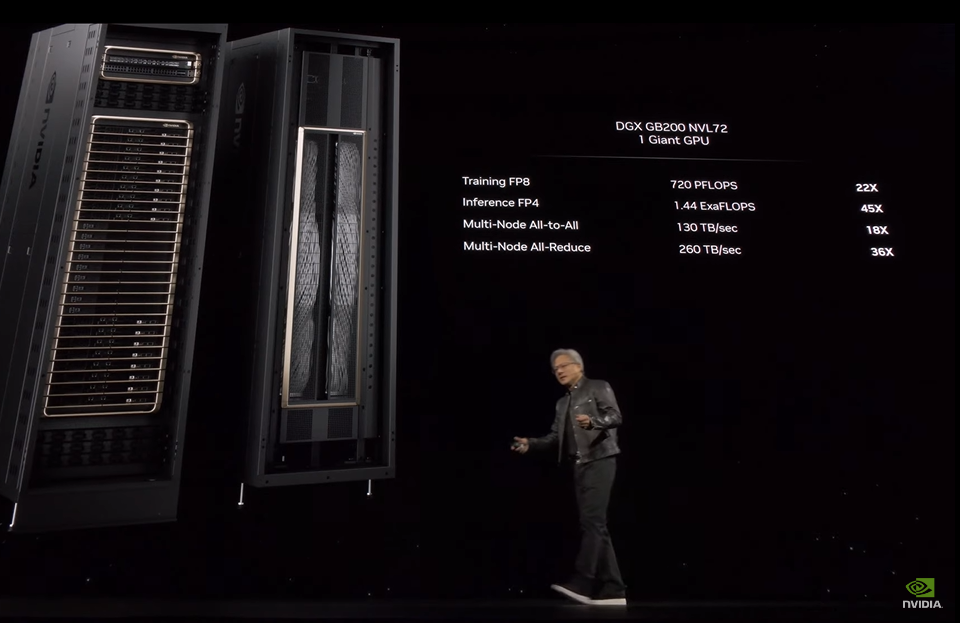

Huang laid out a cadence of more powerful GPUs and systems. The upshot is that Nvidia wants to network GPUs together so they can operate as one. "In the future, data centers are going to be thought of as an AI factory. An AI factory's goal in life is to generate revenues and intelligence," he said.

Nvidia's GTC conference used to be for developers (and still is, by the way), but given the GPU giant's recent run, Wall Street and the tech industry were closely watching Huang's talk for signs of continuing demand, the roadmap ahead and indicators of generative AI growth. Constellation Research CEO Ray Wang noted that GTC is now the Davos of AI.

Also see: Will AI Force Centralized Scarcity Or Create Freedom With Decentralized Abundance? | AI is Changing Cloud Workloads, Here's How CIOs Can Prepare

There's a good reason why analysts were closely evaluating everything Huang said. To date, most of the spoils from the generative AI boom have gone to Nvidia, with Supermicro being an exception. Huang knew about the newfound interest in GTC. He took the stage and joked to the audience that he hoped they realized they weren't at a concert. Huang warned that folks would hear a lot of science and wonky topics.

- Will generative AI make enterprise data centers cool again?

- Nvidia's GPU boom continues, projects Q1 revenue of $24 billion, up more than $20 billion a year ago

- Nvidia's uncanny knack for staying ahead

- Nvidia launches H200 GPU, shipments Q2 2024

- Nvidia sees Q4 sales of $20 billion, up from $6.05 billion a year ago

- Why enterprises will want Nvidia competition soon

- GenAI trickledown economics: Where the enterprise stands today

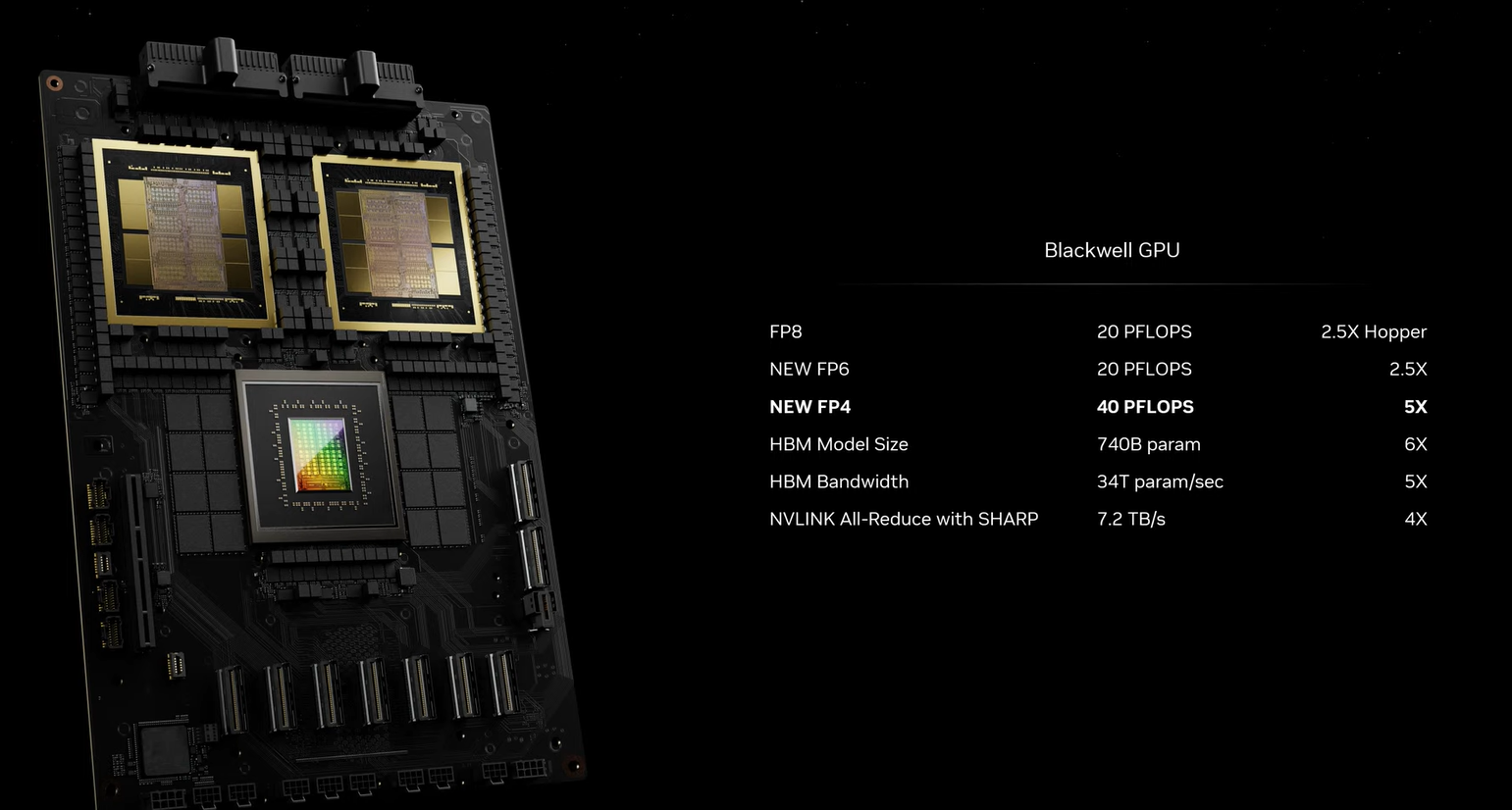

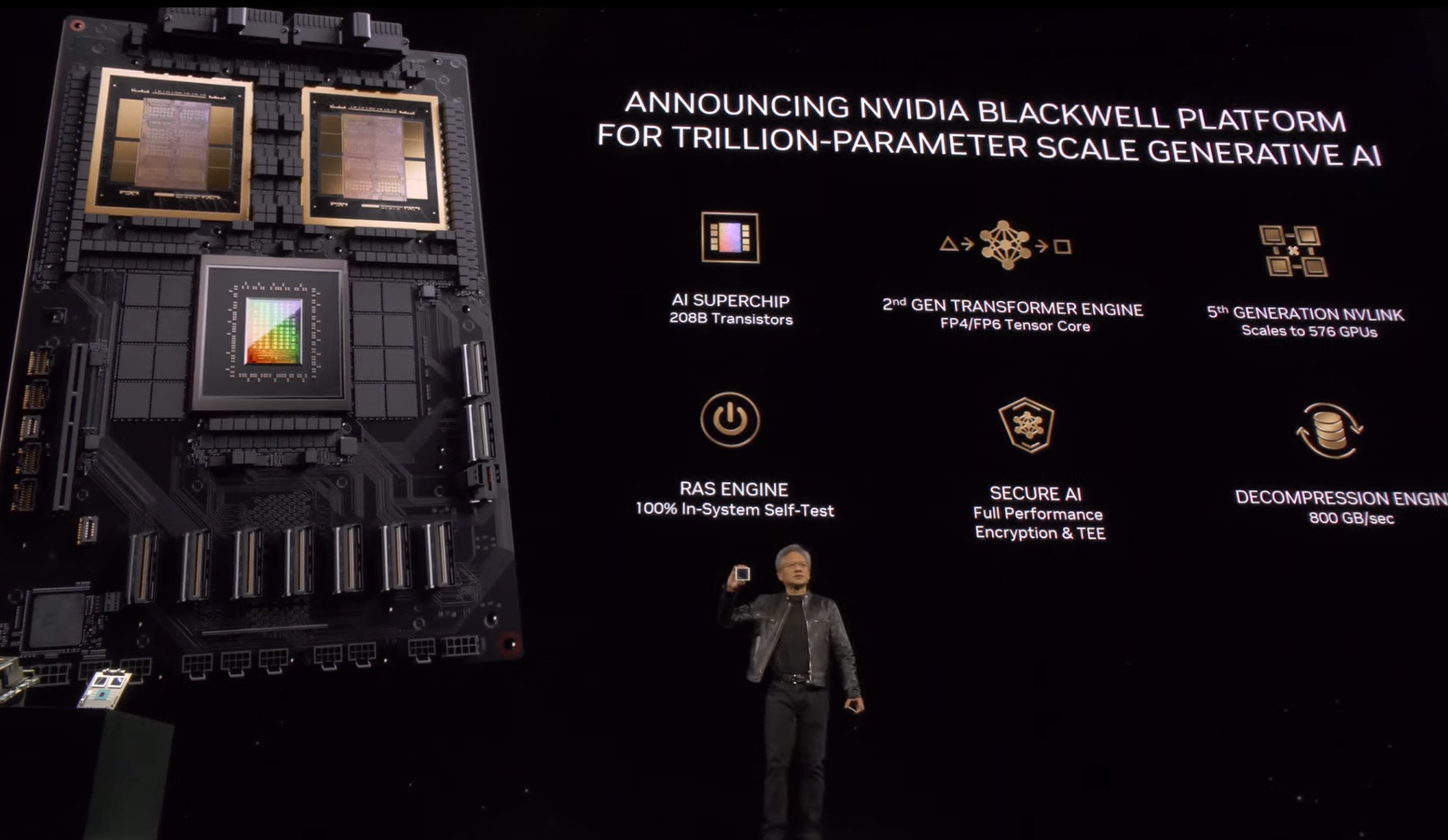

Nvidia's Blackwell platform is built around an AI superchip with 208 billion transistors, a second-generation Transformer Engine, fifth-generation NVLink that scales to 576 GPUs, and other features.

The game here is to build up to full data centers—powered by Nvidia of course.

Key items about the Blackwell platform and its supporting cast:

- A Blackwell compute node has two Grace CPUs and four Blackwell GPUs.

- 80 petaFLOPs of AI performance.

- 32TB/s of memory bandwidth.

- Liquid cooled MGX design.

- Blackwell is ramping to launch with cloud service providers including AWS, which will leverage CUDA for SageMaker and Bedrock. Google Cloud will use Blackwell, as will Oracle and Microsoft.

"Blackwell will be the most successful product launch in our history," said Huang.

Other launches include the GB200 Grace Blackwell Superchip, which pairs one Grace CPU with two Blackwell GPUs and offers 864GB of fast memory and 40 petaFLOPs of AI performance. A single Blackwell GPU delivers 20 petaFLOPs of AI performance. Relative to Nvidia's Hopper, Blackwell has 5x the AI performance and 4x the on-die memory.
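The headline figures compose by simple multiplication. The sketch below, assuming AI performance scales linearly with the number of Blackwell GPUs (real throughput depends on numeric precision, sparsity and workload, which these quoted figures don't specify), rebuilds the superchip and compute node totals from the 20 petaFLOPs per-GPU number. The helper name `aggregate_pf` is hypothetical.

```python
# Per-GPU AI performance quoted for Blackwell, in petaFLOPs.
PF_PER_BLACKWELL_GPU = 20


def aggregate_pf(num_gpus: int, pf_per_gpu: int = PF_PER_BLACKWELL_GPU) -> int:
    """Hypothetical helper: assumes AI performance scales linearly with GPU count."""
    return num_gpus * pf_per_gpu


gb200_superchip = aggregate_pf(2)  # 1 Grace CPU + 2 Blackwell GPUs
compute_node = aggregate_pf(4)     # 2 Grace CPUs + 4 Blackwell GPUs (two GB200s)

print(gb200_superchip, compute_node)  # 40 80
```

Under that assumption, the GB200's 40 petaFLOPs is exactly two GPUs' worth, and a compute node built from two superchips lands at 80 petaFLOPs.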

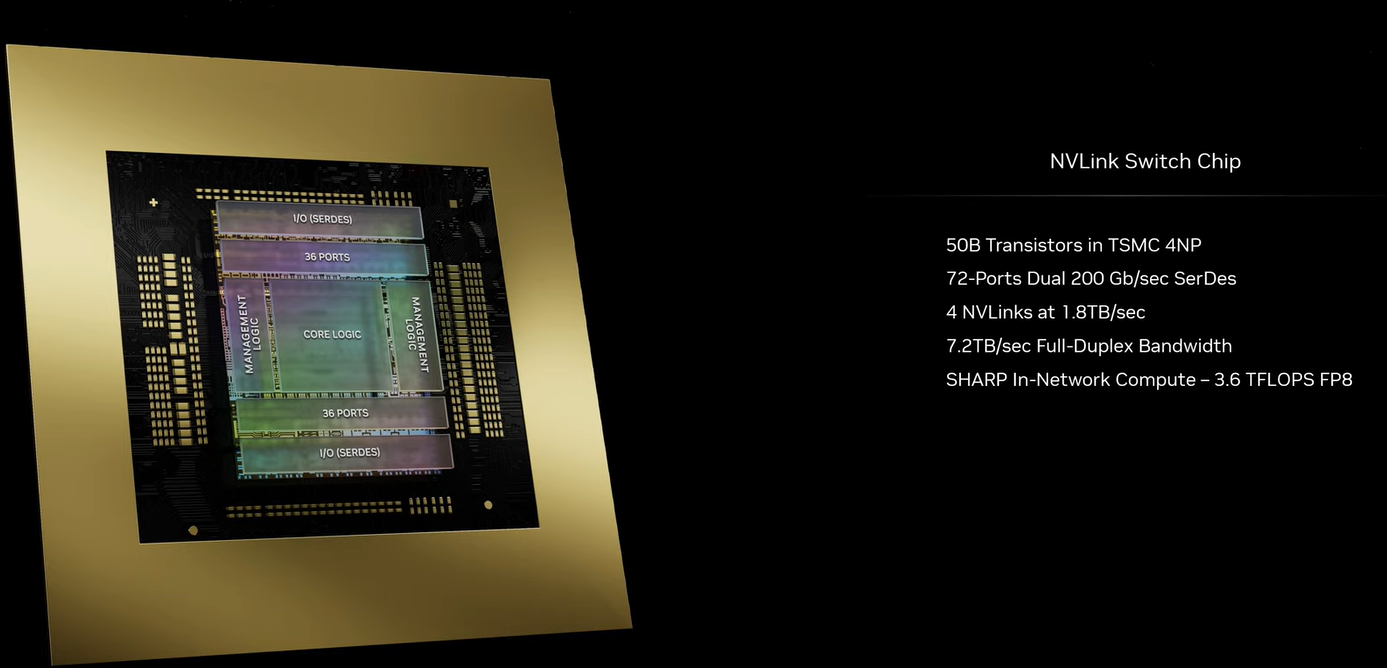

To complement those processors, Nvidia has a stack of networking enhancements, accelerations and models to keep GPUs synchronized and working together.

Huang said:

"We have to synchronize and update each other. And every so often, we have to reduce the partial products and then rebroadcast the sum of the partial products back to everybody else. So, there's a lot of what is called all-reduce and all-to-all and all-gathers. It's all part of this area of synchronization and collectives so that we can have GPUs working with each other. Having extraordinarily fast links and being able to do mathematics right in the network allows us to essentially amplify even further."

That amplification will come via the NVLink Switch chip, which has 50 billion transistors and the bandwidth to make GPUs connect and operate as one.
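The all-reduce pattern Huang describes (sum every GPU's partial results, then get the total back to everyone) can be illustrated with a small simulation. This is a textbook ring all-reduce in plain Python, built from a reduce-scatter phase followed by an all-gather phase; it is not Nvidia's implementation, and libraries such as NCCL and the in-network math Huang mentions work differently at the hardware level. The function name `ring_all_reduce` and the use of Python lists as stand-ins for GPU buffers are hypothetical.

```python
from typing import List


def ring_all_reduce(buffers: List[List[float]]) -> List[List[float]]:
    """Sum equal-length vectors across n simulated workers arranged in a ring.

    Reduce-scatter leaves each worker holding one fully summed chunk; all-gather
    then passes those finished chunks around the ring until every worker holds
    the complete sum.
    """
    n = len(buffers)
    length = len(buffers[0])
    if any(len(b) != length for b in buffers):
        raise ValueError("all workers must hold vectors of the same length")
    if n == 1:
        return [list(buffers[0])]

    # Split every worker's vector into n contiguous chunks (some may be empty).
    bounds = [(c * length // n, (c + 1) * length // n) for c in range(n)]
    chunks = [[list(b[lo:hi]) for lo, hi in bounds] for b in buffers]

    # Phase 1, reduce-scatter: at each step, worker i sends chunk (i - step)
    # to its right neighbour, which adds it to its own copy. Sends happen
    # "simultaneously", so snapshot all outgoing data before applying any.
    for step in range(n - 1):
        outgoing = [(i, (i - step) % n) for i in range(n)]
        snapshot = [list(chunks[i][c]) for i, c in outgoing]
        for (sender, c), data in zip(outgoing, snapshot):
            receiver = (sender + 1) % n
            chunks[receiver][c] = [a + b for a, b in zip(chunks[receiver][c], data)]
    # Now worker i holds the fully reduced chunk (i + 1) % n.

    # Phase 2, all-gather: each worker forwards the finished chunk it most
    # recently obtained, and the receiver overwrites its stale copy.
    for step in range(n - 1):
        outgoing = [(i, (i + 1 - step) % n) for i in range(n)]
        snapshot = [list(chunks[i][c]) for i, c in outgoing]
        for (sender, c), data in zip(outgoing, snapshot):
            chunks[(sender + 1) % n][c] = data

    # Reassemble each worker's chunks into one flat vector.
    return [[x for chunk in worker for x in chunk] for worker in chunks]


partials = [
    [1.0, 2.0, 3.0, 4.0],
    [10.0, 20.0, 30.0, 40.0],
    [100.0, 200.0, 300.0, 400.0],
]
result = ring_all_reduce(partials)
print(result[0])  # [111.0, 222.0, 333.0, 444.0]
```

The ring layout is a common design choice because each worker only ever talks to its neighbour and moves roughly 2(n-1)/n of its data in total, so per-link traffic stays nearly flat as more GPUs join.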

Huang made a few jokes about pricing but did note that the company is focused on balancing quality of service against the cost of tokens. Nvidia also compared inference and training workloads and data center throughput. Huang argued that Nvidia's software stack, led by CUDA, can optimize both model inference and training. Blackwell has optimizations built in, and "the inference capability of Blackwell is off the charts."

Other items:

- Nvidia said it will also package software by workload and purpose. Nvidia Inference Microservices (NIM) aim to let developers assemble chatbots in an optimized fashion without starting from scratch. Huang said these microservices can hand off to enterprise software platforms such as SAP and ServiceNow and optimize AI applications.

- Nvidia is working with semiconductor design partners to create new processors and digital twins connected to Omniverse. Ansys is reengineering its stack and ecosystem on Nvidia's CUDA. Nvidia will "CUDA accelerate" Synopsys. Cadence is also partnering with Nvidia.

- Huang also called for bigger models: "We need even larger models. We're going to train it with multimodality data, not just text on the internet, but we're going to train it on texts and images and graphs and charts."

- Huang said he even simulated his keynote: "I hope it's going to turn out as well as I had it in my head."


Nvidia press releases on switches, Blackwell SuperPod, Blackwell, AWS partnership, Earth Climate Digital Twin, partnership with Microsoft and more.